
Library ants implements a fixed-capacity goroutine pool that manages and recycles a massive number of goroutines, allowing developers to limit the number of goroutines in their concurrent programs.

- Managing and recycling a massive number of goroutines automatically

- Purging overdue goroutines periodically

- Abundant APIs: submitting tasks, getting the number of running goroutines, tuning capacity of pool dynamically, releasing pool, rebooting pool

- Handling panics gracefully to prevent programs from crashing

- Efficient in memory usage, even achieving higher performance than unlimited goroutines in Golang

- Nonblocking mechanism
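As a rough illustration of three of the features listed above (a fixed capacity, a nonblocking submit, and panic handling), the following stdlib-only sketch shows one way such a pool could be shaped. It is not the ants implementation: `miniPool`, `errPoolOverload`, `submit`, and `wait` are hypothetical names made up for this example.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// errPoolOverload is a hypothetical error for this sketch; it only mirrors
// the idea of rejecting a task when no worker slot is free.
var errPoolOverload = errors.New("pool overloaded")

// miniPool is an illustrative fixed-capacity pool, not the ants implementation.
type miniPool struct {
	slots        chan struct{}     // one token per running goroutine
	wg           sync.WaitGroup    // tracks in-flight tasks
	panicHandler func(interface{}) // receives the recovered value, may be nil
}

func newMiniPool(capacity int, handler func(interface{})) *miniPool {
	return &miniPool{slots: make(chan struct{}, capacity), panicHandler: handler}
}

// submit starts task in a goroutine if a slot is free and returns
// errPoolOverload immediately otherwise (nonblocking behavior).
func (p *miniPool) submit(task func()) error {
	select {
	case p.slots <- struct{}{}:
	default:
		return errPoolOverload
	}
	p.wg.Add(1)
	go func() {
		defer p.wg.Done()            // runs last
		defer func() { <-p.slots }() // frees the slot
		defer func() {               // runs first: recover from a panicking task
			if r := recover(); r != nil && p.panicHandler != nil {
				p.panicHandler(r)
			}
		}()
		task()
	}()
	return nil
}

// wait blocks until every accepted task has finished.
func (p *miniPool) wait() { p.wg.Wait() }

func main() {
	var mu sync.Mutex
	var recovered []interface{}
	pool := newMiniPool(2, func(v interface{}) {
		mu.Lock()
		recovered = append(recovered, v)
		mu.Unlock()
	})

	block := make(chan struct{})
	_ = pool.submit(func() { <-block })
	_ = pool.submit(func() { <-block; panic("boom") })
	err := pool.submit(func() {}) // both slots are busy, so this is rejected
	fmt.Println("third submit:", err)

	close(block)
	pool.wait()
	fmt.Println("recovered:", recovered)
}
```

Taking the slot inside `submit`, before the goroutine starts, is what keeps the number of live goroutines bounded by the capacity in this sketch.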

- 1.8.x

- 1.9.x

- 1.10.x

- 1.11.x

- 1.12.x

- 1.13.x

Install ants v1:

go get -u github.com/panjf2000/ants

Install ants v2 (module path with the /v2 suffix):

go get -u github.com/panjf2000/ants/v2

Imagine that your program starts a massive number of goroutines, resulting in huge memory consumption. To mitigate that, all you need to do is import the ants package and submit all your tasks to the default fixed-capacity pool, which is activated when package ants is imported:

package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"

	"github.com/panjf2000/ants/v2"
)

var sum int32

func myFunc(i interface{}) {
	n := i.(int32)
	atomic.AddInt32(&sum, n)
	fmt.Printf("run with %d\n", n)
}

func demoFunc() {
	time.Sleep(10 * time.Millisecond)
	fmt.Println("Hello World!")
}

func main() {
	defer ants.Release()

	runTimes := 1000

	// Use the common pool.
	var wg sync.WaitGroup
	syncCalculateSum := func() {
		demoFunc()
		wg.Done()
	}
	for i := 0; i < runTimes; i++ {
		wg.Add(1)
		_ = ants.Submit(syncCalculateSum)
	}
	wg.Wait()
	fmt.Printf("running goroutines: %d\n", ants.Running())
	fmt.Printf("finish all tasks.\n")

	// Use the pool with a function:
	// set the capacity of the goroutine pool to 10 (the expiry duration
	// is not set here, so the default applies).
	p, _ := ants.NewPoolWithFunc(10, func(i interface{}) {
		myFunc(i)
		wg.Done()
	})
	defer p.Release()
	// Submit tasks one by one.
	for i := 0; i < runTimes; i++ {
		wg.Add(1)
		_ = p.Invoke(int32(i))
	}
	wg.Wait()
	fmt.Printf("running goroutines: %d\n", p.Running())
	fmt.Printf("finish all tasks, result is %d\n", sum)
}

The pool can also throttle requests inside an HTTP server:

package main

import (
	"io/ioutil"
	"net/http"

	"github.com/panjf2000/ants/v2"
)

type Request struct {
	Param  []byte
	Result chan []byte
}

func main() {
	pool, _ := ants.NewPoolWithFunc(100000, func(payload interface{}) {
		request, ok := payload.(*Request)
		if !ok {
			return
		}
		// Reverse the request bytes in place.
		reverseParam := func(s []byte) []byte {
			for i, j := 0, len(s)-1; i < j; i, j = i+1, j-1 {
				s[i], s[j] = s[j], s[i]
			}
			return s
		}(request.Param)

		request.Result <- reverseParam
	})
	defer pool.Release()

	http.HandleFunc("/reverse", func(w http.ResponseWriter, r *http.Request) {
		param, err := ioutil.ReadAll(r.Body)
		if err != nil {
			http.Error(w, "request error", http.StatusInternalServerError)
			return // do not continue with an unreadable body
		}
		defer r.Body.Close()

		request := &Request{Param: param, Result: make(chan []byte)}

		// Throttle the request traffic with the ants pool. This process is asynchronous and
		// you can receive the result from the channel defined outside.
		if err := pool.Invoke(request); err != nil {
			http.Error(w, "throttle limit error", http.StatusInternalServerError)
			return // no worker took the task, so nothing will arrive on request.Result
		}

		w.Write(<-request.Result)
	})

	http.ListenAndServe(":8080", nil)
}

// Option represents the optional function.
type Option func(opts *Options)

// Options contains all options which will be applied when instantiating an ants pool.
type Options struct {
	// ExpiryDuration sets the expired time (second) of every worker.
	ExpiryDuration time.Duration

	// PreAlloc indicates whether to make memory pre-allocation when initializing Pool.
	PreAlloc bool

	// Max number of goroutines blocking on pool.Submit.
	// 0 (default value) means no such limit.
	MaxBlockingTasks int

	// When Nonblocking is true, Pool.Submit will never be blocked.
	// ErrPoolOverload will be returned when Pool.Submit cannot be done at once.
	// When Nonblocking is true, MaxBlockingTasks is inoperative.
	Nonblocking bool

	// PanicHandler is used to handle panics from each worker goroutine.
	// If nil, panics will be thrown out again from worker goroutines.
	PanicHandler func(interface{})
}

// WithOptions accepts the whole options config.
func WithOptions(options Options) Option {
	return func(opts *Options) {
		*opts = options
	}
}

// WithExpiryDuration sets up the interval time of cleaning up goroutines.
func WithExpiryDuration(expiryDuration time.Duration) Option {
	return func(opts *Options) {
		opts.ExpiryDuration = expiryDuration
	}
}

// WithPreAlloc indicates whether it should malloc for workers.
func WithPreAlloc(preAlloc bool) Option {
	return func(opts *Options) {
		opts.PreAlloc = preAlloc
	}
}

// WithMaxBlockingTasks sets up the maximum number of goroutines that are blocked when it reaches the capacity of pool.
func WithMaxBlockingTasks(maxBlockingTasks int) Option {
	return func(opts *Options) {
		opts.MaxBlockingTasks = maxBlockingTasks
	}
}

// WithNonblocking indicates that the pool will return an error instead of blocking when there are no available workers.
func WithNonblocking(nonblocking bool) Option {
	return func(opts *Options) {
		opts.Nonblocking = nonblocking
	}
}

// WithPanicHandler sets up panic handler.
func WithPanicHandler(panicHandler func(interface{})) Option {
	return func(opts *Options) {
		opts.PanicHandler = panicHandler
	}
}

ants.Options contains all optional configurations of an ants pool. You can customize the goroutine pool by passing option functions to NewPool/NewPoolWithFunc to set each configuration.

ants also supports customizing the capacity of the pool. You can call NewPool to instantiate a pool with a given capacity, as follows:

// Set the size of the goroutine pool to 10000
p, _ := ants.NewPool(10000)

Tasks can be submitted by calling ants.Submit(func()):

ants.Submit(func(){})

You can tune the capacity of an ants pool at runtime with Tune(int):

pool.Tune(1000) // Tune its capacity to 1000
pool.Tune(100000) // Tune its capacity to 100000

Don't worry about synchronization problems here: this method is thread-safe (or, more precisely, goroutine-safe).
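For intuition about what a goroutine-safe capacity change involves, the sketch below guards a running count and a capacity with one mutex and wakes blocked callers when the capacity changes. It is a stdlib-only illustration under that assumption, not how ants implements Tune; `tunableLimiter` and its methods are hypothetical names.

```go
package main

import (
	"fmt"
	"sync"
)

// tunableLimiter is an illustrative stand-in, not ants code: it caps the
// number of concurrent holders and lets the cap change at runtime.
type tunableLimiter struct {
	mu       sync.Mutex
	cond     *sync.Cond
	capacity int
	running  int
}

func newTunableLimiter(capacity int) *tunableLimiter {
	l := &tunableLimiter{capacity: capacity}
	l.cond = sync.NewCond(&l.mu)
	return l
}

// acquire blocks until fewer than capacity holders are running.
func (l *tunableLimiter) acquire() {
	l.mu.Lock()
	for l.running >= l.capacity {
		l.cond.Wait()
	}
	l.running++
	l.mu.Unlock()
}

// release frees one slot and wakes one waiter.
func (l *tunableLimiter) release() {
	l.mu.Lock()
	l.running--
	l.mu.Unlock()
	l.cond.Signal()
}

// tune changes the capacity under the lock, then wakes all waiters so
// they re-check the new limit.
func (l *tunableLimiter) tune(capacity int) {
	l.mu.Lock()
	l.capacity = capacity
	l.mu.Unlock()
	l.cond.Broadcast()
}

// stats returns a consistent snapshot of the counters.
func (l *tunableLimiter) stats() (running, capacity int) {
	l.mu.Lock()
	defer l.mu.Unlock()
	return l.running, l.capacity
}

func main() {
	l := newTunableLimiter(1)
	l.acquire() // the only slot is now taken

	done := make(chan struct{})
	go func() {
		l.acquire() // can only proceed once the capacity has grown
		close(done)
	}()

	l.tune(2)
	<-done
	running, capacity := l.stats()
	fmt.Println("running:", running, "capacity:", capacity)
}
```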

ants allows you to pre-allocate the memory of the goroutine queue in a pool, which may improve performance in certain scenarios, for example one that requires a pool with an ultra-large capacity while each task in a goroutine runs for a long time. In that case, pre-allocation avoids a lot of memory allocation in the goroutine queue.
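The general effect of pre-allocation can be seen with an ordinary Go slice. The sketch below assumes, purely for illustration, that a worker queue grows like a slice; it counts how often the backing array is reallocated while filling the queue. `countGrowths` is a hypothetical helper and says nothing about the actual data structure inside ants.

```go
package main

import "fmt"

// countGrowths appends n items to queue and reports how many times the
// backing array was reallocated (observed as a change in capacity).
func countGrowths(queue []int, n int) int {
	growths := 0
	for i := 0; i < n; i++ {
		before := cap(queue)
		queue = append(queue, i)
		if cap(queue) != before {
			growths++
		}
	}
	return growths
}

func main() {
	const capacity = 100000

	// Starting from an empty slice, append has to grow the array repeatedly.
	fmt.Println("growths without pre-allocation:", countGrowths(nil, capacity))

	// With the full capacity reserved up front, append never reallocates.
	fmt.Println("growths with pre-allocation:", countGrowths(make([]int, 0, capacity), capacity))
}
```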

// ants will pre-allocate the whole capacity of the pool when you invoke this function
p, _ := ants.NewPool(100000, ants.WithPreAlloc(true))

A pool can be released with Release():

pool.Release()

// A pool that has been released can still be used once you invoke Reboot().
pool.Reboot()

Tasks submitted to an ants pool are not guaranteed to be executed in order, because they are scattered among a series of concurrent workers and therefore run concurrently.
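Because completion order is not deterministic, code that needs ordered results usually records where each result belongs instead of relying on when it arrives. The following stdlib-only sketch shows that idea with a bounded number of goroutines; `squareAll` is a hypothetical helper and does not use the ants API.

```go
package main

import (
	"fmt"
	"sync"
)

// squareAll runs one task per input on at most `workers` goroutines at a time.
// Tasks may finish in any order, so each task writes to the slot matching its
// input index rather than appending to a shared slice.
func squareAll(inputs []int, workers int) []int {
	results := make([]int, len(inputs))
	sem := make(chan struct{}, workers) // limits concurrent goroutines
	var wg sync.WaitGroup

	for i, v := range inputs {
		wg.Add(1)
		sem <- struct{}{} // wait for a free slot
		go func(i, v int) {
			defer wg.Done()
			defer func() { <-sem }()
			results[i] = v * v // each goroutine owns exactly one index
		}(i, v)
	}

	wg.Wait()
	return results
}

func main() {
	fmt.Println(squareAll([]int{1, 2, 3, 4, 5}, 2)) // prints [1 4 9 16 25]
}
```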

-

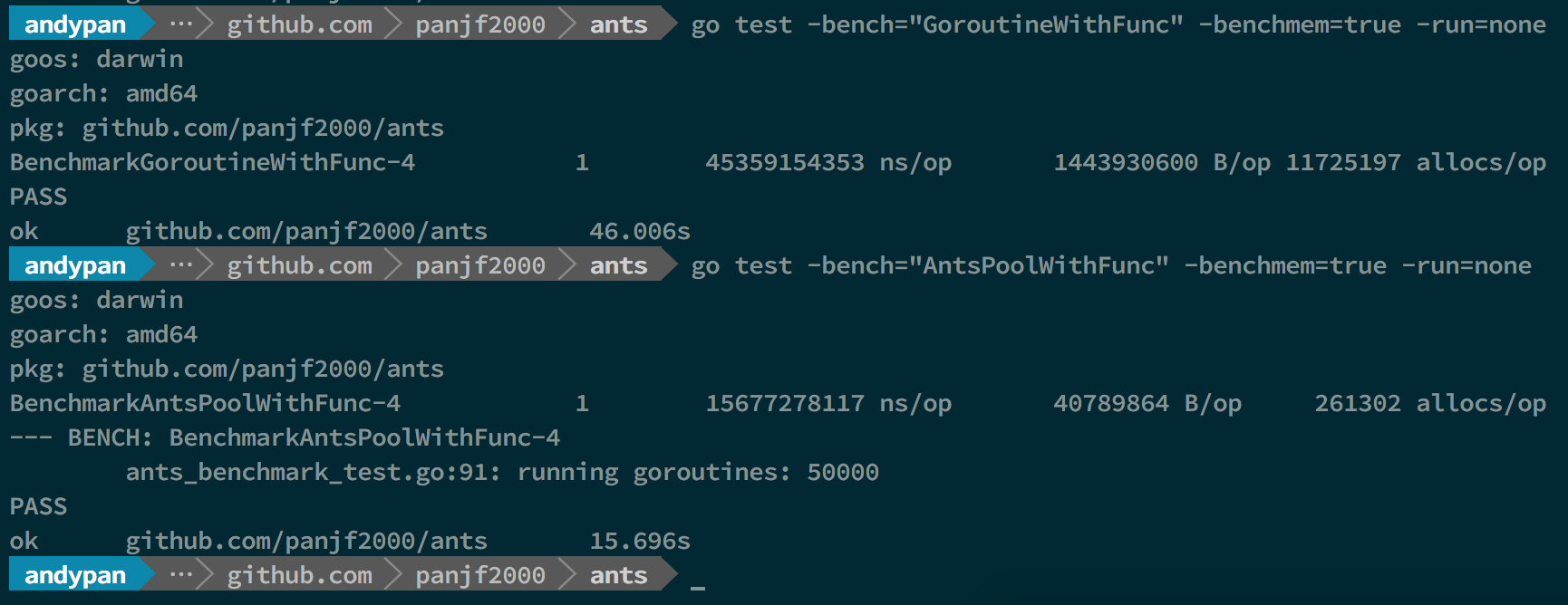

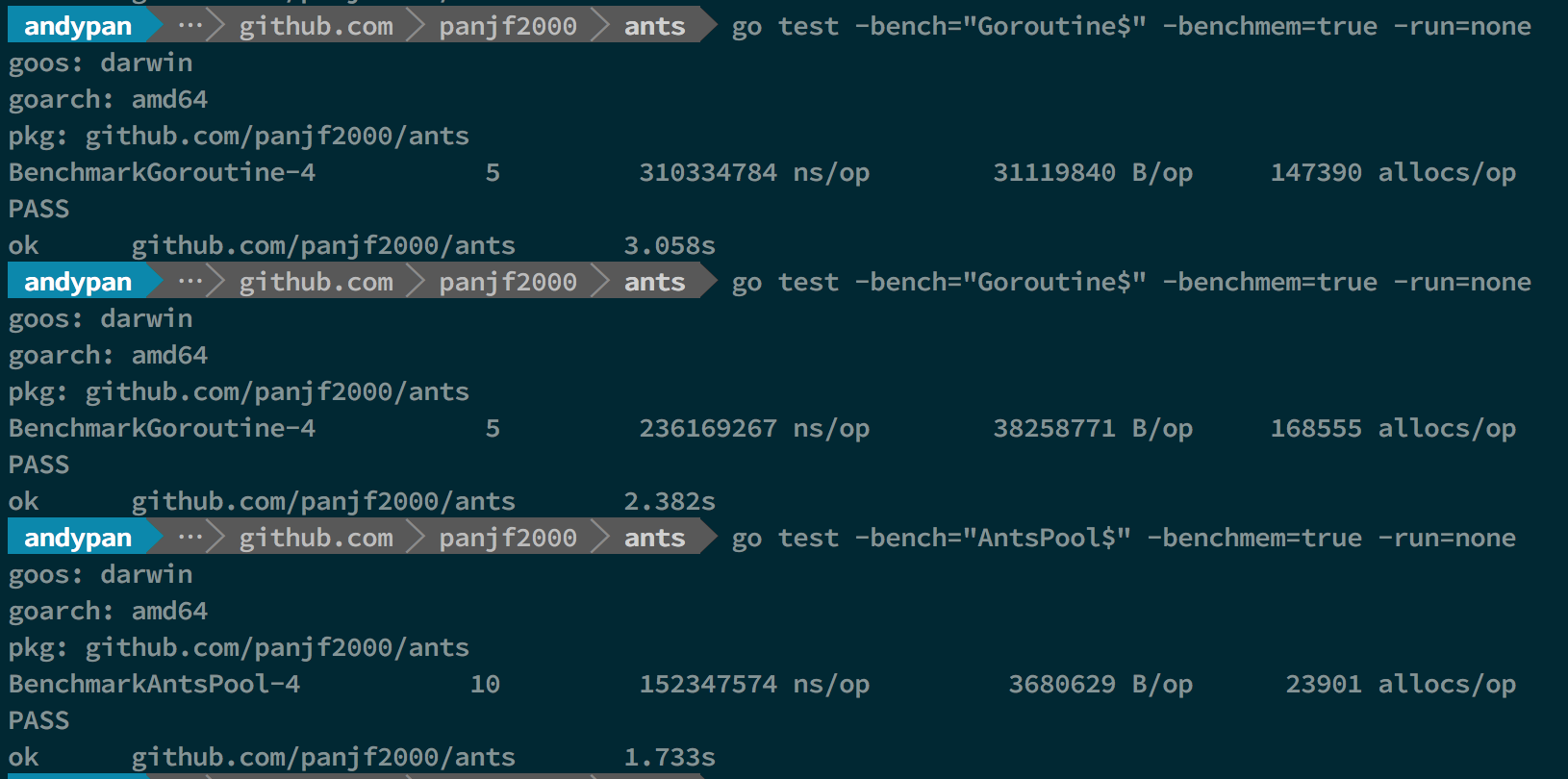

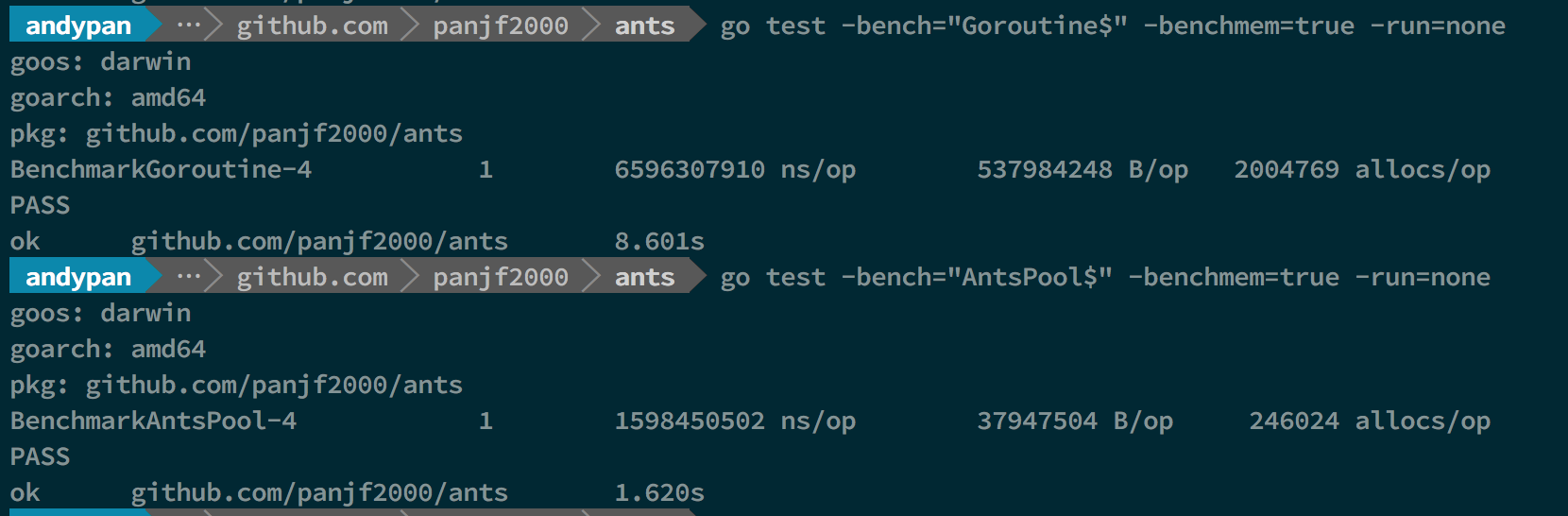

BenchmarkGoroutine-4 represents the benchmarks with unlimited goroutines in golang.

-

BenchmarkPoolGroutine-4 represents the benchmarks with a

antspool.

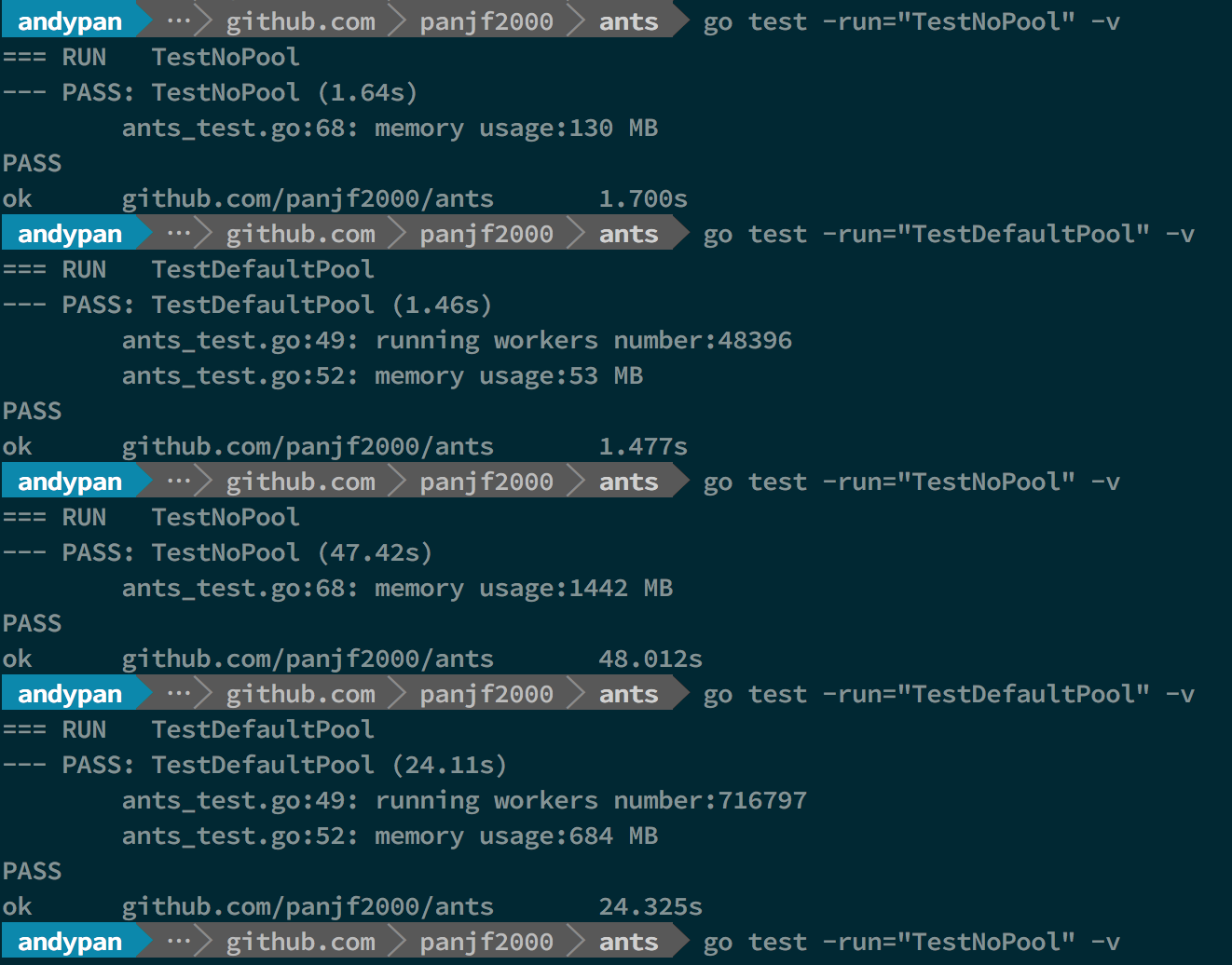

In the benchmark results above, the first and second benchmarks ran test cases with 1M tasks and the remaining benchmarks ran test cases with 10M tasks, both with unlimited goroutines and with an ants pool. The capacity of the ants goroutine pool was limited to 50K.

As you can see, ants performs 2 times faster than goroutines without a pool (10M tasks) and consumes only half the memory of goroutines without a pool (for both 1M and 10M tasks).

Throughput (it is suitable for scenarios where tasks are submitted asynchronously without waiting for the final results)

In conclusion, ants performs 2 to 6 times faster than goroutines without a pool, and memory consumption is reduced by a factor of 10 to 20.

Please read our Contributing Guidelines before opening a PR and thank you to all the developers who already made contributions to ants!

Source code in ants is available under the MIT License.

ants has been developed with GoLand under the free JetBrains Open Source license(s) granted by JetBrains s.r.o., for which I would like to express my thanks here.

Support us with a monthly donation and help us continue our activities.

Become a bronze sponsor with a monthly donation of $10 and get your logo on our README on GitHub.