We provide a PyTorch implementation of:

GANILLA: Generative Adversarial Networks for Image to Illustration Translation.

- (February, 2021) We released code for our recent work on sketch colorization, Adversarial Segmentation Loss for Sketch Colorization.

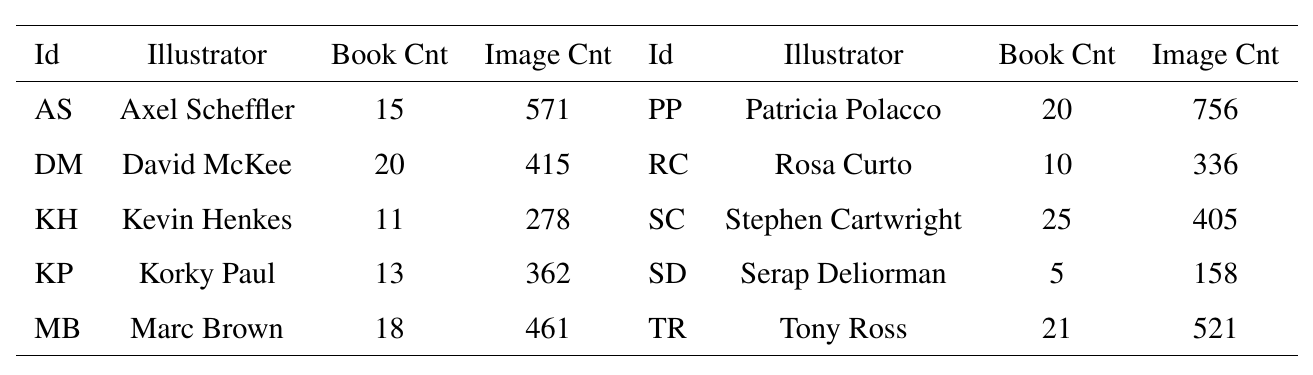

Dataset Stats:

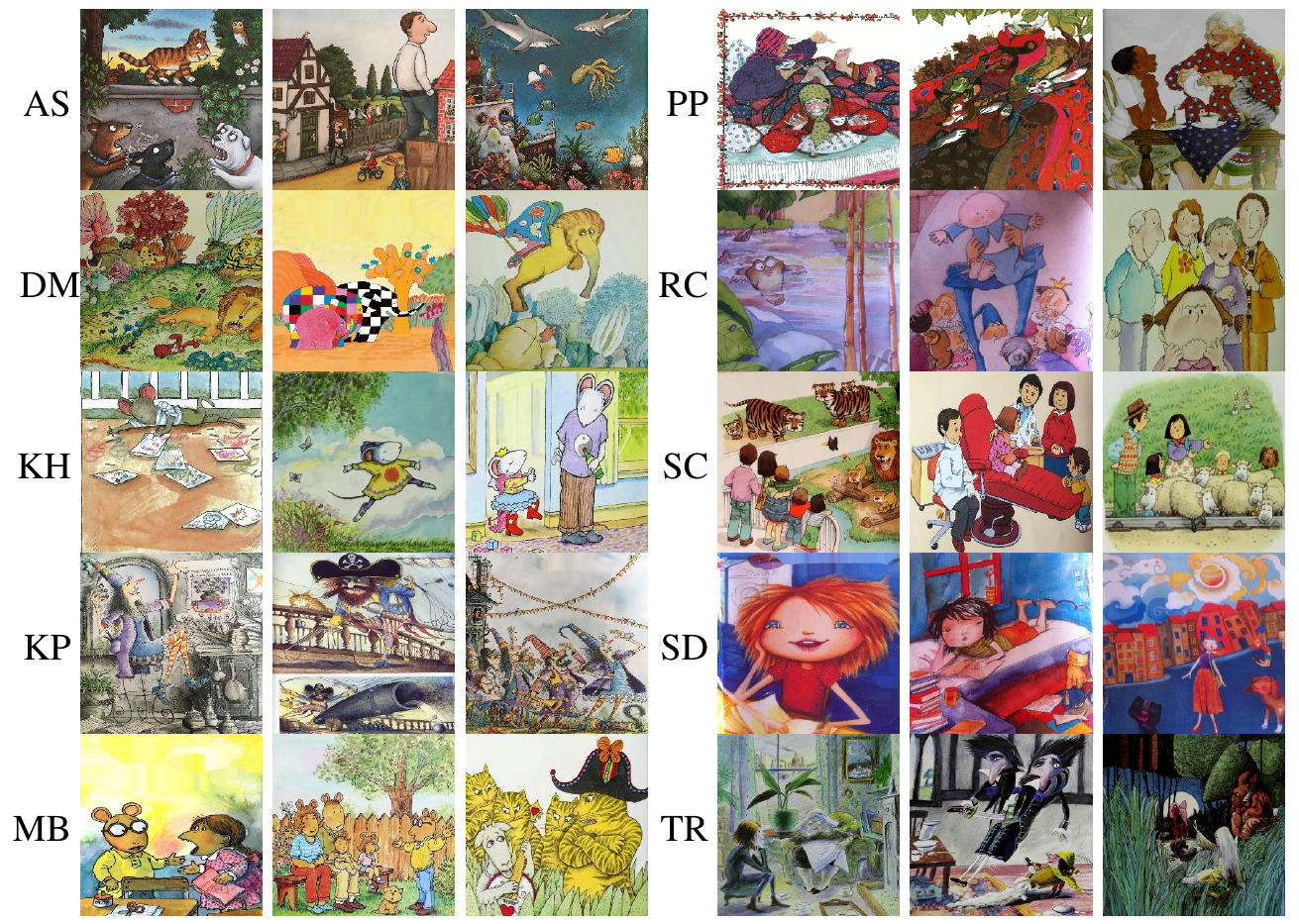

Sample Images:

GANILLA:

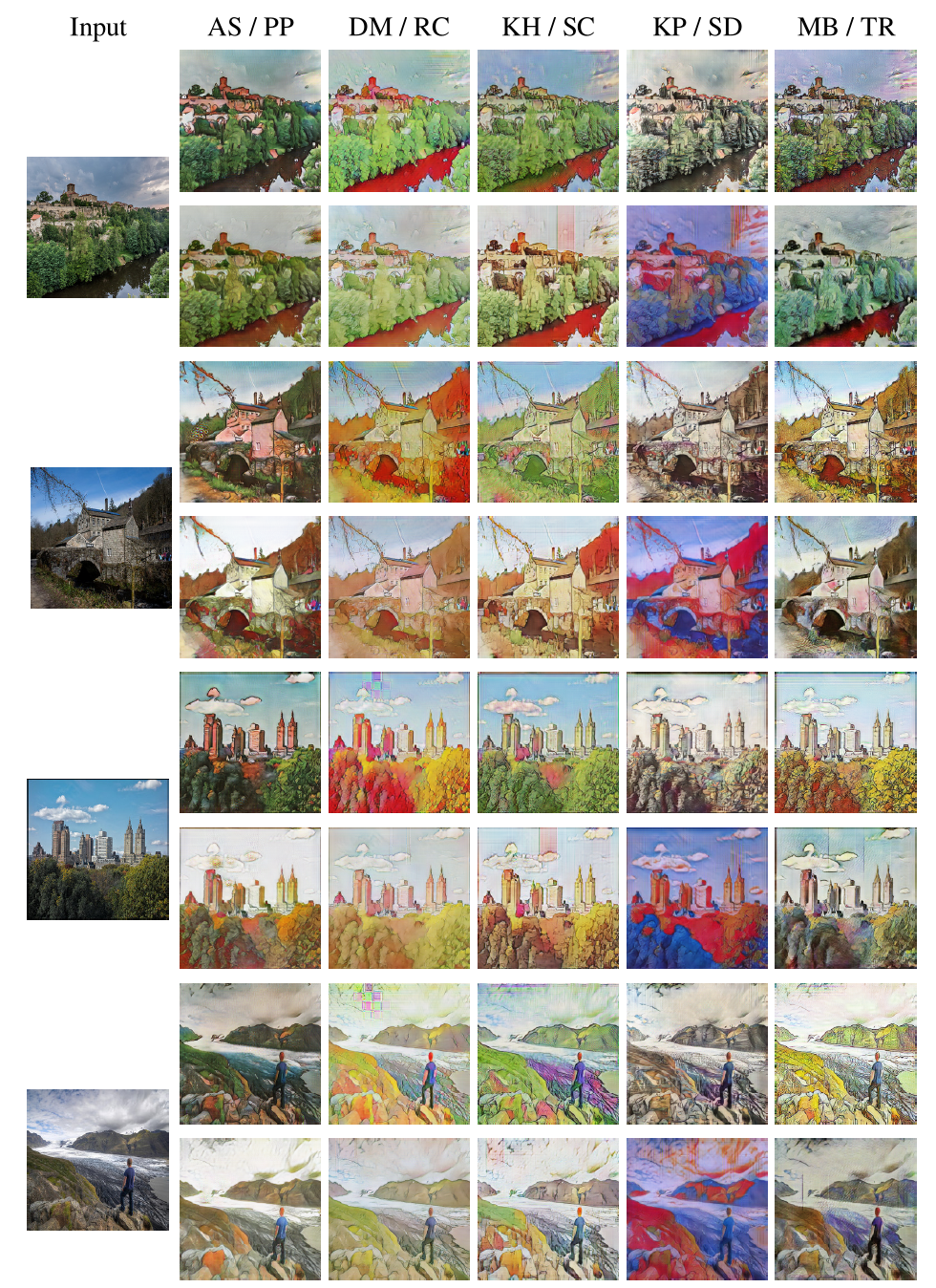

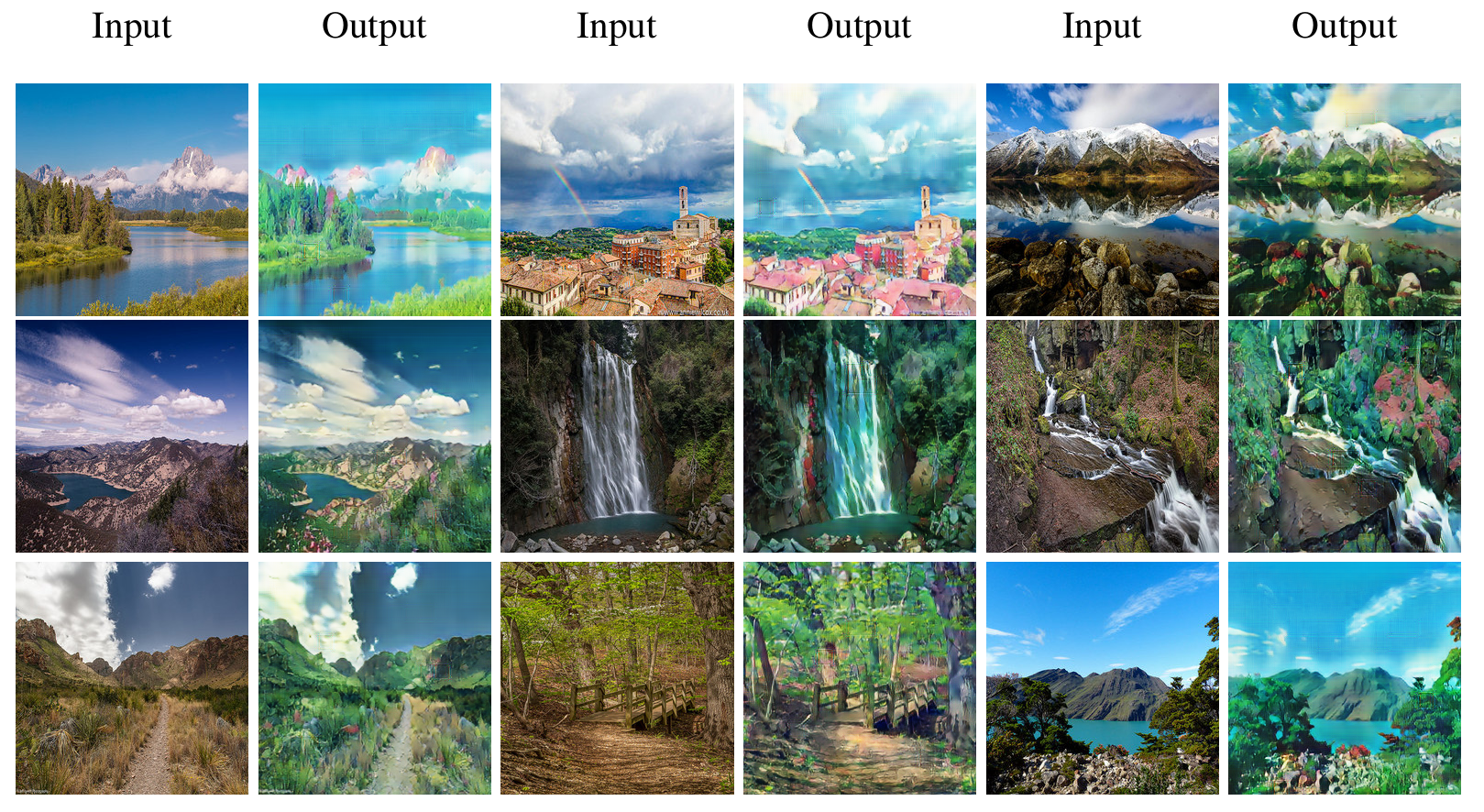

GANILLA results on the illustration dataset:

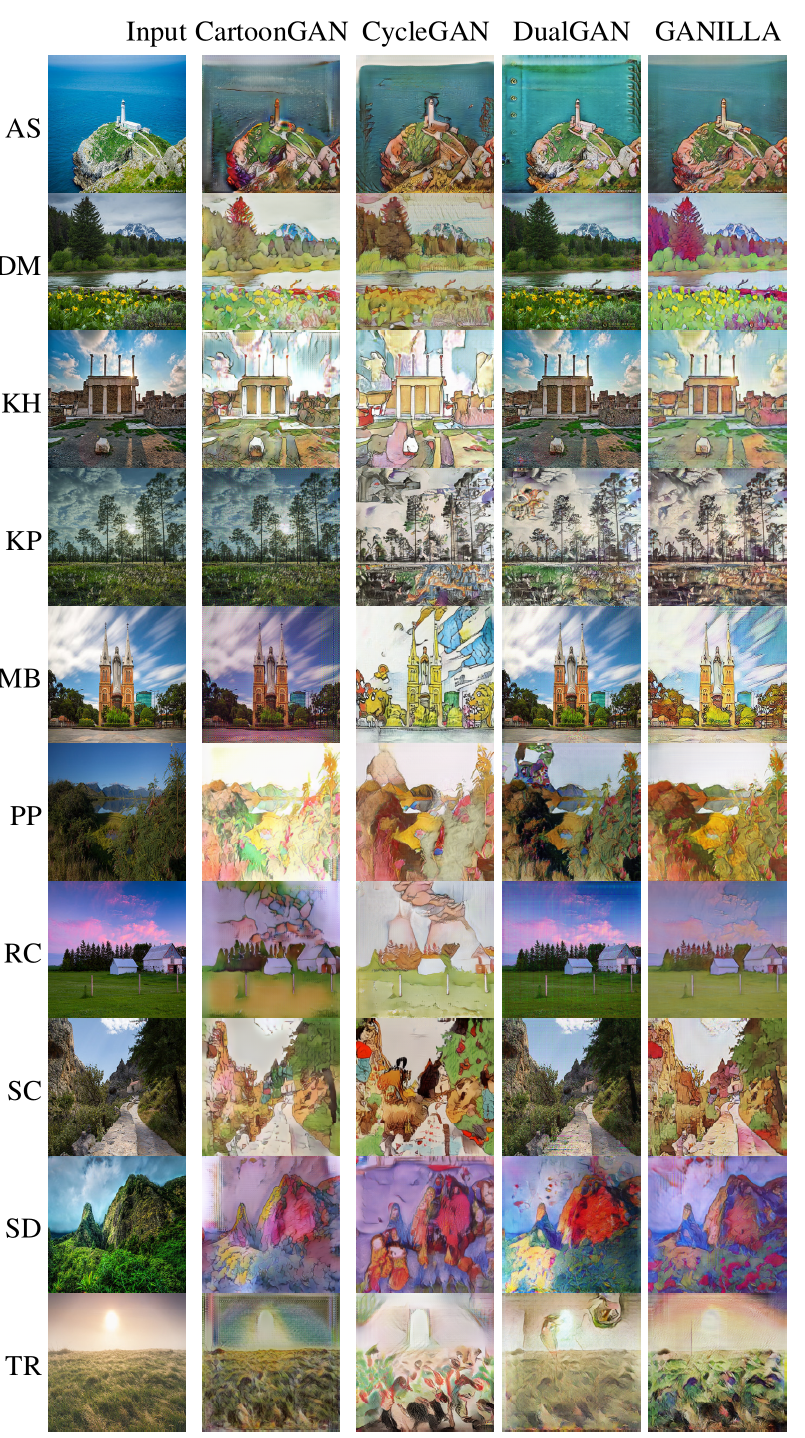

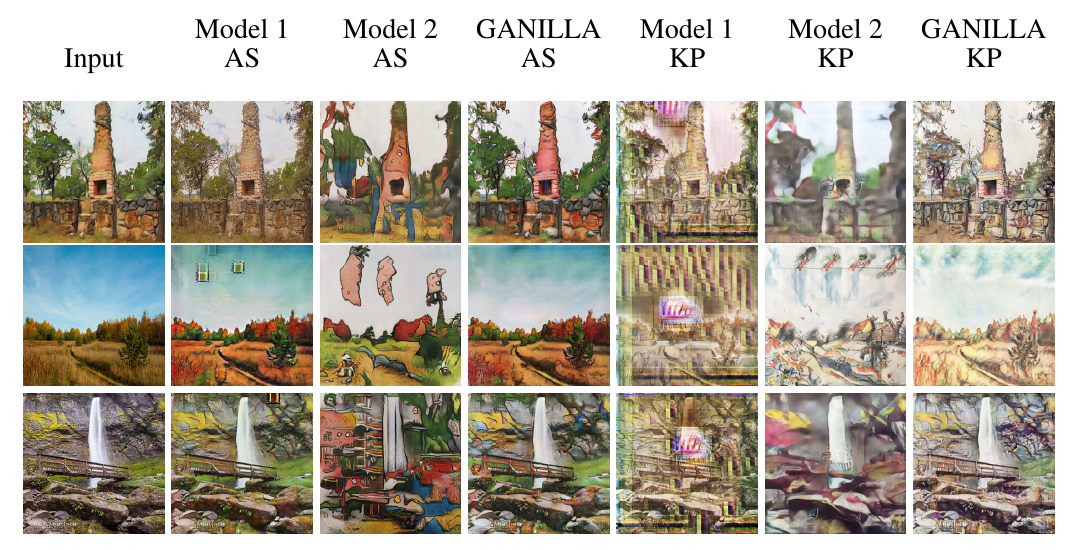

Comparison with other methods:

Style transfer using Miyazaki's anime images:

Ablation Experiments:

- Linux, macOS or Windows

- Python 2 or 3

- CPU or NVIDIA GPU + CUDA CuDNN
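To check a machine against the prerequisites above, a small script along these lines can report the operating system, the Python version, and whether a CUDA-enabled PyTorch build is visible. This is only a sketch: `describe_environment` is a hypothetical helper that is not part of this repo, and the PyTorch check is skipped when `torch` is not installed yet.

```python
import platform
import sys


def describe_environment():
    """Collect basic facts about the current machine (hypothetical helper)."""
    info = {
        "os": platform.system(),  # e.g. "Linux", "Darwin" (macOS) or "Windows"
        "python": "{}.{}".format(sys.version_info[0], sys.version_info[1]),
        "torch": None,   # filled in below if PyTorch is importable
        "cuda": False,   # stays False on CPU-only setups
    }
    try:
        import torch  # optional here: PyTorch may not be installed yet
        info["torch"] = torch.__version__
        info["cuda"] = bool(torch.cuda.is_available())
    except ImportError:
        pass
    return info


for key, value in sorted(describe_environment().items()):
    print("{}: {}".format(key, value))
```

A `cuda: False` result is not an error, since the code also runs on CPU.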

Please refer to datasets.md for details.

- Clone this repo:

git clone https://github.com/giddyyupp/ganilla.git

cd ganilla

- Install PyTorch 0.4+ and torchvision from http://pytorch.org and other dependencies (e.g., visdom and dominate). You can install all the dependencies by

pip install -r requirements.txt

- For Conda users, we include a script ./scripts/conda_deps.sh to install PyTorch and other libraries.

- Download a GANILLA/CycleGAN dataset (e.g. maps):

bash ./datasets/download_cyclegan_dataset.sh maps

- Train a model:

#!./scripts/train_ganilla.sh

python train.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan --netG resnet_fpn

- To view training results and loss plots, run

python -m visdom.server

and click the URL http://localhost:8097. To see more intermediate results, check out ./checkpoints/maps_cyclegan/web/index.html

- Test the model:

#!./scripts/test_cyclegan.sh

python test.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan --netG resnet_fpn

The test results will be saved to an HTML file here: ./results/maps_cyclegan/latest_test/index.html.

You can find more scripts in the scripts directory.
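The training and testing commands above differ only in the script name, so both can be assembled from one set of options. The sketch below uses hypothetical helpers (`build_command` and `results_page` do not exist in this repo) and relies only on the flags and output path shown above. It prints the commands rather than running them.

```python
def build_command(script, dataroot, name, model="cycle_gan", net_g="resnet_fpn"):
    """Return the argument list for train.py or test.py (hypothetical helper)."""
    if script not in ("train.py", "test.py"):
        raise ValueError("script must be 'train.py' or 'test.py'")
    return [
        "python", script,
        "--dataroot", dataroot,
        "--name", name,      # experiment name, also used for output folders
        "--model", model,
        "--netG", net_g,     # generator architecture
    ]


def results_page(name):
    """Path of the HTML page written by test.py, as stated above."""
    return "./results/{}/latest_test/index.html".format(name)


train_cmd = build_command("train.py", "./datasets/maps", "maps_cyclegan")
test_cmd = build_command("test.py", "./datasets/maps", "maps_cyclegan")

print(" ".join(train_cmd))
print(" ".join(test_cmd))
print(results_page("maps_cyclegan"))
```

To actually launch a run, the list can be passed to `subprocess.check_call` from the repository root.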

- You can download pretrained models using the following link

Put a pretrained model under ./checkpoints/{name}_pretrained/100_net_G.pth.

- To test the model, you also need to download the monet2photo dataset and use trainB images as source:

bash ./datasets/download_cyclegan_dataset.sh monet2photo

- Then generate the results using

python test.py --dataroot datasets/monet2photo/testB --name {name}_pretrained --model test

The option --model test generates GANILLA results in one direction only. Running python test.py --model cycle_gan requires loading the models and generating results in both directions, which is sometimes unnecessary. The results will be saved at ./results/. Use --results_dir {directory_path_to_save_result} to specify the results directory.

- If you would like to apply a pre-trained model to a collection of input images (rather than image pairs), please use --dataset_mode single and --model test options. Here is a script to apply a model to Facade label maps (stored in the directory facades/testB).

#!./scripts/test_single.sh

python test.py --dataroot ./datasets/monet2photo/testB/ --name {your_trained_model_name} --model test

You might want to specify --netG to match the generator architecture of the trained model.
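The pretrained-model steps above can be sketched as a short script: copy a downloaded generator checkpoint to the expected location, then assemble the one-directional test command. This is a sketch under assumptions: `install_checkpoint` and `single_test_command` are hypothetical helpers that are not part of this repo, and the model name `my_style` is a placeholder. Only the target path and the flags come from the text above.

```python
import os
import shutil


def install_checkpoint(downloaded_path, name, checkpoints_dir="./checkpoints"):
    """Copy a downloaded generator to {checkpoints_dir}/{name}_pretrained/100_net_G.pth."""
    target_dir = os.path.join(checkpoints_dir, name + "_pretrained")
    if not os.path.isdir(target_dir):
        os.makedirs(target_dir)
    target = os.path.join(target_dir, "100_net_G.pth")
    shutil.copyfile(downloaded_path, target)
    return target


def single_test_command(dataroot, name, net_g=None, dataset_mode=None, results_dir=None):
    """Build the test.py call that generates results in one direction only."""
    cmd = ["python", "test.py",
           "--dataroot", dataroot,
           "--name", name + "_pretrained",
           "--model", "test"]
    if net_g is not None:         # match the generator of the trained model
        cmd += ["--netG", net_g]
    if dataset_mode is not None:  # e.g. "single" for a plain folder of images
        cmd += ["--dataset_mode", dataset_mode]
    if results_dir is not None:   # defaults to ./results/ when omitted
        cmd += ["--results_dir", results_dir]
    return cmd


# "my_style" is a placeholder model name used only for illustration.
print(" ".join(single_test_command("datasets/monet2photo/testB", "my_style",
                                   net_g="resnet_fpn")))
```

Calling `install_checkpoint` with the path of the file you downloaded creates the `{name}_pretrained` folder if it does not exist yet.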

We share the style and content CNNs in this repo. It contains the training and testing procedures as well as pretrained weights for both CNNs.

Best practice for training and testing your models.

Before you post a new question, please first look at the above Q & A and existing GitHub issues.

If you use this code for your research, please cite our papers.

    @article{hicsonmez2020ganilla,
      title={GANILLA: Generative adversarial networks for image to illustration translation},
      author={Hicsonmez, Samet and Samet, Nermin and Akbas, Emre and Duygulu, Pinar},
      journal={Image and Vision Computing},
      pages={103886},
      year={2020},
      publisher={Elsevier}
    }

    @inproceedings{Hicsonmez:2017:DDN:3078971.3078982,
      author = {Hicsonmez, Samet and Samet, Nermin and Sener, Fadime and Duygulu, Pinar},
      title = {DRAW: Deep Networks for Recognizing Styles of Artists Who Illustrate Children's Books},
      booktitle = {Proceedings of the 2017 ACM on International Conference on Multimedia Retrieval},
      year = {2017}
    }

Our code is heavily inspired by CycleGAN.

The numerical calculations reported in this work were fully performed at TUBITAK ULAKBIM, High Performance and Grid Computing Center (TRUBA resources).