In recent years, Procedural Level Generation via Machine Learning (PLGML) techniques have been applied to generate game levels. These approaches rely on human-annotated representations of game levels. Creating annotated datasets for games requires domain knowledge and is time-consuming. Hence, though a large number of video games exist, annotated datasets have been curated for only a small handful. Current PLGML techniques have therefore been explored in limited domains, with Super Mario Bros. as the most common example. To address this problem, we present tile embeddings, a unified, affordance-rich representation for tile-based 2D games. To learn this embedding, we employ autoencoders trained on the visual and semantic information of tiles from a set of existing, human-annotated games. We evaluate this representation on its ability to predict affordances for unseen tiles, and to serve as a PLGML representation for annotated and unannotated games.

To promote future research and as a contribution to the PCGML community, this repository provides:

- An end-to-end implementation for extracting the data and training an autoencoder to obtain a single, unified representation, which we refer to as tile embeddings.

- A tutorial notebook for generating levels of the game Bubble Bobble using LSTMs and tile embeddings.

- JSON files with context data for every unique tile type.

- Preprocessed level images for the game Bubble Bobble.

- Data Extraction and Preparation

- Autoencoder Training

- Level Representation using tile embeddings

- Bubble Bobble level generation using LSTM

- How can you contribute to this project

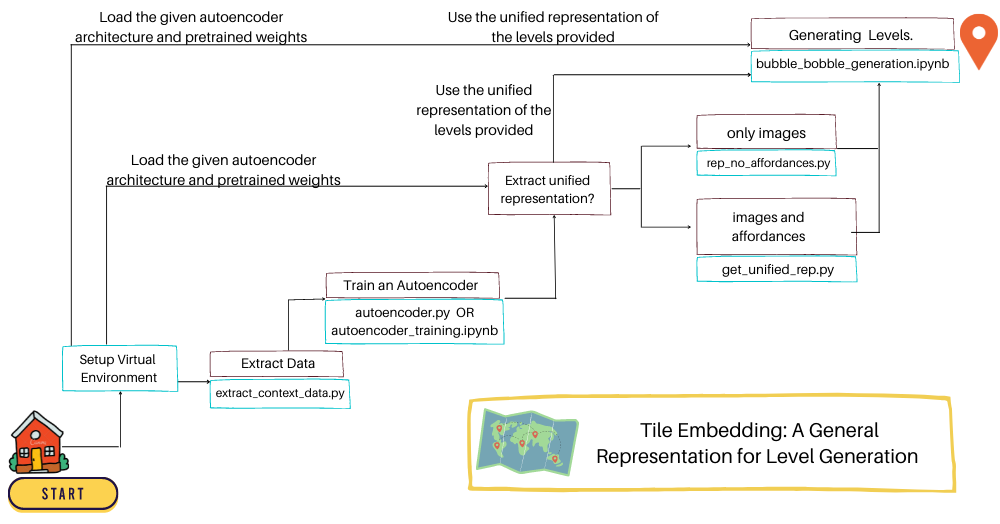

Too many scripts to run? The flow chart shows which scripts to run and in what order :)

git clone https://github.com/js-mrunal/tile_embeddings.git

- Install Pipenv

pip3 install pipenv

- Setup the virtual environment

make init

- Run Jupyter Notebook on your local server

make jupyter-notebook


Prerequisites:

- Step 0

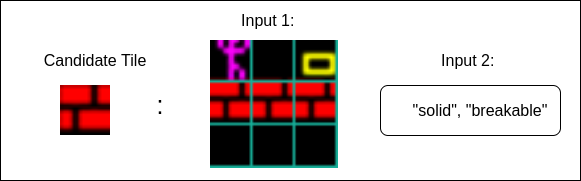

The training data for our implementation includes five games: Super Mario Bros, Kid Icarus, Legend of Zelda, Lode Runner, and Megaman. To train the autoencoder to obtain an embedded representation of a tile, we draw on the local pixel context and the affordances of the candidate tile.

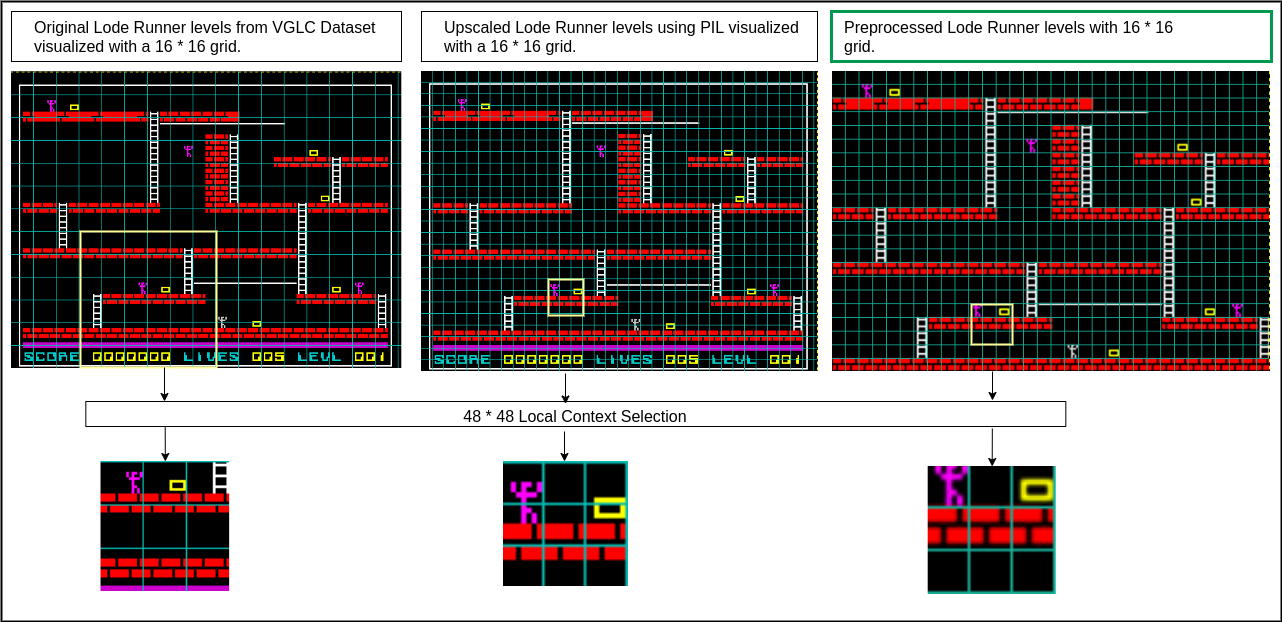

- Local Pixel Context: To extract each 16 × 16 tile along with its local context, we slide a 48 × 48 window over the level images. The parent dataset for the level images is VGLC. However, the level images for some games have extra pixels along the vertical or horizontal axis, which results in off-centered tile sprite extraction (as shown in the figure). We perform preliminary image manipulations on this dataset to fix the dimensions of such level images. Lode Runner levels have an 8 × 8 tile size, which we upscaled to 16 × 16 using the PIL library. We provide the preprocessed dataset in the directory vglc.
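The sliding-window idea can be sketched in a few lines. This is only an illustration and not the code in extract_context_data.py: the nested-list image type, the zero padding at the level border, and the names `pad` and `iter_contexts` are all assumptions made for the example.

```python
from typing import Iterator, List, Tuple

Image = List[List[int]]  # row-major pixel grid (illustrative stand-in for a real image)

TILE = 16     # tile size in pixels, from the text
CONTEXT = 48  # window size in pixels, from the text (the tile plus one tile on each side)


def pad(image: Image, border: int, fill: int = 0) -> Image:
    """Surround the image with `border` pixels of `fill` (assumed border handling)."""
    width = len(image[0])
    blank = [fill] * (width + 2 * border)
    rows = [[fill] * border + list(row) + [fill] * border for row in image]
    top = [list(blank) for _ in range(border)]
    bottom = [list(blank) for _ in range(border)]
    return top + rows + bottom


def iter_contexts(image: Image) -> Iterator[Tuple[int, int, Image]]:
    """Yield (tile_row, tile_col, window) with the tile centred in a 48x48 window."""
    height, width = len(image), len(image[0])
    # Extra pixels along an axis would shift every later tile off-centre.
    if height % TILE or width % TILE:
        raise ValueError("image dimensions must be multiples of the tile size")
    padded = pad(image, TILE)
    for r in range(height // TILE):
        for c in range(width // TILE):
            top, left = r * TILE, c * TILE  # window origin in padded coordinates
            window = [row[left:left + CONTEXT] for row in padded[top:top + CONTEXT]]
            yield r, c, window
```

The `ValueError` mirrors the reason for the preprocessing described above: if a level image is not an exact multiple of the tile size, the window no longer lines up with the tile grid.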

To extract the context for all five games, run the following:

- Move to the directory: notebooks

cd notebooks/

- Run the following command in shell

pipenv run python extract_context_data.py

On successful execution of this code, navigate to the folder data > context_data. Each game directory is populated with the visual local contexts, separated into sub-directories by tile character. A JSON file is also created in each game directory. It is a dictionary keyed by the centre tile that lists all of the neighbourhoods in which that tile occurs.
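As a rough picture of what such a dictionary can look like, the sketch below builds one from a tiny text level. The 3 × 3 neighbourhood layout, the padding character `@`, and the function name `neighbourhoods` are assumptions for illustration; the JSON files produced by the script may be organised differently.

```python
import json
from typing import Dict, List

PAD = "@"  # hypothetical padding character for positions outside the level


def neighbourhoods(level: List[str]) -> Dict[str, List[List[str]]]:
    """Map each centre tile character to the distinct 3x3 neighbourhoods around it."""
    width = len(level[0])
    edge = PAD * (width + 2)
    padded = [edge] + [PAD + row + PAD for row in level] + [edge]
    result: Dict[str, List[List[str]]] = {}
    for r in range(1, len(padded) - 1):
        for c in range(1, width + 1):
            patch = [padded[r + dr][c - 1:c + 2] for dr in (-1, 0, 1)]
            seen = result.setdefault(padded[r][c], [])
            if patch not in seen:  # keep each neighbourhood only once
                seen.append(patch)
    return result


level = ["--", "XX"]  # toy level: a row of sky above a row of ground
print(json.dumps(neighbourhoods(level), indent=2))
```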

- Affordances: We define a single, unified set of 13 tags across the games. The tile character to behaviour mapping is provided as JSON files.
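One way to turn such a mapping into a network input is a 13-dimensional multi-hot vector. The sketch below assumes that encoding; the tag names, the tile characters, and the inline JSON are illustrative placeholders rather than the contents of the provided JSON files.

```python
import json
from typing import Dict, List

# Illustrative tag names only: the text states that there are 13 tags,
# not what they are called in the repository's JSON files.
TAGS = [
    "block", "breakable", "climbable", "collectable", "element", "empty",
    "hazard", "moving", "openable", "passable", "pipe", "solid", "wall",
]

# Hypothetical tile-character-to-behaviour mapping for one game.
MAPPING_JSON = '{"X": ["solid", "block"], "-": ["passable", "empty"], "E": ["hazard", "moving"]}'
mapping: Dict[str, List[str]] = json.loads(MAPPING_JSON)


def encode(tile: str, tile_to_tags: Dict[str, List[str]]) -> List[int]:
    """Return a multi-hot vector with a 1 for every tag the tile carries."""
    tags = set(tile_to_tags[tile])
    unknown = tags - set(TAGS)
    if unknown:
        raise ValueError(f"unknown tags: {sorted(unknown)}")
    return [1 if tag in tags else 0 for tag in TAGS]


print(encode("X", mapping))
```

Because every game shares the same tag order, vectors from different games are directly comparable.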

Inputs obtained are as follows:

Prerequisites:

- Step 0

- You can skip Step 1 ONLY IF you want to directly load the provided autoencoder architecture and its pretrained weights (Step (2c)).

Now that we have both our inputs ready, we have to integrate them into a single latent vector representation, which we call tile embeddings. To get the embedding, we employ the X-shaped autoencoder architecture. Please find the details of the architecture in our paper.
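The paper defines the actual architecture. The sketch below only illustrates the data flow that an X shape suggests: two input branches meet in one shared latent vector, which then feeds two output branches. The dense layers, tanh activation, layer sizes, random untrained weights, and the class name `XAutoencoder` are all assumptions for this example.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def dense(weights: Matrix, x: Vector) -> Vector:
    """Bias-free linear layer followed by tanh."""
    return [math.tanh(sum(w * v for w, v in zip(row, x))) for row in weights]


def init(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small random weights; a real model would learn these by training."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class XAutoencoder:
    """Untrained toy model: two encoders, one shared latent vector, two decoders."""

    def __init__(self, image_dim: int, tag_dim: int, branch_dim: int,
                 latent_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.enc_image = init(branch_dim, image_dim, rng)
        self.enc_tags = init(branch_dim, tag_dim, rng)
        self.to_latent = init(latent_dim, 2 * branch_dim, rng)
        self.dec_image = init(image_dim, latent_dim, rng)
        self.dec_tags = init(tag_dim, latent_dim, rng)

    def embed(self, image: Vector, tags: Vector) -> Vector:
        """Encode both inputs, concatenate the branches, and project to the latent vector."""
        joined = dense(self.enc_image, image) + dense(self.enc_tags, tags)
        return dense(self.to_latent, joined)

    def reconstruct(self, embedding: Vector) -> Tuple[Vector, Vector]:
        """Decode the latent vector back into an image vector and a tag vector."""
        return dense(self.dec_image, embedding), dense(self.dec_tags, embedding)


model = XAutoencoder(image_dim=12, tag_dim=13, branch_dim=4, latent_dim=8)
embedding = model.embed([0.5] * 12, [0.0] * 13)
print(len(embedding))
```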

2a. The Jupyter notebook "notebooks > autoencoder_training.ipynb" provides a step-by-step guide to autoencoder training.

2b. You can also run the following commands to train the autoencoder and save the weights:

Move to the directory: notebooks

cd notebooks/

Run the following command in shell

pipenv run python autoencoder_training.py

2c. Load the provided architecture and pretrained weights directly to perform evaluation. Sample notebook:

evaluating_autoencoder_on_text.ipynb

Prerequisites:

- Step 0

- (Optional) Step 1 followed by Step 2

In this step we convert the levels to the embedding representation using tile embeddings. There are two ways to go about this step.

3a. If the game data has an affordance mapping, leverage it to get the tile embeddings. The following script converts the levels of all the games in our training dataset into a unified level representation by considering visuals as well as affordances.

pipenv run python generate_unified_rep.py

After this script runs, the embedding representation of the levels for the games Super Mario Bros, Kid Icarus, Megaman, Legend of Zelda, and Lode Runner will be stored in the directory data>unified_rep.

3b. When affordances are missing, we provide evidence that the autoencoder can still approximate the embedding vectors (see the paper). For such datasets, use the following script:

pipenv run python rep_no_affordances.py

After this script runs, the embedding representation of the Bubble Bobble levels will be stored in data>unified_rep>

*Note: Feel free to skip this step and use the provided pickle files directly. Navigate to data>unified_rep to find the embedding representation of the levels for the games Super Mario Bros, Kid Icarus, Megaman, Legend of Zelda, Lode Runner, and Bubble Bobble.*
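A minimal sketch for reading those files, assuming they sit directly in the directory and use a `.pickle` extension. The extension, the helper name `load_representations`, and the structure of the unpickled objects are assumptions, so inspect one file before relying on its shape.

```python
import pickle
from pathlib import Path
from typing import Any, Dict


def load_representations(directory: str) -> Dict[str, Any]:
    """Load every *.pickle file in `directory`, keyed by file name without extension."""
    loaded: Dict[str, Any] = {}
    for path in sorted(Path(directory).glob("*.pickle")):  # extension is an assumption
        with path.open("rb") as handle:
            loaded[path.stem] = pickle.load(handle)
    return loaded


if __name__ == "__main__":
    levels = load_representations("data/unified_rep")
    for name, value in levels.items():
        print(name, type(value).__name__)
```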

Prerequisites:

The notebook bubble_bobble_generation.ipynb provides step-by-step instructions in detail for generating levels of the game Bubble Bobble using LSTM and tile embeddings.
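Sequence models such as LSTMs are usually trained on (history, next item) pairs. The sketch below shows one way to build such pairs from a level stored as a grid of embedding vectors. The row-major ordering, the fixed history length, and the name `make_training_pairs` are assumptions for illustration; the notebook may prepare its data differently.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]
Pair = Tuple[List[Vector], Vector]


def make_training_pairs(level: List[List[Vector]], history: int) -> List[Pair]:
    """Flatten the level row by row and pair each run of `history` tiles with the next tile."""
    if history < 1:
        raise ValueError("history must be at least 1")
    sequence = [vector for row in level for vector in row]
    return [
        (sequence[i:i + history], sequence[i + history])
        for i in range(len(sequence) - history)
    ]


# Toy 2 x 3 level with one-dimensional "embeddings".
toy_level = [[[0.0], [1.0], [2.0]], [[3.0], [4.0], [5.0]]]
for past, target in make_training_pairs(toy_level, history=2):
    print(past, "->", target)
```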

- Add more data! Train the autoencoder on more games to enrich the corpus.

- Perform cool experiments with tile embedding, and cite us :)

- Find bugs and help us make this repository better!

Follow the same pipenv steps listed above for the original repo to install the dependencies and activate the environment.

cd src

python generate_tiles_from_minigrids.py

python load_data.py

python generate_batches.py

The training can potentially be improved to overcome exploding gradients. Currently it cannot run for more than 10 epochs before the values explode into NaNs :( It still learns enough to capture all minigrid objects with only some noise.
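A common remedy for exploding gradients is to clip them by their global norm before each update. The sketch below shows the arithmetic in plain Python; it has not been tried on this model, and the function name `clip_by_global_norm` and the list-of-lists gradient layout are assumptions for illustration. Most deep learning frameworks ship an equivalent option.

```python
import math
from typing import List

Gradients = List[List[float]]  # one flat list of gradient values per layer


def clip_by_global_norm(grads: Gradients, max_norm: float) -> Gradients:
    """Rescale all gradients together so that their combined L2 norm is at most max_norm."""
    total = math.sqrt(sum(g * g for layer in grads for g in layer))
    if total <= max_norm:
        return [list(layer) for layer in grads]  # already small enough, return a copy
    scale = max_norm / total
    return [[g * scale for g in layer] for layer in grads]


clipped = clip_by_global_norm([[3.0], [4.0]], max_norm=1.0)
print(clipped)
```

Scaling every layer by the same factor keeps the update direction unchanged and only limits its size.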

python generate_batches.py

cd notebooks

Run the Jupyter notebook evaluate_autoencoder.ipynb

cd notebooks

Run the Jupyter notebook observations_to_embedding.ipynb