This repository contains the code for CloudGAN, a method to detect and inpaint clouds in satellite RGB images. It consists of two components: cloud detection with an auto-encoder (AE) and cloud inpainting with the SN-PatchGAN (GitHub). An overview of the method is given in the figure below.

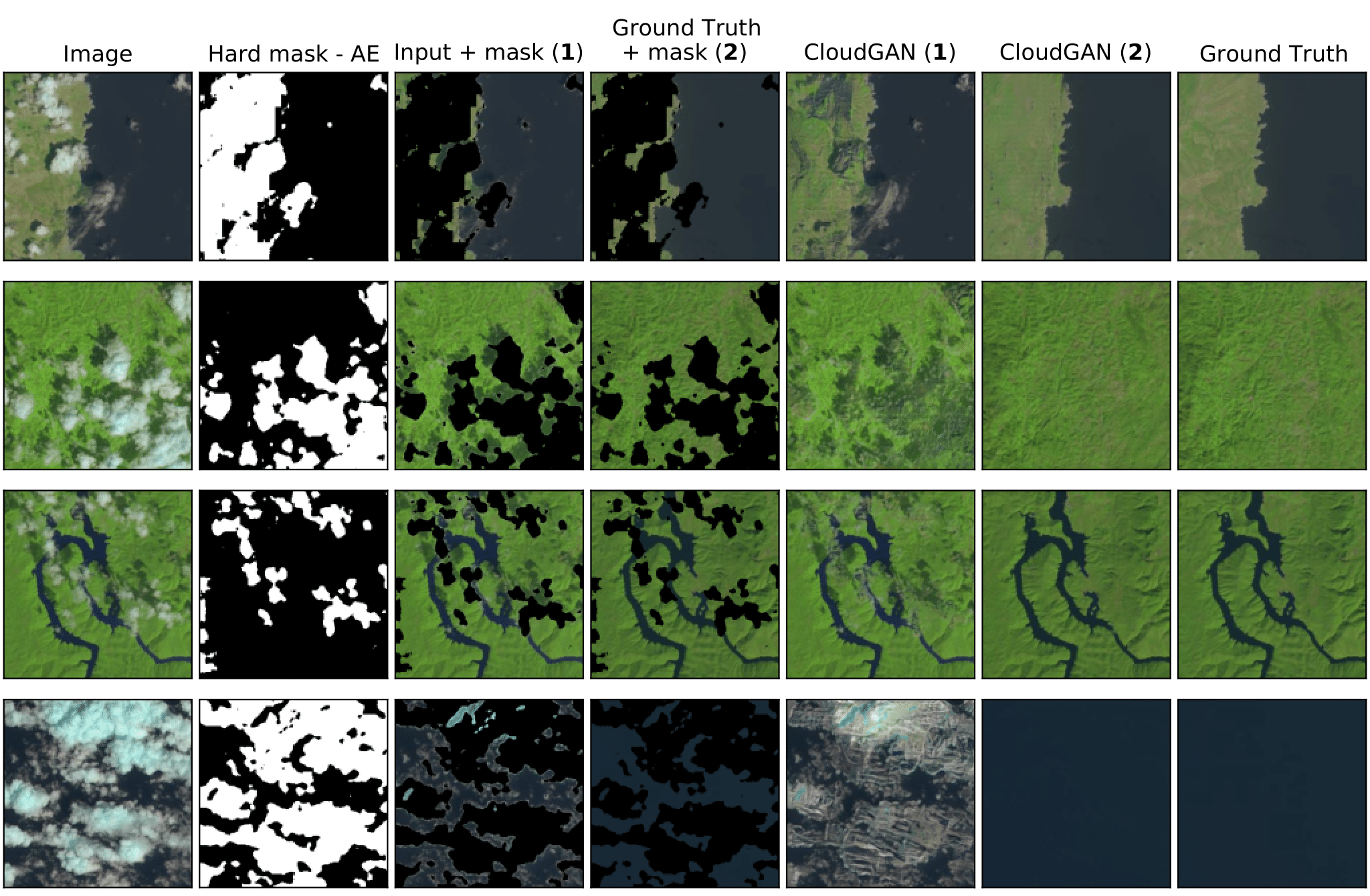

Four examples of the CloudGAN input and output are also visualized here. The columns contain the following items:

- Column 1: RGB-image with the clouds. This serves as the input for CloudGAN.

- Column 2: The cloud mask generated by the AE (white = clouds, black = no clouds).

- Columns 3 and 5: The input image with the cloud mask subtracted (column 3), marked with (1). This image is used to generate the output in column 5. This is the actual CloudGAN.

- Columns 4 and 6: The ground truth with the cloud mask subtracted (column 4), marked with (2), and the corresponding output (column 6). This set shows how the method would perform if the cloud mask were perfect.

- Column 7: The ground truth.
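The data flow from column 1 to column 5 can be sketched as follows. This is a minimal illustration, not the repository's code: `detect_clouds` and `inpaint` are hypothetical stand-ins for the AE and the SN-PatchGAN, binarizing the mask at mid-gray is an assumption, and "subtracting" the mask is taken to mean setting the cloudy pixels to zero.

```python
import numpy as np


def apply_cloud_mask(image, mask):
    """Blank out cloudy pixels, i.e. "subtract" the cloud mask from the image.

    image: uint8 array (256, 256, 3) with values in [0, 255]
    mask:  uint8 array (256, 256, 3) with values in [0, 255], white (255) = cloud
    """
    cloudy = mask > 127  # assumption: binarize the mask at mid-gray
    return np.where(cloudy, 0, image).astype(np.uint8)


def cloudgan_pipeline(image, detect_clouds, inpaint):
    """Hypothetical two-stage pipeline: cloud detection, masking, inpainting."""
    mask = detect_clouds(image)             # column 2: cloud mask from the AE
    masked = apply_cloud_mask(image, mask)  # column 3: input with clouds removed
    output = inpaint(masked, mask)          # column 5: inpainted result
    return mask, masked, output
```

Passing the ground truth instead of the input image to `apply_cloud_mask` gives the variant marked (2) in column 4.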

There are significant differences between the outputs of CloudGAN (1) and CloudGAN (2). In short, the AE does not detect cloud edges and shadows, so the contextual attention of the SN-PatchGAN reuses those textures to fill in the masked regions. For more details, please read the included paper.

An overview of all included files is given below. Note that some scripts contain absolute or relative paths that may need to be updated for your machine.

| Directory / file | Description |
|---|---|
| config | .yml files containing the configuration for the AE and SN-PatchGAN |
| cloud_detection | Files containing the cloud detection methods (AE, U-Net and a naive approach) |
| cloud_removal | Files to train and run the SN-PatchGAN on the cloud inpainting task |
| figures | Figures for the README |
| CloudGAN.py | Python script to run CloudGAN on your input image |
| paper.pdf | Paper describing CloudGAN |
| requirements.txt | Python package dependencies |

The following packages are required to run the CloudGAN code:

- python==3.6.13
- tensorflow-gpu==1.15
- numpy==1.19.5
- h5py==2.10.0
- neuralgym
- scikit-image

For the packages listed with a version, exactly that version must be used. For all other dependencies, other versions may also work. A complete list is given in requirements.txt, although not every package in it may be required.
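To check whether an environment matches the pinned versions, one option is to compare them against the output of `pip freeze`. The sketch below is not part of the repository and its function names are hypothetical. `PINS` mirrors the list above (neuralgym and scikit-image are left out because no version is given), and Python itself is checked via `sys.version_info`. Versions are compared with trailing `.0` components dropped, since pip reports `tensorflow-gpu==1.15` as `1.15.0`. The code avoids features newer than Python 3.6.

```python
import subprocess
import sys

# Exact versions from the requirements list (Python is checked separately).
PINS = {
    "tensorflow-gpu": "1.15",
    "numpy": "1.19.5",
    "h5py": "2.10.0",
}


def _normalize(version):
    """Drop trailing ".0" parts so that "1.15" and "1.15.0" compare equal."""
    parts = version.split(".")
    while len(parts) > 1 and parts[-1] == "0":
        parts.pop()
    return parts


def parse_freeze(text):
    """Parse `pip freeze` output ("name==version" lines) into a dict."""
    installed = {}
    for line in text.splitlines():
        line = line.strip()
        if "==" in line and not line.startswith("#"):
            name, version = line.split("==", 1)
            installed[name.lower().replace("_", "-")] = version
    return installed


def find_mismatches(installed, pins=PINS):
    """Return (package, expected, found) for every pin that is not satisfied."""
    mismatches = []
    for name, expected in pins.items():
        found = installed.get(name)
        if found is None or _normalize(found) != _normalize(expected):
            mismatches.append((name, expected, found))
    return mismatches


def check_environment():
    """Run `pip freeze` in the current interpreter and describe any mismatches."""
    freeze = subprocess.run([sys.executable, "-m", "pip", "freeze"],
                            stdout=subprocess.PIPE,
                            universal_newlines=True).stdout
    problems = []
    if sys.version_info[:3] != (3, 6, 13):
        problems.append("python: expected 3.6.13, found {}".format(
            sys.version.split()[0]))
    for name, expected, found in find_mismatches(parse_freeze(freeze)):
        problems.append("{}: expected {}, found {}".format(name, expected, found))
    return problems
```

Calling `check_environment()` inside the environment returns an empty list when all pins are satisfied.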

The weights that we obtained during training can be downloaded via the following links: AE, U-Net and SN-PatchGAN.

Two shell scripts (ae.sh and unet.sh) contain all the settings used to train three replications of each network. To train a model manually, run the main.py script in the cloud_detection directory. Use the --help flag to get a summary of all available options.

```bash
python3 main.py [options]
```

For the naive approach, the cloud probabilities per HSV component are precomputed and stored in the .npy files. If these files are missing, the code recomputes the probabilities and saves them. Currently, the naive approach is evaluated over the entire 38-Cloud test set, but with minor changes you can use it as your cloud detection method.
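The README does not describe how the per-component probabilities are computed, so the following is only a sketch of one plausible variant, not the repository's implementation. It assumes that each of H, S and V is binned into a histogram, that P(cloud | bin) is estimated from labeled masks, and that a pixel is labeled as cloud when the mean of its three probabilities exceeds a threshold. The function names, the 256 bins and the 0.5 threshold are hypothetical. Inputs are assumed to be HSV images with values in [0, 1], such as the output of `skimage.color.rgb2hsv`. The caching step mirrors the behavior described above: load the .npy file if it exists, otherwise compute and save it.

```python
import os

import numpy as np


def estimate_hsv_cloud_probs(hsv_images, masks, n_bins=256):
    """Estimate P(cloud | bin) separately for the H, S and V components.

    hsv_images: float array (N, H, W, 3) with values in [0, 1]
    masks:      array (N, H, W), nonzero = cloud
    Returns a (3, n_bins) array of per-component cloud probabilities.
    """
    bins = np.clip((hsv_images * n_bins).astype(int), 0, n_bins - 1)
    cloud = masks.astype(bool)
    probs = np.zeros((3, n_bins))
    for c in range(3):
        idx = bins[..., c]
        total = np.bincount(idx.ravel(), minlength=n_bins)
        cloudy = np.bincount(idx[cloud], minlength=n_bins)
        # Bins that never occur in the data keep probability 0.
        probs[c] = np.divide(cloudy, total, out=np.zeros(n_bins), where=total > 0)
    return probs


def load_or_compute_probs(path, hsv_images, masks):
    """Load cached probabilities from `path`, or compute and save them."""
    if not path.endswith(".npy"):
        path += ".npy"  # np.save appends ".npy" itself; keep the existence check consistent
    if os.path.exists(path):
        return np.load(path)
    probs = estimate_hsv_cloud_probs(hsv_images, masks)
    np.save(path, probs)
    return probs


def naive_cloud_mask(hsv_image, probs, threshold=0.5):
    """Label a pixel as cloud when its mean per-component probability exceeds threshold."""
    n_bins = probs.shape[1]
    bins = np.clip((hsv_image * n_bins).astype(int), 0, n_bins - 1)
    p = np.mean([probs[c][bins[..., c]] for c in range(3)], axis=0)
    return p > threshold
```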

For the SN-PatchGAN, we refer you to its GitHub repository. Note that we made some changes to its code, so unfortunately it is not possible to train a new instance with the SN-PatchGAN code in this repository.

To run the complete method, use the provided CloudGAN.py script. The following command runs it on your own satellite image, which must have a resolution of 256x256:

```bash
python3 -m CloudGAN --img [path_to_input] --target [path_to_target] \
    --mask [path_to_mask] --output [path_to_output] \
    --weights_GAN [GAN_checkpoint] --config_GAN [.yml file] \
    --weights_AE [AE_checkpoint] --config_AE [.yml file]
```

In this example, the generated mask and output are stored at the paths given by --mask and --output. It is also possible to provide your own cloud mask: pass its path with --mask (three channels, i.e. 256x256x3, with values in [0, 255]) and enable the --no_AE flag. The --target option is optional; if it is given, the script computes the SSIM and PSNR between the generated and target images. Finally, add the --debug option to show more debug messages from TensorFlow.
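The README does not state exactly how CloudGAN.py computes the SSIM and PSNR. For reference, the sketch below computes PSNR for 8-bit images from the standard definition PSNR = 10 log10(255² / MSE); the function name is hypothetical. For SSIM, scikit-image (already a dependency) offers `skimage.metrics.structural_similarity` in version 0.16 and later, alongside `skimage.metrics.peak_signal_noise_ratio`.

```python
import numpy as np


def psnr_uint8(generated, target):
    """Peak signal-to-noise ratio in dB between two 8-bit images of equal shape."""
    generated = np.asarray(generated, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    if generated.shape != target.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((generated - target) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(255.0 ** 2 / mse)
```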

For --weights_GAN, point to the checkpoint file. For --weights_AE, point to the checkpoint.h5 file.
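When processing many images, it can be convenient to assemble the command from Python. The helper below is a hypothetical sketch, not part of the repository. It uses only the flags documented above, adds --target, --no_AE and --debug when requested, and leaves the checkpoint paths (the GAN checkpoint file and the AE checkpoint.h5 file) to the caller. The README does not say whether the AE options may be omitted together with --no_AE, so the sketch always passes them. It uses `sys.executable` instead of `python3` so the command runs in the current interpreter.

```python
import sys


def build_cloudgan_command(img, mask, output, weights_gan, config_gan,
                           weights_ae, config_ae, target=None,
                           no_ae=False, debug=False):
    """Assemble the CloudGAN.py command line as a list of arguments."""
    cmd = [sys.executable, "-m", "CloudGAN",
           "--img", img, "--mask", mask, "--output", output,
           "--weights_GAN", weights_gan, "--config_GAN", config_gan,
           "--weights_AE", weights_ae, "--config_AE", config_ae]
    if target is not None:
        cmd += ["--target", target]  # enables SSIM/PSNR against the target image
    if no_ae:
        cmd.append("--no_AE")  # use the mask at `mask` instead of the AE output
    if debug:
        cmd.append("--debug")  # show more TensorFlow debug messages
    return cmd
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from the repository root.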

Two datasets are used to train the models: 38-Cloud for cloud detection and RICE for cloud removal. Because we had to preprocess both datasets, we also provide our versions of them below. For the specifics of the preprocessing, we refer you to the paper.