Roy Ganz • Yair Kittenplon • Aviad Aberdam • Elad Ben Avraham

Oren Nuriel • Shai Mazor • Ron Litman

First, clone this repository:

git clone https://github.com/amazon-science/QA-ViT.git

cd QA-ViT

Next, to install the requirements in a new conda environment, run:

conda env create -f qavit.yml

conda activate qavit

Download the following datasets from the official websites and organize them as follows:

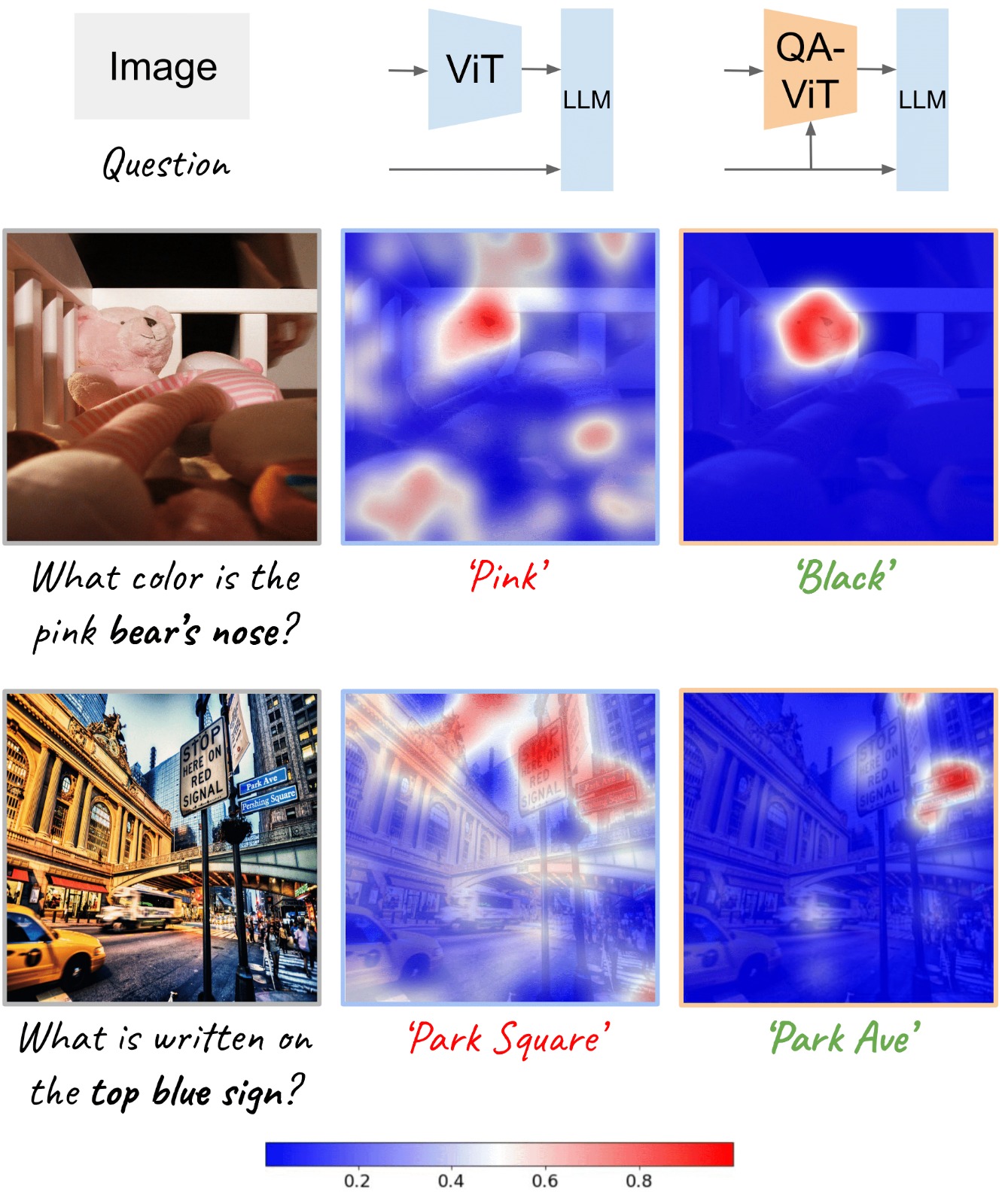

QA-ViT
├── configs
│   ├── ...
├── data
│   ├── textvqa
│   ├── stvqa
│   ├── OCRVQA
│   ├── vqav2
│   ├── vg
│   ├── textcaps
│   ├── docvqa
│   ├── infovqa
│   ├── vizwiz
├── models
│   ├── ...
├── ...
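
Before training, it can help to confirm that every dataset folder from the layout above is in place. The following is a minimal sketch, not part of the repository: the helper name `missing_datasets` is hypothetical, and it only checks that the folders exist under `data/`, not that their contents are complete.

```python
from pathlib import Path

# Dataset folder names copied from the directory layout above.
EXPECTED_DATASETS = [
    "textvqa", "stvqa", "OCRVQA", "vqav2", "vg",
    "textcaps", "docvqa", "infovqa", "vizwiz",
]


def missing_datasets(repo_root="."):
    """Return the expected dataset folders that are absent under <repo_root>/data."""
    data_dir = Path(repo_root) / "data"
    return [name for name in EXPECTED_DATASETS if not (data_dir / name).is_dir()]


if __name__ == "__main__":
    missing = missing_datasets()
    if missing:
        print("Missing dataset folders:", ", ".join(missing))
    else:
        print("All expected dataset folders are present.")
```

Run it from the repository root; an empty result means all nine folders were found.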

Our framework is based on DeepSpeed stage 2 and should be configured accordingly:

accelerate config

The accelerate config command opens an interactive dialog, which should be answered as follows:

| Model | DeepSpeed stage | Grad accumulation | Grad clipping | Dtype |
|---|---|---|---|---|
| ViT+T5 base | 2 | ❌ | 1.0 | bf16 |
| ViT+T5 large | 2 | ❌ | 1.0 | bf16 |
| ViT+T5 xl | 2 | 2 | 1.0 | bf16 |
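
For reference, the table above can be written down as data. The sketch below is illustrative only: it reads "❌" as "no gradient accumulation" (one step), which is an assumption, and the key names (`distributed_type`, `mixed_precision`, `deepspeed_config`, `zero_stage`, and so on) follow the fields that `accelerate config` usually writes to its YAML file; verify them against the file generated on your machine.

```python
# Values transcribed from the table above.
# Assumption: "❌" under "Grad accumulation" means 1 step (no accumulation).
SETTINGS = {
    "ViT+T5 base": {"zero_stage": 2, "grad_accum": 1, "grad_clip": 1.0, "dtype": "bf16"},
    "ViT+T5 large": {"zero_stage": 2, "grad_accum": 1, "grad_clip": 1.0, "dtype": "bf16"},
    "ViT+T5 xl": {"zero_stage": 2, "grad_accum": 2, "grad_clip": 1.0, "dtype": "bf16"},
}


def accelerate_answers(model):
    """Map one table row onto the fields of an accelerate config (assumed key names)."""
    s = SETTINGS[model]
    return {
        "distributed_type": "DEEPSPEED",
        "mixed_precision": s["dtype"],
        "deepspeed_config": {
            "zero_stage": s["zero_stage"],
            "gradient_accumulation_steps": s["grad_accum"],
            "gradient_clipping": s["grad_clip"],
        },
    }


if __name__ == "__main__":
    for name in SETTINGS:
        print(name, accelerate_answers(name))
```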

After setting up DeepSpeed, run the following command to train QA-ViT:

accelerate launch run_train.py --config <config> --seed <seed>

After setting up DeepSpeed, run the following command to evaluate a trained model:

accelerate launch run_eval.py --config <config> --ckpt <ckpt>

where <config> and <ckpt> specify the desired evaluation configuration and trained model checkpoint, respectively.
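
When running several seeds or checkpoints, the two commands above can be assembled programmatically. This is a small sketch that uses only the flags shown above (`--config`, `--seed`, `--ckpt`); the helper names and the path `configs/example.yaml` are hypothetical placeholders, not files or functions shipped with the repository.

```python
import shlex


def train_command(config, seed):
    """Build the training command shown above as an argument list."""
    return ["accelerate", "launch", "run_train.py",
            "--config", str(config), "--seed", str(seed)]


def eval_command(config, ckpt):
    """Build the evaluation command shown above as an argument list."""
    return ["accelerate", "launch", "run_eval.py",
            "--config", str(config), "--ckpt", str(ckpt)]


if __name__ == "__main__":
    # Hypothetical config path; replace it with a real file from configs/.
    for seed in (0, 1, 2):
        print(shlex.join(train_command("configs/example.yaml", seed)))
```

Each list can be passed to `subprocess.run(cmd, check=True)` to launch the job from the repository root.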

We provide trained checkpoints of QA-ViT in the table below:

| ViT+T5 base | ViT+T5 large | ViT+T5 xl |
|---|---|---|
| Download | Download | Download |

LLaVA's checkpoints will be uploaded soon.

| Method | VQAv2 (vqa-score) | COCO (CIDEr) | VQA<sup>T</sup> (vqa-score) | VQA<sup>ST</sup> (ANLS) | TextCaps (CIDEr) | VizWiz (vqa-score) | General Average | Scene-Text Average |
|---|---|---|---|---|---|---|---|---|
| ViT+T5-base | 66.5 | 110.0 | 40.2 | 47.6 | 86.3 | 23.7 | 88.3 | 65.1 |
| + QA-ViT | 71.7 | 114.9 | 45.0 | 51.1 | 96.1 | 23.9 | 93.3 | 72.1 |
| Δ | +5.2 | +4.9 | +4.8 | +3.5 | +9.8 | +0.2 | +5.0 | +7.0 |
| ViT+T5-large | 70.0 | 114.3 | 44.7 | 50.6 | 96.0 | 24.6 | 92.2 | 71.8 |
| + QA-ViT | 72.0 | 118.7 | 48.7 | 54.4 | 106.2 | 26.0 | 95.4 | 78.9 |
| Δ | +2.0 | +4.4 | +4.0 | +3.8 | +10.2 | +1.4 | +3.2 | +7.1 |
| ViT+T5-xl | 72.7 | 115.5 | 48.0 | 52.7 | 103.5 | 27.0 | 94.1 | 77.0 |
| + QA-ViT | 73.5 | 116.5 | 50.3 | 54.9 | 108.2 | 28.3 | 95.0 | 80.4 |
| Δ | +0.8 | +1.0 | +2.3 | +2.2 | +4.7 | +1.3 | +0.9 | +3.4 |
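
Each Δ row in the table above is the column-wise difference between the "+ QA-ViT" row and its baseline. The sketch below recomputes those differences from the reported scores; the numbers are transcribed from the table, and the short column labels and the helper name `deltas` are illustrative.

```python
# Column labels (shortened) in the same order as the results table.
COLUMNS = ["VQAv2", "COCO", "VQA-T", "VQA-ST", "TextCaps", "VizWiz",
           "General avg", "Scene-Text avg"]

# (baseline scores, scores with QA-ViT), transcribed from the table above.
RESULTS = {
    "ViT+T5-base": ([66.5, 110.0, 40.2, 47.6, 86.3, 23.7, 88.3, 65.1],
                    [71.7, 114.9, 45.0, 51.1, 96.1, 23.9, 93.3, 72.1]),
    "ViT+T5-large": ([70.0, 114.3, 44.7, 50.6, 96.0, 24.6, 92.2, 71.8],
                     [72.0, 118.7, 48.7, 54.4, 106.2, 26.0, 95.4, 78.9]),
    "ViT+T5-xl": ([72.7, 115.5, 48.0, 52.7, 103.5, 27.0, 94.1, 77.0],
                  [73.5, 116.5, 50.3, 54.9, 108.2, 28.3, 95.0, 80.4]),
}


def deltas(baseline, with_qavit):
    """Column-wise improvement, rounded to one decimal like the table."""
    return [round(q - b, 1) for b, q in zip(baseline, with_qavit)]


if __name__ == "__main__":
    for model, (base, qavit) in RESULTS.items():
        row = ", ".join(f"{c}: {d:+.1f}" for c, d in zip(COLUMNS, deltas(base, qavit)))
        print(f"{model}: {row}")
```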

If you find this code or data to be useful for your research, please consider citing it.

@article{ganz2024question,
  title={Question Aware Vision Transformer for Multimodal Reasoning},
  author={Ganz, Roy and Kittenplon, Yair and Aberdam, Aviad and Avraham, Elad Ben and Nuriel, Oren and Mazor, Shai and Litman, Ron},
  journal={arXiv preprint arXiv:2402.05472},
  year={2024}
}