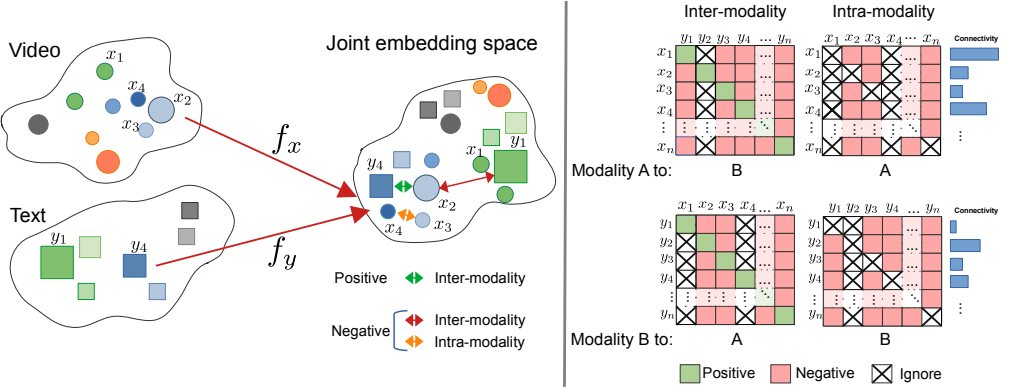

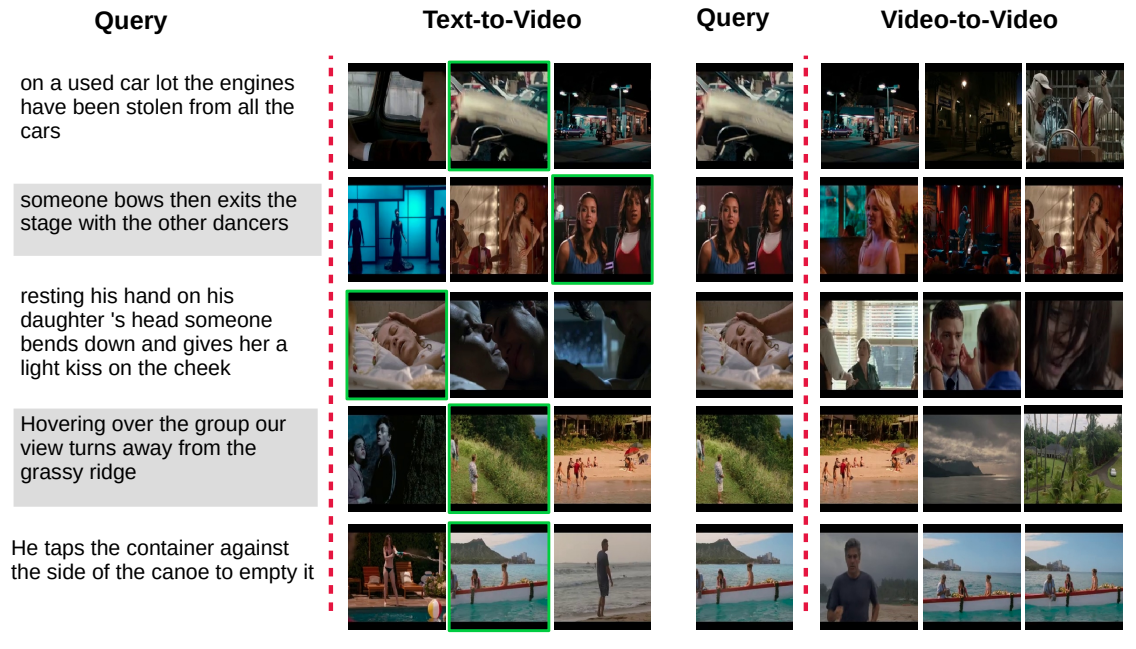

CrossCLR: Cross-modal Contrastive Learning For Multi-modal Video Representations [Paper]

Authors: Mohammadreza Zolfaghari, Yi Zhu, Peter Gehler, Thomas Brox

The CrossCLR loss function in loss.py takes video features and text features as input and returns the loss.
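To make the input and output contract concrete, here is a minimal, dependency-free sketch of a generic symmetric InfoNCE loss over two `[bsz, f_dim]` feature batches. It is an illustrative stand-in, not the code in loss.py: it does not implement the negative-sample weighting or any other CrossCLR-specific term, and the names `symmetric_info_nce`, `l2_normalize`, and `cross_entropy_diagonal` are hypothetical. It only shows how paired video and text features and a temperature turn into a single scalar loss.

```python
import math


def l2_normalize(rows):
    """Scale each feature vector to unit length (zero vectors are left as-is)."""
    out = []
    for row in rows:
        norm = math.sqrt(sum(x * x for x in row)) or 1.0
        out.append([x / norm for x in row])
    return out


def cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def symmetric_info_nce(video_features, text_features, temperature=0.1):
    """Generic symmetric InfoNCE loss (illustrative only, NOT the CrossCLR loss)."""
    if len(video_features) != len(text_features):
        raise ValueError("both modalities must have the same batch size")
    v = l2_normalize(video_features)
    t = l2_normalize(text_features)
    # logits[i][j]: cosine similarity of video i and text j, divided by temperature
    logits = [[sum(a * b for a, b in zip(vi, tj)) / temperature for tj in t]
              for vi in v]
    logits_t = [list(col) for col in zip(*logits)]  # text-to-video direction
    return 0.5 * (cross_entropy_diagonal(logits) + cross_entropy_diagonal(logits_t))
```

With this sketch, a batch whose video and text features are correctly paired yields a lower loss than the same batch with the text rows shuffled, which is the behaviour a cross-modal contrastive objective is meant to reward.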

Usage:

from trainer.loss import CrossCLR_onlyIntraModality

# define loss with a temperature `temp` and weights for negative samples `w`

criterion = CrossCLR_onlyIntraModality(temperature=temp, negative_weight=w)

# features: [bsz, f_dim]

video_features = ...

text_features = ...

# CrossCLR

loss = criterion(video_features, text_features)

...

@article{crossclr_aws_21,

author = {Mohammadreza Zolfaghari and

Yi Zhu and

Peter V. Gehler and

Thomas Brox},

title = {CrossCLR: Cross-modal Contrastive Learning For Multi-modal Video Representations},

url = {https://arxiv.org/abs/2109.14910},

eprinttype = {arXiv},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2021},

}

See CONTRIBUTING for more information.

This project is licensed under the Apache-2.0 License.