This is a PyTorch implementation of the following paper:

FPGAN-Control: A Controllable Fingerprint Generator for Training with Synthetic Data, WACV 2024, [paper] [project page].

Alon Shoshan, Nadav Bhonker, Emanuel Ben Baruch, Ori Nizan, Igor Kviatkovsky, Joshua Engelsma, Manoj Aggarwal and Gerard Medioni.

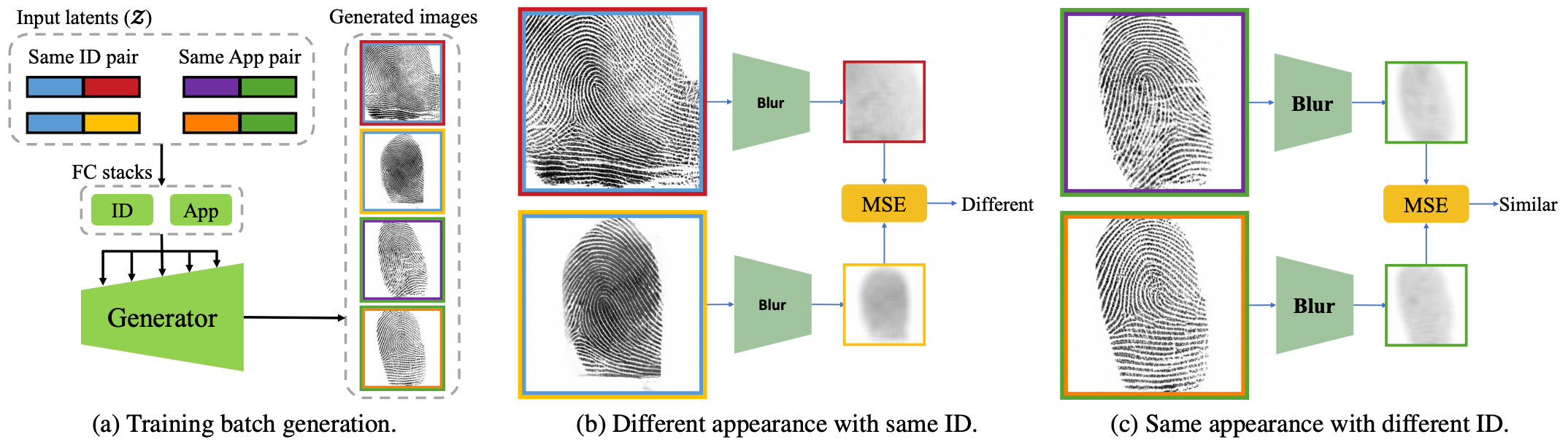

Training fingerprint recognition models using synthetic data has recently gained increased attention in the biometric community as it alleviates the dependency on sensitive personal data. Existing approaches for fingerprint generation are limited in their ability to generate diverse impressions of the same finger, a key property for providing effective data for training recognition models. To address this gap, we present FPGAN-Control, an identity-preserving image generation framework which enables control over the image appearance (e.g., fingerprint type, acquisition device, pressure level) of generated fingerprints. We introduce a novel appearance loss that encourages disentanglement between the fingerprint's identity and appearance properties. In our experiments, we used the publicly available NIST SD302 (N2N) dataset for training the FPGAN-Control model. We demonstrate the merits of FPGAN-Control, both quantitatively and qualitatively, in terms of identity preservation level, degree of appearance control, and low synthetic-to-real domain gap. Finally, training recognition models using only synthetic datasets generated by FPGAN-Control leads to recognition accuracies that are on par with, or even surpass, those of models trained using real data. To the best of our knowledge, this is the first work to demonstrate this.

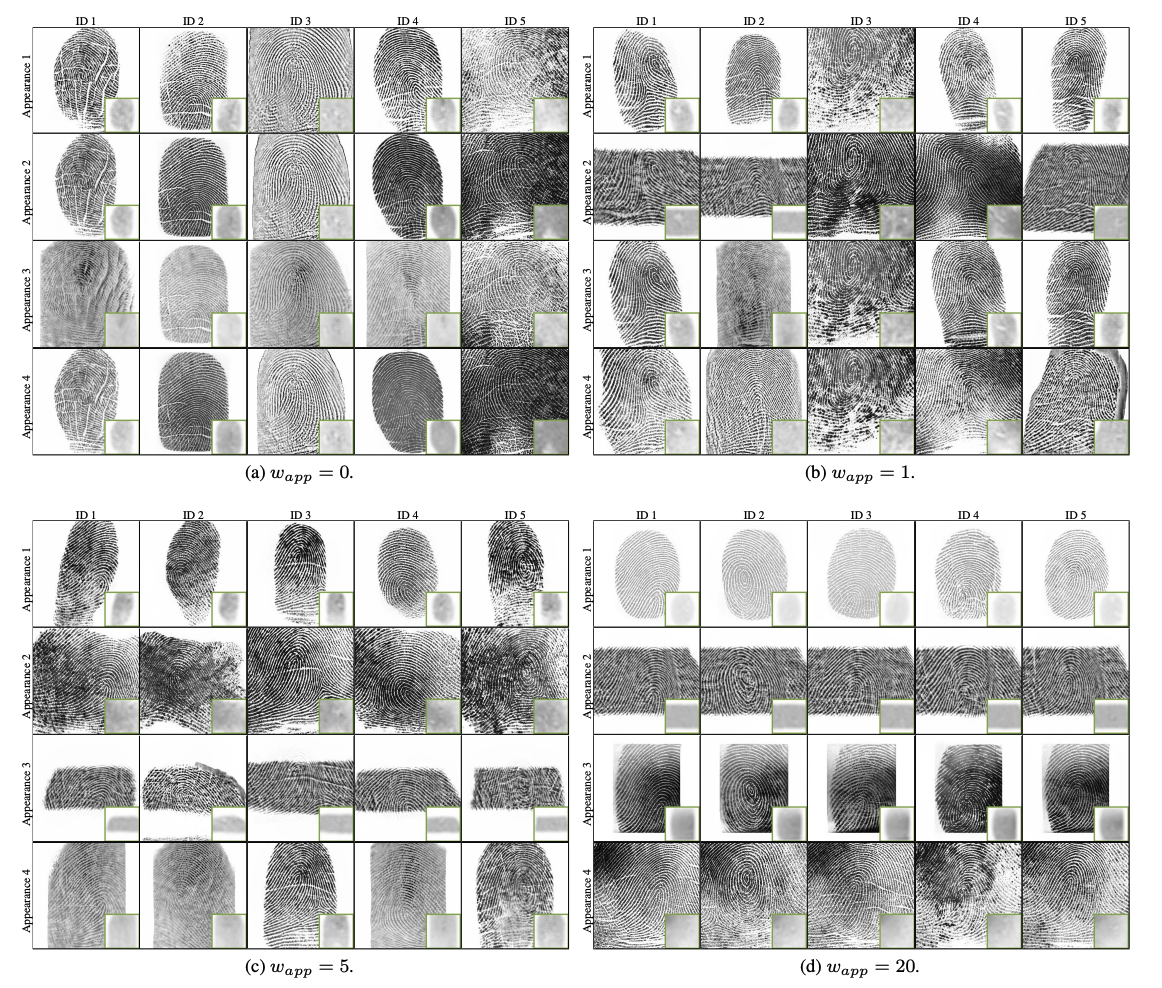

For a specific FPGAN-Control model, each column represents images generated with the same ID latent vector input and each row represents images generated with the same appearance latent vector input. For visualization of the appearance loss, the small images in green borders show the blurred representation of the fingerprint image used by the loss.
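The grid layout described above can be sketched as a pairing of two sets of latent vectors. This is only an illustration of the indexing, not the repository's code: the function names, latent dimensions, and the use of plain Python lists are assumptions, and a real model would pass each (ID, appearance) pair to its generator.

```python
import random


def sample_latent(dim, rng):
    """Draw one latent vector with standard-normal entries."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def build_latent_grid(num_ids, num_appearances, id_dim=4, app_dim=4, seed=0):
    """Pair ID and appearance latents as in the figure layout.

    grid[row][col] is (id_latent, appearance_latent), where every cell in a
    column shares one ID latent and every cell in a row shares one
    appearance latent. Dimensions here are placeholders, not the model's.
    """
    rng = random.Random(seed)
    id_latents = [sample_latent(id_dim, rng) for _ in range(num_ids)]
    app_latents = [sample_latent(app_dim, rng) for _ in range(num_appearances)]
    return [
        [(id_latents[col], app_latents[row]) for col in range(num_ids)]
        for row in range(num_appearances)
    ]


grid = build_latent_grid(num_ids=3, num_appearances=2)
```

Here `grid[0][1]` and `grid[1][1]` share the same ID latent (same column), while `grid[0][0]` and `grid[0][2]` share the same appearance latent (same row).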

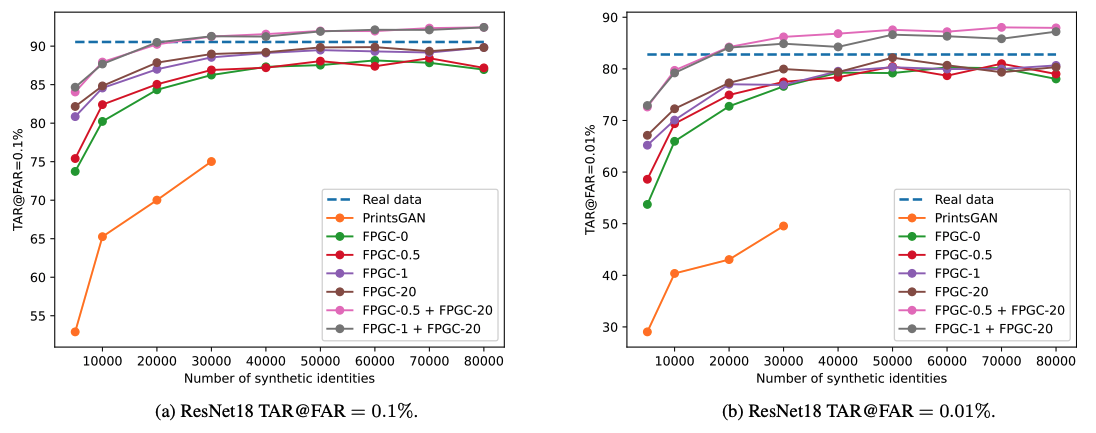

Accuracy vs. number of synthetic identities used during training: Real data corresponds to training the model with the real dataset only, while the rest of the models were trained purely on synthetic identities.

Download the trained GAN and save it in the models directory.

Run:

    python generate_random_ids.py \
        --model_dir models/id06fre20_fingers384_id_noise_same_id_idl005_posel000_large_pose_20230606-082209 \
        --save_path <path for saving the results> \
        --number_of_ids <number of fingers to generate> \
        --number_images_per_id <number of images with different appearance to generate per finger>
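For scripted runs, the same call can be assembled from Python. The sketch below is a minimal wrapper and not part of the repository: the helper name `build_generate_command` is hypothetical, and it assumes only the script name and the four flags listed above.

```python
import subprocess


def build_generate_command(model_dir, save_path, number_of_ids, number_images_per_id):
    """Return the argument list for generate_random_ids.py (hypothetical helper)."""
    if number_of_ids < 1 or number_images_per_id < 1:
        raise ValueError("both counts must be positive integers")
    return [
        "python",
        "generate_random_ids.py",
        "--model_dir", str(model_dir),
        "--save_path", str(save_path),
        "--number_of_ids", str(number_of_ids),
        "--number_images_per_id", str(number_images_per_id),
    ]


def run_generation(**kwargs):
    """Run the script from the repository root; raises if it exits non-zero."""
    subprocess.run(build_generate_command(**kwargs), check=True)


cmd = build_generate_command(
    model_dir="models/id06fre20_fingers384_id_noise_same_id_idl005_posel000_large_pose_20230606-082209",
    save_path="results",  # example output path, chosen for illustration
    number_of_ids=10,
    number_images_per_id=5,
)
```

Passing the arguments as a list avoids shell quoting problems when paths contain spaces.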

Please consider citing our work if you find it useful for your research:

    @InProceedings{Shoshan_2024_WACV,
        author    = {Shoshan, Alon and Bhonker, Nadav and Ben Baruch, Emanuel and Nizan, Ori and Kviatkovsky, Igor and Engelsma, Joshua and Aggarwal, Manoj and Medioni, G\'erard},
        title     = {FPGAN-Control: A Controllable Fingerprint Generator for Training With Synthetic Data},
        booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
        month     = {January},
        year      = {2024},
        pages     = {6067-6076}
    }

This code borrows heavily from Rosinality's StyleGAN 2 in PyTorch.

See CONTRIBUTING for more information.

This library is licensed under the Attribution-NonCommercial 4.0 International License.