This repository explains how a decoder-only transformer (GPT) works, compared with an encoder-decoder Transformer. It is based on material provided by Prof. Massimo Piccardi, NLP lab.

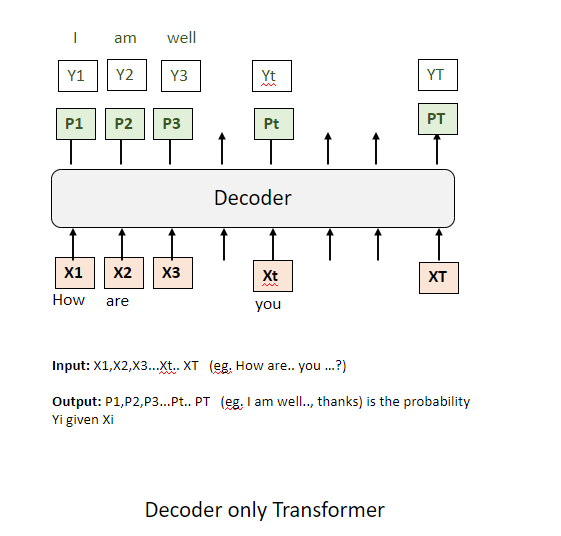

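Conceptually, the decoder acts as a module that receives a sequence of input tokens, which can be denoted as $x_1, x_2, \ldots, x_N$, and outputs one probability vector per position, giving the probability of each possible next token.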

Mathematically, the size of each probability vector equals the size of the vocabulary, $|V|$: each entry is the probability of one vocabulary token, and the entries sum to 1.
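In practice, decoders usually compute one raw score (logit) per vocabulary token and turn these scores into a probability vector with a softmax. The sketch below assumes that setup; the vocabulary, the logits, and the `softmax` helper are illustrative and not taken from any particular model.

```python
import math

def softmax(logits):
    """Turn one raw score per vocabulary token into a probability vector."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy vocabulary and decoder scores (logits) for a single position.
vocab = ["<eos>", "How", "are", "you", "?", "I'm", "well", "."]
logits = [0.1, 2.0, -1.0, 0.5, 0.0, 1.5, -0.5, 0.3]

p = softmax(logits)
print(len(p) == len(vocab))    # one entry per vocabulary token
print(round(sum(p), 6))        # the entries sum to 1
print(vocab[p.index(max(p))])  # most probable next token
```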

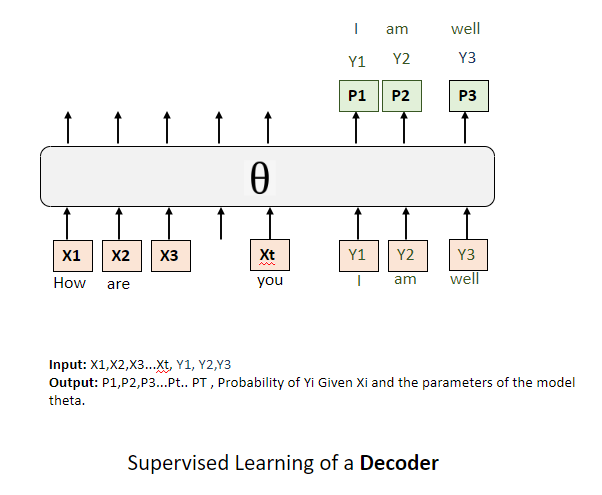

Suppose our objective is to train the Decoder by finding the optimal values of all its internal parameters, which we denote collectively as $\theta$.

During supervised training, we work with a set of reference tokens, known as the ground-truth tokens, which serve as our training targets. We label these tokens $y_1, y_2, \ldots, y_N$, where $y_i$ is the token the decoder should predict at position $i$.

Targeting here means that we want the probability assigned to the target token, in the corresponding probability vector, to be as high as possible. Ideally this probability is 1, but in practice it may be somewhat lower, say 0.9, because the same parameters must fit many sequences and training samples at once. In mathematical terms, if the target token at position $i$ is $y_i$, we want $p(y_i \mid x_1, \ldots, x_i; \theta)$ to be as close to 1 as possible.

What exactly constitutes our target in more formal terms?

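We aim to maximize the probabilities assigned to the target tokens. Since a product of many probabilities quickly becomes a vanishingly small number, it is standard to work with logarithms instead: maximizing the product of the probabilities is equivalent to maximizing the sum of their logarithms.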

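With multiple tokens, our objective becomes maximizing the total log probability of the target tokens given the input tokens, where each prediction can use the input tokens up to its own position:

$$\max_{\theta} \sum_{i=1}^{N} \log p(y_i \mid x_1, \ldots, x_i; \theta)$$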

However, training frameworks conventionally minimize a loss function rather than maximize an objective. To follow this convention, we introduce a negative sign, so that maximizing the log probability becomes minimizing its negative. Negation mirrors the function, turning the search for a maximum into a search for a minimum. The resulting quantity is known as the negative log-likelihood or the cross-entropy; the two terms are often used interchangeably because, when the target distribution puts all of its mass on the correct token, the cross-entropy reduces exactly to the negative log-likelihood.
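As a one-token illustration of this mirroring, using natural logarithms, the target probabilities 1 and 0.9 mentioned earlier, and 0.5 for contrast:

$$-\log 1 = 0, \qquad -\log 0.9 \approx 0.105, \qquad -\log 0.5 \approx 0.693$$

The loss is zero only when the target token receives all of the probability mass, and it grows steadily as that probability shrinks.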

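In essence, training minimizes:

$$\mathcal{L}(\theta) = -\sum_{i=1}^{N} \log p(y_i \mid x_1, \ldots, x_i; \theta)$$

where $y_i$ is the target token at position $i$ and $x_1, \ldots, x_i$ are the input tokens up to that position.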

This is the conventional way of framing and pursuing the optimization objective in supervised learning.

This establishes the training objective: given a sequence of input tokens and the corresponding target tokens, training reduces the negative log-likelihood that the decoder assigns to those target tokens.
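A minimal sketch of this objective in plain Python, assuming the decoder has already produced one probability vector per position and that tokens are represented by vocabulary indices. The toy probabilities and the `negative_log_likelihood` name are hypothetical.

```python
import math

def negative_log_likelihood(prob_vectors, targets):
    """Sum over positions of -log(probability assigned to the target token)."""
    return -sum(math.log(p[y]) for p, y in zip(prob_vectors, targets))

# Toy example: vocabulary of 4 tokens, 3 positions.
prob_vectors = [
    [0.10, 0.70, 0.10, 0.10],  # position 1: target index 1 gets 0.70
    [0.05, 0.05, 0.85, 0.05],  # position 2: target index 2 gets 0.85
    [0.25, 0.25, 0.25, 0.25],  # position 3: uniform, target index 3 gets 0.25
]
targets = [1, 2, 3]

loss = negative_log_likelihood(prob_vectors, targets)
print(f"{loss:.4f}")
```

Most of the loss comes from the third position, where the probability is spread evenly; training pushes the parameters to raise that target's probability.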

When can this arrangement be useful?

This module is particularly useful for tasks such as question answering or generating responses to prompts. In these tasks, the Decoder operates at runtime as follows. We first supply the prompt, that is, the first $k$ input tokens $x_1, \ldots, x_k$.

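Our first goal is to predict the first token of the response from the probability vector produced at the last prompt position, typically by choosing the most probable token. That token is then appended to the input so that the decoder can predict the second response token, and so on.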

This procedure is inherently sequential: the prompt is available in full from the start, but the response tokens must be generated one at a time, because each newly predicted token is an input to the prediction of the next one.

This is how the Decoder works at runtime (inference): it builds the response step by step from the prompt and its own previous predictions.
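The runtime loop described above can be sketched in plain Python. `toy_decoder` and its lookup table are hypothetical stand-ins for a trained model, and picking the most probable token at each step (greedy decoding) is an assumption; the point is only that the prompt is given at once while the response tokens are appended one by one.

```python
# Hypothetical toy stand-in for a trained decoder. A real decoder attends to the
# whole sequence so far; this toy only looks at the last token.
VOCAB = ["How", "are", "you", "?", "I'm", "well", ".", "<eos>"]
NEXT = {"How": "are", "are": "you", "you": "?", "?": "I'm",
        "I'm": "well", "well": ".", ".": "<eos>"}

def toy_decoder(tokens):
    """Return a probability vector over VOCAB for the token after `tokens`."""
    probs = [0.01] * len(VOCAB)
    likely = NEXT.get(tokens[-1], "<eos>")
    probs[VOCAB.index(likely)] = 1.0 - 0.01 * (len(VOCAB) - 1)
    return probs

def greedy_generate(decoder, vocab, prompt, max_new_tokens=20, eos="<eos>"):
    """Generate a response one token at a time, feeding each prediction back in."""
    tokens = list(prompt)                            # the prompt is available in full
    for _ in range(max_new_tokens):
        probs = decoder(tokens)                      # distribution for the next token
        next_token = vocab[probs.index(max(probs))]  # most probable token
        if next_token == eos:
            break
        tokens.append(next_token)                    # prediction becomes the next input
    return tokens[len(prompt):]

print(greedy_generate(toy_decoder, VOCAB, ["How", "are", "you", "?"]))
```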

What are the practical steps for training one of these decoders so that it is ready for inference? How do we actually use the negative log-likelihood in this context?

The process is straightforward. Consider an example where the input sentence is "How are you?" and the corresponding response should be "I'm well." Here, "How are you?" provides the input (prompt) tokens, and "I'm well." provides the target (response) tokens.

During training, the negative log-likelihood rewards the model for predicting these response tokens from the input tokens. Because all tokens are available at this stage, both from the prompt ("How are you?") and from the manually annotated response ("I'm well."), we can feed the entire sequence into the system at once.

Following the conventions of the GPT library, the entire sequence, comprising both the input and the response, is fed into the decoder. The model may also be trained to predict an end-of-sentence token, so that it learns the full length of the sequence, and the loss is told where the prefix ends.

In short, the decoder sees the full sequence during training, while the negative log-likelihood focuses on how accurately it predicts the response tokens.
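For the "How are you?" / "I'm well." example, the next-token alignment used during training can be sketched as below. Word-level tokens and the `<eos>` marker are simplifying assumptions; GPT models actually use subword tokenizers.

```python
# Word-level tokens for illustration only.
prompt = ["How", "are", "you", "?"]
response = ["I'm", "well", ".", "<eos>"]  # "<eos>" is a hypothetical end-of-sentence token

sequence = prompt + response  # the whole sequence is fed to the decoder
inputs = sequence[:-1]        # token seen at each position
targets = sequence[1:]        # token that position must predict

for pos, (inp, tgt) in enumerate(zip(inputs, targets)):
    part = "response" if pos >= len(prompt) - 1 else "prompt"
    print(pos, inp, "->", tgt, f"({part} target)")
```

The last prompt token, "?", is the position that predicts the first response token, "I'm".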

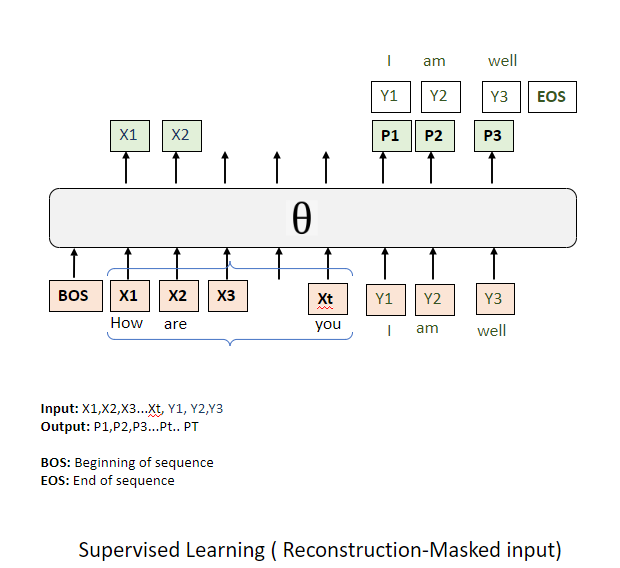

When configuring the negative log-likelihood, the entire sequence, encompassing both the prompt (or prefix) and the response, is supplied to it. However, the loss is instructed to mask a number of tokens determined by the prefix length, so that only the predictions of the response tokens contribute to the loss.

This reconstruction loss function typically takes four parameters:

- The complete sequence integrating both the prompt and the response.

- The total length of this sequence.

- The length of the prefix (prompt), which marks where the prompt ends and the response begins within the negative log-likelihood.

- A 'mask prefix' flag, set to true or false. When true, the tokens within the prefix are masked and excluded from the loss. When false, the model is also trained to predict the prefix tokens, which gives the prompt tokens their own training signal. This may improve the model's internal states and, in turn, its predictions of the subsequent tokens.
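A minimal sketch of such a loss in plain Python, under stated assumptions: `sequence_nll` and its argument names are hypothetical, tokens are vocabulary indices, and the decoder's probability vectors are passed in as an extra argument, since the loss needs the model's outputs in addition to the four parameters listed above.

```python
import math

def sequence_nll(prob_vectors, sequence, seq_len, prefix_len, mask_prefix=True):
    """Hypothetical masked negative log-likelihood for a decoder-only model.

    prob_vectors[i] is the decoder's probability vector at position i; it is
    scored against sequence[i + 1] (next-token alignment). Only the first
    seq_len tokens are used, so any padding after them is ignored.
    """
    loss = 0.0
    for i in range(seq_len - 1):
        target_pos = i + 1
        if mask_prefix and target_pos < prefix_len:
            continue  # the target is a prompt token: masked out of the loss
        loss -= math.log(prob_vectors[i][sequence[target_pos]])
    return loss

# Toy example: prompt = first 2 tokens, response = next 2, then one padding id.
sequence = [0, 1, 2, 3, 0]
prob_vectors = [[0.25] * 4 for _ in sequence]  # uniform toy predictions

print(sequence_nll(prob_vectors, sequence, seq_len=4, prefix_len=2, mask_prefix=True))
print(sequence_nll(prob_vectors, sequence, seq_len=4, prefix_len=2, mask_prefix=False))
```

With `mask_prefix=True` only the two response tokens are scored; with `False` the second prompt token is scored as well. The first prompt token is never a target here, because no earlier position exists to predict it. The explicit `seq_len` lets one function skip padding in a batch of sequences of different lengths.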

In either case, the last token of the prompt is aligned to predict the first token of the response, preserving the next-token structure of the prediction. With 'mask prefix' set to false, both the prefix tokens and the response tokens become training targets. Neither choice is clearly superior in theory; which one works better is an empirical question.

A subtle variation of this framework adds a special beginning-of-sentence token at the start, so that the very first token of the prompt, which otherwise has no preceding position to be predicted from, also becomes a prediction target.
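The effect of a beginning-of-sentence token on the list of targets can be sketched as follows; `<bos>` and the `next_token_pairs` helper are hypothetical names chosen for illustration.

```python
def next_token_pairs(tokens, bos=None):
    """Pair each position's input token with the token it must predict."""
    seq = ([bos] if bos is not None else []) + list(tokens)
    return list(zip(seq[:-1], seq[1:]))

tokens = ["How", "are", "you", "?", "I'm", "well", "."]

targets_without_bos = [tgt for _, tgt in next_token_pairs(tokens)]
targets_with_bos = [tgt for _, tgt in next_token_pairs(tokens, bos="<bos>")]

print(targets_without_bos[0])  # the first prompt token is never a target
print(targets_with_bos[0])     # with a BOS token it becomes the first target
```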

This summarizes how the decoder-only architecture is trained: the full sequence is fed in, and the sequence length, the prefix length, and the masking of tokens determine which predictions contribute to the loss.

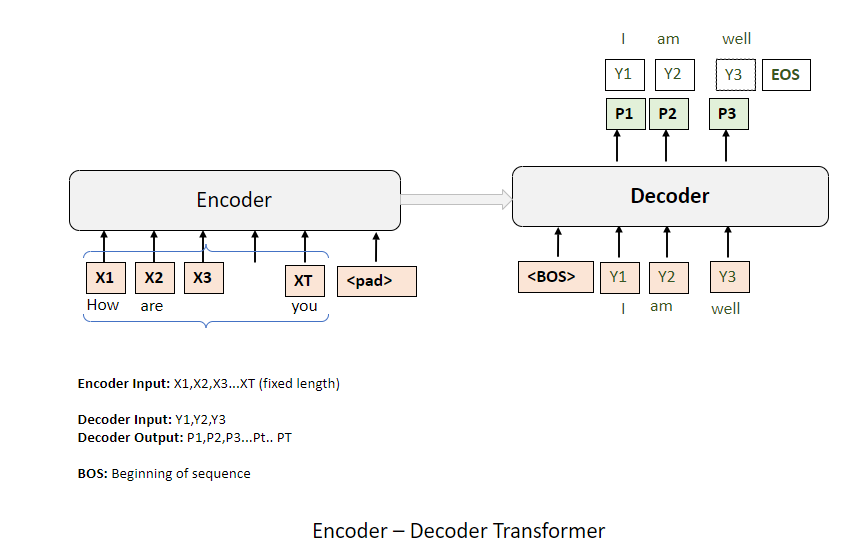

We can briefly compare this with a standard transformer, which has both an encoder and a decoder. The encoder-decoder architecture is not very different. It consists of two main components, the encoder and the decoder, and the separation between the input prompt and the output response is explicit: the encoder processes the input prompt, and the decoder generates the output response.

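In our scenario, the input sequence, denoted as $x_1, \ldots, x_N$, is processed by the encoder, while the output sequence $y_1, \ldots, y_M$ is generated by the decoder.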

The two modules are linked by a large number of connections, collectively called cross-attention. The encoder transforms the input tokens into internal states, and the decoder reads these states through the cross-attention mechanism.
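A single cross-attention step can be sketched as scaled dot-product attention in which a decoder position supplies the query and the encoder's internal states supply keys and values. This is a simplification under stated assumptions: one head, toy 2-dimensional states, and no learned query/key/value projections; `cross_attention` is a hypothetical helper name.

```python
import math

def cross_attention(decoder_query, encoder_states):
    """Single-head scaled dot-product attention of one decoder position over the
    encoder's internal states. Learned query/key/value projections are omitted:
    the encoder states serve directly as keys and values."""
    d = len(decoder_query)
    scores = [sum(q * k for q, k in zip(decoder_query, h)) / math.sqrt(d)
              for h in encoder_states]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]  # softmax over encoder positions
    total = sum(exps)
    weights = [e / total for e in exps]
    context = [sum(w * h[j] for w, h in zip(weights, encoder_states))
               for j in range(d)]             # weighted mix of encoder states
    return weights, context

encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy states for three input tokens
weights, context = cross_attention([1.0, 0.0], encoder_states)
print([round(w, 3) for w in weights], [round(c, 3) for c in context])
```

The decoder position attends most to the encoder states that match its query, and the weighted mix of those states is what it receives from the encoder.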

The decoder receives a beginning-of-sentence token as its first input, which lets it compute the probability distribution of the first output token. During training, the next decoder input is the reference token 'y1'. At inference, it is a token predicted from that distribution, typically the one with the highest probability. This token is then fed back as the input for predicting the next token, 'y2', and the process continues in the same way.

At inference time, one token is predicted at a time, and each predicted token is used as input for the next prediction. Choosing the single most probable token at each step is known as greedy decoding. An alternative is beam decoding, or beam search, which keeps several of the most probable partial sequences at each step instead of only one; the token-by-token principle remains the same.
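The difference between greedy decoding and beam search can be illustrated with a tiny hypothetical model whose next-token probabilities are hard-coded. The `beam_search` helper is a plain sketch (no length normalization or end-of-sentence handling); with a beam width of 1 it reduces to greedy decoding.

```python
import math

def beam_search(next_probs, beam_width, steps):
    """Keep the beam_width highest-scoring partial sequences at each step.
    next_probs(prefix) returns a dict {token: probability} for the next token."""
    beams = [([], 0.0)]  # (tokens, cumulative log probability)
    for _ in range(steps):
        candidates = []
        for tokens, score in beams:
            for tok, p in next_probs(tokens).items():
                candidates.append((tokens + [tok], score + math.log(p)))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return beams[0][0]

# Hypothetical toy model: two decoding steps.
def toy_next_probs(prefix):
    if not prefix:
        return {"A": 0.6, "B": 0.4}
    if prefix[-1] == "A":
        return {"X": 0.5, "Y": 0.5}
    return {"X": 0.9, "Y": 0.1}

print(beam_search(toy_next_probs, beam_width=1, steps=2))  # greedy decoding
print(beam_search(toy_next_probs, beam_width=2, steps=2))
```

Greedy decoding commits to "A" (0.6) and ends with probability 0.6 × 0.5 = 0.30, whereas a beam of width 2 keeps "B" alive and finds "B X" with probability 0.4 × 0.9 = 0.36.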

During training, all input tokens and the tokens of the target sentence are available. The model is trained by providing all prompt tokens to the encoder and all response tokens to the decoder, shifted right by one position behind the beginning-of-sentence token, so that each decoder position predicts the next response token (a scheme known as teacher forcing). The training objective is to maximize the log probability of the target tokens, or equivalently, to minimize the negative log-likelihood.
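For contrast with the decoder-only case, the encoder-decoder training inputs for the same example can be sketched as follows. Word-level tokens and the `<bos>`/`<eos>` markers are illustrative assumptions.

```python
prompt = ["How", "are", "you", "?"]
response = ["I'm", "well", ".", "<eos>"]

encoder_input = prompt                     # the whole prompt goes to the encoder
decoder_input = ["<bos>"] + response[:-1]  # response shifted right by one position
decoder_target = response                  # what each decoder position must predict

for inp, tgt in zip(decoder_input, decoder_target):
    print(f"{inp:>6} -> {tgt}")
```

Unlike the decoder-only case, the prompt never appears among the decoder's inputs or targets; it reaches the decoder only through cross-attention.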

The two architectures, the traditional transformer and the decoder-only transformer, are very similar. The main difference is that the decoder-only architecture simplifies the setup by eliminating padding tokens and does not require cross-attention, because the self-attention within its single module serves the same function. In the decoder-only architecture, each prediction is informed by the hidden states of all preceding tokens in the sequence, prompt and response alike. This simplicity may be one reason why OpenAI adopted it for GPT.
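In a GPT-style decoder, self-attention is causal: each position may attend only to itself and to earlier positions. The sketch below builds such a mask as a boolean matrix (the `causal_mask` helper is hypothetical) and shows that a response token can see every prompt token, which is why no separate cross-attention is needed.

```python
def causal_mask(n):
    """allowed[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

tokens = ["How", "are", "you", "?", "I'm", "well"]
allowed = causal_mask(len(tokens))

i = tokens.index("I'm")
print([t for t, ok in zip(tokens, allowed[i]) if ok])  # prompt tokens plus "I'm" itself
```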

The important aspect is understanding the training objective and how the architecture operates during runtime.