- Introduction

- Architectural Overview

- Information Flow

- Practical Examples

- Fundamental Concepts

- Setup Guide

- Final Remarks

- Further Reading

This repository offers a detailed exploration of Transformer models, a cornerstone of modern Natural Language Processing (NLP). The architecture originated in the seminal paper "Attention Is All You Need", and this guide outlines the historical milestones of the models that followed.

Transformers are generally categorized into:

- Auto-encoding models like BERT

- Auto-regressive models like GPT

- Sequence-to-sequence models like BART/T5
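One way to see the difference between these families is the attention pattern each one allows. The sketch below is illustrative only: `attention_mask` is a hypothetical helper, not a function from any library. It assumes the usual convention that auto-encoding models let every token attend to every other token, while auto-regressive models restrict each token to itself and earlier positions.

```python
def attention_mask(kind, length):
    """Return a 0/1 matrix where mask[i][j] == 1 means token i may attend to token j.

    `kind` is a label made up for this sketch:
      "bidirectional" -> auto-encoding style (BERT-like)
      "causal"        -> auto-regressive style (GPT-like)
    """
    if kind == "bidirectional":
        # Every position sees the whole sequence.
        return [[1] * length for _ in range(length)]
    if kind == "causal":
        # Position i sees positions 0..i only, never the future.
        return [[1 if j <= i else 0 for j in range(length)] for i in range(length)]
    raise ValueError(f"unknown mask kind: {kind}")


if __name__ == "__main__":
    for kind in ("bidirectional", "causal"):
        print(kind)
        for row in attention_mask(kind, 4):
            print(" ", row)
```

Sequence-to-sequence models typically combine both patterns: a bidirectional encoder over the input and a causal decoder over the output, with the decoder also attending to the encoder's representation.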

Transformers are language models trained with self-supervised learning on large text corpora. They have grown steadily larger to achieve better performance, at an increasing computational and environmental cost. They are generally adapted to specialized tasks through transfer learning.

Transformers have set benchmarks across a multitude of tasks:

📝 Text: Text classification, information retrieval, summarization, translation, and text generation.

🖼️ Images: Image classification, object detection, and segmentation.

🗣️ Audio: Speech recognition and audio classification.

They are also proficient in multi-modal tasks, including question answering from tables, document information extraction, video classification, and visual question answering.

Key strengths:

- Parallelism: Tokens are processed in parallel, which speeds up training.

- Attention: Focuses on relevant portions of the input.

- Scalability: Performs well on diverse data sizes and complexities.

- Versatility: Extends beyond NLP into other domains.

- Pretrained Models: Availability of fine-tunable, pretrained models.

Key limitations:

- Resource Intensive: High computational and memory requirements.

- Black Box Nature: Low interpretability.

- Overfitting Risk: Especially on smaller datasets.

- Not Always Optimal: Simpler models may suffice for certain tasks.

- Hyperparameter Sensitivity: Requires careful tuning.

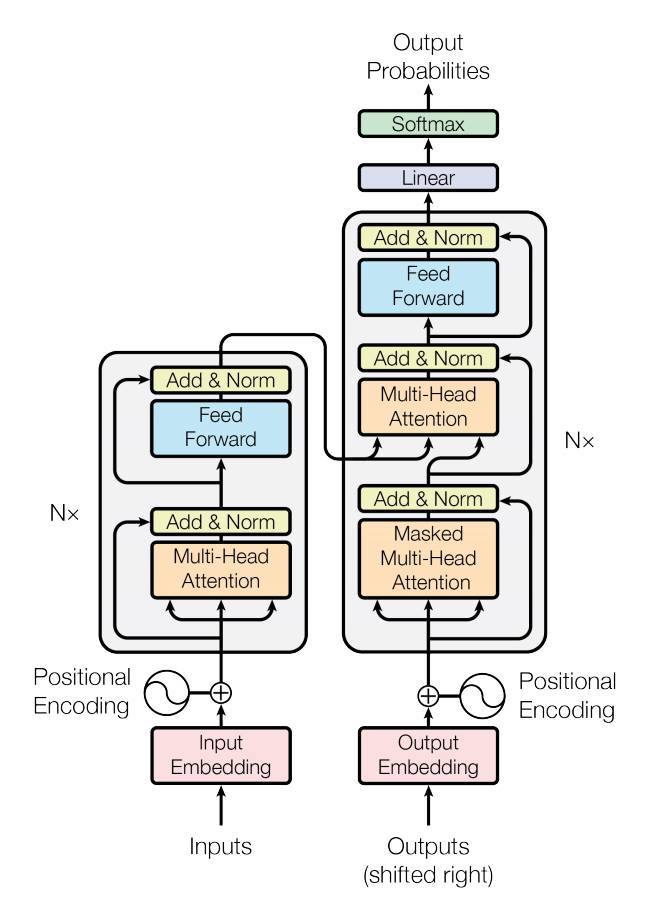

The Transformer model is built on an Encoder-Decoder structure, with Multi-Head Attention as its core mechanism. Pretrained models can be loaded from the Hugging Face Hub.
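To make the attention mechanism concrete, here is a minimal single-head sketch of scaled dot-product attention in plain Python. It is a teaching aid under simplifying assumptions, not the implementation used by any library: the function names are chosen for this example, there are no learned projection matrices, and there is no masking. Multi-Head Attention runs several such heads in parallel on differently projected inputs and concatenates the results.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of vectors.

    queries: list of query vectors, each of length d_k
    keys:    list of key vectors, each of length d_k
    values:  list of value vectors, one per key
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        dim = len(values[0])
        outputs.append([sum(w * v[c] for w, v in zip(weights, values)) for c in range(dim)])
    return outputs


if __name__ == "__main__":
    q = [[1.0, 0.0]]
    k = [[1.0, 0.0], [0.0, 1.0]]
    v = [[10.0, 0.0], [0.0, 10.0]]
    # The query matches the first key more closely, so the first value dominates.
    print(scaled_dot_product_attention(q, k, v))
```

When all keys are identical, the weights are uniform and the output is simply the mean of the value vectors, which is a handy sanity check.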

The Transformer processes information through the following steps:

- Tokenization and Input Embedding: Tokenizes the input sentence and converts each token into its corresponding embedding.

- Positional Encoding: Adds positional information to the embeddings.

- Encoder: Transforms the input sequence into a hidden representation.

- Decoder: Takes the hidden representation to produce an output sequence.

- Task-Specific Layer: Applies a task-specific transformation to the decoder output.

- Loss Computation and Backpropagation: Computes the loss and updates the model parameters.

For a comprehensive understanding, refer to the Information Flow section.
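The first two steps of this flow can be sketched in a few lines of plain Python. Everything here is a simplifying assumption made for illustration: whitespace tokenization stands in for the subword tokenizers real models use, the embedding table is random rather than learned, and the positional encoding is the sinusoidal scheme from the original paper. The names `tokenize`, `positional_encoding`, and `embed` are hypothetical helpers.

```python
import math
import random


def tokenize(sentence):
    """Toy tokenizer: lowercase and split on whitespace (an assumption for this sketch)."""
    return sentence.lower().split()


def positional_encoding(position, d_model):
    """Sinusoidal encoding: sine on even dimensions, cosine on odd dimensions."""
    encoding = []
    for i in range(d_model):
        # Dimensions 2k and 2k+1 share the same frequency.
        angle = position / (10000 ** ((2 * (i // 2)) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding


def embed(tokens, vocab, table, d_model):
    """Look up each token's embedding and add its positional encoding."""
    rows = []
    for position, token in enumerate(tokens):
        embedding = table[vocab[token]]
        pe = positional_encoding(position, d_model)
        rows.append([e + p for e, p in zip(embedding, pe)])
    return rows


if __name__ == "__main__":
    d_model = 8
    tokens = tokenize("Transformers process tokens in parallel")
    vocab = {tok: idx for idx, tok in enumerate(sorted(set(tokens)))}
    rng = random.Random(0)
    # Random stand-in for a learned embedding matrix.
    table = [[rng.uniform(-1, 1) for _ in range(d_model)] for _ in vocab]
    encoder_input = embed(tokens, vocab, table, d_model)
    print(len(encoder_input), "tokens x", len(encoder_input[0]), "dimensions")
```

The resulting list of vectors is what the encoder would receive as input. Because position is added to the embedding itself, the same token at two different positions yields two different vectors.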

Install with pip:

    pip install transformers

Or with conda:

    conda install -c huggingface transformers

This repository serves as a comprehensive resource for understanding Transformer models across various applications and domains.

Additional deep dives: