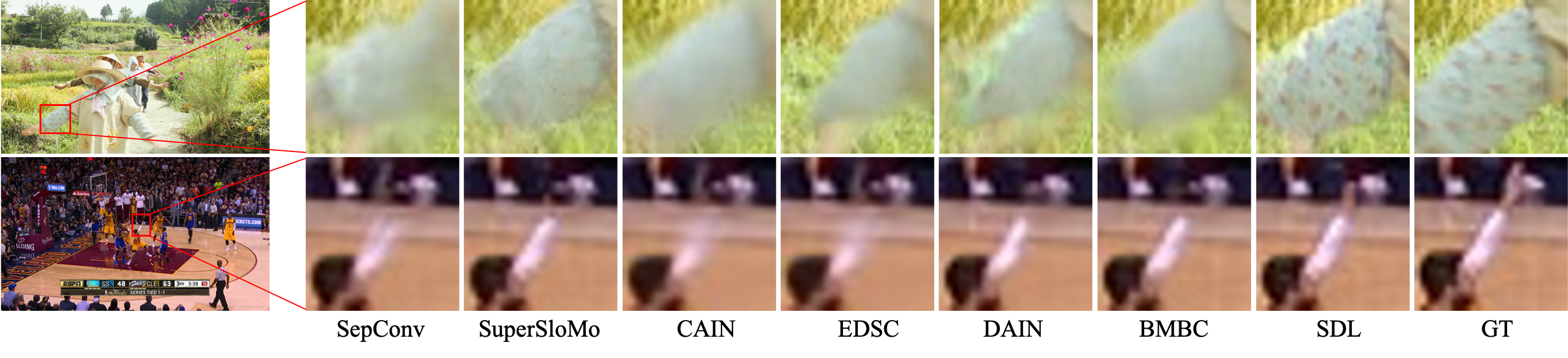

Beyond a Video Frame Interpolator: A Space Decoupled Learning Approach to Continuous Image Transition

Paper | Supplementary Material

Tao Yang<sup>1</sup>, Peiran Ren<sup>1</sup>, Xuansong Xie<sup>1</sup>, Xiansheng Hua<sup>1</sup>, Lei Zhang<sup>2</sup>

<sup>1</sup>DAMO Academy, Alibaba Group, Hangzhou, China

<sup>2</sup>Department of Computing, The Hong Kong Polytechnic University, Hong Kong, China

(2022-4-15) Added source code and pre-trained models. Other pre-trained models will be released soon.

- Clone this repository.

git clone https://github.com/yangxy/SDL.git

cd SDL

- Install dependencies. (Python 3 + NVIDIA GPU + CUDA; using Anaconda is recommended.)

pip install -r requirements.txt

- Download our pre-trained models and put them into weights/. Note: SDL_vfi_psnr is obtained by fine-tuning SDL_vfi_perceptual using only the Charbonnier loss. It performs better in terms of PSNR but fails to recover fine details.

SDL_vfi_perceptual | SDL_vfi_psnr | SDL_dog2dog_scale | SDL_cat2cat_scale | SDL_aging_scale | SDL_toonification_scale | SDL_style_transfer_arbitrary | SDL_super_resolution
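Before running the test commands, it can help to confirm which of the listed models are actually present in weights/. The following is a minimal sketch, not part of the repository: `missing_weights` is a hypothetical helper, and the `.pth` suffix is assumed for every model based on the file names used in the test commands. Models that have not been released yet will simply show up as missing.

```python
from pathlib import Path

# Model names exactly as listed in this README.
# Assumption: every weight file is named "<model name>.pth", matching the
# names passed to --model in the test commands.
MODEL_NAMES = [
    "SDL_vfi_perceptual",
    "SDL_vfi_psnr",
    "SDL_dog2dog_scale",
    "SDL_cat2cat_scale",
    "SDL_aging_scale",
    "SDL_toonification_scale",
    "SDL_style_transfer_arbitrary",
    "SDL_super_resolution",
]


def missing_weights(weights_dir="weights"):
    """Return the expected weight files that are not found in weights_dir."""
    root = Path(weights_dir)
    return [
        f"{name}.pth"
        for name in MODEL_NAMES
        if not (root / f"{name}.pth").is_file()
    ]


if __name__ == "__main__":
    missing = missing_weights()
    if missing:
        print("Missing weights:", ", ".join(missing))
    else:
        print("All listed weights found.")
```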

- Test our models.

# Testing

python sdl_test.py --task vfi --model SDL_vfi_perceptual.pth --num 1 --source examples/VFI/car-turn/00000.jpg --target examples/VFI/car-turn/00001.jpg --outdir results/VFI

python sdl_test.py --task vfi-dir --model SDL_vfi_perceptual.pth --num 1 --indir examples/VFI/car-turn --outdir results/VFI

python sdl_test.py --task morphing --model SDL_dog2dog_scale.pth --num 7 --size 512 --source examples/Morphing/dog/source/flickr_dog_000045.jpg --target examples/Morphing/dog/target/pixabay_dog_000017.jpg --outdir results/Morphing/dog

python sdl_test.py --task morphing --model SDL_cat2cat_scale.pth --num 7 --size 512 --source examples/Morphing/cat/source/flickr_cat_000008.jpg --target examples/Morphing/cat/target/pixabay_cat_000010.jpg --outdir results/Morphing/cat

python sdl_test.py --task i2i --model SDL_aging_scale.pth --in_ch 3 --num 7 --size 512 --extend_t --source examples/I2I/ffhq-10/00002.png --outdir results/I2I/aging

python sdl_test.py --task i2i --model SDL_toonification_scale.pth --in_ch 3 --num 7 --size 512 --source examples/I2I/ffhq-10/00002.png --outdir results/I2I/toonification

python sdl_test.py --task style_transfer --model SDL_style_transfer_arbitrary.pth --num 7 --source examples/Style_transfer/content/sailboat.jpg --target examples/Style_transfer/style/sketch.png --outdir results/Style_transfer

- Train SDL with 4 GPUs.

# Supervised training

CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.launch --nproc_per_node=4 --master_port=4321 train.py -opt options/train_SDL_VFI.yml --auto_resume --launcher pytorch #--debug

CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.launch --nproc_per_node=4 --master_port=4321 train.py -opt options/train_SDLGAN_I2I.yml --auto_resume --launcher pytorch #--debug

CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.launch --nproc_per_node=4 --master_port=4321 train.py -opt options/train_SDLGAN_Morphing.yml --auto_resume --launcher pytorch #--debug

# Unsupervised training

CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.launch --nproc_per_node=4 --master_port=4321 train.py -opt options/train_SDL_StyleTransfer.yml --auto_resume --launcher pytorch #--debug

- Prepare the training dataset by following this instruction.

Please check out run.sh for more details.
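The training commands in this README are written for 4 GPUs. To run on a different number of GPUs, the device list in `CUDA_VISIBLE_DEVICES` and the `--nproc_per_node` value should stay in sync. The sketch below builds the same command line for an arbitrary GPU list. `launch_command` is a hypothetical helper, not part of the repository, and it assumes one process per visible GPU, as in the commands shown here. Whether the settings in the options file need adjusting for a different GPU count is not covered.

```python
import shlex


def launch_command(opt_file, gpu_ids, master_port=4321):
    """Build the distributed training command for the given GPU ids.

    Assumption: one training process is launched per visible GPU, so
    --nproc_per_node is set to the number of ids in gpu_ids.
    """
    if not gpu_ids:
        raise ValueError("at least one GPU id is required")
    devices = ",".join(str(g) for g in gpu_ids)
    args = [
        "python", "-m", "torch.distributed.launch",
        f"--nproc_per_node={len(gpu_ids)}",
        f"--master_port={master_port}",
        "train.py", "-opt", opt_file,
        "--auto_resume", "--launcher", "pytorch",
    ]
    # Quote each argument so that paths with spaces stay intact in a shell.
    return f"CUDA_VISIBLE_DEVICES={devices} " + " ".join(shlex.quote(a) for a in args)


if __name__ == "__main__":
    # Reproduces the 4-GPU VFI command from this README.
    print(launch_command("options/train_SDL_VFI.yml", [0, 1, 2, 3]))
    # Same training run restricted to two GPUs.
    print(launch_command("options/train_SDL_VFI.yml", [0, 1]))
```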

If our work is useful for your research, please consider citing:

@inproceedings{Yang2022SDL,
    title={Beyond a Video Frame Interpolator: A Space Decoupled Learning Approach to Continuous Image Transition},
    author={Tao Yang and Peiran Ren and Xuansong Xie and Xiansheng Hua and Lei Zhang},
    journal={arXiv:2203.09771},
    year={2022}
}

© Alibaba, 2022. For academic and non-commercial use only.

This project is built on the excellent BasicSR-examples project.

If you have any questions or suggestions about this paper, feel free to reach me at yangtao9009@gmail.com.