This GitHub repository hosts the data and baseline models for the SemEval-2022 shared task 10 on structured sentiment, along with other useful information about the shared task.

6.10.2021: Updated MPQA and Darmstadt dev data on CodaLab. Check that you are working with the newest version of the data before you submit.

- Problem description

- Subtasks

- Evaluation

- Data format

- Resources

- Submission via Codalab

- Baselines

- Important dates

- Frequently Asked Questions

- Task organizers

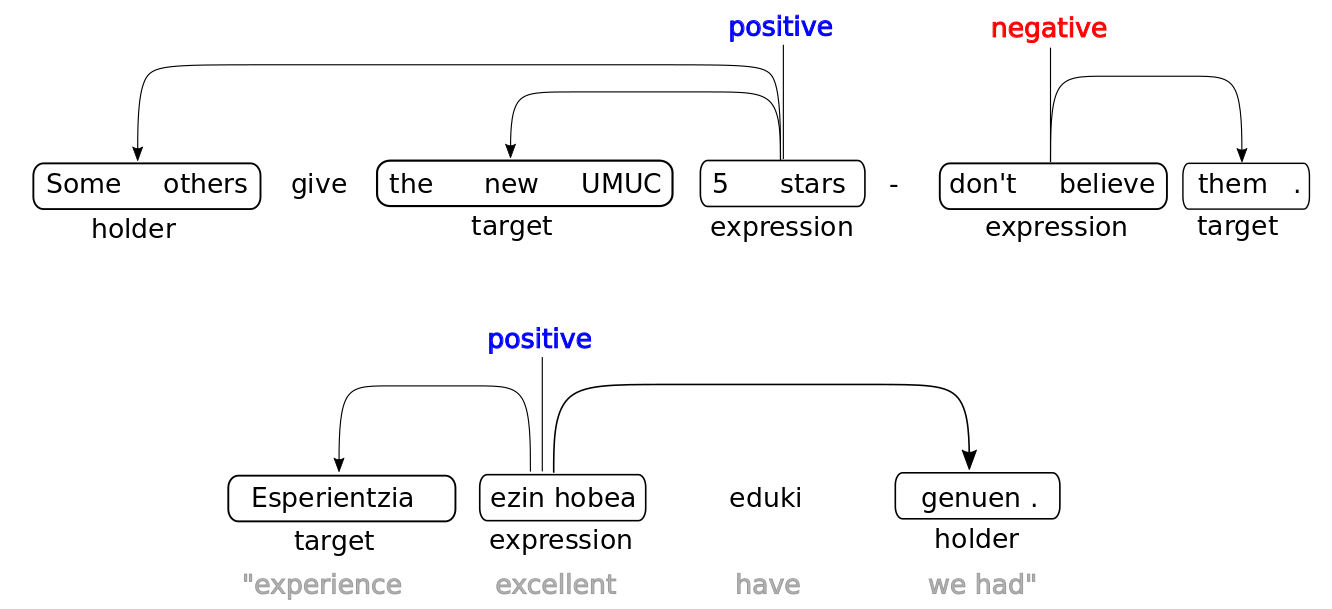

The task is to predict all structured sentiment graphs in a text (see the examples below). We can formalize this as finding all the opinion tuples $O = O_1, \ldots, O_n$ in a text. Each opinion $O_i$ is a tuple $(h, t, e, p)$, where $h$ is a holder who expresses a polarity $p$ towards a target $t$ through a sentiment expression $e$, implicitly defining the relationships between the elements of a sentiment graph.
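As a minimal sketch, the tuple $(h, t, e, p)$ can be modelled as a small Python dataclass. The class name `OpinionTuple` and its field names are illustrative assumptions, not part of the official data format; an empty tuple stands for a missing holder or target.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class OpinionTuple:
    """Hypothetical container for one opinion O_i = (h, t, e, p)."""
    holder: Tuple[str, ...]      # h: holder span text(s); empty if no explicit holder
    target: Tuple[str, ...]      # t: target span text(s); empty if no explicit target
    expression: Tuple[str, ...]  # e: sentiment (polar) expression span text(s)
    polarity: str                # p: "Positive", "Negative" or "Neutral"


# The two opinions in "Even though the price is decent for Paris , I would not recommend this hotel ."
opinions = [
    OpinionTuple(("I",), ("this hotel",), ("would not recommend",), "Negative"),
    OpinionTuple((), ("the price",), ("decent",), "Positive"),  # no explicit holder
]
```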

The two examples below (first in English, then in Basque) give a visual representation of these sentiment graphs.

Participants can then either approach this as a sequence-labelling task, or as a graph prediction task.

The monolingual track assumes that you train and test on the same language. Participants will need to submit results for all seven datasets in five languages.

The datasets can be found in the data directory.

| Dataset | Language | # sentences | # holders | # targets | # expressions |
|---|---|---|---|---|---|
| NoReC_fine | Norwegian | 11437 | 1128 | 8923 | 11115 |
| MultiBooked_eu | Basque | 1521 | 296 | 1775 | 2328 |
| MultiBooked_ca | Catalan | 1678 | 235 | 2336 | 2756 |
| OpeNER_es | Spanish | 2057 | 255 | 3980 | 4388 |
| OpeNER_en | English | 2494 | 413 | 3850 | 4150 |
| MPQA | English | 10048 | 2279 | 2452 | 2814 |
| Darmstadt_unis | English | 2803 | 86 | 1119 | 1119 |
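As a rough way to compute counts like those in the table from a loaded dataset (a list of sentence dictionaries in the format described under "Data format"), the hypothetical `dataset_statistics` function below counts sentences and, per opinion, whether a holder, target and expression is present. The organizers' exact counting conventions are not stated here (for example, how spans shared by several opinions are counted), so its numbers may not match the table exactly.

```python
from typing import Dict, List

# Hypothetical statistic names mapped to the opinion keys used in the data files.
FIELDS = {"holders": "Source", "targets": "Target", "expressions": "Polar_expression"}


def dataset_statistics(sentences: List[dict]) -> Dict[str, int]:
    """Count sentences and opinions with a non-empty holder, target and expression."""
    stats = {"sentences": len(sentences), "holders": 0, "targets": 0, "expressions": 0}
    for sentence in sentences:
        for opinion in sentence["opinions"]:
            for stat, key in FIELDS.items():
                span_texts = opinion[key][0]  # [[texts...], [offsets...]] -> texts
                if span_texts:  # an empty list means the element is absent
                    stats[stat] += 1
    return stats


# Usage, assuming `norec_train` was loaded as shown in the "Data format" section:
# print(dataset_statistics(norec_train))
```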

The cross-lingual track explores how well models can generalize across languages. The test data will be the MultiBooked datasets (Catalan and Basque) and the OpeNER Spanish dataset. For training, you can use any of the other datasets, as well as any other resource that does not contain sentiment annotations in the target language.

This setup is often known as zero-shot cross-lingual transfer, and we will assume that all submissions follow it.
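The training restriction comes down to a language check. As a sketch, assuming that only the seven datasets in the table are considered (other resources are not modelled here), the hypothetical `allowed_training_sets` function lists the datasets that contain no sentiment annotations in a test set's language.

```python
from typing import List

# Dataset-to-language mapping taken from the dataset table.
DATASET_LANGUAGES = {
    "NoReC_fine": "Norwegian",
    "MultiBooked_eu": "Basque",
    "MultiBooked_ca": "Catalan",
    "OpeNER_es": "Spanish",
    "OpeNER_en": "English",
    "MPQA": "English",
    "Darmstadt_unis": "English",
}

# The three cross-lingual test sets named in the task description.
CROSS_LINGUAL_TEST_SETS = ("MultiBooked_ca", "MultiBooked_eu", "OpeNER_es")


def allowed_training_sets(test_set: str) -> List[str]:
    """Return the datasets whose language differs from the test set's language."""
    target_language = DATASET_LANGUAGES[test_set]
    return [name for name, language in DATASET_LANGUAGES.items() if language != target_language]


for test_set in CROSS_LINGUAL_TEST_SETS:
    print(test_set, "->", allowed_training_sets(test_set))
```

Note that, under this rule, Basque data may be used when the target is Catalan and vice versa, since neither contains annotations in the other language.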

The two subtasks will be evaluated separately. In both subtasks, the evaluation will be based on Sentiment Graph F1.

This metric defines a true positive as an exact match at the graph level, weighting the overlap between predicted and gold spans for each element and averaging across all three spans (holder, target, and expression).

For precision, we weight by the number of correctly predicted tokens divided by the total number of predicted tokens (for recall, we divide by the number of gold tokens instead). Empty holders and targets, which occur in the gold standard, are allowed.
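The weighting can be illustrated with a simplified sketch. This is not the official scorer: it assumes that a predicted tuple only matches a gold tuple when the polarities agree and every element pair either overlaps or is empty on both sides, and it represents spans as hypothetical sets of token indices for a single sentence. Each match is weighted by the average per-element token overlap, normalised by the predicted spans for precision and by the gold spans for recall; a full scorer would aggregate over all sentences of a dataset.

```python
from typing import FrozenSet, List, Tuple

# Hypothetical representation: (holder, target, expression, polarity),
# where each span is a set of token indices and may be empty.
Opinion = Tuple[FrozenSet[int], FrozenSet[int], FrozenSet[int], str]


def weighted_match(x: Opinion, y: Opinion) -> float:
    """Score how well opinion x is covered by opinion y.

    Returns 0.0 unless the polarities agree and each element pair either
    overlaps or is empty on both sides. Otherwise returns the average over
    the three elements of |x_span & y_span| / |x_span| (1.0 if both are empty).
    """
    if x[3] != y[3]:
        return 0.0
    weights = []
    for x_span, y_span in zip(x[:3], y[:3]):
        if not x_span and not y_span:
            weights.append(1.0)  # empty holder/target on both sides
        elif x_span & y_span:
            weights.append(len(x_span & y_span) / len(x_span))
        else:
            return 0.0
    return sum(weights) / len(weights)


def sentiment_graph_f1(gold: List[Opinion], pred: List[Opinion]) -> float:
    """Simplified Sentiment Graph F1 for one list of gold and predicted tuples."""
    # Precision: overlaps normalised by the predicted spans.
    precision = (
        sum(max((weighted_match(p, g) for g in gold), default=0.0) for p in pred) / len(pred)
        if pred else 0.0
    )
    # Recall: overlaps normalised by the gold spans.
    recall = (
        sum(max((weighted_match(g, p) for p in pred), default=0.0) for g in gold) / len(gold)
        if gold else 0.0
    )
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Whitespace token indices in the example sentence used in the "Data format" section:
# 9 = "I", 13-14 = "this hotel", 10-12 = "would not recommend".
gold = [(frozenset({9}), frozenset({13, 14}), frozenset({10, 11, 12}), "Negative")]
pred = [(frozenset({9}), frozenset({14}), frozenset({10, 11, 12}), "Negative")]
print(round(sentiment_graph_f1(gold, pred), 4))  # 0.9091
```

Here the prediction misses "this" in the target, so precision stays at 1.0 while recall drops to (1 + 0.5 + 1) / 3, giving F1 = 10/11.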

There will be a leaderboard for each dataset, as well as one for the average over all seven datasets. The winning submission will be the one with the highest average Sentiment Graph F1.

We provide the data in json lines format.

Each line is an annotated sentence, represented as a dictionary with the following keys and values:

- 'sent_id': unique sentence identifier
- 'text': raw text version of the previously tokenized sentence
- 'opinions': list of all opinions (dictionaries) in the sentence

Additionally, each opinion in a sentence is a dictionary with the following keys and values:

- 'Source': a list of two lists, the text(s) and character offsets of the opinion holder
- 'Target': a list of two lists, the text(s) and character offsets of the opinion target
- 'Polar_expression': a list of two lists, the text(s) and character offsets of the sentiment expression
- 'Polarity': sentiment label ('Negative', 'Positive', 'Neutral')
- 'Intensity': sentiment intensity ('Average', 'Strong', 'Weak')

```json
{
    "sent_id": "../opener/en/kaf/hotel/english00164_c6d60bf75b0de8d72b7e1c575e04e314-6",
    "text": "Even though the price is decent for Paris , I would not recommend this hotel .",
    "opinions": [
        {
            "Source": [["I"], ["44:45"]],
            "Target": [["this hotel"], ["66:76"]],
            "Polar_expression": [["would not recommend"], ["46:65"]],
            "Polarity": "Negative",
            "Intensity": "Average"
        },
        {
            "Source": [[], []],
            "Target": [["the price"], ["12:21"]],
            "Polar_expression": [["decent"], ["25:31"]],
            "Polarity": "Positive",
            "Intensity": "Average"
        }
    ]
}
```
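The offsets are strings of the form "start:end". In the example, "44:45" covers the single character "I", which suggests the end offset is exclusive, as in Python slicing; the sketch below assumes this. The helper names `parse_offsets` and `check_opinion` are hypothetical and simply verify that each span's text matches the sentence at its offsets.

```python
from typing import List, Tuple


def parse_offsets(offsets: List[str]) -> List[Tuple[int, int]]:
    """Turn offset strings such as "44:45" into (start, end) integer pairs."""
    spans = []
    for offset in offsets:
        start, end = offset.split(":")
        spans.append((int(start), int(end)))
    return spans


def check_opinion(text: str, opinion: dict) -> bool:
    """Return True if every span text equals text[start:end] (end assumed exclusive)."""
    for key in ("Source", "Target", "Polar_expression"):
        span_texts, offsets = opinion[key]  # parallel lists; both empty if absent
        for span_text, (start, end) in zip(span_texts, parse_offsets(offsets)):
            if text[start:end] != span_text:
                return False
    return True


text = "Even though the price is decent for Paris , I would not recommend this hotel ."
opinion = {
    "Source": [["I"], ["44:45"]],
    "Target": [["this hotel"], ["66:76"]],
    "Polar_expression": [["would not recommend"], ["46:65"]],
    "Polarity": "Negative",
    "Intensity": "Average",
}
print(parse_offsets(opinion["Target"][1]))  # [(66, 76)]
print(check_opinion(text, opinion))         # True
```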

You can import the data by using the json library in python:

```python
>>> import json
>>> with open("data/norec/train.json", encoding="utf-8") as infile:
...     norec_train = json.load(infile)
...
```
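The data is described as JSON Lines, but the snippet reads each file with a single `json.load` call, which only succeeds when the whole file is one JSON array. If you are unsure which layout your copy uses, a small loader such as this hypothetical `load_sentences` handles both.

```python
import json
from typing import List


def load_sentences(path: str) -> List[dict]:
    """Load sentence dictionaries stored either as one JSON array or as JSON Lines."""
    with open(path, encoding="utf-8") as infile:
        content = infile.read()
    if content.lstrip().startswith("["):
        return json.loads(content)  # the whole file is a JSON list of sentences
    # Otherwise, treat each non-empty line as one sentence dictionary.
    return [json.loads(line) for line in content.splitlines() if line.strip()]


# Usage:
# norec_train = load_sentences("data/norec/train.json")
```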

The task organizers provide training data, but participants are free to use other resources (word embeddings, pretrained models, sentiment lexicons, translation lexicons, translation datasets, etc.). We do ask that participants document and cite their resources well.

We also provide some links to what we believe could be helpful resources:

- pretrained word embeddings

- pretrained language models

- translation data

- sentiment resources

- syntactic parsers

Submissions will be handled through our CodaLab competition website.

The task organizers provide two baselines: one that takes a sequence-labelling approach and a second that converts the problem to a dependency graph parsing task. You can find both of them in the baselines directory.
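The exact encoding used by the sequence-labelling baseline is not described here. As a rough illustration of the sequence-labelling view, the hypothetical `bio_tags` function assumes whitespace tokenization (the 'text' field is a previously tokenized sentence) and converts each element's character offsets into one BIO tag sequence per element type. It drops polarity and cannot tell which holder, target and expression belong to the same opinion, which illustrates one limitation that a graph formulation avoids.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical element names mapped to the keys used in the data files.
ELEMENT_KEYS = {"holder": "Source", "target": "Target", "expression": "Polar_expression"}


def whitespace_tokens(text: str) -> List[Tuple[int, int]]:
    """Return (start, end) character offsets of whitespace-separated tokens."""
    spans: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, ch in enumerate(text + " "):  # trailing space closes the last token
        if ch.isspace():
            if start is not None:
                spans.append((start, i))
                start = None
        elif start is None:
            start = i
    return spans


def bio_tags(text: str, opinions: List[dict]) -> Dict[str, List[str]]:
    """Build one BIO tag sequence per element type from character offsets."""
    tokens = whitespace_tokens(text)
    tags = {name: ["O"] * len(tokens) for name in ELEMENT_KEYS}
    for opinion in opinions:
        for name, key in ELEMENT_KEYS.items():
            for offset in opinion[key][1]:  # e.g. "66:76"
                start, end = (int(x) for x in offset.split(":"))
                # Tokens lying completely inside the span.
                inside = [i for i, (ts, te) in enumerate(tokens) if ts >= start and te <= end]
                for position, i in enumerate(inside):
                    tags[name][i] = ("B-" if position == 0 else "I-") + name
    return tags


text = "Even though the price is decent for Paris , I would not recommend this hotel ."
opinions = [
    {"Source": [["I"], ["44:45"]], "Target": [["this hotel"], ["66:76"]],
     "Polar_expression": [["would not recommend"], ["46:65"]]},
    {"Source": [[], []], "Target": [["the price"], ["12:21"]],
     "Polar_expression": [["decent"], ["25:31"]]},
]
tags = bio_tags(text, opinions)
print(tags["target"])
```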

- Training data ready: September 3, 2021

- Evaluation data ready: December 3, 2021

- Evaluation start: January 10, 2022

- Evaluation end: by January 31, 2022

- Paper submissions due: roughly February 23, 2022

- Notification to authors: March 31, 2022

Q: How do I participate?

A: Sign up on our CodaLab website, download the data, train the baselines, and submit the results to the CodaLab website.

Q: Can I run the graph parsing baseline on GPU?

A: The code is not set up to run on GPU right now, but if you want to implement it and issue a pull request, we'd be happy to incorporate that into the repository.

- Corresponding organizers
  - Jeremy Barnes: contact for info on task, participation, etc. (jeremycb@ifi.uio.no)
  - Andrey Kutuzov (andreku@ifi.uio.no)
- Organizers
  - Jan Buchmann
  - Laura Ana Maria Oberländer
  - Enrica Troiano
  - Rodrigo Agerri
  - Lilja Øvrelid
  - Erik Velldal
  - Stephan Oepen