This project demonstrates how to build an intelligent system to classify emails as Spam or Not Spam using Machine Learning and Natural Language Processing (NLP). It serves as a step-by-step guide for students to understand and implement such a system.

Through this project, you’ll gain hands-on experience in:

- Preprocessing textual data using NLP techniques.

- Extracting meaningful numerical features from text with TF-IDF Vectorization.

- Training a Machine Learning model (Naive Bayes) for classification tasks.

- Building an interactive web-based application using Streamlit.

The system classifies an email message into two categories:

- Spam: Unwanted or promotional emails.

- Not Spam: Important, relevant emails.

This process involves:

- Text Preprocessing: Cleaning and preparing text for analysis.

- Feature Extraction: Converting text into numerical data using TF-IDF.

- Model Training: Using a Naive Bayes classifier to detect patterns.

- Interactive Interface: Analyzing new emails via a web app.

-

What’s the dataset?

- The SMS Spam Collection Dataset (labeled SMS messages).

-

How to get it?

- Download from Kaggle.

-

File location:

- Save the file as

spam.csvin thedata/directory.

- Save the file as

Before training the model, we clean the text:

- Lowercasing: Converts characters to lowercase.

- Removing Special Characters: Strips symbols, numbers, and extra spaces.

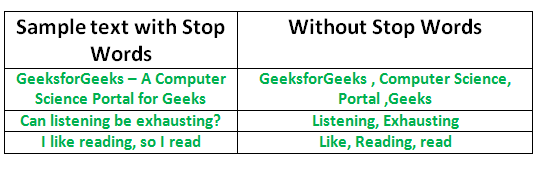

- Stopword Removal: Removes common words like “is”, “the”, “and” using NLTK.

- Lemmatization: Reduces words to their base form (e.g., “running” → “run”).

Why preprocessing?

- It reduces noise.

- Ensures focus on meaningful words.

-

What is TF-IDF?

- A technique transforming text into numerical values based on:

- TF: Term Frequency — how often a word appears.

- IDF: Inverse Document Frequency — how unique a word is.

- A technique transforming text into numerical values based on:

-

Why use it?

- It prioritizes relevant words over common ones.

-

Example:

- In the phrase "Win a free iPhone now!", words like "Win" and "free" get higher weights than "a" or "now".

-

Which model?

- Naive Bayes Classifier — fast, simple, and effective for text classification.

-

Why Naive Bayes?

- Works well with text.

- Calculates probabilities for each class.

-

What is Streamlit?

- A Python library for creating interactive web apps.

-

What does it do?

- Lets users input an email and see if it’s Spam or Not Spam, with a confidence score.

spam_detector/

├── data/

│ └── spam.csv # Dataset

├── model/

│ ├── spam_model.joblib # Trained model

│ └── vectorizer.joblib # TF-IDF vectorizer

├── src/

│ ├── train_model.py # Script to train the model

│ └── predict.py # Script to make predictions

├── GUI/

│ └── main.py # Streamlit app

├── requirements.txt # Required libraries

└── README.md # Project documentation

git clone https://github.com/your_username/spam-detector-nlp.git

cd spam-detector-nlppython -m venv .venv- Windows:

.venv\Scripts\activate

- macOS/Linux:

source .venv/bin/activate

pip install -r requirements.txtRun the training script:

python src/train_model.pyThis generates:

spam_model.joblib(trained model).vectorizer.joblib(TF-IDF vectorizer).

Start the web app:

streamlit run GUI/main.py- Open the link (e.g., http://localhost:8501).

- Input an email and click Analyze Email.

-

Spam Email:

Congratulations! You’ve won $1,000,000! Click here to claim now!- Prediction: Spam

- Confidence Score: 95%

-

Not Spam Email:

Hi John, can we reschedule our meeting to tomorrow at 2 PM?- Prediction: Not Spam

- Confidence Score: 99%

- Python: The programming language.

- Libraries:

- Streamlit: For the web interface.

- Scikit-learn: For the ML model.

- NLTK: For text preprocessing.

- Joblib: For saving/loading models.

- Preprocessing: Clean and standardize the input text.

- Feature Extraction: Convert text into numerical data.

- Training: Train the Naive Bayes model.

- Prediction: Classify new emails.

- Support for Additional Languages.

- Advanced Models: Experiment with deep learning models.

- Batch Classification: Process multiple emails simultaneously.

- End-to-end understanding of text classification.

- Experience with data preprocessing and feature extraction.

- Deployment skills with Streamlit.

Made with ❤️ by Amr Alkhouli

This project is licensed under the MIT License.