This repo is a hands-on lab for scalable machine learning with Spark MLlib on Google Cloud. It is powered by Dataproc Serverless Spark and showcases integration with Vertex AI, Google Cloud's AI/ML platform. The focus is on demystifying the products and their integration (not on building a perfect model), and the lab features a minimum viable, end-to-end machine learning use case.

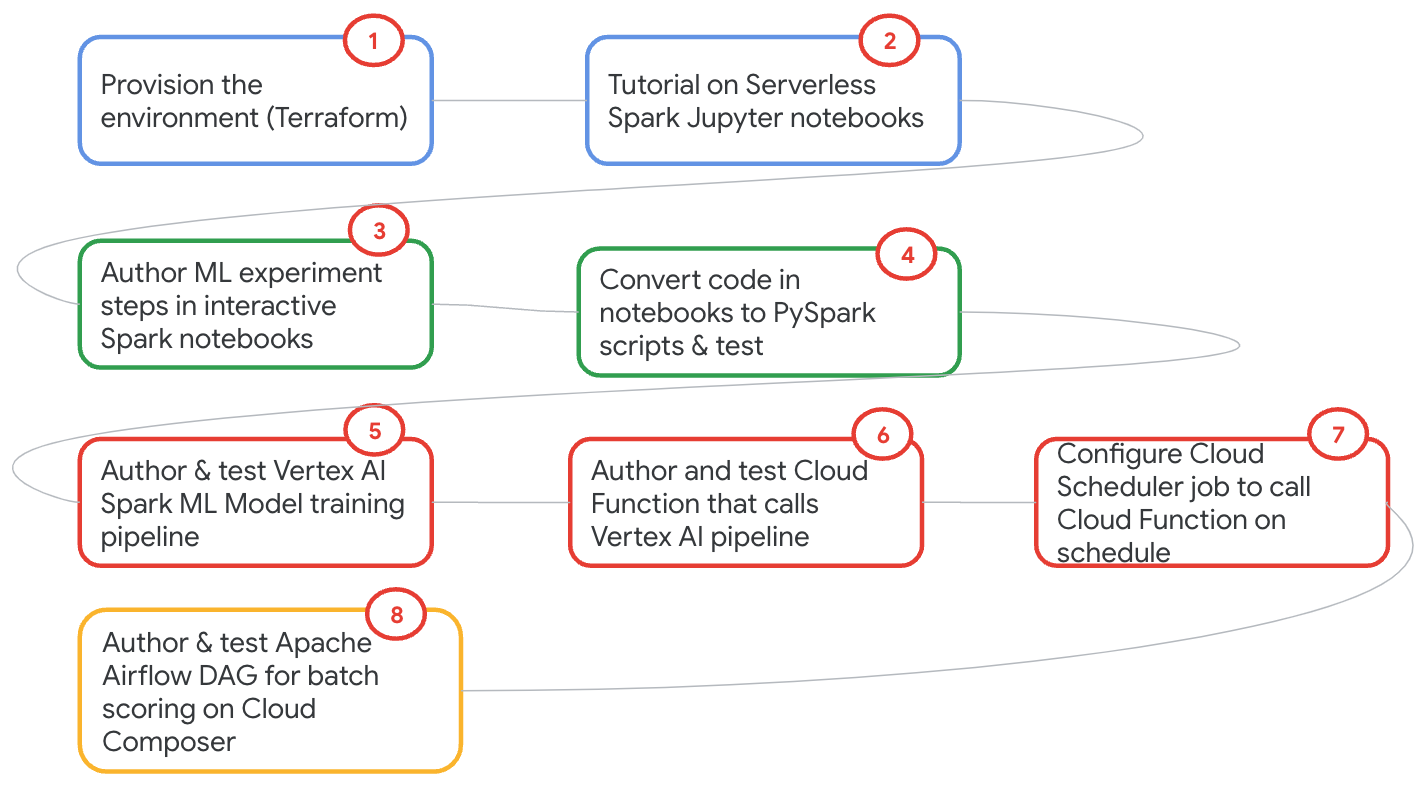

The lab is fully scripted (no research needed) and includes fully automated environment setup along with the data, code, commands, notebooks, orchestration, and configuration. Clone the repo and follow the step-by-step instructions for an end-to-end MLOps experience.

Expect to spend about 8 hours to fully understand and execute the lab if you are new to GCP and these services, and at least 6 hours otherwise.

Level: L300, covering the framework (Spark), services/products, and their integration

The intended audience is anyone with access to Google Cloud and an interest in the use case, products, and features showcased.

Knowledge of Apache Spark, machine learning, and GCP products is helpful but not strictly required, given the format of the lab. Access to Google Cloud is a must unless you only want to read the content.

Simplify learning and adopting our product stack for scalable data science with:

- Just enough product knowledge of Dataproc Serverless Spark & Vertex AI integration for machine learning at scale on Google Cloud

- Quick-start code for ML at scale with Spark that can be repurposed for your data and ML experiments

- Terraform for provisioning a variety of Google Cloud data services in the Spark ML context that can be repurposed for your use case

Telco Customer Churn Prediction with a Kaggle dataset and a Spark MLlib Random Forest Classifier

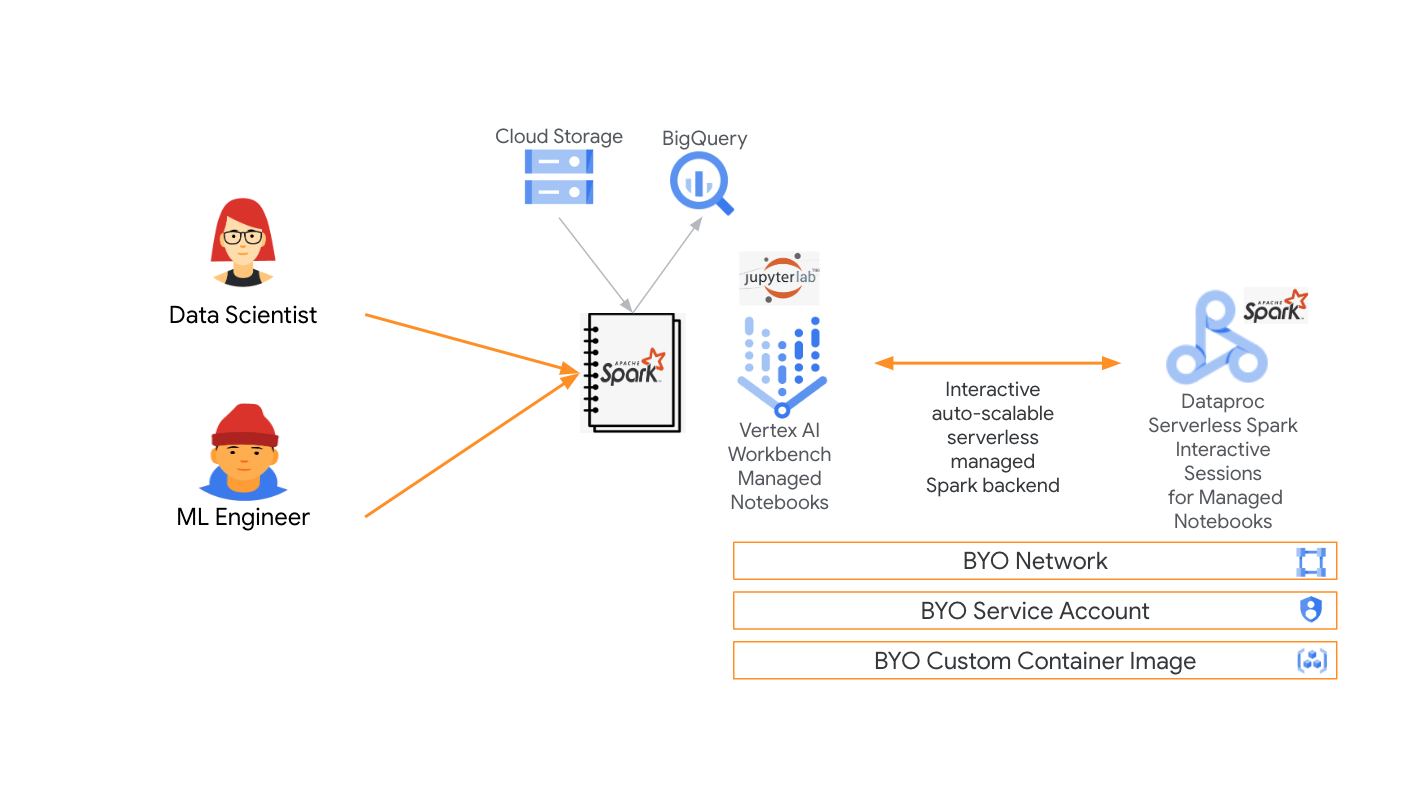

About Dataproc Serverless Spark Interactive: Fully managed, autoscalable, secure Spark infrastructure as a service for use with Jupyter notebooks on Vertex AI Workbench managed notebooks. Use it as an interactive Spark IDE to accelerate development and speed to production.

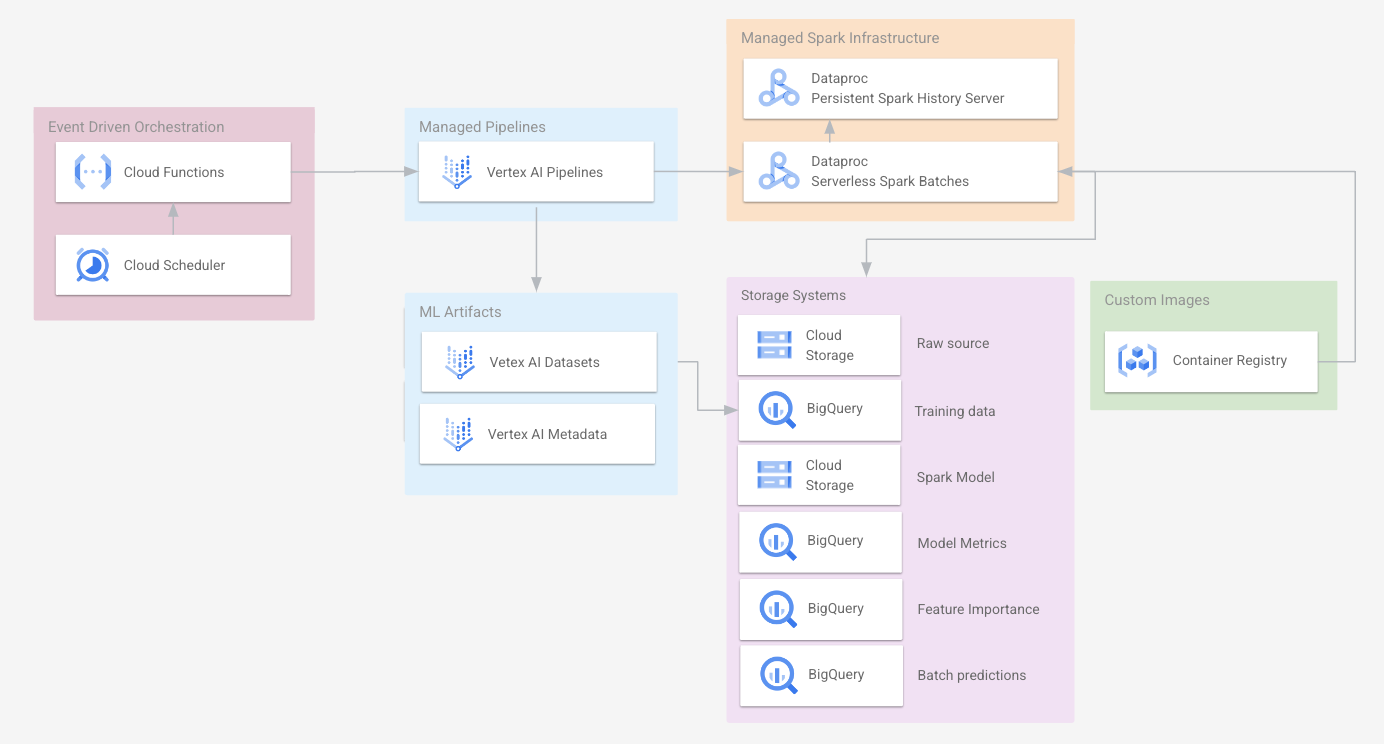

About Dataproc Serverless Spark Batches:

Fully managed, autoscalable, secure Spark jobs as a service that eliminates administration overhead and resource contention, simplifies development and accelerates speed to production. Learn more about the service here.

- Find templates that accelerate speed to production here

- Want Google Cloud to train you on Serverless Spark for free? Reach out to us here

- Try out our other Serverless Spark-centric hands-on labs here
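To make the batch model concrete, here is a minimal sketch, assuming the `gcloud` CLI, of how a PySpark script could be submitted as a Dataproc Serverless batch. The helper `build_batch_submit_command` is hypothetical, and every bucket, subnet, service account, image, and property value is a placeholder rather than this lab's configuration. The optional `--container-image` flag is where a custom container image, like the one this lab builds, would be referenced.

```python
import shlex
from typing import Dict, List, Optional, Sequence


def build_batch_submit_command(
    script_uri: str,
    region: str,
    batch_id: str,
    subnet: str,
    service_account: str,
    container_image: Optional[str] = None,
    properties: Optional[Dict[str, str]] = None,
    script_args: Sequence[str] = (),
) -> List[str]:
    """Hypothetical helper: build argv for `gcloud dataproc batches submit pyspark`."""
    cmd = [
        "gcloud", "dataproc", "batches", "submit", "pyspark", script_uri,
        f"--region={region}",
        f"--batch={batch_id}",
        f"--subnet={subnet}",
        f"--service-account={service_account}",
    ]
    if container_image:
        # Run the batch on a custom container image instead of the default one.
        cmd.append(f"--container-image={container_image}")
    if properties:
        # Spark properties as comma-separated key=value pairs (assumes no commas in values).
        pairs = ",".join(f"{k}={v}" for k, v in sorted(properties.items()))
        cmd.append(f"--properties={pairs}")
    if script_args:
        # Everything after "--" is passed to the PySpark script itself.
        cmd.append("--")
        cmd.extend(script_args)
    return cmd


if __name__ == "__main__":
    # All values below are placeholders.
    cmd = build_batch_submit_command(
        script_uri="gs://my-bucket/scripts/train.py",
        region="us-central1",
        batch_id="churn-train-001",
        subnet="my-subnet",
        service_account="lab-sa@my-project.iam.gserviceaccount.com",
        container_image="gcr.io/my-project/spark-ml:1.0",
        properties={"spark.executor.instances": "2"},
        script_args=["--bucket=my-bucket"],
    )
    print(shlex.join(cmd))
```

Passing the returned list to `subprocess.run(cmd, check=True)` would execute the submission; building an argument list rather than a single shell string avoids quoting problems with paths and property values.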

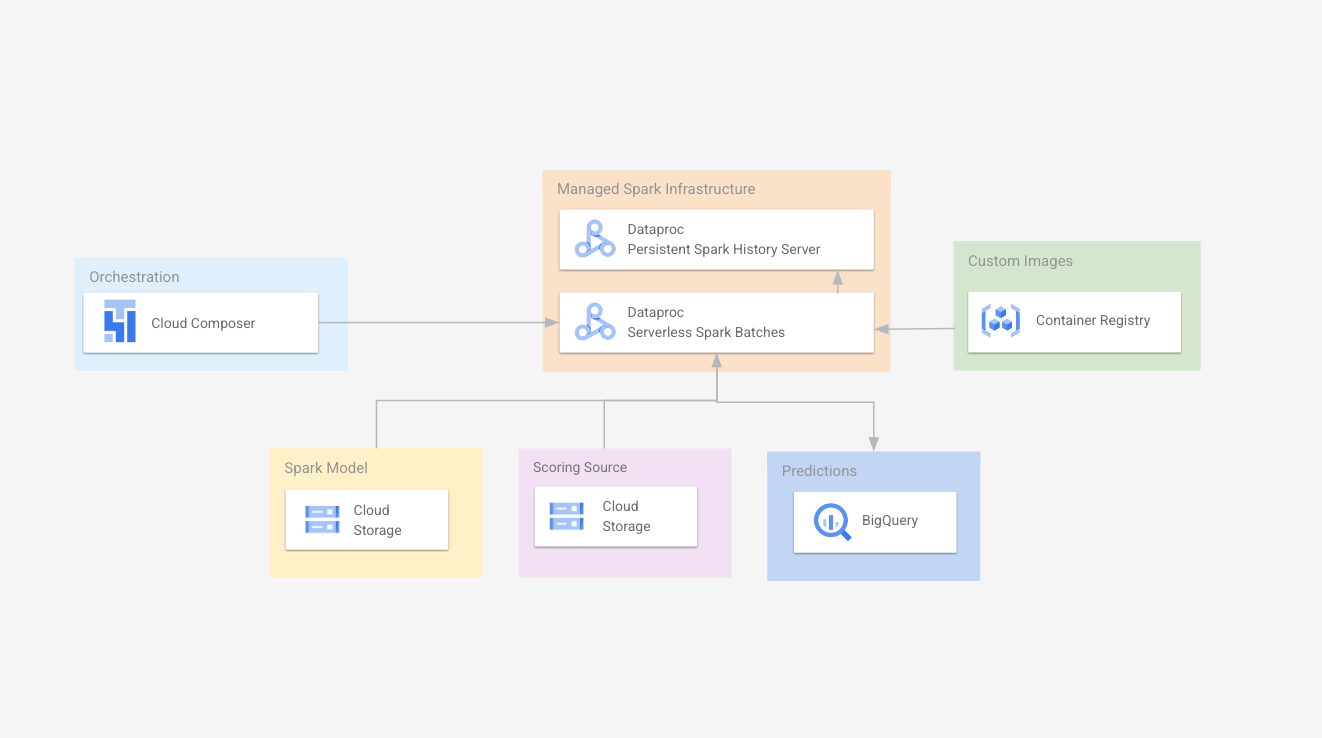

There are multiple options for serving Spark ML models.

Vertex AI supports operationalizing batch serving of Spark ML models in conjunction with MLeap.

ARCHITECTURE DIAGRAM TO BE ADDED

CODE MODULE - Work in progress

For your convenience, all the code is pre-authored, so you can focus on understanding product features and integration.

Complete the lab modules in sequence. For a better lab experience, read through all the modules before you start working on them.

The lab includes custom container image creation and usage.

Shut down or delete resources when you are done to avoid unnecessary billing.

| # | Google Cloud Collaborators | Contribution |
|---|---|---|
| 1. | Anagha Khanolkar | Creator |
| 2. | Dr. Thomas Abraham, Brian Kang | ML consultation, testing, best practices and feedback |
| 3. | Rob Vogelbacher, Proshanta Saha | ML consultation |
| 4. | Ivan Nardini, Win Woo | ML consultation, inspiration through samples and blogs |

The creator evolved the source code from a base that a partner developed for Google Cloud.

Community contributions to improve the lab are very much appreciated.

If you have any questions or find any problems with this repository, please report them through GitHub issues.

| Date | Details |
|---|---|
| 20220930 | Added serialization of the model to an MLeap bundle. Affects: (1) Terraform main.tf, (2) hyperparameter tuning notebook, (3) hyperparameter tuning PySpark script, (4) VAI pipeline notebook, (5) VAI JSON template |
| 20221202 | Added a Python SDK (notebook) sample for preprocessing |