StyLitGAN: Image-based Relighting via Latent Control

Anand Bhattad, James Soole, D.A. Forsyth

Project Page | Paper | arXiv

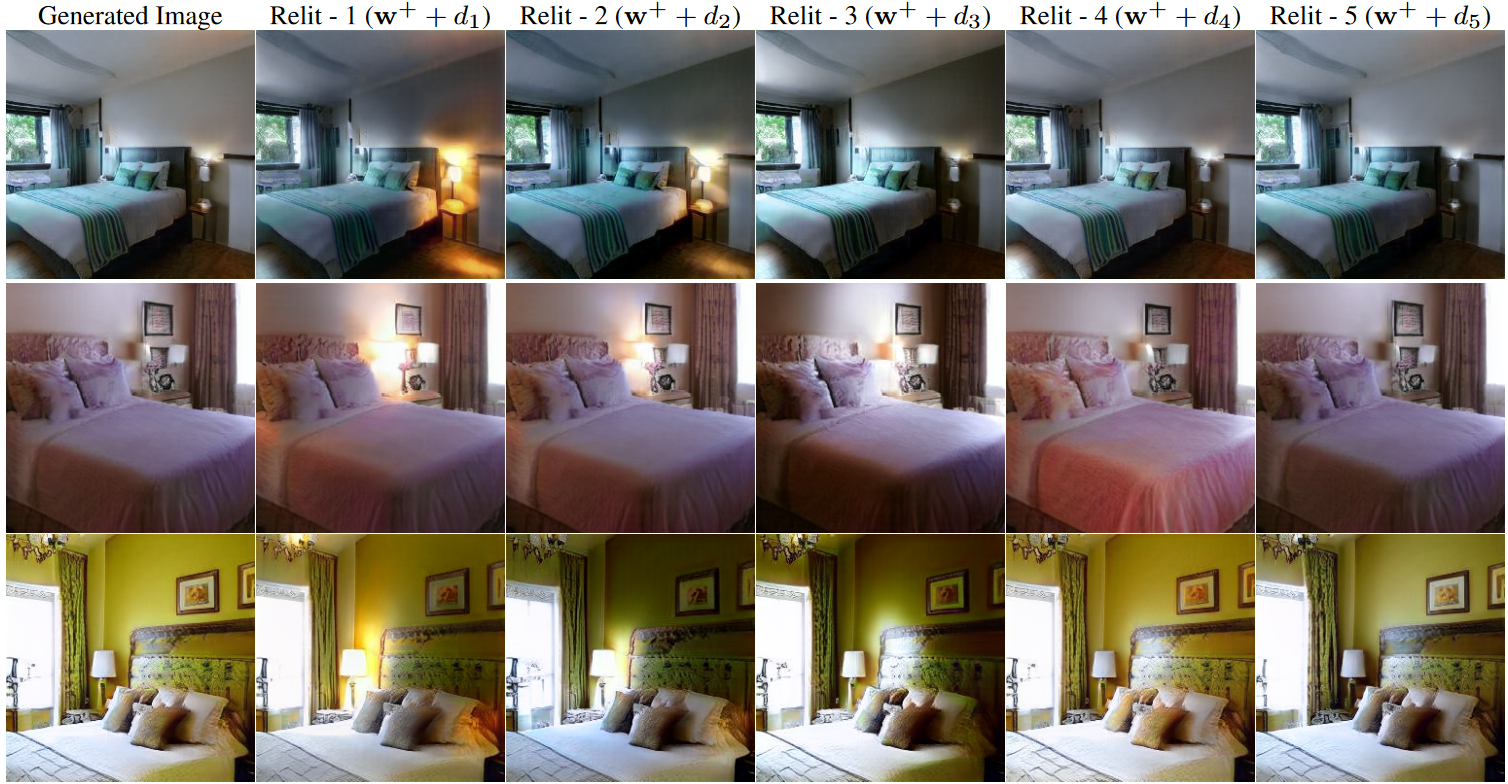

Abstract: We describe a novel method, StyLitGAN, for relighting and resurfacing images in the absence of labeled data. StyLitGAN generates images with realistic lighting effects, including cast shadows, soft shadows, inter-reflections, and glossy effects, without the need for paired or CGI data. StyLitGAN uses an intrinsic image method to decompose an image, followed by a search of the latent space of a pretrained StyleGAN to identify a set of directions. By prompting the model to fix one component (e.g., albedo) and vary another (e.g., shading), we generate relighted images by adding the identified directions to the latent style codes. Quantitative metrics of change in albedo and lighting diversity allow us to choose effective directions using a forward selection process. Qualitative evaluation confirms the effectiveness of our method.
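The forward selection step mentioned in the abstract can be illustrated with a generic greedy loop. This is a minimal sketch, not the authors' implementation: `forward_select`, `toy_score`, and the candidate data are hypothetical, and the score (mean pairwise distance between shading-effect vectors, minus mean albedo change) is only a stand-in for the paper's actual metrics of lighting diversity and albedo change.

```python
import itertools
import numpy as np

def forward_select(candidates, score_fn, k):
    """Greedily pick up to k candidate indices.

    At each step, add the candidate that gives the highest score for the
    selected set as a whole. Ties go to the lowest index.
    """
    selected = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        best_idx, best_score = None, -np.inf
        for idx in remaining:
            trial = [candidates[i] for i in selected + [idx]]
            score = score_fn(trial)
            if score > best_score:
                best_idx, best_score = idx, score
        selected.append(best_idx)
        remaining.remove(best_idx)
    return selected

def toy_score(chosen):
    """Hypothetical score: reward diverse shading effects, penalize albedo change.

    Each candidate is a pair (shading_effect_vector, albedo_change).
    """
    effects = [effect for effect, _ in chosen]
    pairs = list(itertools.combinations(effects, 2))
    diversity = np.mean([np.linalg.norm(a - b) for a, b in pairs]) if pairs else 0.0
    albedo_change = np.mean([change for _, change in chosen])
    return float(diversity - albedo_change)

# Made-up candidates: (effect on shading, measured albedo change).
CANDIDATES = [
    (np.array([1.0, 0.0]), 0.1),
    (np.array([1.0, 0.0]), 0.1),    # duplicate of the first: adds no diversity
    (np.array([-1.0, 0.0]), 0.1),   # opposite lighting change, albedo preserved
    (np.array([0.0, 5.0]), 10.0),   # very different, but alters albedo heavily
]

chosen = forward_select(CANDIDATES, toy_score, k=2)
print("selected candidate indices:", chosen)
```

In this toy setup the greedy loop skips both the duplicate and the direction that changes albedo heavily, which is the behavior the two metrics are meant to encourage.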

- Python libraries: `pip install click requests ninja notebook`
- Requirements are largely the same as in the official StyleGAN2 PyTorch implementation.
- We have tested on Linux with python=3.9.18, pytorch=2.1.1, torchvision=0.16.1, pytorch-cuda=11.8, Conda 23.7.3, and CUDA Version 11.6.
- We recommend installing PyTorch first (choosing a version compatible with your CUDA device), then running `pip install` as above.
- See also the provided `environment.yml`.
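To compare a local setup against the tested configuration listed above, a small check like the following may help. It is a sketch that uses only the Python standard library; the `TESTED` and `REQUIRED` tables are transcribed from the list above, the helper name `report_environment` is ours, and the pip distribution names (for example `torch` for PyTorch) are assumptions about how the packages were installed.

```python
import platform
from importlib import metadata

# Versions the authors report testing with (from the list above).
TESTED = {"python": "3.9.18", "torch": "2.1.1", "torchvision": "0.16.1"}
# Extra libraries installed with pip; no specific versions are stated.
REQUIRED = ["click", "requests", "ninja", "notebook"]

def report_environment():
    """Return a dict mapping each component to its installed version, or None."""
    report = {"python": platform.python_version()}
    for name in ["torch", "torchvision"] + REQUIRED:
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = None  # package is not installed in this environment
    return report

for name, installed in report_environment().items():
    tested = TESTED.get(name, "any")
    status = "missing" if installed is None else installed
    print(f"{name:12s} installed: {status:12s} tested with: {tested}")
```

A mismatch with the tested versions does not necessarily mean the code will fail; the table only records what the authors ran.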

See stylitgan.ipynb for sample relightings using latent directions given in stylitgan_directions_7.npy.

Latent directions were found for a model trained on LSUN-Bedroom, provided in stylitgan_bedroom.pkl.

Running the trained model requires the utility code from the official StyleGAN2 PyTorch implementation.
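The core edit, adding a latent direction to a style code, can be sketched with NumPy alone. This is illustrative only: the array shapes, the `strength` parameter, and the helper `relight_codes` are assumptions, and random arrays stand in for the real style code and for the contents of stylitgan_directions_7.npy, whose layout is not documented here. Rendering the edited codes with the generator is omitted because it depends on the StyleGAN2 utilities; stylitgan.ipynb shows the actual workflow.

```python
import numpy as np

def relight_codes(w, directions, strength=1.0):
    """Return one edited style code per direction: w + strength * direction.

    w:          style code, shape (num_ws, w_dim)              (assumed layout)
    directions: latent directions, shape (n, num_ws, w_dim)    (assumed layout)
    """
    w = np.asarray(w, dtype=np.float32)
    directions = np.asarray(directions, dtype=np.float32)
    if directions.shape[1:] != w.shape:
        raise ValueError(
            f"direction shape {directions.shape[1:]} does not match code shape {w.shape}"
        )
    # Broadcasting adds the same base code to every scaled direction.
    return w[None, ...] + strength * directions

# Placeholder sizes and random data, used only so the sketch runs on its own.
rng = np.random.default_rng(0)
NUM_DIRECTIONS, NUM_WS, W_DIM = 7, 14, 512
w = rng.standard_normal((NUM_WS, W_DIM)).astype(np.float32)
directions = rng.standard_normal((NUM_DIRECTIONS, NUM_WS, W_DIM)).astype(np.float32)
# With the repository files, the directions would instead come from something like:
#   directions = np.load("stylitgan_directions_7.npy")  # actual shape may differ

edited = relight_codes(w, directions, strength=0.5)
print(edited.shape)  # one edited code per direction
```

Each row of `edited` would then be passed to the generator's synthesis stage to produce one relit version of the same scene.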

@InProceedings{StyLitGAN,

title = {StyLitGAN: Image-based Relighting via Latent Control},

author = {Bhattad, Anand and Soole, James and Forsyth, D.A.},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2024}

}

We thank Aniruddha Kembhavi, Derek Hoiem, Min Jin Chong, and Shenlong Wang for their feedback and suggestions. We also thank Ning Yu for providing us with pretrained StyleGAN models. This material is based upon work supported by the National Science Foundation under Grant No. 2106825 and by a gift from the Boeing Corporation.