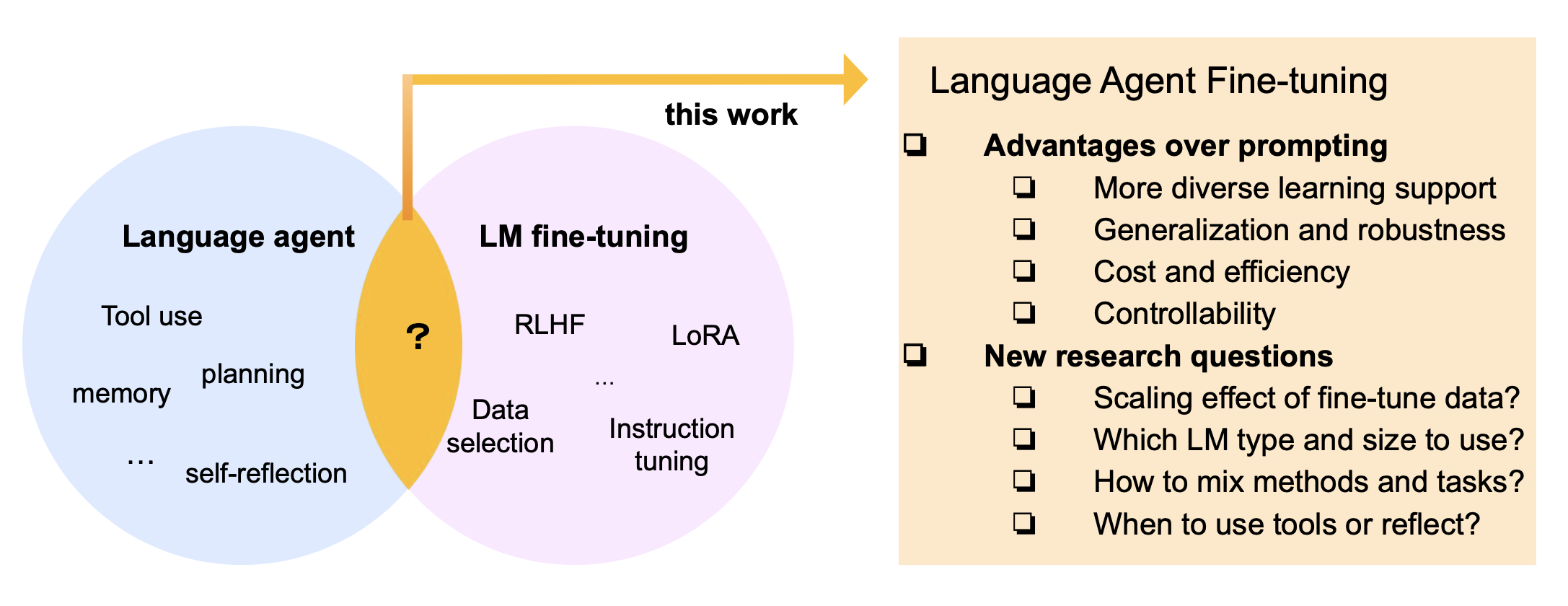

This repository accompanies our paper FireAct: Toward Language Agent Fine-tuning (PDF). It contains the prompts, demo code, and fine-tuning data we generated, along with a description and index of the model family we fine-tuned. If you use this code or data in your work, please cite:

```bibtex
@misc{chen2023fireact,
      title={FireAct: Toward Language Agent Fine-tuning},
      author={Baian Chen and Chang Shu and Ehsan Shareghi and Nigel Collier and Karthik Narasimhan and Shunyu Yao},
      year={2023},
      eprint={2310.05915},
      archivePrefix={arXiv},
      primaryClass={cs.CL}
}
```

- Define tools in `tools/`
- Define tasks in `tasks/`
- Collect data and run experiments via `generation.py`
- Results are saved in `trajs/`
- Data for generating training data and running experiments is in `data/`. We also include samples of training data in both Alpaca format and GPT format. See details here.
- Prompts for generating training data and running experiments are in `prompts/`

Set up your OpenAI API key and store it in an environment variable (see here):

```bash
export OPENAI_API_KEY=<YOUR_KEY>
```

Set up your SerpApi key and store it in an environment variable (see here):

```bash
export SERPAPI_API_KEY=<YOUR_KEY>
```
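To fail fast when a key is missing, a small check like the one below can run before launching `generation.py`. This is a minimal sketch and not part of the repo; the helper name `missing_api_keys` is hypothetical, and it only checks the two variables named above.

```python
import os

# Hypothetical helper (not part of FireAct): the variables this README asks you to export.
REQUIRED_KEYS = ("OPENAI_API_KEY", "SERPAPI_API_KEY")


def missing_api_keys(env=None):
    """Return the names of required keys that are unset or empty.

    Defaults to the current process environment; pass a dict to check another mapping.
    """
    env = os.environ if env is None else env
    return [name for name in REQUIRED_KEYS if not env.get(name)]
```

Calling `missing_api_keys()` at the top of a wrapper script and aborting on a non-empty result avoids discovering a missing key only after some paid API calls have already been made.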

Create a virtual environment, for example with conda:

```bash
conda create -n fireact python=3.9
conda activate fireact
```

Clone this repo and install the dependencies:

```bash
git clone https://github.com/anchen1011/FireAct.git
cd FireAct
pip install -r requirements.txt
```

Example (data generation with GPT-4):

```bash
python generation.py \
    --task hotpotqa \
    --backend gpt-4 \
    --promptpath default \
    --evaluate \
    --random \
    --task_split val \
    --temperature 0 \
    --task_end_index 5
```

See details with the command `python generation.py -h`.

To collect enough good training samples, set `--task_end_index` to a large value (in the thousands). **[WARNING]** This is costly with GPT-4 and SerpApi.
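Since the generation code is based on ysymyth/ReAct, each trajectory alternates model reasoning and actions with tool observations. The following is a minimal sketch of such a loop under stated assumptions: the `Action: tool[argument]` syntax, the `finish` action, and the names `react_loop` and `parse_action` are hypothetical illustrations, not the actual prompt format or interface of `generation.py`.

```python
import re
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical ReAct-style action syntax: "Action: tool_name[argument]".
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def parse_action(step: str) -> Optional[Tuple[str, str]]:
    """Extract (tool_name, argument) from one model step, or None if absent."""
    match = ACTION_RE.search(step)
    if match is None:
        return None
    return match.group(1).lower(), match.group(2)


def react_loop(
    question: str,
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],  # keys are lowercase tool names
    max_steps: int = 8,
) -> Tuple[Optional[str], List[str]]:
    """Alternate model steps and tool observations until a finish[...] action.

    Returns (answer, trajectory); answer is None if max_steps is exhausted.
    """
    prompt = f"Question: {question}\n"
    trajectory: List[str] = []
    for _ in range(max_steps):
        step = llm(prompt)  # e.g. "Thought: ...\nAction: search[...]"
        trajectory.append(step)
        prompt += step + "\n"
        action = parse_action(step)
        if action is None:
            observation = "Invalid action."
        elif action[0] == "finish":
            return action[1], trajectory
        elif action[0] in tools:
            observation = tools[action[0]](action[1])
        else:
            observation = f"Unknown tool: {action[0]}"
        obs_line = f"Observation: {observation}"
        trajectory.append(obs_line)
        prompt += obs_line + "\n"
    return None, trajectory
```

Passing the model and tools as plain callables keeps the loop independent of any particular backend, so a scripted stub can stand in for GPT-4 during testing; the `max_steps` cap bounds the number of paid calls per question.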

For training, you need to convert the trajectories into Alpaca format or GPT format. See our examples here.
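As a rough illustration of that conversion, the sketch below turns trajectories into Alpaca-style records (`instruction`/`input`/`output`, as in Stanford Alpaca) and GPT-style records (the `messages` list of `role`/`content` pairs used for OpenAI chat fine-tuning, written one JSON object per line). The input schema (`question`, `trajectory`, `correct` keys) is hypothetical, as is placing the whole trajectory in the output/assistant turn; check the samples in `data/` for the layout FireAct actually uses.

```python
import json
from typing import Any, Dict, List

# Hypothetical trajectory schema (not FireAct's actual format):
#   {"question": str, "trajectory": str, "correct": bool}


def select_successful(trajs: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Keep only trajectories flagged as correct (hypothetical 'correct' key)."""
    return [t for t in trajs if t.get("correct")]


def to_alpaca(traj: Dict[str, Any]) -> Dict[str, str]:
    """Alpaca-style record: question as instruction, trajectory as output."""
    return {"instruction": traj["question"], "input": "", "output": traj["trajectory"]}


def to_gpt(traj: Dict[str, Any], system_prompt: str = "") -> Dict[str, List[Dict[str, str]]]:
    """OpenAI chat fine-tuning record: a list of role/content messages."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": traj["question"]})
    messages.append({"role": "assistant", "content": traj["trajectory"]})
    return {"messages": messages}


def write_training_files(
    trajs: List[Dict[str, Any]], alpaca_path: str, gpt_path: str, system_prompt: str = ""
) -> int:
    """Write both formats for the successful trajectories; return how many were kept."""
    good = select_successful(trajs)
    # Alpaca-style data is commonly stored as a single JSON list.
    with open(alpaca_path, "w", encoding="utf-8") as f:
        json.dump([to_alpaca(t) for t in good], f, indent=2)
    # OpenAI fine-tuning expects JSONL: one JSON object per line.
    with open(gpt_path, "w", encoding="utf-8") as f:
        for t in good:
            f.write(json.dumps(to_gpt(t, system_prompt)) + "\n")
    return len(good)
```

Filtering before conversion reflects the note above that many generated samples are needed to obtain enough good ones; how FireAct decides which trajectories are good is not specified here.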

Example (LoRA fine-tuning):

```bash
cd finetune/llama_lora
python finetune.py \
    --base_model meta-llama/Llama-2-13b-chat-hf \
    --data_path ../../data/finetune/alpaca_format/hotpotqa.json \
    --micro_batch_size 8 \
    --num_epochs 30 \
    --output_dir ../../models/lora/fireact-llama-2-13b \
    --val_set_size 0.01 \
    --cutoff_len 512
```

See details here.
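Because the LoRA training code is based on tloen/alpaca-lora, each Alpaca record is presumably rendered into a prompt and truncated to `--cutoff_len` tokens before training. The sketch below assumes the standard Stanford Alpaca prompt template and alpaca-lora's truncation rule (truncate, then append EOS only if there is room, with labels copying the input ids); both are assumptions about `finetune.py`, and the `encode` callable stands in for the Llama tokenizer.

```python
from typing import Callable, Dict, List

# Standard Stanford Alpaca templates; assumed, not confirmed, to match finetune.py.
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n"
    "### Response:\n"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def build_prompt(record: Dict[str, str]) -> str:
    """Render one Alpaca record as prompt text followed by the target output."""
    if record.get("input"):
        prompt = PROMPT_WITH_INPUT.format(instruction=record["instruction"], input=record["input"])
    else:
        prompt = PROMPT_NO_INPUT.format(instruction=record["instruction"])
    return prompt + record["output"]


def tokenize_for_training(
    text: str, encode: Callable[[str], List[int]], eos_id: int, cutoff_len: int = 512
) -> Dict[str, List[int]]:
    """Truncate to cutoff_len tokens; append EOS only if the window is not full."""
    input_ids = encode(text)[:cutoff_len]  # hard truncation; slicing copies the list
    if input_ids and input_ids[-1] != eos_id and len(input_ids) < cutoff_len:
        input_ids.append(eos_id)
    return {
        "input_ids": input_ids,
        "attention_mask": [1] * len(input_ids),
        "labels": list(input_ids),  # loss over the full sequence (alpaca-lora default)
    }
```

One practical consequence of `--cutoff_len 512`: a trajectory longer than 512 tokens loses its tail, including the final answer and the EOS token, so it may be worth checking how many training examples exceed the limit.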

Example (FireAct Llama):

```bash
python generation.py \
    --task hotpotqa \
    --backend llama \
    --evaluate \
    --random \
    --task_split dev \
    --task_end_index 5 \
    --modelpath meta-llama/Llama-2-7b-chat \
    --add_lora \
    --alpaca_format \
    --peftpath forestai/fireact_llama_2_7b_lora
```

Example (FireAct GPT):

```bash
python generation.py \
    --task hotpotqa \
    --backend ft:gpt-3.5-turbo-0613:<YOUR_MODEL> \
    --evaluate \
    --random \
    --task_split dev \
    --temperature 0 \
    --chatgpt_format \
    --task_end_index 5
```

See details with the command `python generation.py -h`.

For quantitative evaluation, set `--task_end_index 500`. See our examples here.
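For HotpotQA, a common quantitative metric is exact match (EM) after SQuAD-style answer normalization. Assuming that metric (how `generation.py --evaluate` actually scores answers may differ), a minimal sketch looks like this; the function names are illustrative.

```python
import re
import string
from typing import List, Optional

PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, strip punctuation and articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: Optional[str], gold: str) -> bool:
    # A missing answer (e.g. the agent never finished) counts as wrong.
    return prediction is not None and normalize_answer(prediction) == normalize_answer(gold)


def em_score(predictions: List[Optional[str]], golds: List[str]) -> float:
    """Fraction of examples whose normalized prediction equals the gold answer."""
    if len(predictions) != len(golds):
        raise ValueError("predictions and golds must have the same length")
    if not golds:
        return 0.0
    return sum(exact_match(p, g) for p, g in zip(predictions, golds)) / len(golds)
```

If 500 questions are scored, each correct answer moves EM by 1/500 = 0.2 percentage points, which gives a sense of how small a difference between two runs is meaningful.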

We release a selected set of multitask models based on the Llama family. Details can be found in their model cards.

| Base Model | Training Method | Hugging Face |
|---|---|---|
| Llama2-7B | LoRA | forestai/fireact_llama_2_7b_lora |
| Llama2-13B | LoRA | forestai/fireact_llama_2_13b_lora |
| CodeLlama-7B | LoRA | forestai/fireact_codellama_7b_lora |
| CodeLlama-13B | LoRA | forestai/fireact_codellama_13b_lora |
| CodeLlama-34B | LoRA | forestai/fireact_codellama_34b_lora |
| Llama2-7B | Full Model | forestai/fireact_llama_2_7b |
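For readers comparing the LoRA and Full Model rows: in the standard LoRA formulation, a frozen weight $W_0 \in \mathbb{R}^{d \times k}$ is augmented with a trainable low-rank product, so only $B$ and $A$ are updated. The worked count below uses one $4096 \times 4096$ attention projection (Llama-2-7B's hidden size) and rank $r = 8$, which is alpaca-lora's default and only an assumption here; the actual rank and target modules of these checkpoints are in their model cards.

$$
h = W_0 x + \frac{\alpha}{r} B A x, \qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)
$$

$$
\underbrace{d\,k}_{\text{full}} = 4096 \cdot 4096 = 16{,}777{,}216, \qquad \underbrace{r\,(d + k)}_{\text{LoRA}} = 8 \cdot (4096 + 4096) = 65{,}536
$$

That is roughly 0.39% of the matrix's parameters, which is why a LoRA release is a small adapter loaded on top of the base model (hence `--add_lora` and `--peftpath` in the inference example), while the full-model release is a complete set of weights.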

- Our generation code is based on ysymyth/ReAct

- Our Llama full model training code is based on tatsu-lab/stanford_alpaca

- Our Llama LoRA training code is based on tloen/alpaca-lora

- Our GPT fine-tuning code is based on anchen1011/chatgpt-finetune-ui