Set up your servers and hostnames (in the Makefile) and test:

make # make all

make clean # clean results

make warmup # warm up your configured HTTP endpoints

make bench-phalcon # bench phalcon and log to results/

make graph # generate graphs using gnuplot and place them in results/

...

A set of micro web services, written in different languages and frameworks, that all fetch the same amount of content over HTTP and then build a new JSON structure from the fetched content before returning it to the client.

The purpose of this set of tests is to get an overall impression of "how a technology stack may tend to perform" in the following scenario:

- Bootstrap framework components (or fetch them from memory...)

- Listen on a TCP port for HTTP GET requests

- Accept HTTP GET on an endpoint (route: /)

- On receiving a valid GET request, trigger 10 individual server-to-server HTTP requests to an "external" resource over TCP/HTTP and store the results in memory within the application. This triggers network I/O and simulates similar operations in a real API that fetches external content.

- JSON-decode the external resources upon retrieval, then build a NEW response object from a subset of the received data. This triggers some CPU usage on the system under test (basic iterations and a run through JSON serialization/deserialization).
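The per-request work described in the list above can be sketched roughly as follows. This is a language-neutral illustration in Python, not one of the applications in this repository. The URL, the function names, the use of `_shards.total` for the `shards` field, and the choice of "first hit of each upstream response" as the subset are all assumptions made for the example; the response shape read here is the standard Elasticsearch search response.

```python
import json
import time
from typing import Callable, Dict, List
from urllib.request import urlopen

# Hypothetical upstream URL; the real endpoint is whatever the Makefile configures.
SOURCE_URL = "http://localhost:9200/entitylib/_search"
REQUESTS_PER_RESPONSE = 10  # the scenario performs 10 upstream requests per GET


def http_fetch(url: str) -> str:
    """Blocking HTTP GET that returns the response body as text."""
    with urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8")


def build_response(fetch: Callable[[str], str] = http_fetch,
                   url: str = SOURCE_URL) -> Dict:
    """Fetch the upstream resource 10 times and build a new response object."""
    hits: List[Dict] = []
    shards = 0
    for _ in range(REQUESTS_PER_RESPONSE):
        body = json.loads(fetch(url))           # network I/O, then JSON decode
        shards = body["_shards"]["total"]       # assumption: source of "shards"
        # Assumption: the "subset" is the first hit of each upstream response.
        hits.extend(body["hits"]["hits"][:1])
    return {
        "time": int(time.time() * 1000),        # milliseconds since the epoch
        "shards": shards,
        "hits": hits,
    }
```

Passing `fetch` as a parameter keeps the CPU-side work (decode, iterate, rebuild) separable from the network I/O, so the two costs can be looked at independently.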

Run an HTTP benchmarking tool against these example apps on various platforms to get a rough idea of whether a framework or server is suitable. Sometimes one needs low response times without a reverse proxy cache.
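To make "response times at a given level of stress" concrete, here is a minimal sketch of what a benchmarking tool does in principle: issue a fixed number of calls at a fixed concurrency and summarize the per-call latencies. It is not a replacement for ab or siege and is not part of this repository; `run_load`, `timed`, and `percentile` are hypothetical names, and the URL in the usage comment is only an example.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean
from typing import Callable, Dict, List


def timed(call: Callable[[], object]) -> float:
    """Run one call and return its duration in milliseconds."""
    start = time.perf_counter()
    call()
    return (time.perf_counter() - start) * 1000.0


def percentile(sorted_ms: List[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct * len(sorted_ms) / 100))
    return sorted_ms[rank - 1]


def run_load(call: Callable[[], object], total: int,
             concurrency: int) -> Dict[str, float]:
    """Issue `total` calls using `concurrency` worker threads."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        samples = sorted(pool.map(lambda _: timed(call), range(total)))
    return {
        "requests": len(samples),
        "mean_ms": mean(samples),
        "p50_ms": percentile(samples, 50),
        "p95_ms": percentile(samples, 95),
        "max_ms": samples[-1],
    }


# Example usage against a hypothetical local endpoint:
#   from urllib.request import urlopen
#   run_load(lambda: urlopen("http://localhost:8080/").read(),
#            total=1000, concurrency=50)
```

Repeating the run at increasing concurrency levels shows how the tail latencies (p95, max) grow as the application under test gets saturated, which is usually more telling than the mean.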

PHP:

- Zend Framework 2.3 @ Apache/2.4.9 (Unix) + PHP 5.5.13 + zend_extension=opcache.so

- Phalcon Framework 1.3.2 @ Apache/2.4.9 (Unix) + PHP 5.5.13 + zend_extension=opcache.so

- Phalcon Framework 1.3.2 @ Apache/2.4.9 (Unix) + PHP 5.5.13 + zend_extension=opcache.so + eventlib + ReactPHP

node.js:

- Hapi 6.0.1 @ node v0.10.29

- Restify @ TODO

- Express.js @ TODO

I run the tests on Arch Linux with all packages updated from upstream.

All the apps contact the same Elasticsearch source over HTTP and produce the same output. The output is generated by querying the Elasticsearch endpoint 10 times and then iterating once over the result set, putting a subset of the items into the API response.

{

"time": 1402786297032,

"shards": 5,

"hits": [

{

"_index": "entitylib",

"_type": "entity",

"_id": "Ob7UuJC1Q4W6sAXKvxSMPQ",

"_score": 1,

"_source": {

"full": {

"title": "test entity title",

"description": "test entity description"

},

"summary": {

"title": "test entity title"

}

}

},

(... repeated 9 more times...)

]

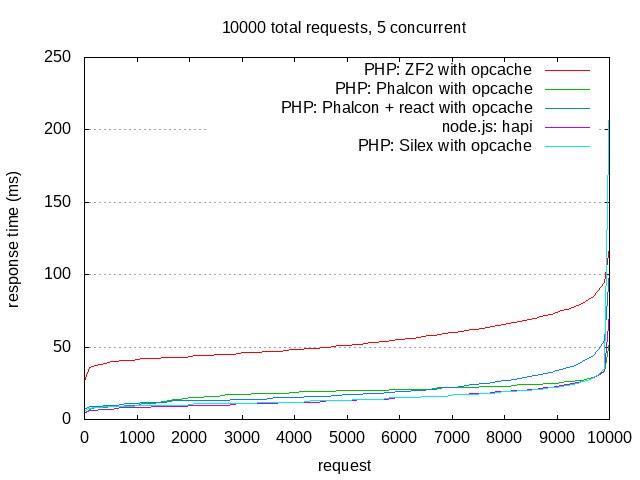

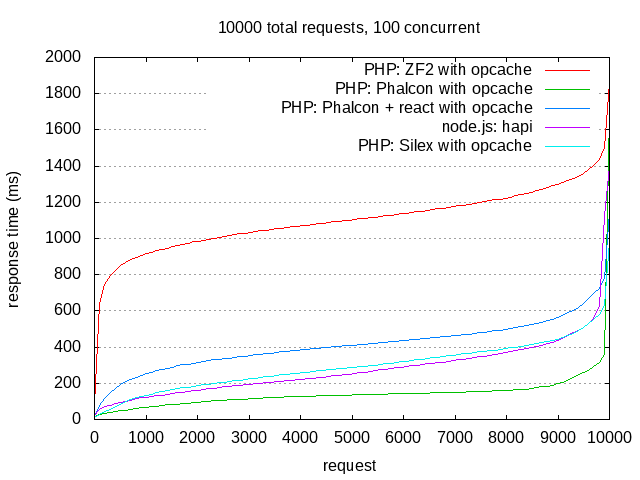

}- ZF2 seems terrible for this particular type of job. I have looked at the entire callstack using XDEBUG because the results were so awful. To summarize ZF2 is CPU-bound quickly as a result of over 900 individual PHP function calls for this simple task of fetching external data and then building a response. Some of these function calls are dynamic PHP call_user_func(...); that take place in order to bootstrap the ZF2 ServiceManager and EventManager at the beginning. These call_user_func and similar are hard for PHP opcache or other optimizers to speed up. At the moment I therefore view ZF2 as unviable for these usecases because of the CPU-intensive overhead. For a very low amount of concurrent requests it does work fine, but you will have to start throwing more hardware at it very fast in order to avoid longer responsetimes. And these tests are all about responsetimes at different amounts of stress! And let's face it, ZF2 does not have a lot of value anymore if you ditch the core Event+Service manager...

- Phalcon is viable and much closer to "raw PHP": it does not run a lot of hard-to-optimize PHP code on every request. The Phalcon core functionality is all written in C and installed as a compiled module (PECL).

- The node.js frameworks perform fairly well, as expected for this type of workload, and deliver good response times.

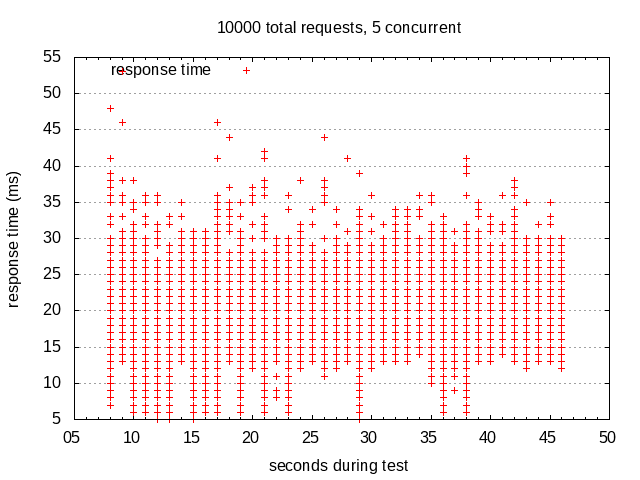

At the moment I am a little surprised that the synchronous (blocking I/O) Phalcon application keeps up so well with node.js. In these particular tests it even seems to do better. I would have expected the async I/O in the node.js applications to pull away a bit more, since we actually perform 10 external HTTP requests for each response we generate. I do, however, expect async I/O in node.js to pay off more in an environment where the individual waits on the external resource are longer. Elasticsearch is pretty awesome and does not punish synchronous I/O much here.

ReactPHP was a bit disappointing: overall it turned out SLOWER than simply running the HTTP requests synchronously from PHP in this particular case. I am not an experienced user of the React framework, so this could also be a mistake in the test implementation. I DID, however, install the "eventlib" C extension and verify that it was loaded in order to help ReactPHP performance. I have not yet taken the time to look at Xdebug output for the React test.
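The expectation that async I/O matters more as upstream waits grow can be illustrated with a small simulation. The sketch below is not a measurement of PHP or node.js: it replaces the 10 external requests with `asyncio.sleep` calls of a chosen latency and compares awaiting them one after another with awaiting them all together. The function names are hypothetical.

```python
import asyncio
import time
from typing import Tuple

UPSTREAM_CALLS = 10  # the scenario makes 10 external requests per response


async def fake_upstream(latency_s: float) -> None:
    """Stand-in for one external HTTP request that takes latency_s seconds."""
    await asyncio.sleep(latency_s)


async def run_sequentially(latency_s: float) -> float:
    """Wait for each upstream call to finish before starting the next one."""
    start = time.perf_counter()
    for _ in range(UPSTREAM_CALLS):
        await fake_upstream(latency_s)
    return time.perf_counter() - start


async def run_concurrently(latency_s: float) -> float:
    """Start all upstream calls at once and wait for all of them."""
    start = time.perf_counter()
    await asyncio.gather(*(fake_upstream(latency_s)
                           for _ in range(UPSTREAM_CALLS)))
    return time.perf_counter() - start


def compare(latency_s: float) -> Tuple[float, float]:
    """Return (sequential_seconds, concurrent_seconds) for one response."""
    return (asyncio.run(run_sequentially(latency_s)),
            asyncio.run(run_concurrently(latency_s)))
```

The sequential variant waits roughly 10 times the upstream latency, while the concurrent one waits roughly once. With a fast upstream answering in about a millisecond, the gap is only a few milliseconds per response and is easily hidden by framework overhead; with a slow upstream the gap grows in proportion to the latency.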

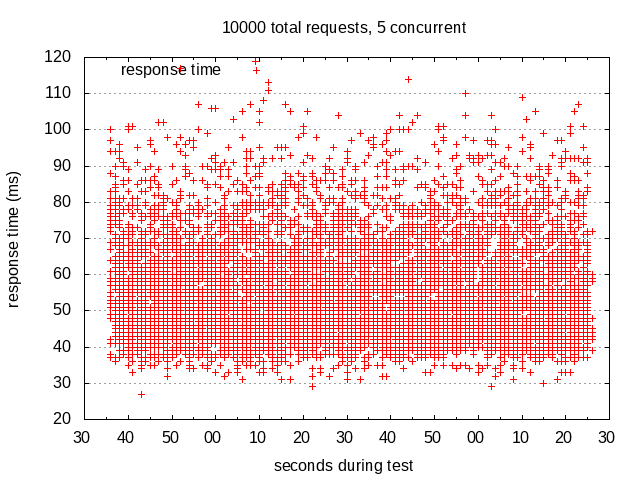

This is fairly tricky stuff to test in a really fair way, so please keep an open and critical mind when interpreting these results.

Work in progress. This project contains some applications that can be load-tested using ApacheBench (ab) or siege. The applications implement a theoretical but realistic scenario in different languages and frameworks. All examples produce the same output, solving the problem in a "realistic" way within the scope of the given framework. The code is neither over-normalized nor heavily pre-optimized. It aims to represent typical code running in a typical application on the given framework.

This CAN be useful for getting an overview of how the technologies behave with default/sane settings on a given machine, but it should not, on its own, be considered absolute evidence for "the best framework for x" or "runtime x is so much better than y". Use COMMON SENSE when interpreting the results, and be sure to study and understand what the applications actually do. All the code is there. The primary goal is to get a basic idea and overview of the baseline response times to expect when creating a new API using these modern frameworks. If you serve a lot of static data, be sure to check out the awesome Varnish Cache (http://varnish-cache.org).