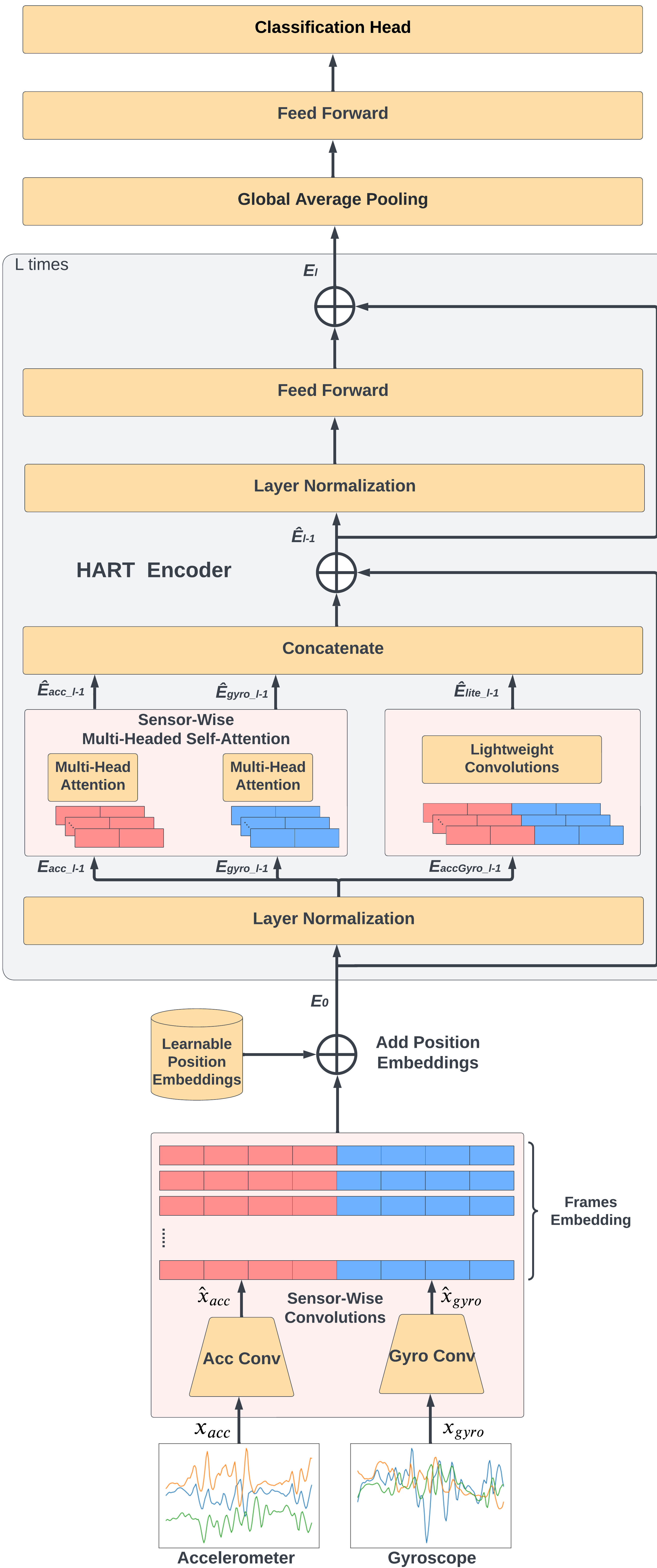

TensorFlow implementation of HART/MobileHART:

Lightweight Transformers for Human Activity Recognition on Mobile Devices [Paper]

Transformer-based Models to Deal with Heterogeneous Environments in Human Activity Recognition

Sannara Ek, François Portet, Philippe Lalanda

If our project is helpful for your research, please consider citing:

@misc{https://doi.org/10.48550/arxiv.2209.11750,

doi = {10.48550/ARXIV.2209.11750},

url = {https://arxiv.org/abs/2209.11750},

author = {EK, Sannara and Portet, François and Lalanda, Philippe},

keywords = {Computer Vision and Pattern Recognition (cs.CV), Artificial Intelligence (cs.AI), Machine Learning (cs.LG), FOS: Computer and information sciences},

title = {Lightweight Transformers for Human Activity Recognition on Mobile Devices},

publisher = {arXiv},

year = {2022},

copyright = {arXiv.org perpetual, non-exclusive license}

}

- 1. Updates

- 2. Installation

- 3. Quick Start

- 4. Position-Wise and Device-Wise Evaluation

- 5. Results

- 6. Acknowledgement

09/02/2023 Initial commit: Code of HART/MobileHART is released.

This code was implemented with Python 3.7, TensorFlow 2.10.1 and CUDA 11.2. Please refer to the official installation instructions. If CUDA 11.2 has been properly installed, run:

pip install tensorflow==2.10.1

Only TensorFlow and NumPy are required to import model.py into your working environment.
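Before importing model.py, it can help to confirm that both packages are visible to the interpreter. The sketch below is not part of this repository: `missing_packages` is a hypothetical helper built on the standard library's `importlib.util.find_spec`, and it only checks that a package can be located, not which version is installed.

```python
import importlib.util


def missing_packages(names):
    """Return the names from `names` that cannot be located for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# The two packages needed to import model.py.
REQUIRED = ["tensorflow", "numpy"]

missing = missing_packages(REQUIRED)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("TensorFlow and NumPy are importable.")
```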

To run our training and evaluation pipeline, additional dependencies are needed. Please launch the following command:

pip install -r requirements.txt

Our baseline experiments were conducted on a Debian GNU/Linux 10 (buster) machine with the following specs:

CPU : Intel(R) Xeon(R) CPU E5-2623 v4 @ 2.60GHz

GPU : Nvidia GeForce Titan Xp 12GB VRAM

Memory: 80GB

We provide scripts to automate downloading and preprocessing the datasets used for this study. See the scripts in the dataset folders; e.g., for the UCI dataset, run DATA_UCI.py.

For running the 'Combined' dataset training and evaluation pipeline, all datasets must first be downloaded and processed. Please run all scripts in the 'datasets' folder.
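Running every dataset script by hand is repetitive, so a small driver can help. This is a minimal sketch under stated assumptions: it assumes the scripts follow the `DATA_*.py` naming seen in `DATA_UCI.py`, that they sit directly inside the 'datasets' folder, and that each one expects to be started from that folder. The helper names `find_dataset_scripts` and `run_dataset_scripts` are hypothetical and not part of this repository.

```python
import subprocess
import sys
from pathlib import Path


def find_dataset_scripts(folder):
    """Return the DATA_*.py scripts located directly inside `folder`, sorted by name."""
    return sorted(Path(folder).glob("DATA_*.py"))


def run_dataset_scripts(folder, dry_run=True):
    """Run every dataset script with the current interpreter.

    With dry_run=True the commands are only printed, nothing is executed.
    Returns the script file names in the order they were (or would be) run.
    """
    scripts = find_dataset_scripts(folder)
    for script in scripts:
        command = [sys.executable, script.name]
        print("Running:", " ".join(command))
        if not dry_run:
            # Assumption: each script expects to be started from its own folder.
            # check=True stops at the first script that fails.
            subprocess.run(command, cwd=str(folder), check=True)
    return [script.name for script in scripts]


if __name__ == "__main__":
    # "datasets" is the folder name mentioned above; adjust if yours differs.
    run_dataset_scripts("datasets", dry_run=True)
```

Pass `dry_run=False` to actually execute the scripts once the printed list looks right.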

Tip: Manually downloading the datasets and placing them in the 'datasets/dataset' folder may be a more stable alternative if the download keeps failing via the provided scripts.

UCI

https://archive.ics.uci.edu/ml/datasets/human+activity+recognition+using+smartphones

MotionSense

https://github.com/mmalekzadeh/motion-sense/tree/master/data

HHAR

http://archive.ics.uci.edu/ml/datasets/Heterogeneity+Activity+Recognition

RealWorld

https://www.uni-mannheim.de/dws/research/projects/activity-recognition/#dataset_dailylog

SHL Preview

http://www.shl-dataset.org/download/

After running the DATA scripts in the dataset folder for all or only the desired datasets, launch either the Jupyter notebook or the Python script to start the training pipeline.

Sample launch commands are provided in the following sections.

HART and MobileHART are packaged as a Keras sequential model.

To use our models with their default hyperparameters, please import and add the following code:

import model

input_shape = (128,6) # The shape of your input data

activityCount = 6 # Number of activity classes (size of the classification head)

HART = model.HART(input_shape,activityCount)

MobileHART = model.mobileHART_XS(input_shape,activityCount)

Then compile and fit with your desired optimizer and loss, as with any conventional Keras model.
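The compile-and-fit step might look like the sketch below. It makes several assumptions that are not confirmed here: labels are integer class indices, the model ends in a softmax layer (switch to `from_logits=True` if it returns logits), and the Adam learning rate is a placeholder, not the setting used in our experiments. So that the snippet runs without this repository, a tiny stand-in classifier replaces `model.HART(input_shape, activityCount)`; `compile_and_fit` is a hypothetical helper name.

```python
import numpy as np
import tensorflow as tf


def compile_and_fit(keras_model, x_train, y_train, epochs=1, batch_size=64):
    """Compile and train any Keras classifier on integer-labelled windows."""
    keras_model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),  # placeholder value
        # Assumes softmax outputs and integer labels (see the note above).
        loss=tf.keras.losses.SparseCategoricalCrossentropy(),
        metrics=["accuracy"],
    )
    return keras_model.fit(
        x_train, y_train, epochs=epochs, batch_size=batch_size, verbose=0
    )


input_shape = (128, 6)
activity_count = 6

# Stand-in classifier; in practice pass model.HART(input_shape, activityCount).
stand_in = tf.keras.Sequential([
    tf.keras.Input(shape=input_shape),
    tf.keras.layers.GlobalAveragePooling1D(),
    tf.keras.layers.Dense(activity_count, activation="softmax"),
])

# Random data with the expected shapes, only to exercise the call sequence.
rng = np.random.default_rng(0)
x = rng.normal(size=(32, *input_shape)).astype("float32")
y = rng.integers(0, activity_count, size=32)

history = compile_and_fit(stand_in, x, y)
predictions = stand_in.predict(x, verbose=0)
print(predictions.shape)
```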

To run with the default hyperparameters of HART for the MotionSense dataset, please launch:

python main.py --architecture HART --dataset MotionSense --localEpoch 200 --batch_size 64

Replace 'MotionSense' with one of the following to train on a different dataset:

RealWorld, HHAR, UCI, SHL, MotionSense, COMBINED

To run with the default hyperparameters of MobileHART for the MotionSense dataset, please launch:

python main.py --architecture MobileHART --dataset MotionSense --localEpoch 200 --batch_size 64

Replace 'MotionSense' with one of the following to train on a different dataset:

RealWorld, HHAR, UCI, SHL, MotionSense, COMBINED
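To train both architectures on every dataset in turn, the launch commands above can be generated in a loop. The sketch below only reuses the flags and values shown in this section; `build_command` and `run_all` are hypothetical helpers, and the default is a dry run that prints the commands without starting any training.

```python
import subprocess

ARCHITECTURES = ["HART", "MobileHART"]
DATASETS = ["RealWorld", "HHAR", "UCI", "SHL", "MotionSense", "COMBINED"]


def build_command(architecture, dataset, local_epoch=200, batch_size=64):
    """Assemble the main.py launch command as an argument list."""
    return [
        "python", "main.py",
        "--architecture", architecture,
        "--dataset", dataset,
        "--localEpoch", str(local_epoch),
        "--batch_size", str(batch_size),
    ]


def run_all(commands, dry_run=True):
    """Print each command and, unless dry_run is set, execute it in order."""
    for command in commands:
        print(" ".join(command))
        if not dry_run:
            # check=True aborts the sweep if a training run exits with an error.
            subprocess.run(command, check=True)


commands = [build_command(a, d) for a in ARCHITECTURES for d in DATASETS]
run_all(commands)  # dry run: prints the 12 commands without launching them
```

Call `run_all(commands, dry_run=False)` from the repository root to launch the runs one after another.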

To run HART/MobileHART in a leave-one-position-out pipeline with the RealWorld dataset, please launch:

python main.py --architecture HART --dataset RealWorld --positionDevice chest --localEpoch 200 --batch_size 64

Replace 'chest' with one of the following to train on a different position:

chest, forearm, head, shin, thigh, upperarm, waist

To run HART/MobileHART in a leave-one-device-out pipeline with the HHAR dataset, please launch:

python main.py --architecture HART --dataset HHAR --positionDevice nexus4 --localEpoch 200 --batch_size 64

Replace 'nexus4' with one of the following to train on a different device:

nexus4, lgwatch, s3, s3mini, gear, samsungold
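For readers unfamiliar with leave-one-out evaluation, the general idea is to train on every group (position or device) except one and test on the excluded group. The NumPy sketch below illustrates that split on synthetic data. It is not the code used by main.py, and it assumes that the value passed to `--positionDevice` names the held-out group, which should be confirmed against main.py. `leave_one_group_out` is a hypothetical helper.

```python
import numpy as np

HHAR_DEVICES = ["nexus4", "lgwatch", "s3", "s3mini", "gear", "samsungold"]


def leave_one_group_out(windows, labels, groups, held_out):
    """Split arrays so that every sample from `held_out` lands in the test set."""
    groups = np.asarray(groups)
    test_mask = groups == held_out
    if not test_mask.any():
        raise ValueError(f"no samples found for group {held_out!r}")
    train = (windows[~test_mask], labels[~test_mask])
    test = (windows[test_mask], labels[test_mask])
    return train, test


# Synthetic stand-in data: 5 windows per device, each of shape (128, 6).
rng = np.random.default_rng(0)
groups = np.repeat(HHAR_DEVICES, 5)
windows = rng.normal(size=(len(groups), 128, 6))
labels = rng.integers(0, 6, size=len(groups))

(train_x, train_y), (test_x, test_y) = leave_one_group_out(
    windows, labels, groups, "nexus4"
)
print(train_x.shape, test_x.shape)
```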

The table below shows the results obtained with HART and MobileHART on our training and evaluation pipeline for the five individual datasets and the combined dataset.

| Architecture | UCI | MotionSense | HHAR | RealWorld | SHL | Combined |

|---|---|---|---|---|---|---|

| HART | 94.49 | 98.20 | 97.36 | 94.88 | 79.49 | 85.61 |

| MobileHART | 97.20 | 98.45 | 98.19 | 95.22 | 81.36 | 86.74 |

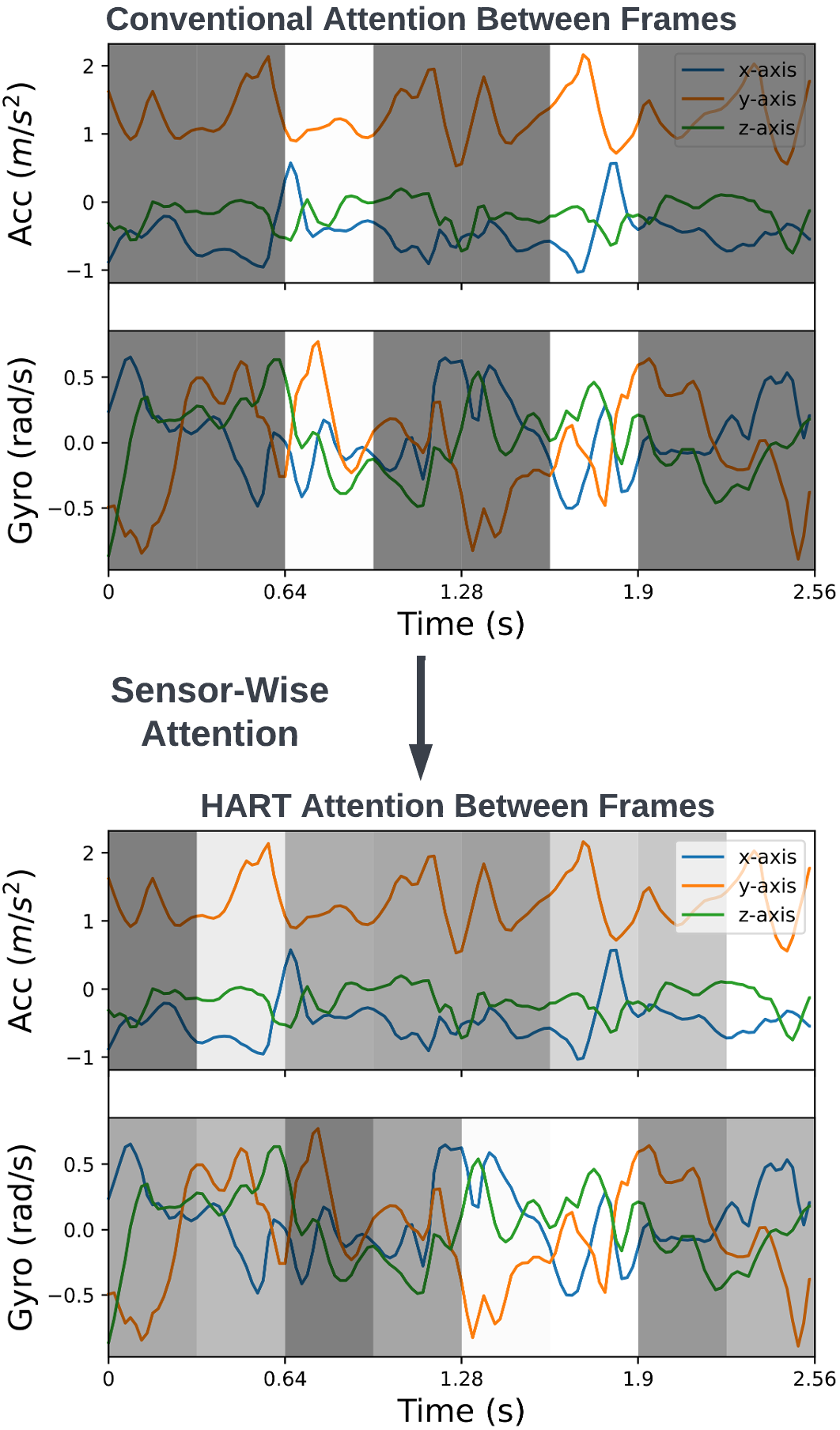

The image below shows the result of our sensor-wise attention compared againts conventional attention:

This work has been partially funded by Naval Group, and by MIAI@Grenoble Alpes (ANR-19-P3IA-0003). This work was also granted access to the HPC resources of IDRIS under the allocation 2022-AD011013233 made by GENCI.