Gather-Tensorflow-Serving

Gathering as many ways as I can to deploy TensorFlow models.

Covered

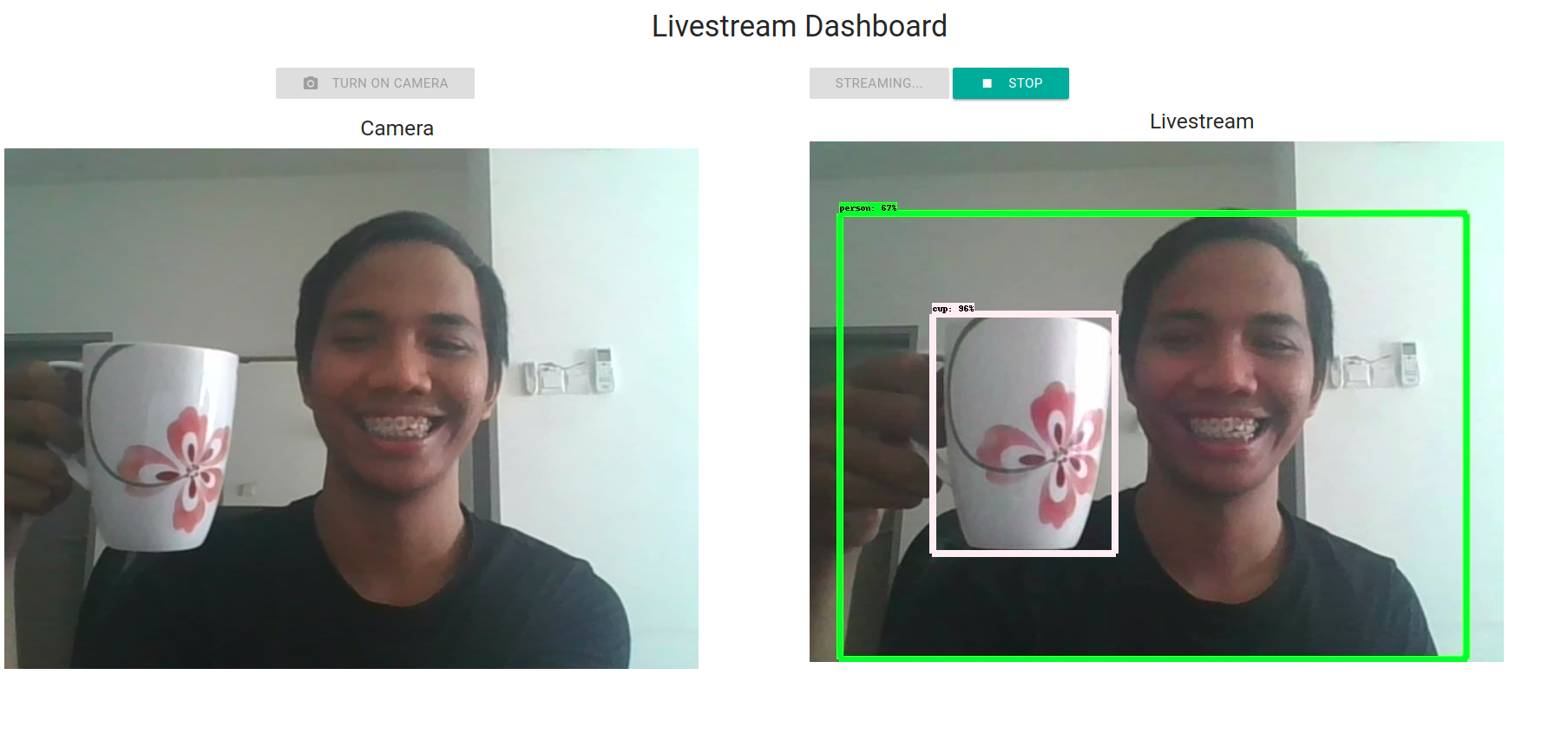

- Object Detection using Flask SocketIO for WebRTC

- Object Detection using Flask SocketIO for OpenCV

- Speech streaming using Flask SocketIO

- Classification using Flask + Gunicorn

- Classification using TF Serving

- Inception Classification using Flask SocketIO

- Object Detection using Flask + OpenCV

- Face detection using Flask SocketIO for OpenCV

- Face detection for OpenCV

- Inception with Flask using Docker

- Multiple Inception with Flask using EC2 Docker Swarm + Nginx load balancer

- Text classification using Hadoop streaming MapReduce

- Text classification using Kafka

- Text classification on Distributed TF using Flask + Gunicorn + Eventlet

- Text classification using Tornado + Gunicorn

- Celery with Hadoop for massive text classification using Flask

- Luigi scheduler with Hadoop for massive text classification

Technology used

Screenshots

Every folder contains screenshots or logs.

Notes

- To deploy them on a server, change `local` in the code snippets to your own IP.

- WebRTC in Chrome can only be tested on an HTTPS server.

- When it comes to real deployment, always plan for an architecture that can scale up. Learn about DevOps.

- Please be aware of your cloud costs!
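Instead of editing `local` by hand in every snippet before deploying, the address can be read from the environment. This is a minimal sketch that assumes the client code is Python and that the host and port come from the environment variables `SERVER_HOST` and `SERVER_PORT`. Both names are hypothetical and do not come from the repository. The HTTPS switch matters for the WebRTC demos, which Chrome only allows over HTTPS.

```python
import os

# Hypothetical variable names; the original snippets hard-code a local address.
HOST_ENV = "SERVER_HOST"
PORT_ENV = "SERVER_PORT"


def base_url(use_https: bool = False) -> str:
    """Build the base URL that client code should call.

    Falls back to localhost:5000 (Flask's default development port)
    when the environment variables are not set.
    """
    host = os.environ.get(HOST_ENV, "localhost")
    port = int(os.environ.get(PORT_ENV, "5000"))
    # WebRTC pages must be served over HTTPS for Chrome to allow camera access.
    scheme = "https" if use_https else "http"
    return f"{scheme}://{host}:{port}"
```

For example, running `SERVER_HOST=203.0.113.10 python client.py` points the client at that machine without changing any code.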