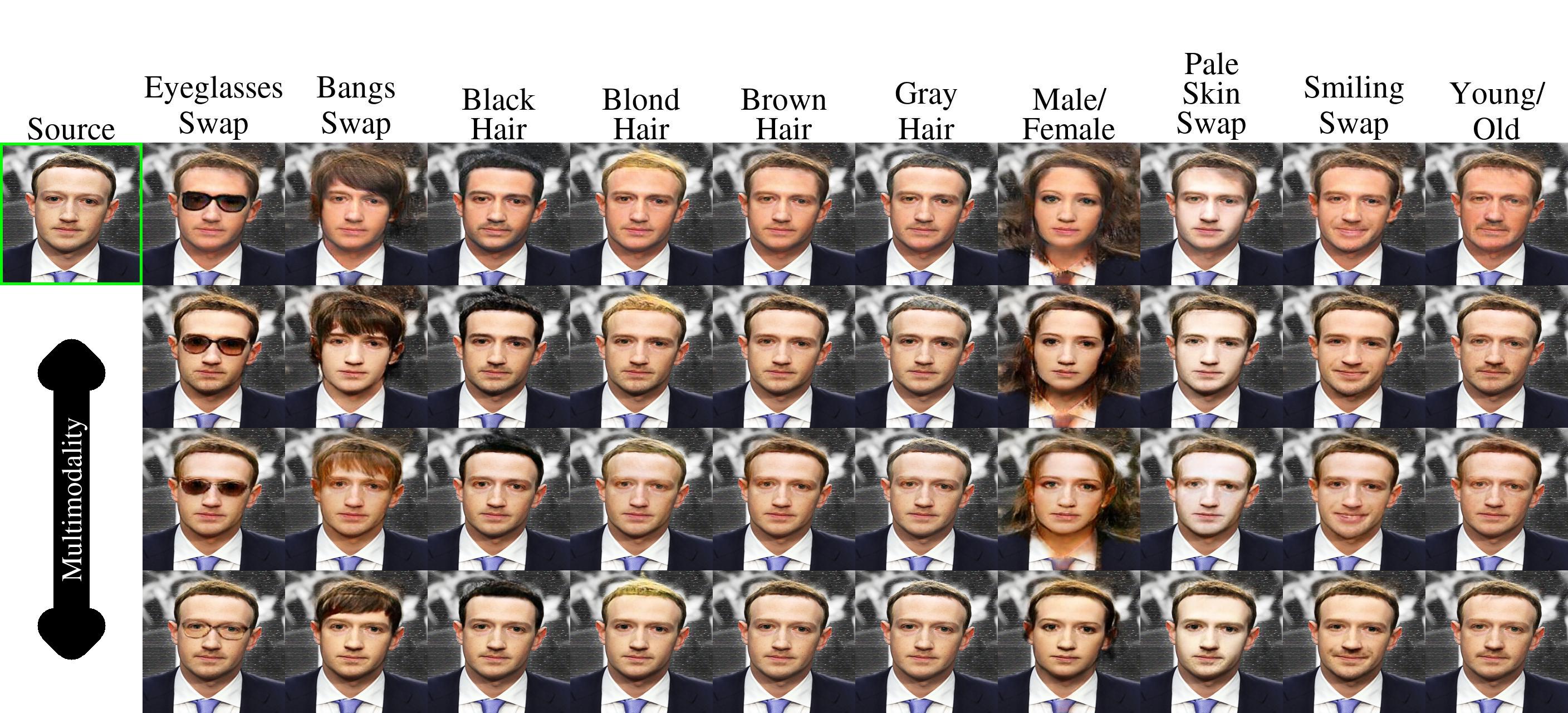

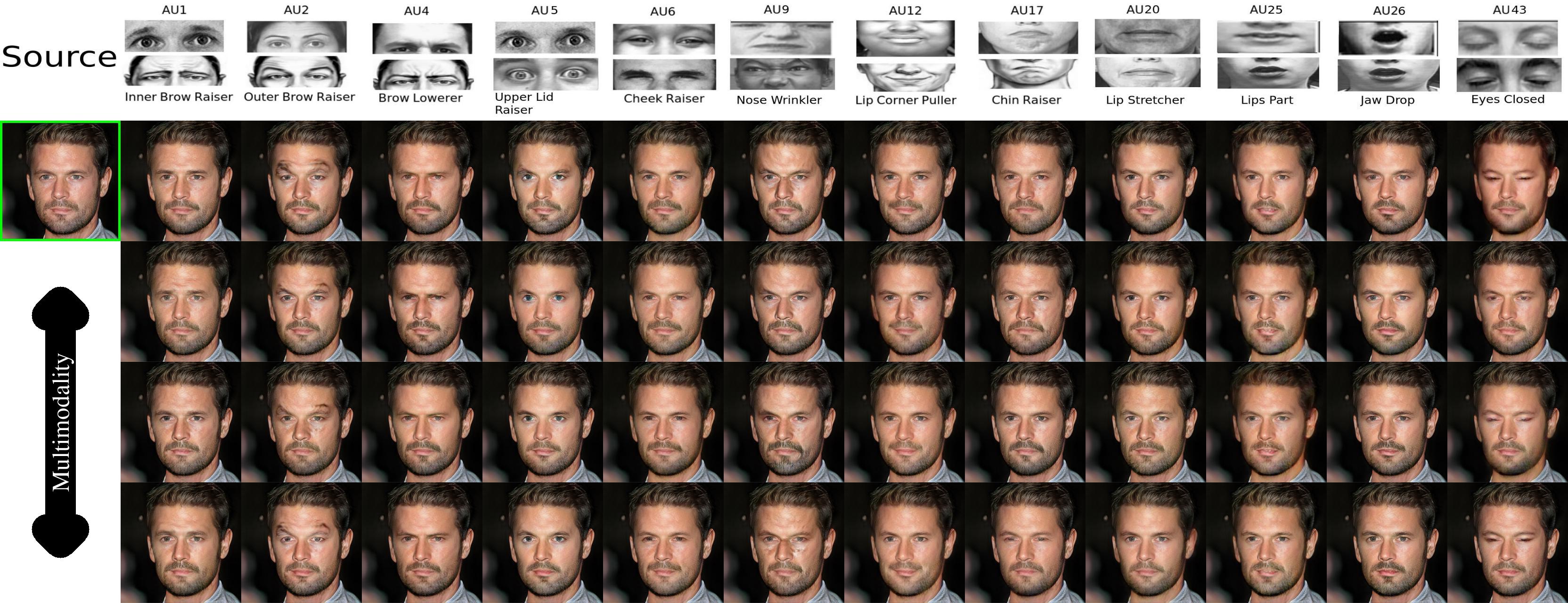

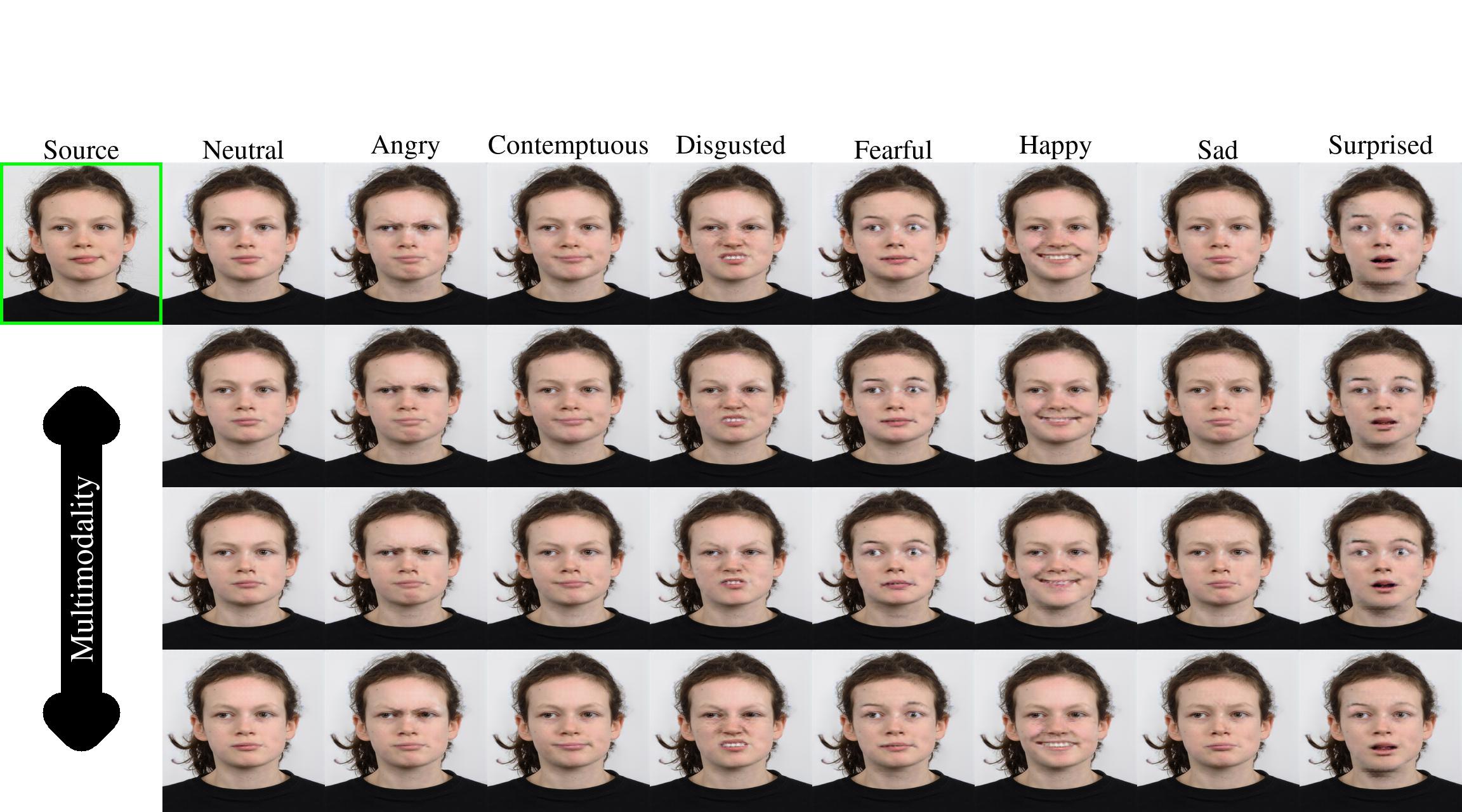

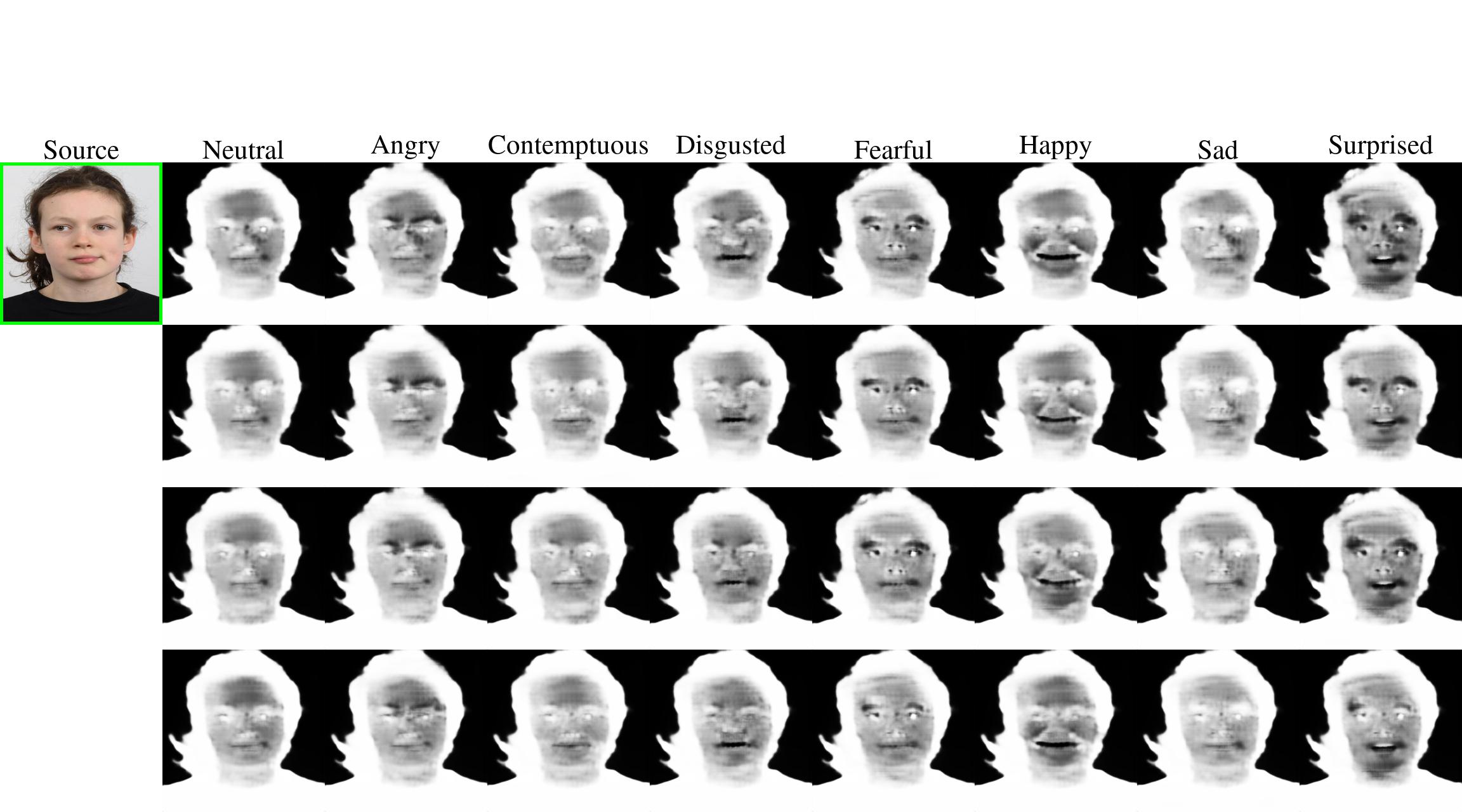

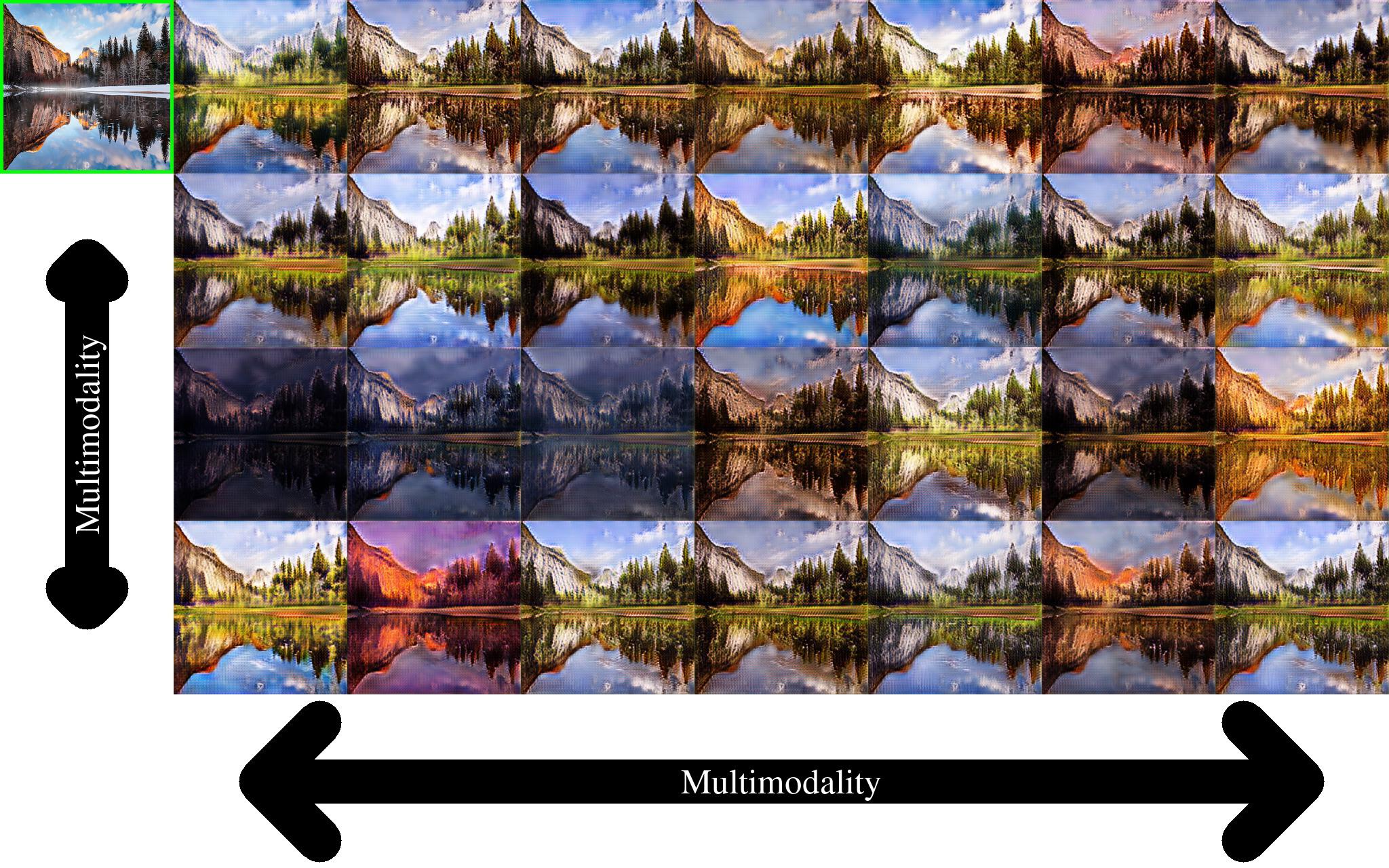

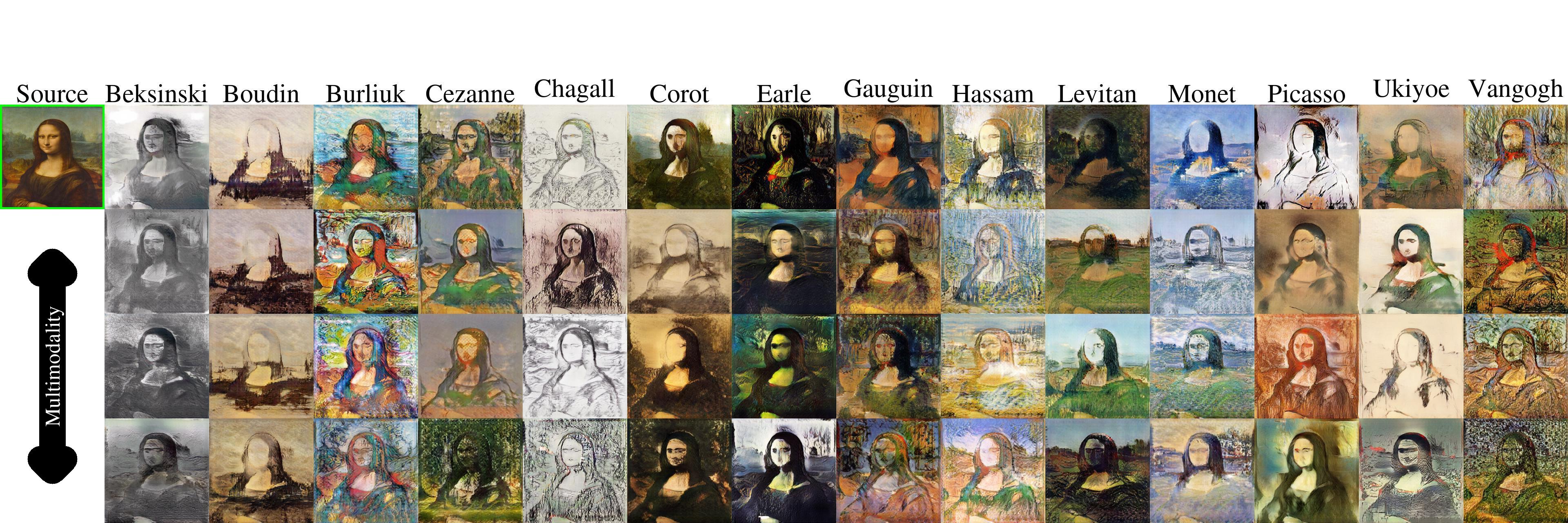

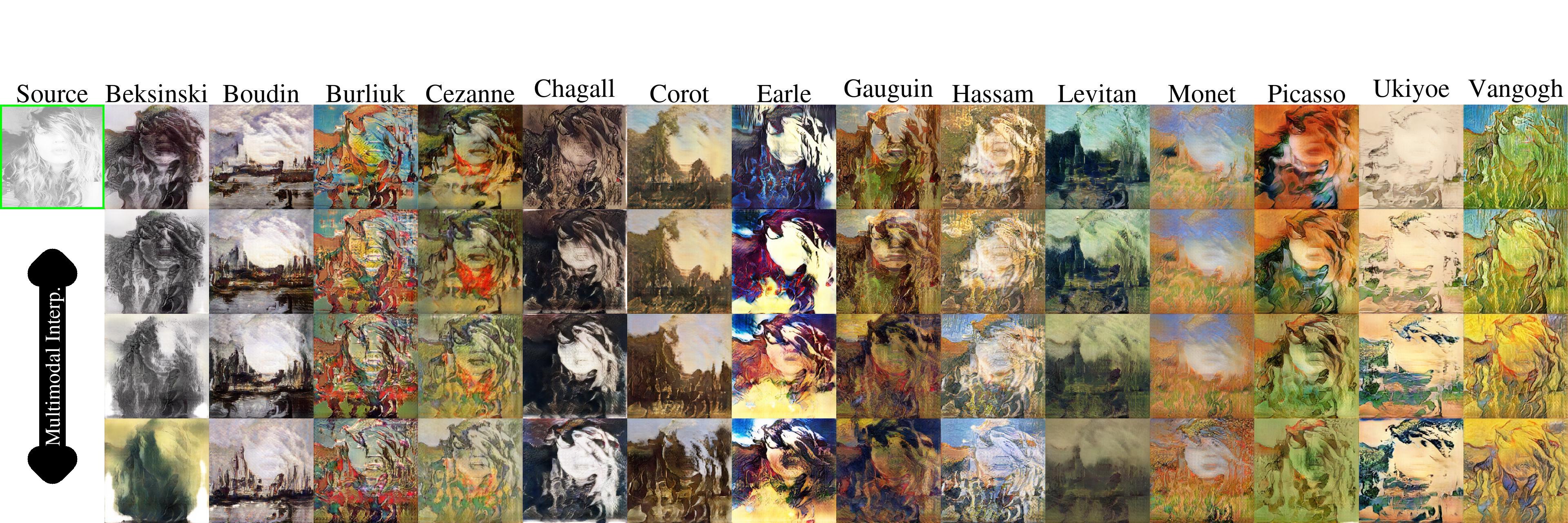

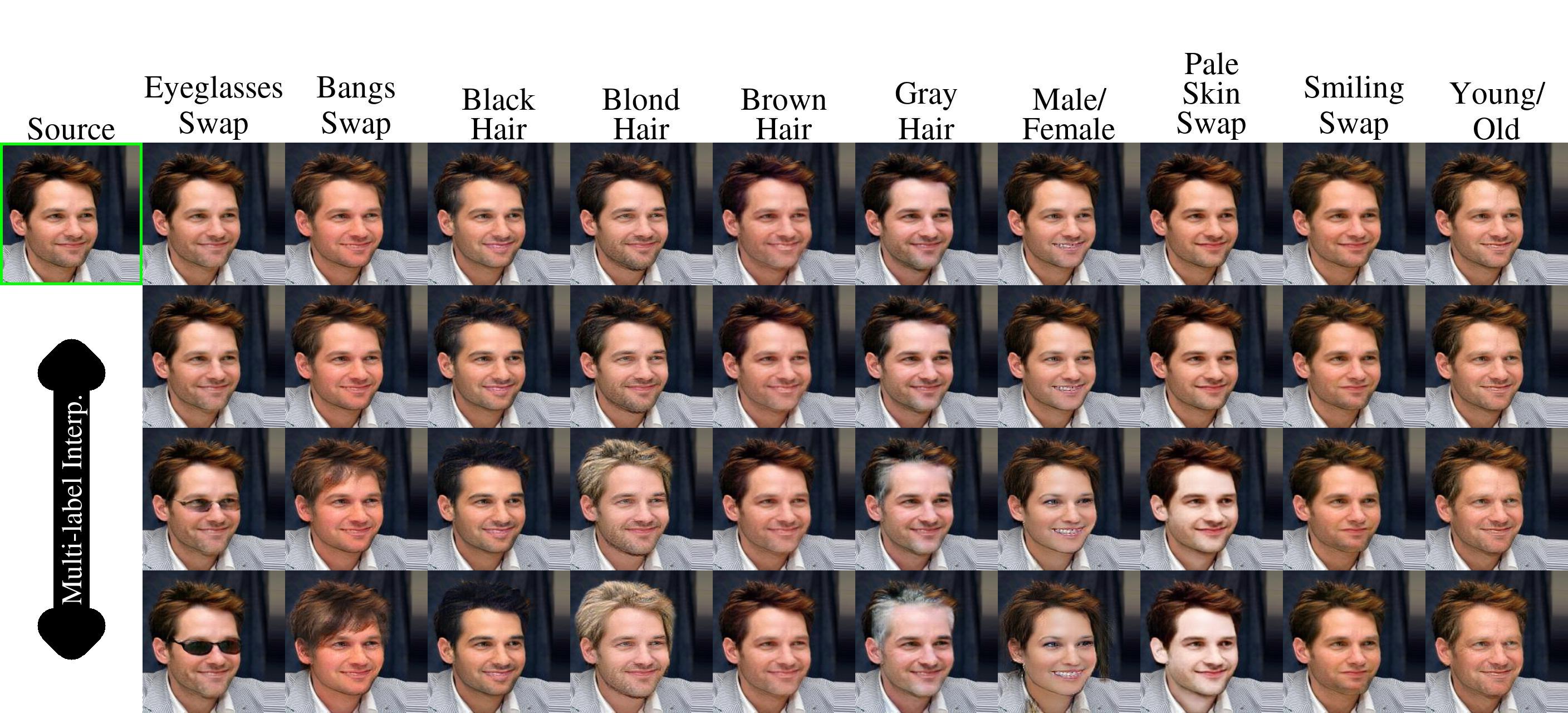

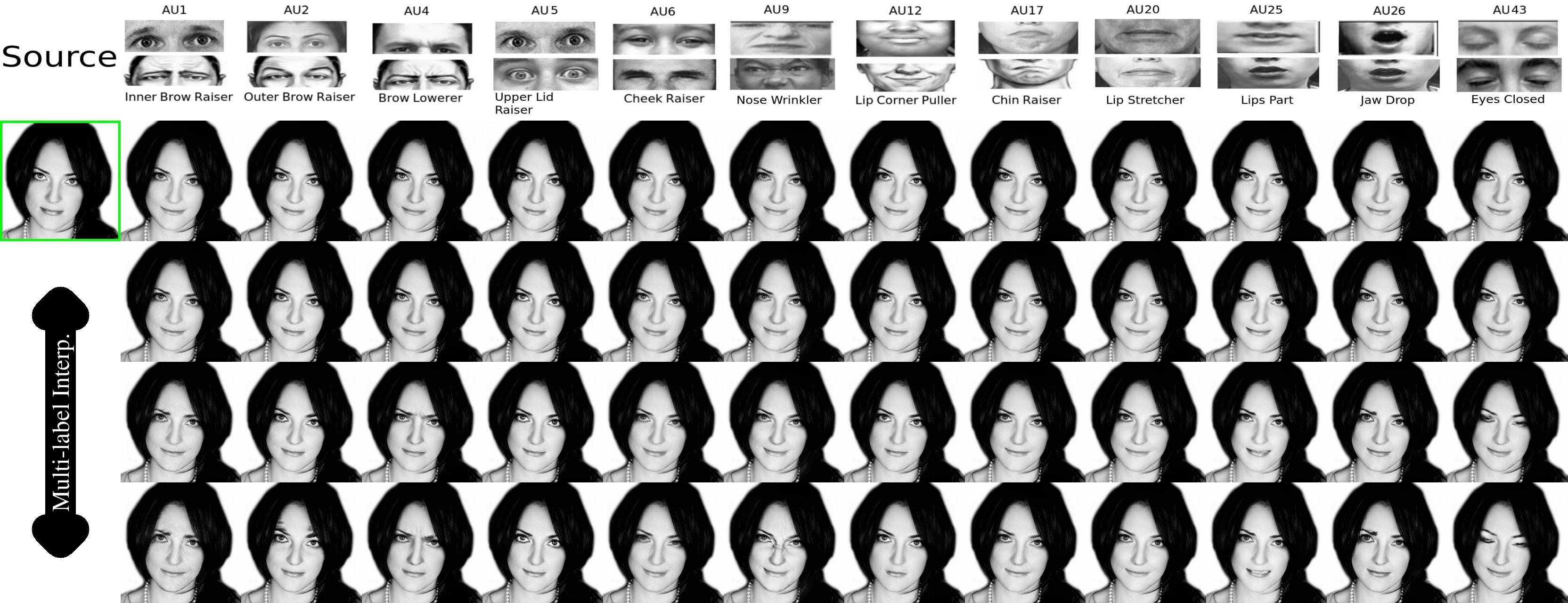

This repository provides a PyTorch implementation of SMIT. SMIT can stochastically translate an input image to multiple domains using only a single generator and a single discriminator. It needs only a target domain (a binary vector, e.g., [0,1,0,1,1] for 5 different domains) and random Gaussian noise.
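As a rough illustration of the two inputs described above, the sketch below builds a binary target-domain vector and a Gaussian noise vector using only the standard library. The attribute names, the noise size of 16, and the helpers `target_vector`, `gaussian_noise` and `make_generator_inputs` are hypothetical. The real SMIT code works with PyTorch tensors, and how the label and the noise are fed to the generator is decided by its architecture.

```python
import random
from typing import List, Optional, Sequence, Tuple

# Hypothetical attribute order, for illustration only; in SMIT the
# attribute list comes from the dataset's own configuration.
ATTRIBUTES = ["Bangs", "Eyeglasses", "Male", "Smiling", "Young"]


def target_vector(wanted: Sequence[str],
                  attributes: Sequence[str] = ATTRIBUTES) -> List[int]:
    """Encode the requested attributes as a binary multi-label vector."""
    unknown = set(wanted) - set(attributes)
    if unknown:
        raise ValueError(f"unknown attributes: {sorted(unknown)}")
    return [1 if name in wanted else 0 for name in attributes]


def gaussian_noise(dim: int = 16, seed: Optional[int] = None) -> List[float]:
    """Draw a standard normal noise vector (dim=16 is an arbitrary choice)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def make_generator_inputs(wanted: Sequence[str], dim: int = 16,
                          seed: Optional[int] = None) -> Tuple[List[int], List[float]]:
    """Hypothetical helper: pair one target label with one noise sample."""
    return target_vector(wanted), gaussian_noise(dim, seed)


if __name__ == "__main__":
    label, z = make_generator_inputs(["Eyeglasses", "Smiling", "Young"], seed=0)
    print(label)  # [0, 1, 0, 1, 1]
```

In this picture, keeping the label fixed and resampling the noise is what yields several different outputs for the same target domain.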

SMIT: Stochastic Multi-Label Image-to-Image Translation

Andrés Romero¹, Pablo Arbeláez¹, Luc Van Gool², Radu Timofte²

¹ Biomedical Computer Vision (BCV) Lab, Universidad de Los Andes.

² Computer Vision Lab (CVL), ETH Zürich.

@article{romero2019smit,
  title={SMIT: Stochastic Multi-Label Image-to-Image Translation},
  author={Romero, Andr{\'e}s and Arbel{\'a}ez, Pablo and Van Gool, Luc and Timofte, Radu},
  journal={ICCV Workshops},
  year={2019}
}

$ git clone https://github.com/BCV-Uniandes/SMIT.git

$ cd SMIT

To download the CelebA dataset:

$ bash generate_data/download.sh

To train on CelebA:

$ ./main.py --GPU=$gpu_id --dataset_fake=CelebA

Each dataset must have `datasets/<dataset>.py` and `datasets/<dataset>.yaml` files. All models and figures are stored at `snapshot/models/$dataset_fake/<epoch>_<iter>.pth` and `snapshot/samples/$dataset_fake/<epoch>_<iter>.jpg`, respectively.
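The file conventions above can be checked before launching a run. This is a minimal sketch, not part of SMIT: `missing_dataset_files` and `snapshot_paths` are hypothetical helpers, and the file-name format assumes plain integers for `<epoch>` and `<iter>`, which SMIT may format differently (for example, with zero padding).

```python
from pathlib import Path
from typing import List, Tuple


def missing_dataset_files(dataset: str, root: str = ".") -> List[str]:
    """List which of datasets/<dataset>.py and datasets/<dataset>.yaml are absent."""
    required = [Path(root) / "datasets" / f"{dataset}.{ext}" for ext in ("py", "yaml")]
    return [str(p) for p in required if not p.is_file()]


def snapshot_paths(dataset_fake: str, epoch: int, iteration: int,
                   root: str = "snapshot") -> Tuple[Path, Path]:
    """Build the (model, sample) paths for one epoch/iteration.

    Assumes plain integers in the file name; SMIT's actual formatting
    may differ.
    """
    stem = f"{epoch}_{iteration}"
    model = Path(root) / "models" / dataset_fake / f"{stem}.pth"
    sample = Path(root) / "samples" / dataset_fake / f"{stem}.jpg"
    return model, sample


if __name__ == "__main__":
    print(missing_dataset_files("CelebA"))  # [] when both files exist
    model, sample = snapshot_paths("CelebA", 3, 1500)
    print(model.as_posix(), sample.as_posix())
```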

To test a trained model:

$ ./main.py --GPU=$gpu_id --dataset_fake=CelebA --mode=test

SMIT expects the `.pth` weights to be stored at `snapshot/models/$dataset_fake/` (alternatively, provide `--pretrained_model=location/model.pth`). If there are several models, it takes the last one in alphabetical order.
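The checkpoint selection rule can be mimicked with a plain string sort. This is a sketch, not SMIT's code, and `last_alphabetical` and `pick_checkpoint` are hypothetical names. It also illustrates a caveat of alphabetical order: unless the epoch numbers are zero-padded, a name starting with `9_` sorts after one starting with `10_`, so the last alphabetical file is not necessarily the most recent one. Passing `--pretrained_model` sidesteps this.

```python
from pathlib import Path
from typing import Iterable, Optional


def last_alphabetical(names: Iterable[str]) -> Optional[str]:
    """Return the name that sorts last as a plain string, or None if empty."""
    ordered = sorted(names)
    return ordered[-1] if ordered else None


def pick_checkpoint(model_dir: str) -> Optional[Path]:
    """Pick the alphabetically last .pth file in model_dir (None if none)."""
    directory = Path(model_dir)
    if not directory.is_dir():
        return None
    name = last_alphabetical(p.name for p in directory.glob("*.pth"))
    return directory / name if name is not None else None


if __name__ == "__main__":
    print(last_alphabetical(["1_500.pth", "2_100.pth"]))   # 2_100.pth
    # String order is not numeric order: "9_..." sorts after "10_...".
    print(last_alphabetical(["10_100.pth", "9_100.pth"]))  # 9_100.pth
```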

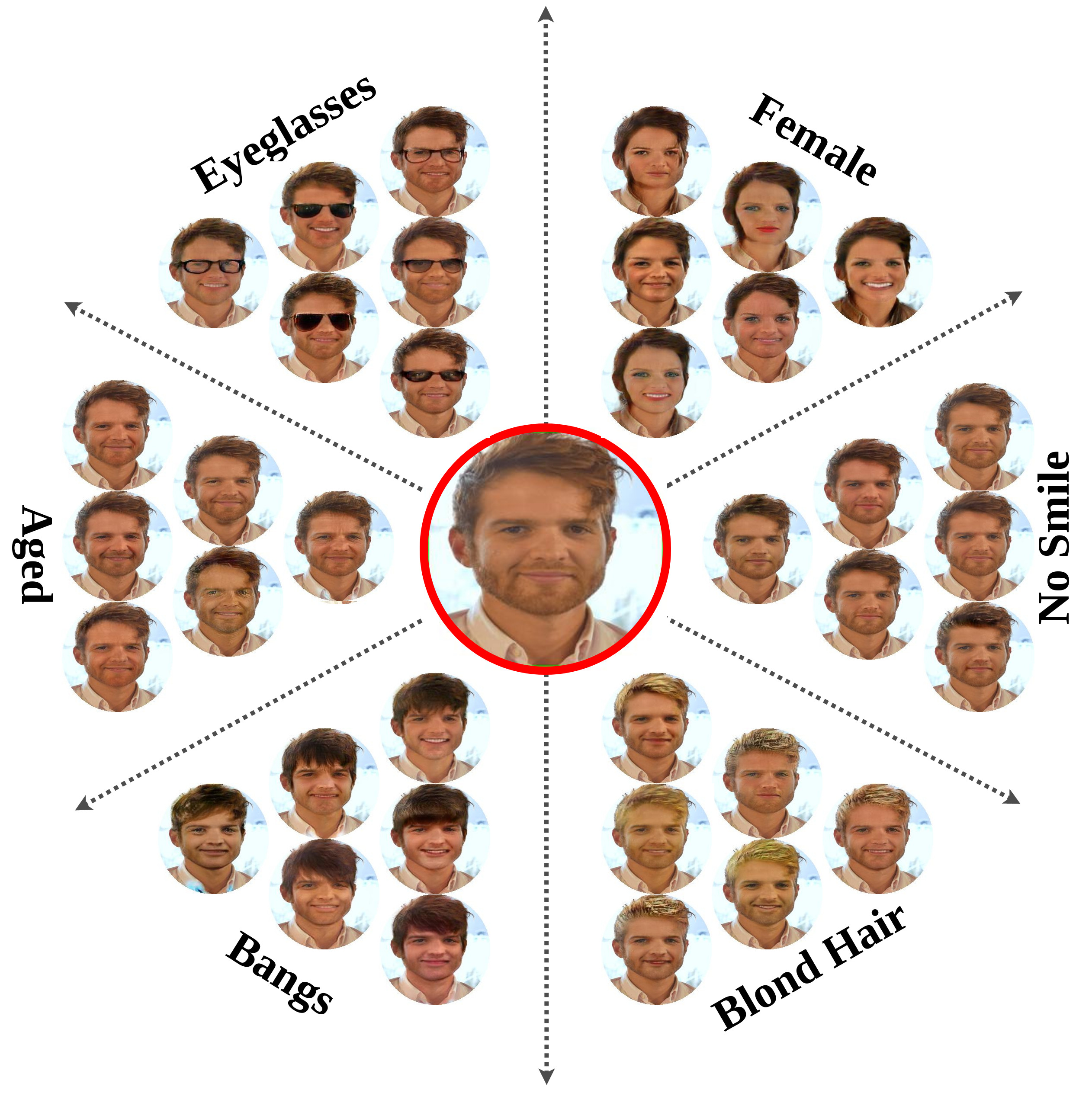

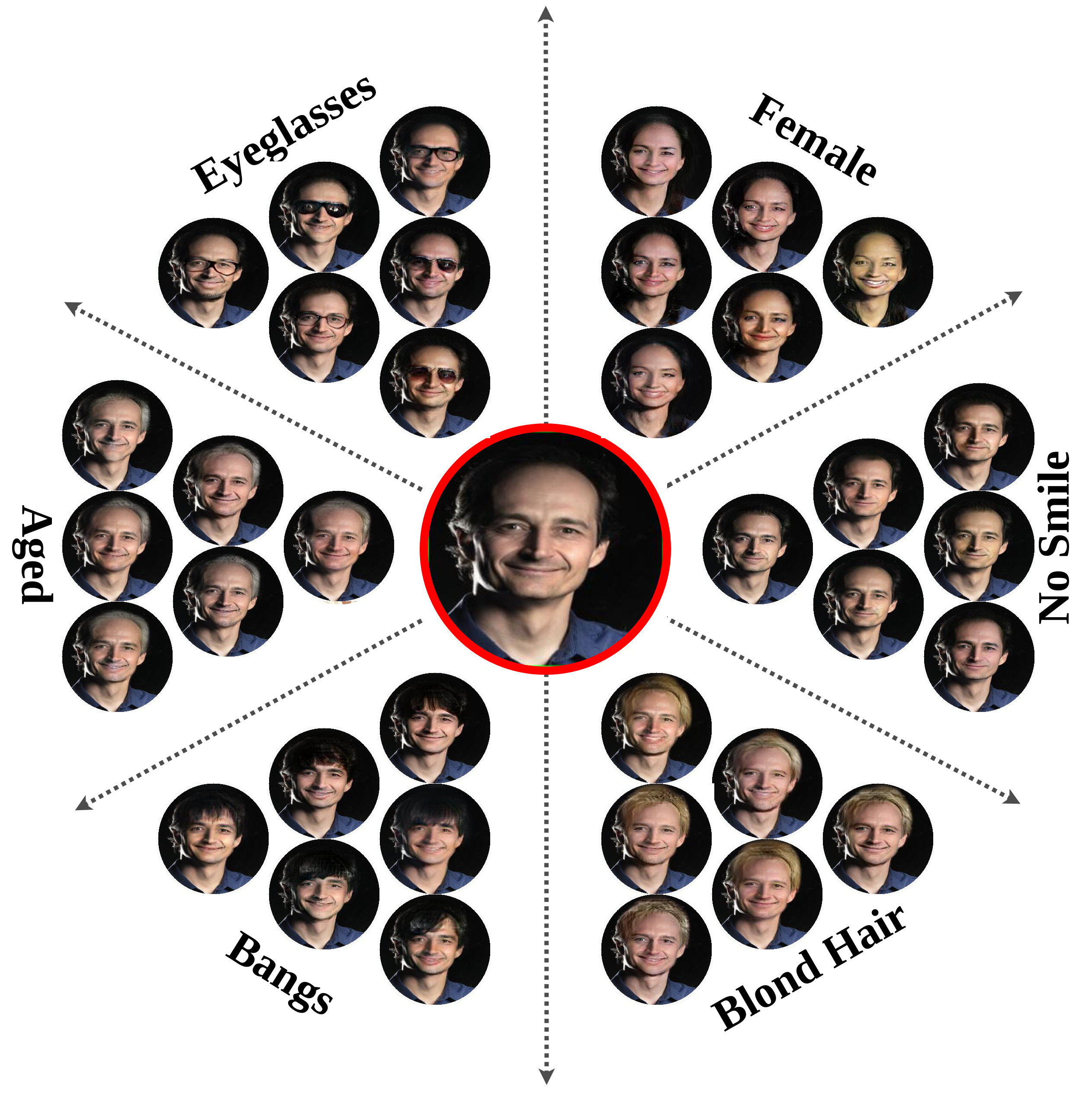

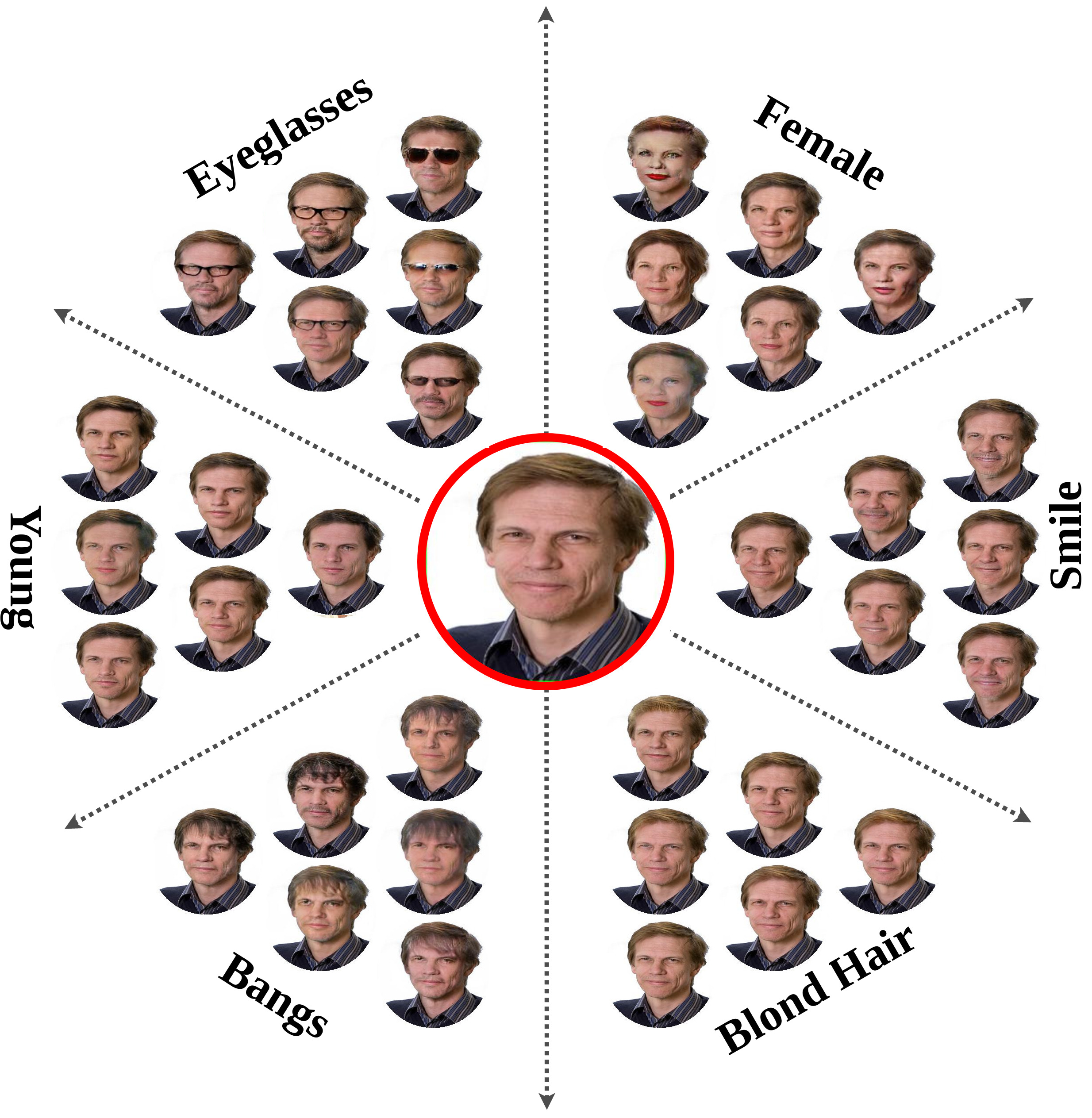

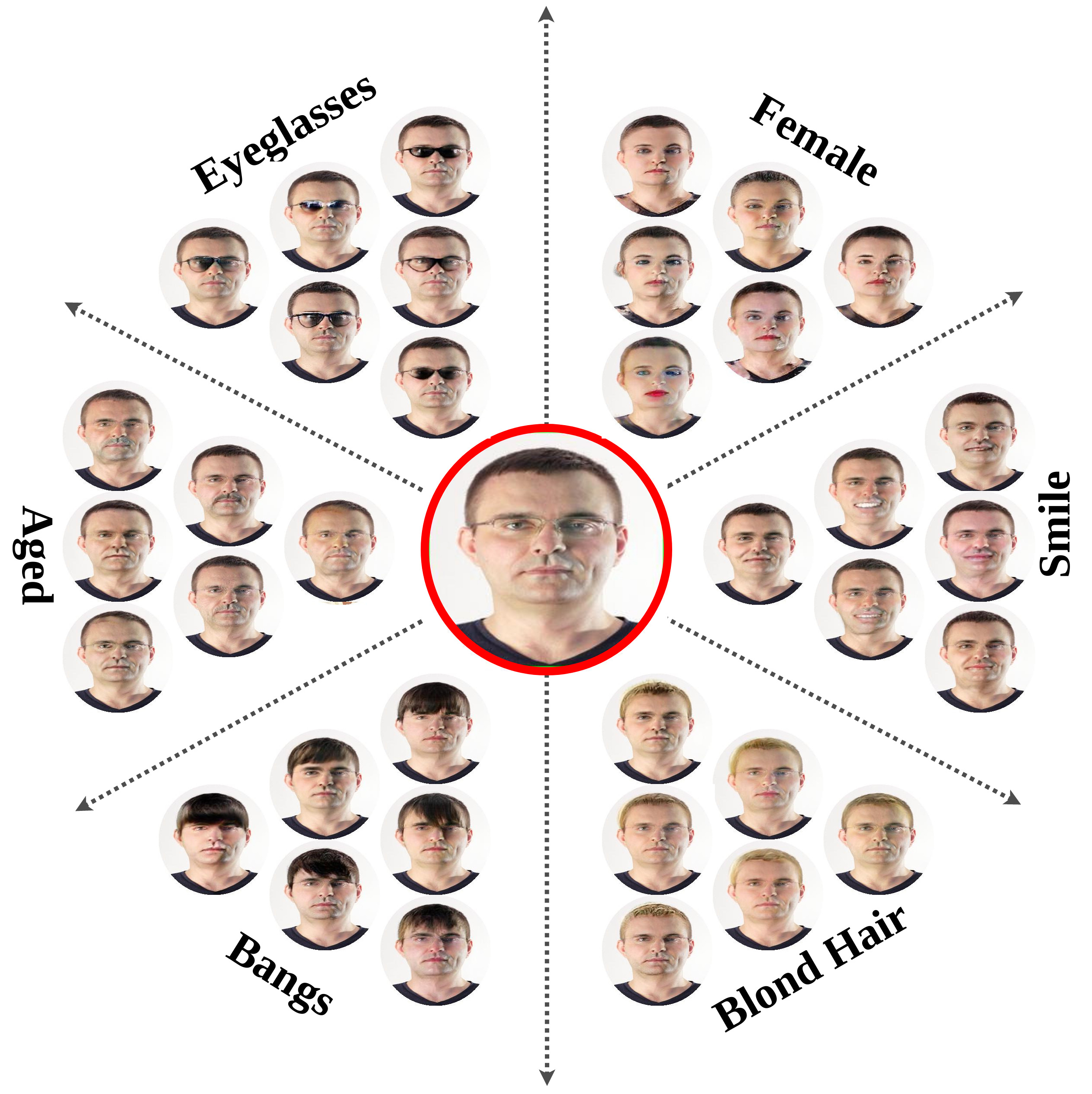

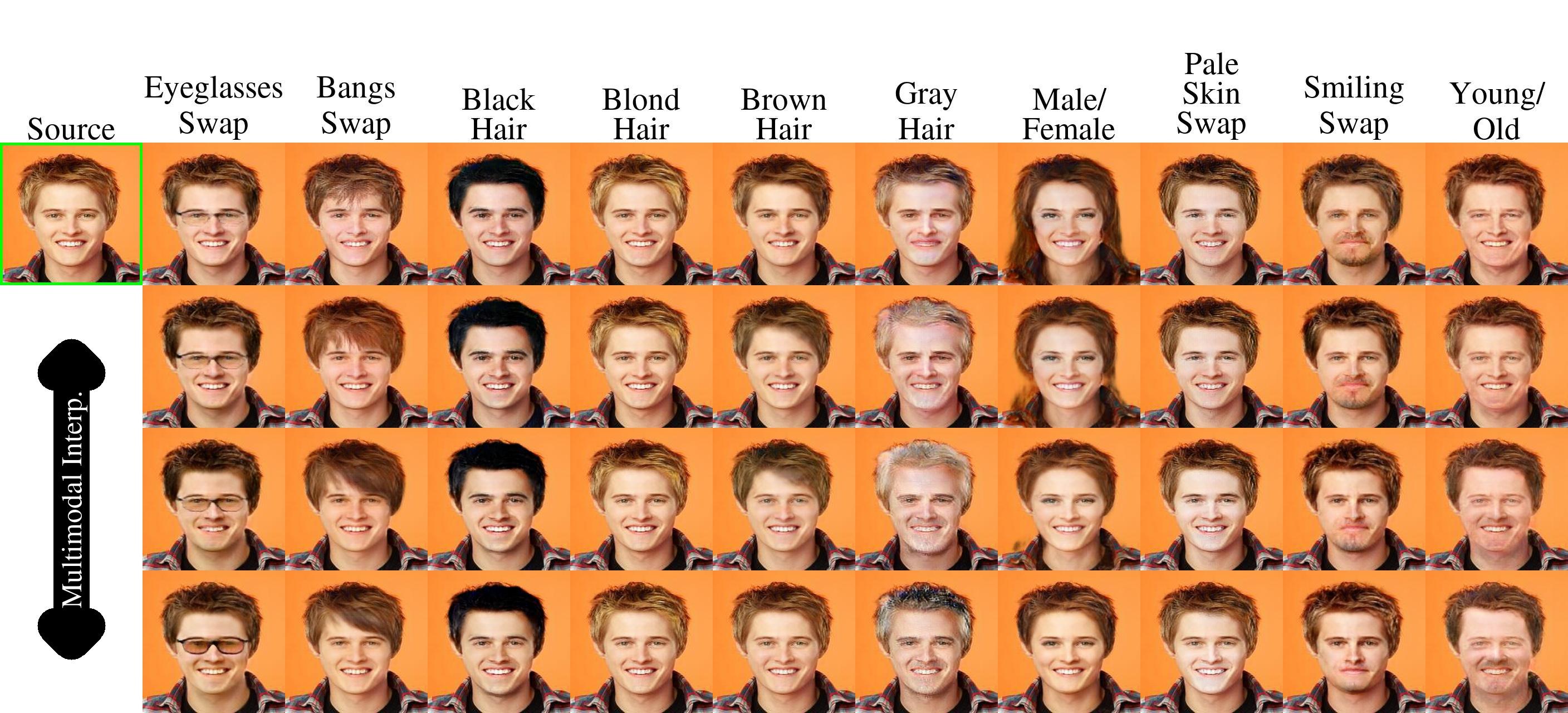

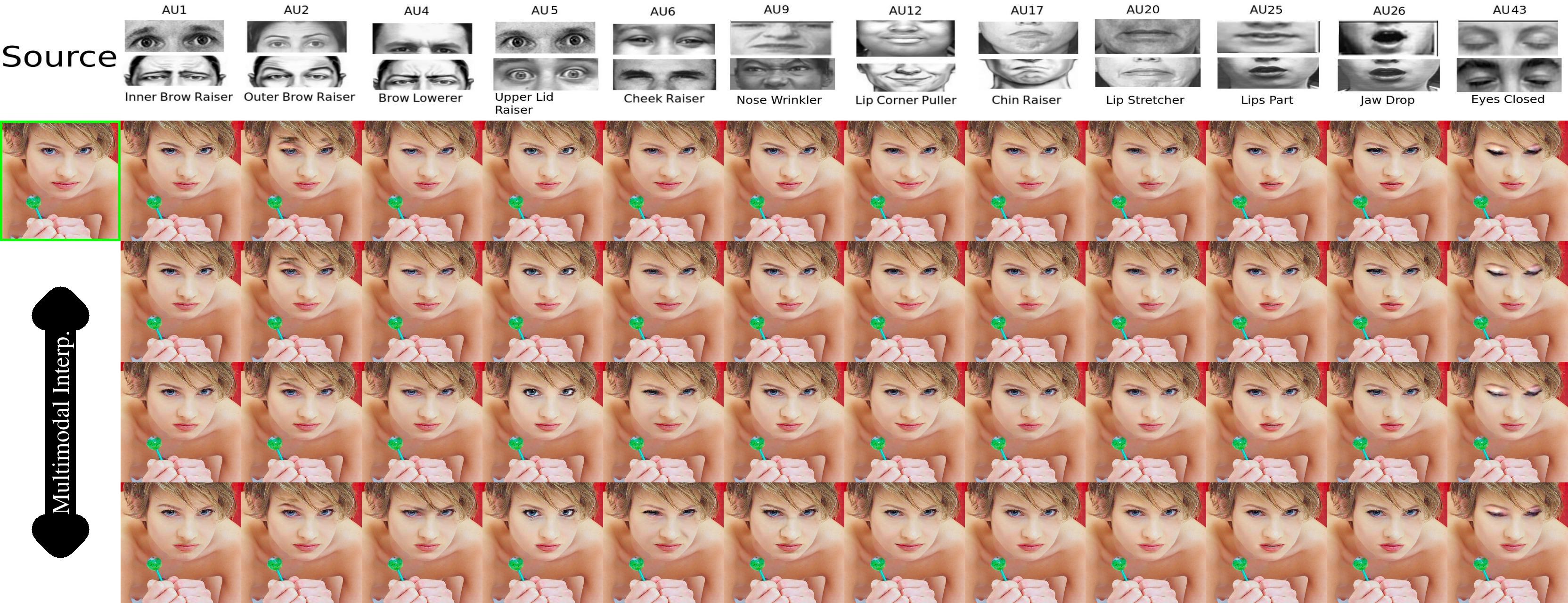

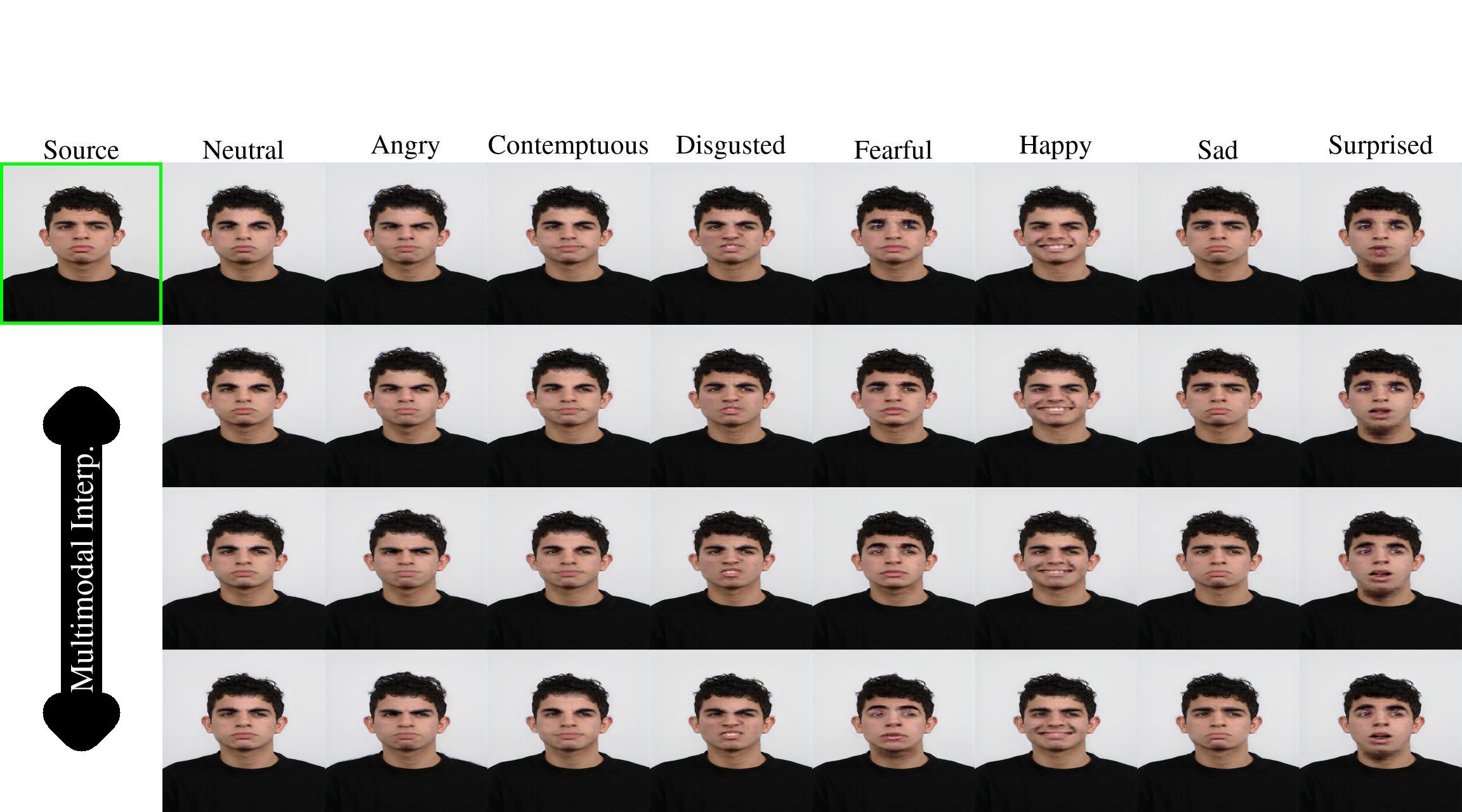

To run the demo:

$ ./main.py --GPU=$gpu_id --dataset_fake=CelebA --mode=test --DEMO_PATH=location/image_jpg/or/location/dir

DEMO performs the transformation per attribute, that is, it swaps each attribute with respect to the original input, as in the images below. Therefore, if `DEMO_PATH` is a single image, its real attributes should be provided with `--DEMO_LABEL`. If they are not provided, the discriminator acts as a classifier for the real attributes.
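A minimal sketch of the per-attribute demo logic, assuming binary labels: when no `--DEMO_LABEL` is given, the real label is estimated by thresholding per-attribute classifier logits (here at a sigmoid probability of 0.5), and each target then flips exactly one attribute of that label. `labels_from_logits` and `per_attribute_targets` are hypothetical helpers, not SMIT functions, and the plain bit flip ignores mutually exclusive attributes (for example, several hair colors), which the actual code may handle differently.

```python
import math
from typing import List, Sequence


def labels_from_logits(logits: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Binarize per-attribute logits: 1 where sigmoid(logit) > threshold.

    Comparing the logit against log(t / (1 - t)) is equivalent to comparing
    sigmoid(logit) against t, and it avoids overflow in math.exp.
    """
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must be in (0, 1)")
    cut = math.log(threshold / (1.0 - threshold))
    return [1 if x > cut else 0 for x in logits]


def per_attribute_targets(real: Sequence[int]) -> List[List[int]]:
    """One target label per attribute: copy the real label, flip one bit."""
    targets = []
    for i in range(len(real)):
        target = list(real)
        target[i] = 1 - target[i]
        targets.append(target)
    return targets


if __name__ == "__main__":
    real = labels_from_logits([2.3, -0.7, 1.1])  # [1, 0, 1]
    for target in per_attribute_targets(real):
        print(target)  # [0, 0, 1], then [1, 1, 1], then [1, 0, 0]
```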

Models were trained using PyTorch 1.0.

For multiple GPUs we use Horovod. Example for training with 4 GPUs:

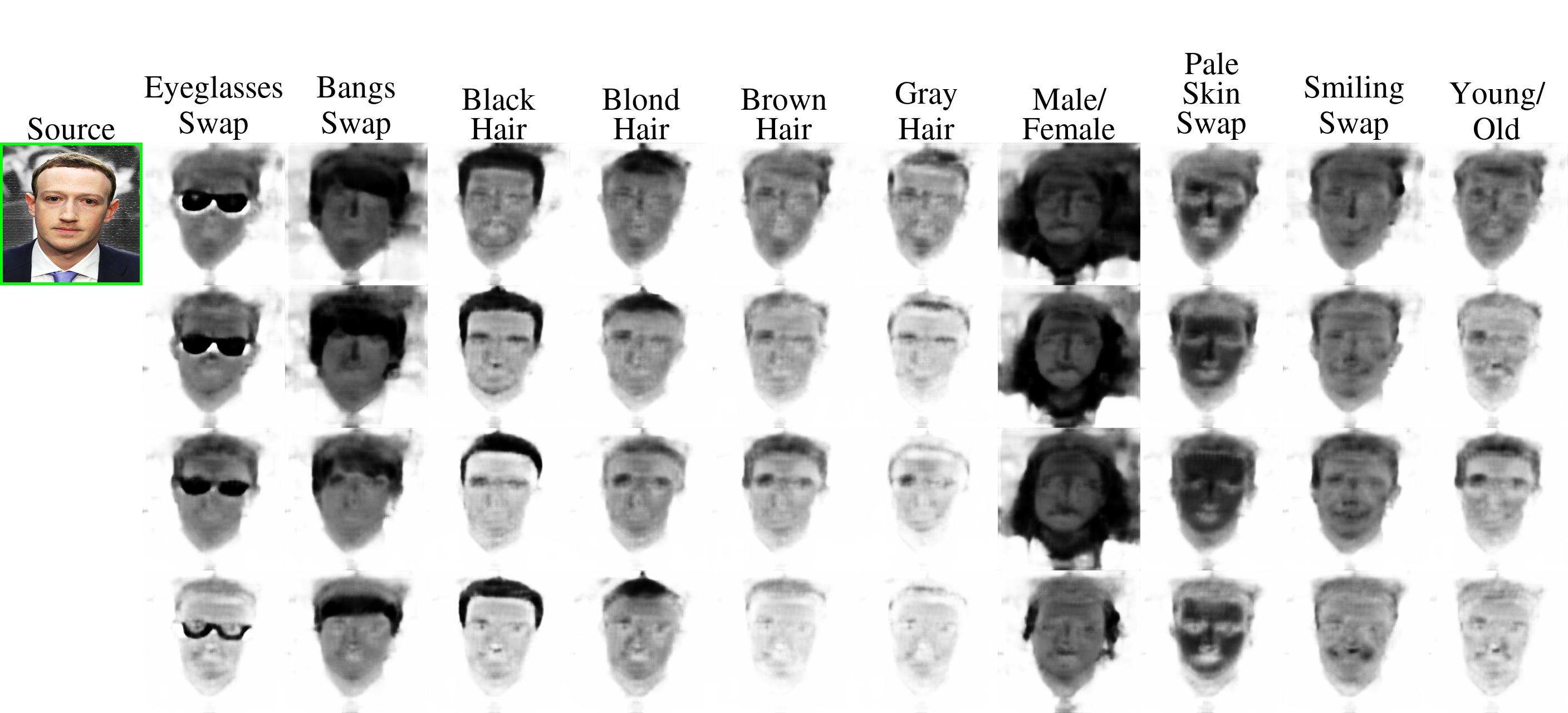

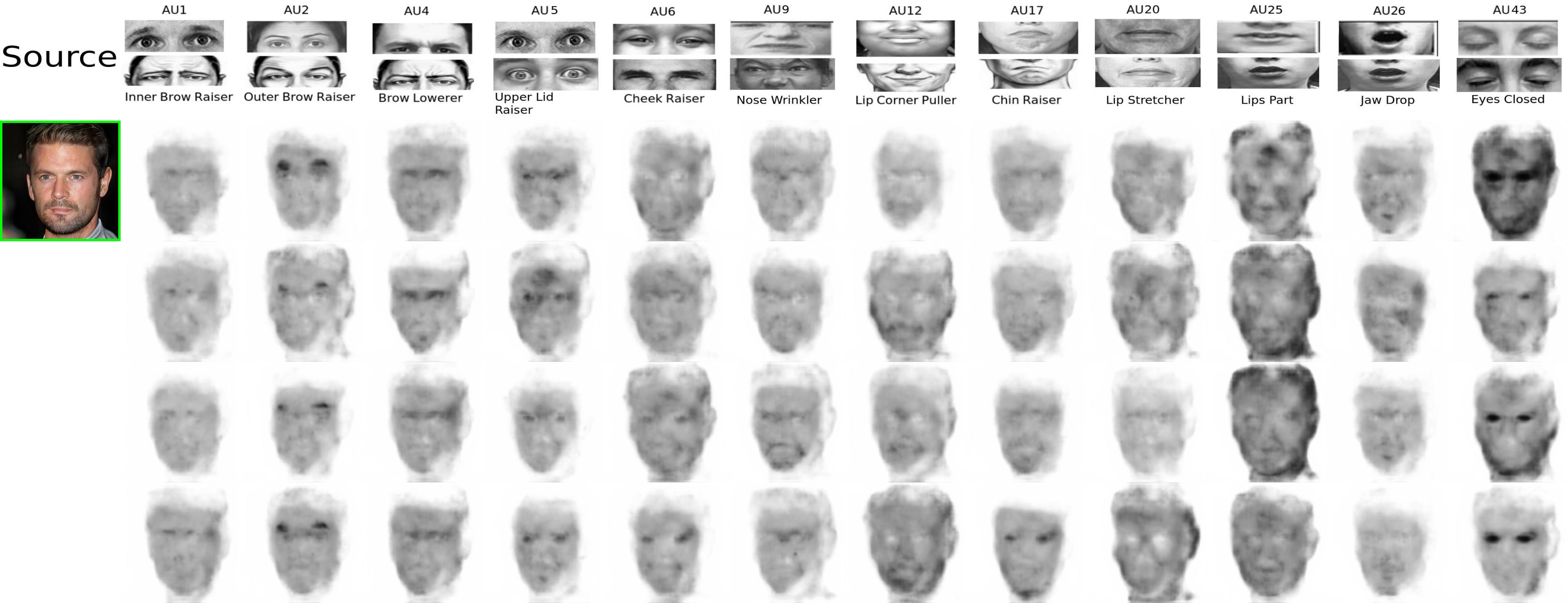

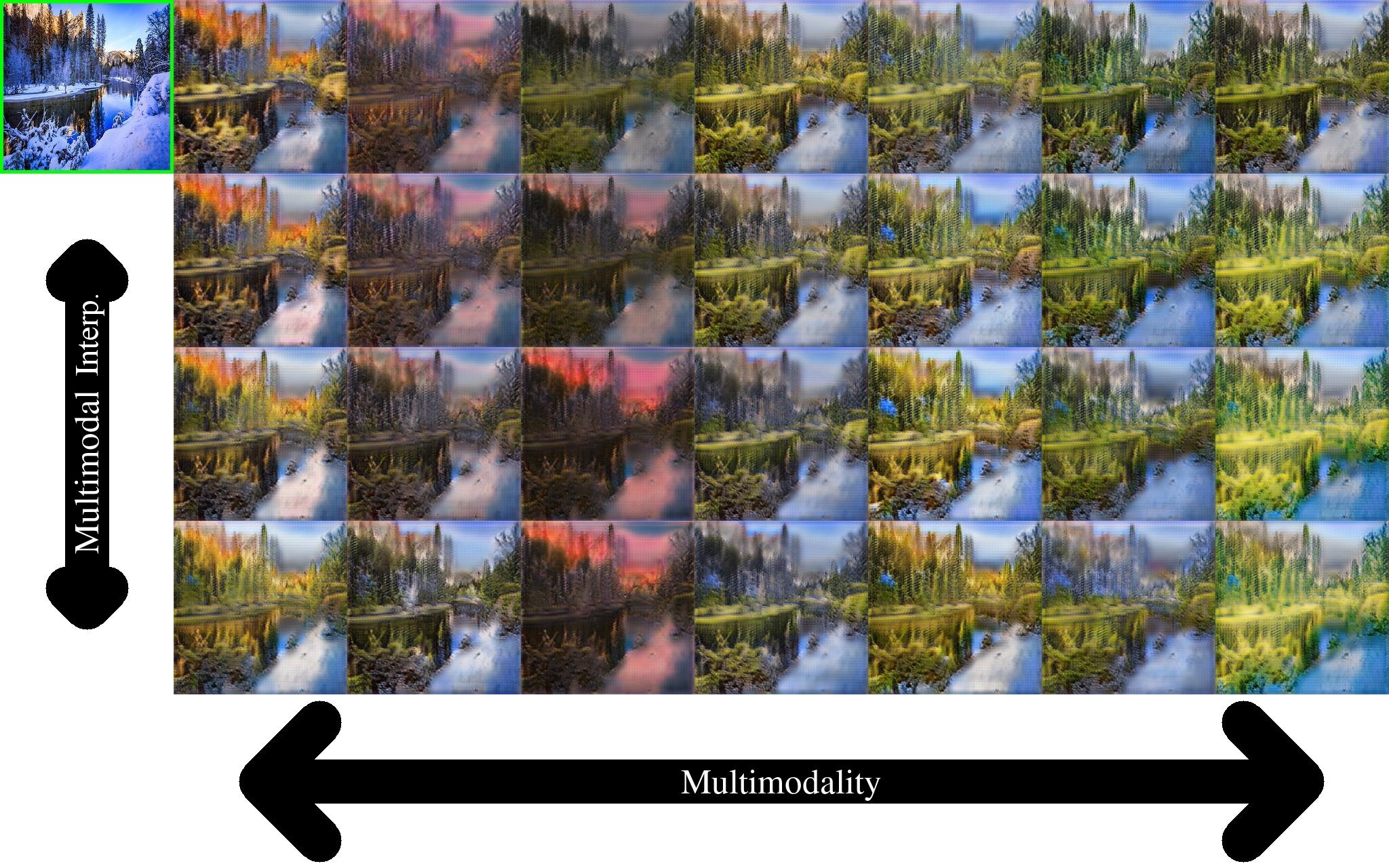

mpirun -n 4 ./main.py --dataset_fake=CelebAFirst column (original input) -> Last column (Opposite attributes: smile, age, genre, sunglasses, bangs, color hair). Up: Continuous interpolation for the fake image. Down: Continuous interpolation for the attention mechanism.
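The continuous interpolation in the figure can be pictured as walking along a straight line between two conditioning vectors. This sketch assumes linear interpolation between a start and an end vector (for instance, the real and the opposite label); which inputs SMIT actually interpolates, and how, is not stated here, and `lerp` and `interpolation_path` are hypothetical names.

```python
from typing import List, Sequence


def lerp(a: Sequence[float], b: Sequence[float], t: float) -> List[float]:
    """Linear interpolation: returns a at t=0 and b at t=1."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def interpolation_path(a: Sequence[float], b: Sequence[float],
                       steps: int) -> List[List[float]]:
    """Evenly spaced points from a to b, both endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp(a, b, i / (steps - 1)) for i in range(steps)]


if __name__ == "__main__":
    real, opposite = [1.0, 0.0], [0.0, 1.0]
    for point in interpolation_path(real, opposite, 5):
        print(point)  # [1.0, 0.0], [0.75, 0.25], [0.5, 0.5], [0.25, 0.75], [0.0, 1.0]
```

Each intermediate vector would condition one column of the interpolation strip, so the number of steps sets how many columns the figure shows.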