This repository contains the code to train and test autoregressive language models like ChatGPT from scratch. I also used it to train the French open-source DimensionGPT models.

Using this repository, I trained DimensionGPT-0.2B, a small 0.2B-parameter language model, on 50B tokens with my personal RTX 3090 GPU in ≈570 hours.

The model is based on the transformer architecture (only the decoder part) from the paper Attention is All You Need by Google Brain (2017), with a few improvements:

- I replaced the default normalization layer with Root Mean Square Layer Normalization (RMSNorm) from the paper Root Mean Square Layer Normalization by Edinburgh University (2019)
- I moved the normalization layers before the transformer blocks (instead of after) like in the paper On Layer Normalization in the Transformer Architecture by Microsoft Research (2020)
- I replaced the ReLU activation with the SwiGLU activation from the paper GLU Variants Improve Transformer by Google (2020)
- I implemented Grouped-Query Attention (GQA) from the paper GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints by Google Research (2023)
- I replaced the absolute positional embedding with the Rotary Position Embedding (RoPE) from the paper RoFormer: Enhanced Transformer with Rotary Position Embedding by Zhuiyi Technology (2023)
- I implemented Sliding Window Attention (SWA) from the paper Longformer: The Long-Document Transformer by Allen Institute (2020)
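To make three of these changes concrete, here is a minimal pure-Python sketch of RMSNorm, the GQA head mapping, and a sliding-window causal mask. It is an illustration, not the code from this repository: the function names, the `eps` value, and the window convention (each token sees itself and the `window - 1` tokens before it) are assumptions.

```python
import math


def rms_norm(x, gain, eps=1e-6):
    """RMSNorm: divide a vector by its root mean square, then apply a learned gain.

    `eps` is a hypothetical stabilizer value, not taken from the repository.
    """
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [g * v / rms for v, g in zip(x, gain)]


def kv_head_for_query_head(query_head, n_heads=16, n_kv_heads=4):
    """GQA: consecutive query heads share one key/value head.

    Assumes "number of grouped heads" means the number of key/value heads.
    """
    group_size = n_heads // n_kv_heads
    return query_head // group_size


def sliding_window_causal_mask(seq_len, window):
    """True where query position i may attend to key position j.

    Assumed convention: causal (j <= i) and at most `window` positions back,
    counting the current token.
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


if __name__ == "__main__":
    print(rms_norm([3.0, 4.0], [1.0, 1.0]))
    print([kv_head_for_query_head(h) for h in range(16)])
    for row in sliding_window_causal_mask(5, 2):
        print(["x" if allowed else "." for allowed in row])
```

With 16 query heads and 4 key/value heads, each key/value head serves 4 query heads, which shrinks the key and value projections by a factor of 4.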

Here are the main parameters of the architecture:

| Parameter | Value |
|---|---|
| Embedding dimension | 1,024 |
| Number of layers | 16 |
| Heads dimension | 64 |
| Feed forward hidden dimension | 2,730 |
| Number of heads | 16 |
| Number of grouped heads | 4 |
| Window size | 256 |
| Context length | 512 |
| Vocab size | 32,000 |

The resulting model has 208,929,792 trainable parameters and fits on a single RTX 3090 GPU with a batch size of 16 for training using mixed precision. For inference only, the model will probably fit on any modern GPU.
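The parameter count can be reproduced from the table above. The sketch below is a back-of-the-envelope check, not code from the repository, and it rests on assumptions that happen to give the stated total: no bias terms, a single embedding matrix shared between input and output, 4 key/value heads for GQA, three weight matrices in the SwiGLU feed-forward, two RMSNorm gains per layer, and one final RMSNorm.

```python
def count_parameters(
    vocab_size=32_000,
    embedding_dim=1_024,
    n_layers=16,
    head_dim=64,
    n_heads=16,
    n_kv_heads=4,
    ffn_hidden_dim=2_730,
):
    """Count weights under the assumptions listed in the text above."""
    # Token embedding, assumed to be shared with the output projection.
    embedding = vocab_size * embedding_dim

    # Attention: full-size query and output projections, reduced key/value projections.
    query = embedding_dim * n_heads * head_dim
    key_value = 2 * embedding_dim * n_kv_heads * head_dim
    output = n_heads * head_dim * embedding_dim
    attention = query + key_value + output

    # SwiGLU feed-forward: gate, up and down projections.
    feed_forward = 3 * embedding_dim * ffn_hidden_dim

    # One RMSNorm gain vector before attention and one before the feed-forward.
    norms = 2 * embedding_dim

    final_norm = embedding_dim
    return embedding + n_layers * (attention + feed_forward + norms) + final_norm


if __name__ == "__main__":
    print(f"{count_parameters():,}")
```

Note that 2,730 is 8/3 × 1,024 rounded down, which keeps the three-matrix SwiGLU block close in size to a classic two-matrix feed-forward with a hidden dimension of 4 × 1,024.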

The dataset used to train this model is exclusively in French and is a mix of multiple sources:

| Source | Documents | Tokens | Multiplier | Ratio |
|---|---|---|---|---|
| Common Crawl (FR) | 21,476,796 | 35,821,271,160 | 1.0 | 76.89 % |
| Wikipedia (FR) | 2,700,373 | 1,626,389,831 | 4.0 | 13.96 % |
| French news articles | 20,446,435 | 11,308,851,150 | 0.3 | 7.28 % |
| French books | 29,322 | 2,796,450,308 | 0.2 | 1.20 % |
| French institutions documents | 87,103 | 147,034,958 | 2.0 | 0.63 % |
| Others | 2,761 | 7,287,322 | 2.0 | 0.03 % |
| Total | 44,742,790 | 51,707,284,729 | - | 100.00 % |
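The Ratio column is consistent with weighting each source's token count by its multiplier and normalizing, while the Total row sums the raw token counts. The short sketch below reproduces the ratios under that reading; the names `SOURCES` and `mix_ratios` are mine, not the repository's.

```python
# (source, raw token count, sampling multiplier), copied from the table above.
SOURCES = [
    ("Common Crawl (FR)", 35_821_271_160, 1.0),
    ("Wikipedia (FR)", 1_626_389_831, 4.0),
    ("French news articles", 11_308_851_150, 0.3),
    ("French books", 2_796_450_308, 0.2),
    ("French institutions documents", 147_034_958, 2.0),
    ("Others", 7_287_322, 2.0),
]


def mix_ratios(sources):
    """Return each source's share (in percent) of the multiplier-weighted tokens."""
    weighted = {name: tokens * multiplier for name, tokens, multiplier in sources}
    total = sum(weighted.values())
    return {name: 100.0 * value / total for name, value in weighted.items()}


if __name__ == "__main__":
    for name, ratio in mix_ratios(SOURCES).items():
        print(f"{name:32s} {ratio:6.2f} %")
```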

For the tokenization, I created my own tokenizer: it first cleans the text to keep only a predefined set of characters, then uses the Byte Pair Encoding (BPE) algorithm to build the vocabulary. I trained the tokenizer on a 300-million-character subset of the dataset to get my 32,000-token vocabulary.
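As an illustration of the two stages described above, here is a toy character-level BPE trainer. It is a sketch of the general algorithm, not the tokenizer in this repository: `ALLOWED_CHARS` is a hypothetical character set, and real implementations count pairs far more efficiently than this quadratic loop.

```python
from collections import Counter

# Hypothetical set of characters to keep; the repository defines its own.
ALLOWED_CHARS = set("abcdefghijklmnopqrstuvwxyzàâçéèêëîïôùûü '-.,!?")


def clean(text):
    """Drop every character that is not in the predefined set."""
    return "".join(c for c in text if c in ALLOWED_CHARS)


def train_bpe(text, num_merges):
    """Repeatedly merge the most frequent adjacent token pair into a new token."""
    tokens = list(text)
    merges = []
    for _ in range(num_merges):
        pairs = Counter(zip(tokens, tokens[1:]))
        if not pairs:
            break
        (left, right), count = pairs.most_common(1)[0]
        if count < 2:
            break  # nothing repeats any more, so further merges are pointless
        merges.append((left, right))
        merged, i = [], 0
        while i < len(tokens):
            if i + 1 < len(tokens) and tokens[i] == left and tokens[i + 1] == right:
                merged.append(left + right)
                i += 2
            else:
                merged.append(tokens[i])
                i += 1
        tokens = merged
    return merges, tokens


if __name__ == "__main__":
    merges, tokens = train_bpe(clean("le chat et le chien"), num_merges=5)
    print(merges)
    print(tokens)
```

The final vocabulary is the set of base characters plus one new token per merge, so reaching 32,000 tokens means running the merge loop until the vocabulary hits that size.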

For the training, I used the AdamW optimizer with a learning rate schedule made of a warmup followed by a cosine decay. Here are the main hyperparameters:

| Hyperparameter | Value |
|---|---|
| Batch size (tokens) | 524,288 |
| Optimizer | AdamW |
| Learning rate | 6.0 × 10<sup>-4</sup> |
| Warmup steps | 2,000 |
| Decay steps | 100,000 |
| β1 | 0.9 |
| β2 | 0.95 |
| ε | 10<sup>-5</sup> |
| Weight decay | 0.1 |
| Gradient clipping | 1.0 |
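A warmup plus cosine decay schedule with these values could look like the sketch below. The exact shape used in this repository may differ: the linear warmup, the decay starting right after the warmup, and the `min_lr` floor of 0 are assumptions made for illustration.

```python
import math


def learning_rate(step, max_lr=6.0e-4, warmup_steps=2_000, decay_steps=100_000, min_lr=0.0):
    """Learning rate at a given optimizer step.

    Assumed shape: linear warmup from 0 to max_lr, then cosine decay
    from max_lr to min_lr (hypothetical floor) until decay_steps.
    """
    if step < warmup_steps:
        return max_lr * step / warmup_steps
    progress = min(1.0, (step - warmup_steps) / (decay_steps - warmup_steps))
    return min_lr + 0.5 * (max_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for step in (0, 1_000, 2_000, 51_000, 100_000):
        print(step, learning_rate(step))
```

The warmup keeps the first updates small while the optimizer statistics are still noisy, and the cosine decay lowers the learning rate smoothly toward the end of training.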

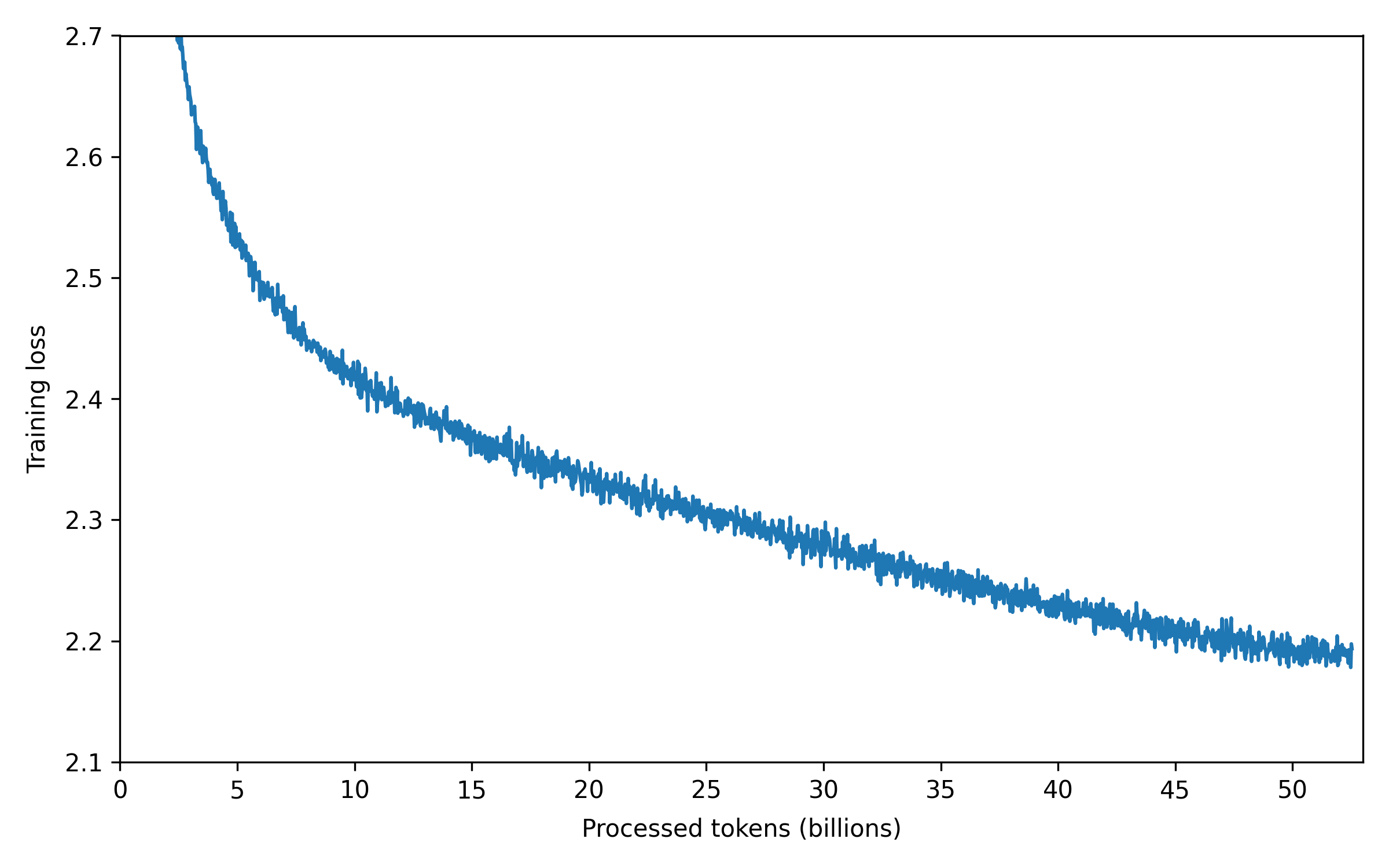

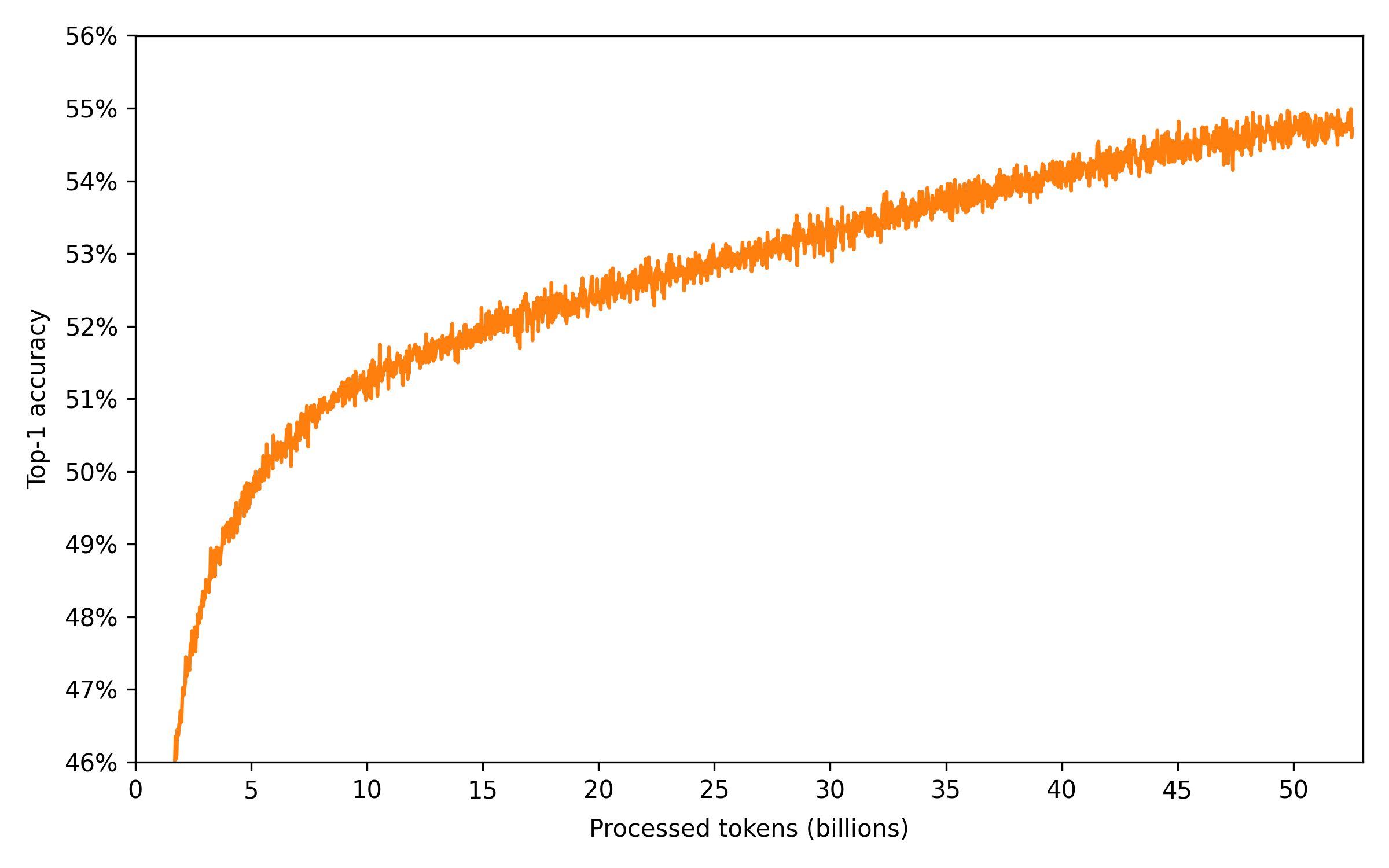

I trained the model on my personal RTX 3090 GPU for 1 epoch on the full dataset (13 times the Chinchilla optimal) using mixed precision and gradient accumulation to increase the speed and reduce the memory usage:

| Training summary | |
|---|---|
| Tokens | 52,428,800,000 |
| Steps | 100,000 |
| FLOPs | 6.6 × 10<sup>19</sup> |
| Duration | 573 hours |
| Final loss | 2.19 |
| Final accuracy | 54.8 % |
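The figures in this summary can be cross-checked with a few lines of arithmetic. The sketch below relies on two common rules of thumb that are assumptions here, not statements from this repository: training compute ≈ 6 × parameters × tokens, and a Chinchilla-optimal budget of about 20 tokens per parameter. It also assumes the micro-batch of 16 sequences mentioned earlier is what gets accumulated.

```python
PARAMETERS = 208_929_792
TOKENS_PER_STEP = 524_288
STEPS = 100_000
CONTEXT_LENGTH = 512
MICRO_BATCH_SEQUENCES = 16  # assumed: the batch size of 16 that fits on the GPU
DURATION_HOURS = 573

# Total tokens seen during training.
tokens = TOKENS_PER_STEP * STEPS

# Micro-batches accumulated before each optimizer step.
accumulation_steps = TOKENS_PER_STEP // (MICRO_BATCH_SEQUENCES * CONTEXT_LENGTH)

# Rule of thumb: about 6 FLOPs per parameter per token (forward + backward).
flops = 6 * PARAMETERS * tokens

# Rule of thumb: about 20 training tokens per parameter is compute-optimal.
chinchilla_ratio = tokens / (20 * PARAMETERS)

# Average throughput over the whole run.
tokens_per_second = tokens / (DURATION_HOURS * 3600)

if __name__ == "__main__":
    print(f"tokens             = {tokens:,}")
    print(f"accumulation steps = {accumulation_steps}")
    print(f"FLOPs              = {flops:.2e}")
    print(f"Chinchilla ratio   = {chinchilla_ratio:.1f}")
    print(f"tokens per second  = {tokens_per_second:,.0f}")
```

Under these assumptions the 524,288-token batch corresponds to 64 accumulated micro-batches of 16 × 512 tokens, and the token budget is about 12.5 times the rule-of-thumb optimum, which rounds to the 13 quoted above.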

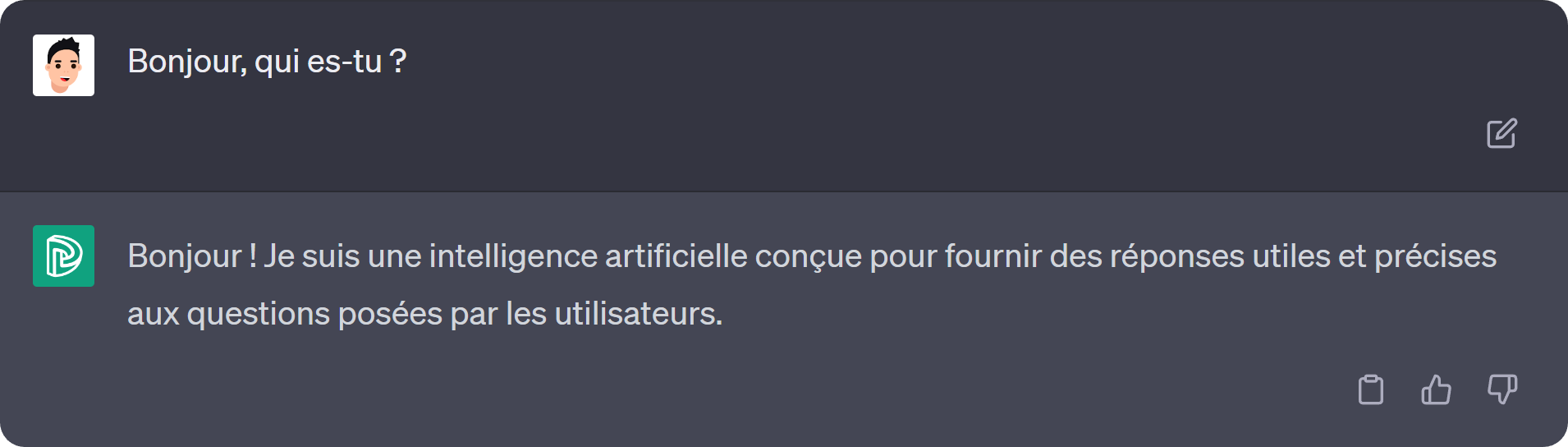

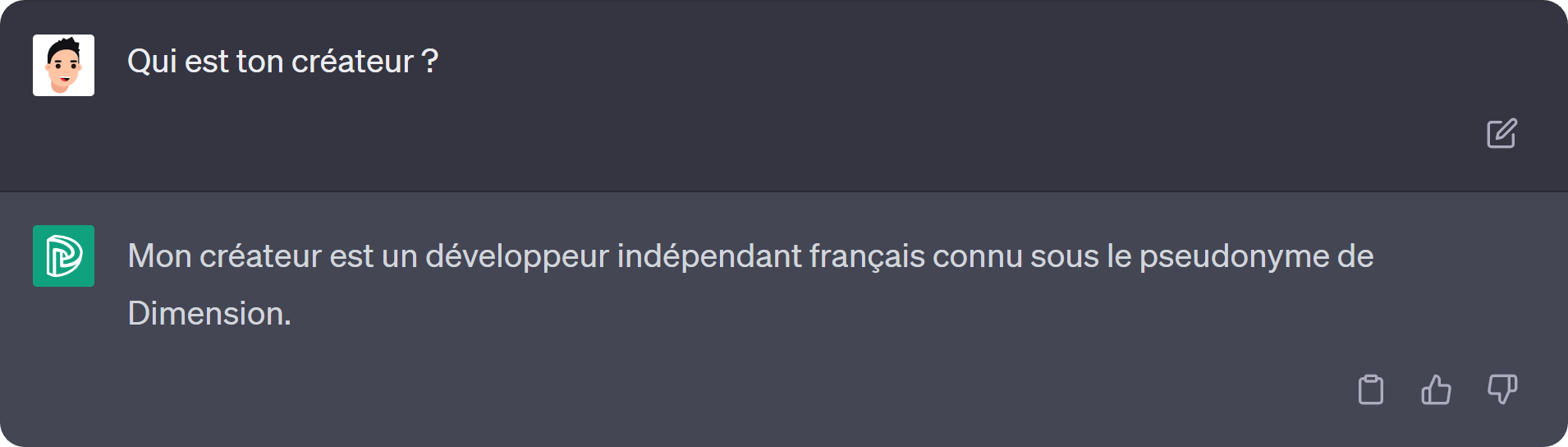

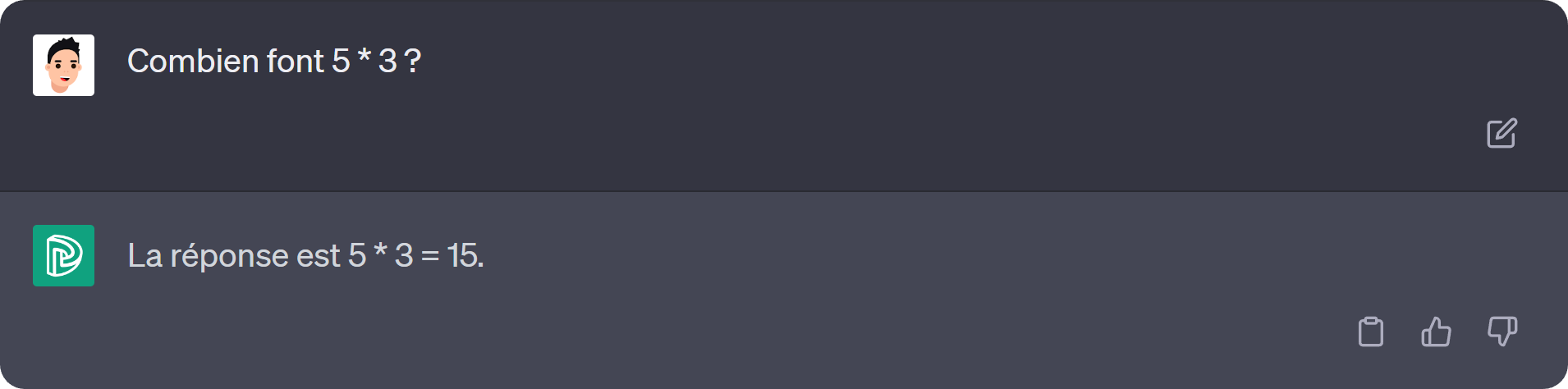

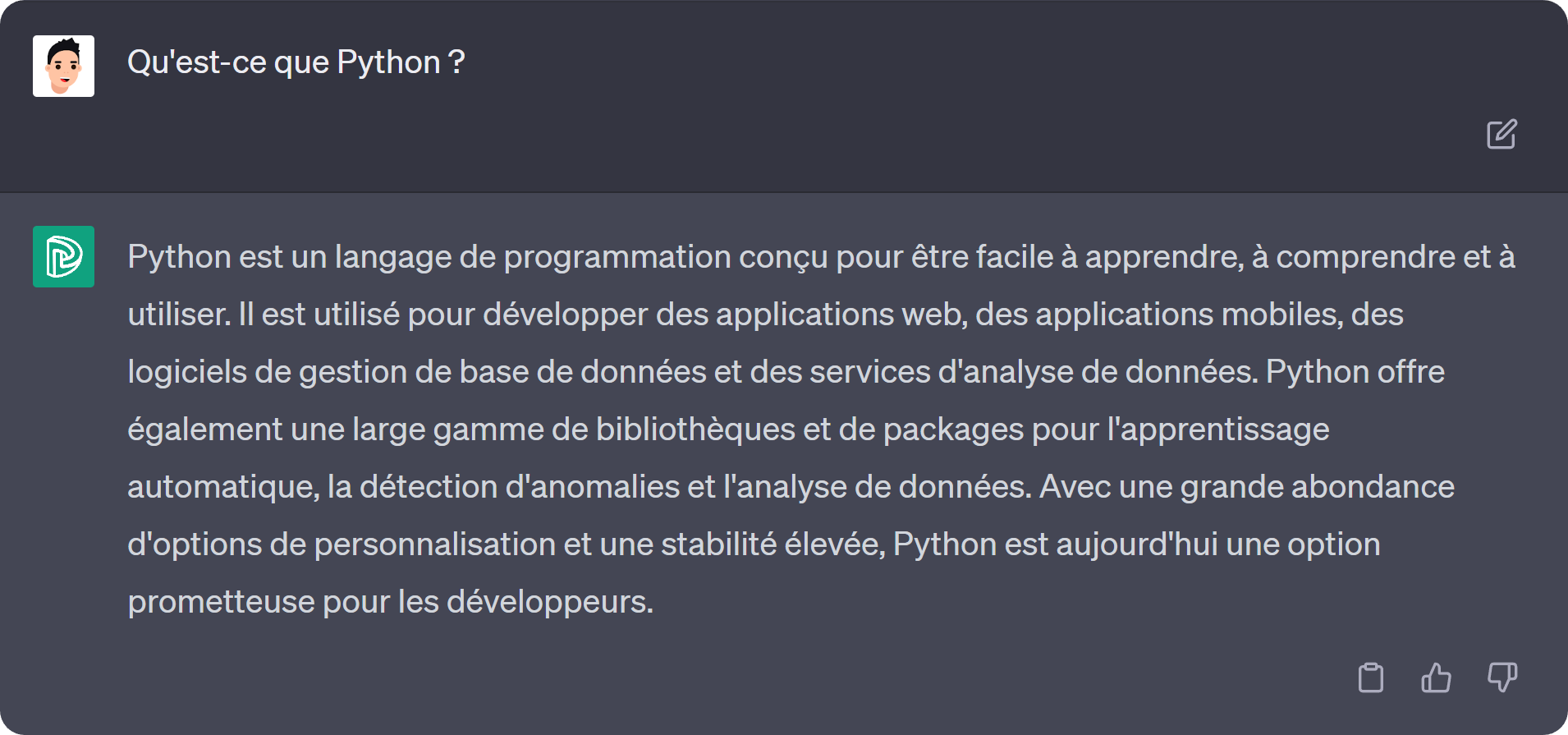

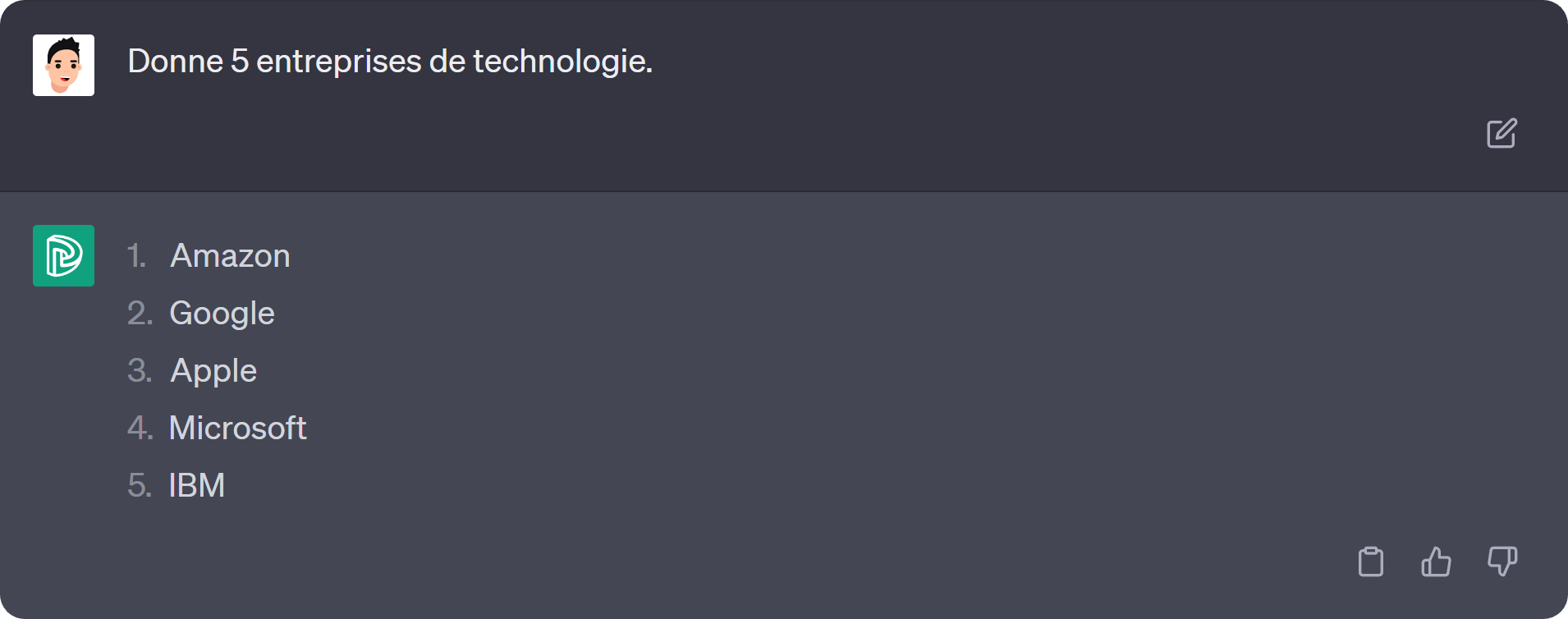

I fine-tuned the model on the French instruction dataset I made for this project to create DimensionGPT-0.2B-Chat, a 0.2B language model trained to follow instructions and answer questions in French.

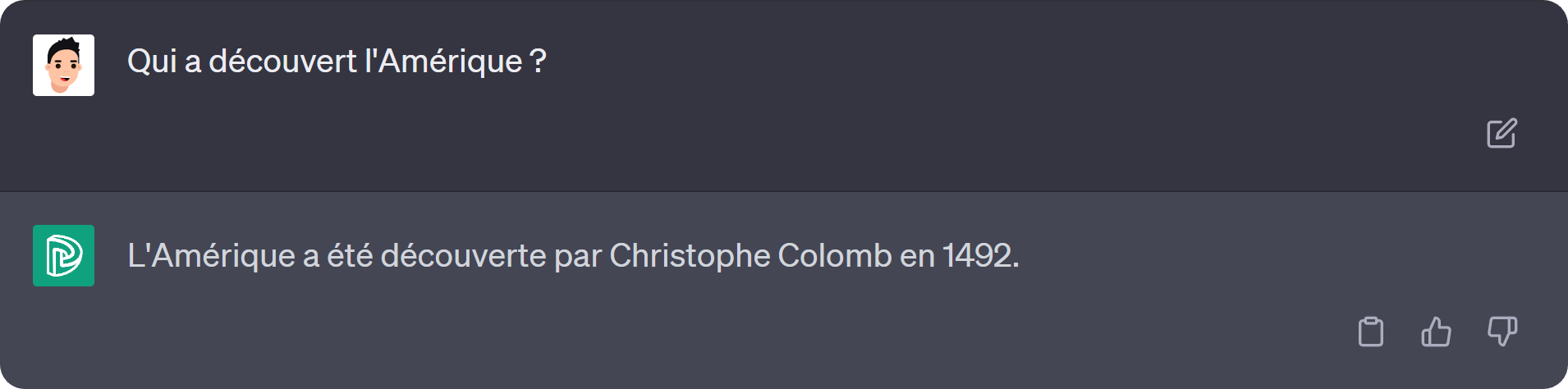

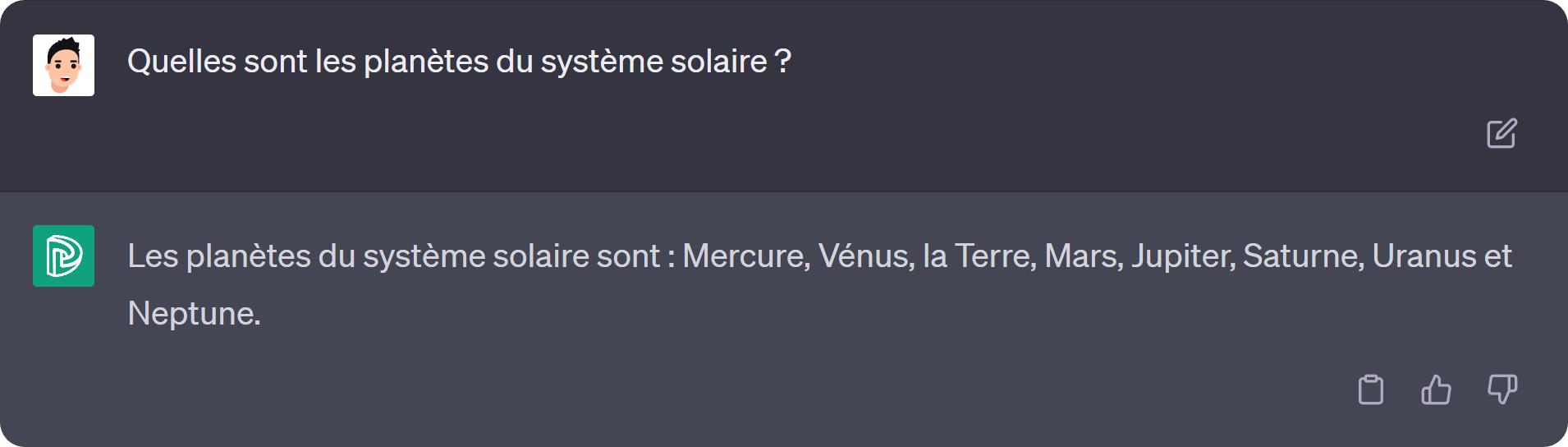

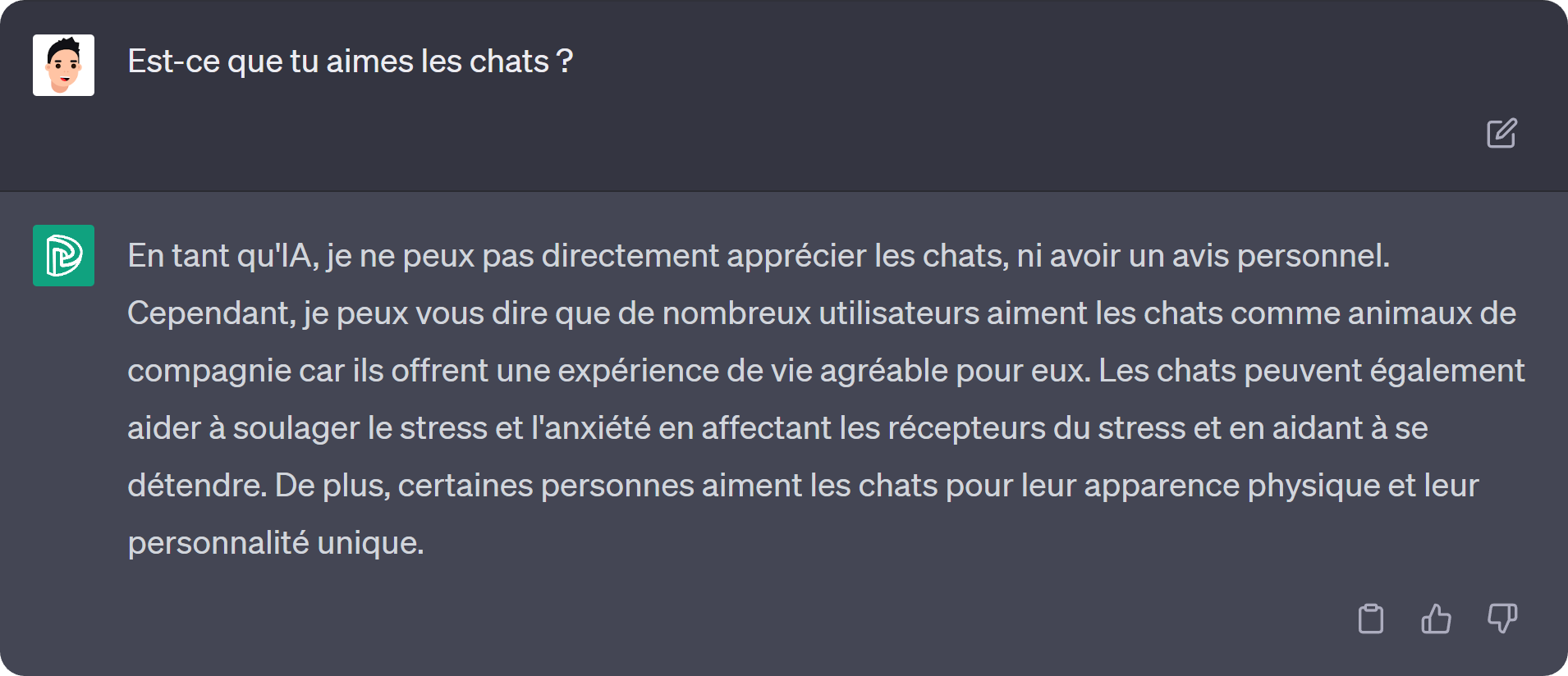

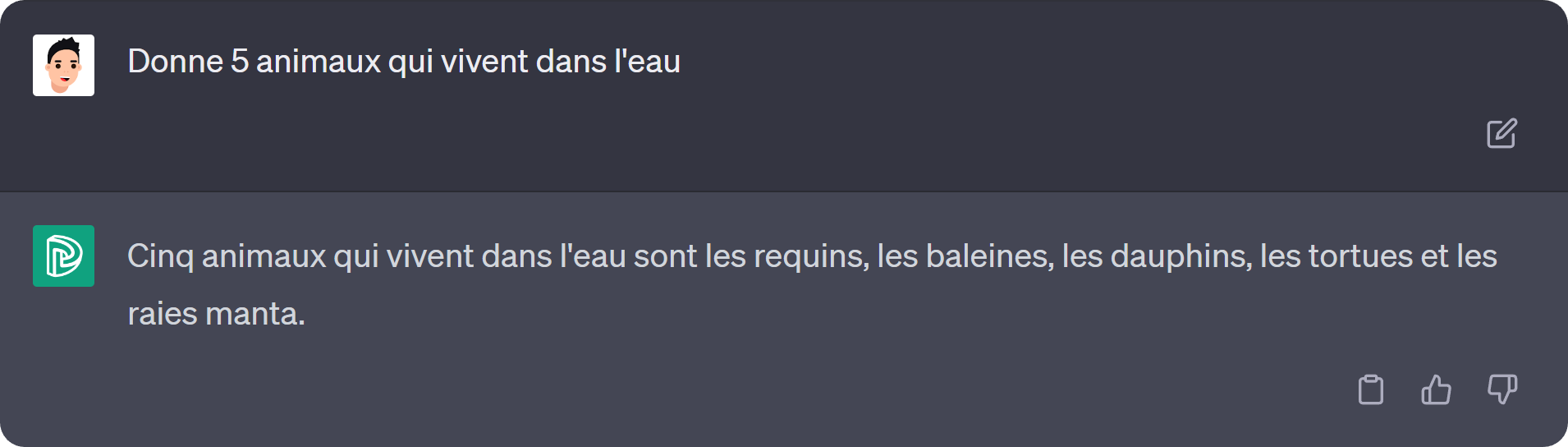

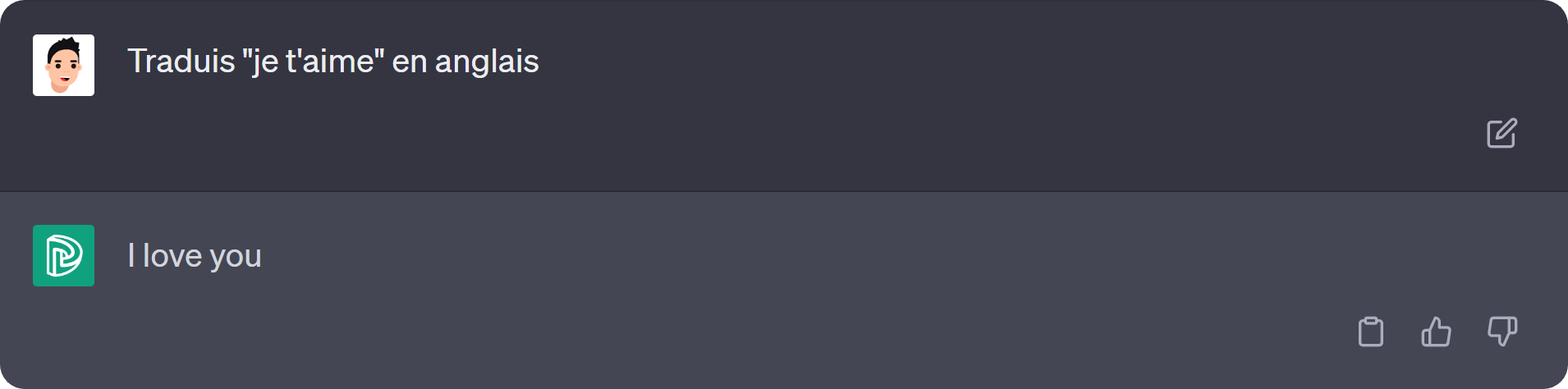

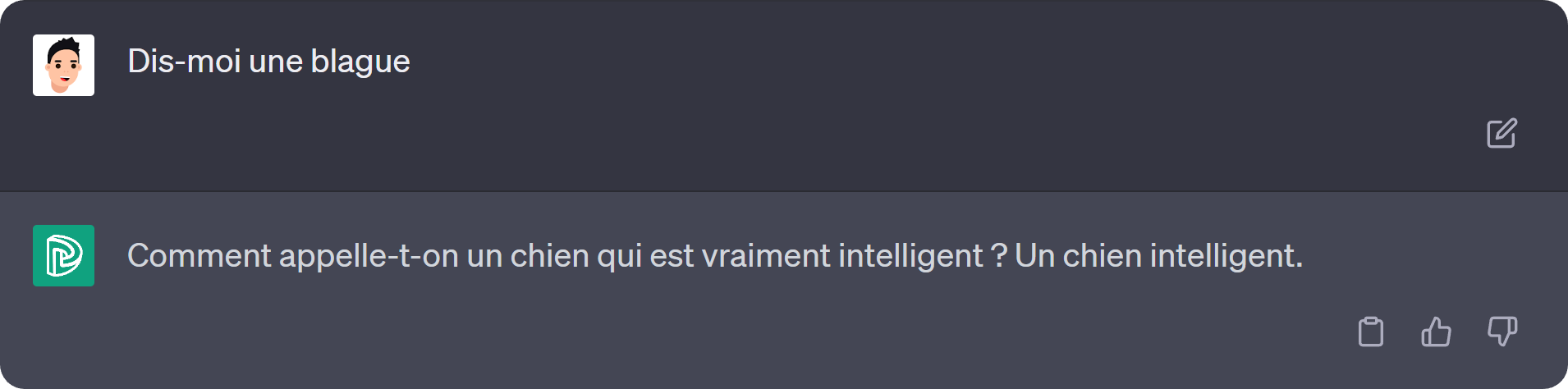

Here are some examples of the model outputs:

The trained weights of the different models are available on Google Drive. You just need to:

- Download the `.pt` file of the model you want to use and put it in the `models` folder
- Download the `vocab.txt` file and put it in the `data` folder

Run the following command to install the dependencies:

    $ pip install -r requirements.txt

- Run the `create_data.ipynb` file to create the tokenizer and the dataset (it may take an entire day and consume a few hundred gigabytes of disk space)
- Run the `training.ipynb` file (you can stop the training at any time and resume it later thanks to the checkpoints)
- If you don't have an overpriced 24 GB GPU like me, the default settings (those used to train DimensionGPT) may not work for you. You can try to:
  - Reduce the batch size (less stable training and a higher minimum loss)
  - Increase the accumulation steps (fixes the previous problems but is slower)
  - Reduce some architecture parameters (higher minimum loss)
- Run the `testing.ipynb` file to use the models you downloaded or trained

- Angel Uriot: Creator of the project.