- Introduction

- Dependencies and Installation

- Quick Inference

- Code Demos

- Image Quality Assessment

- Inference Dataset

- Citation

- Acknowledgement


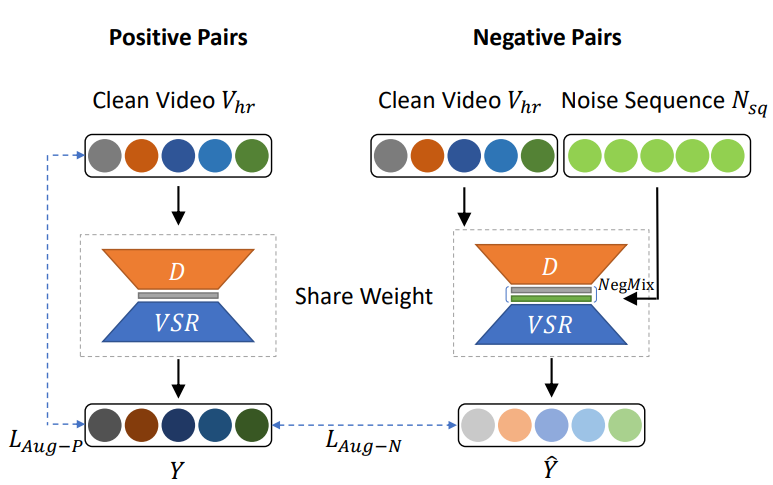

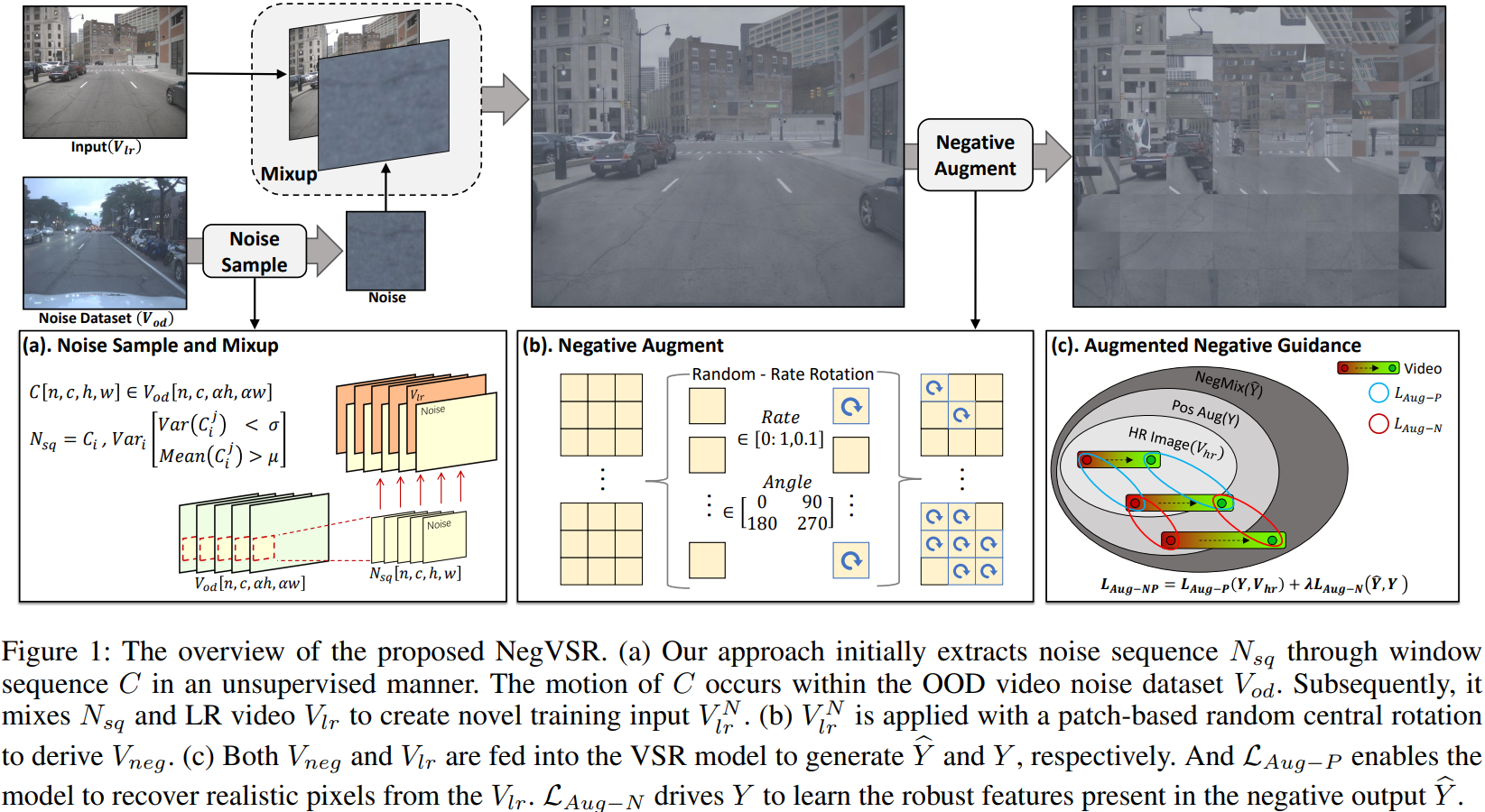

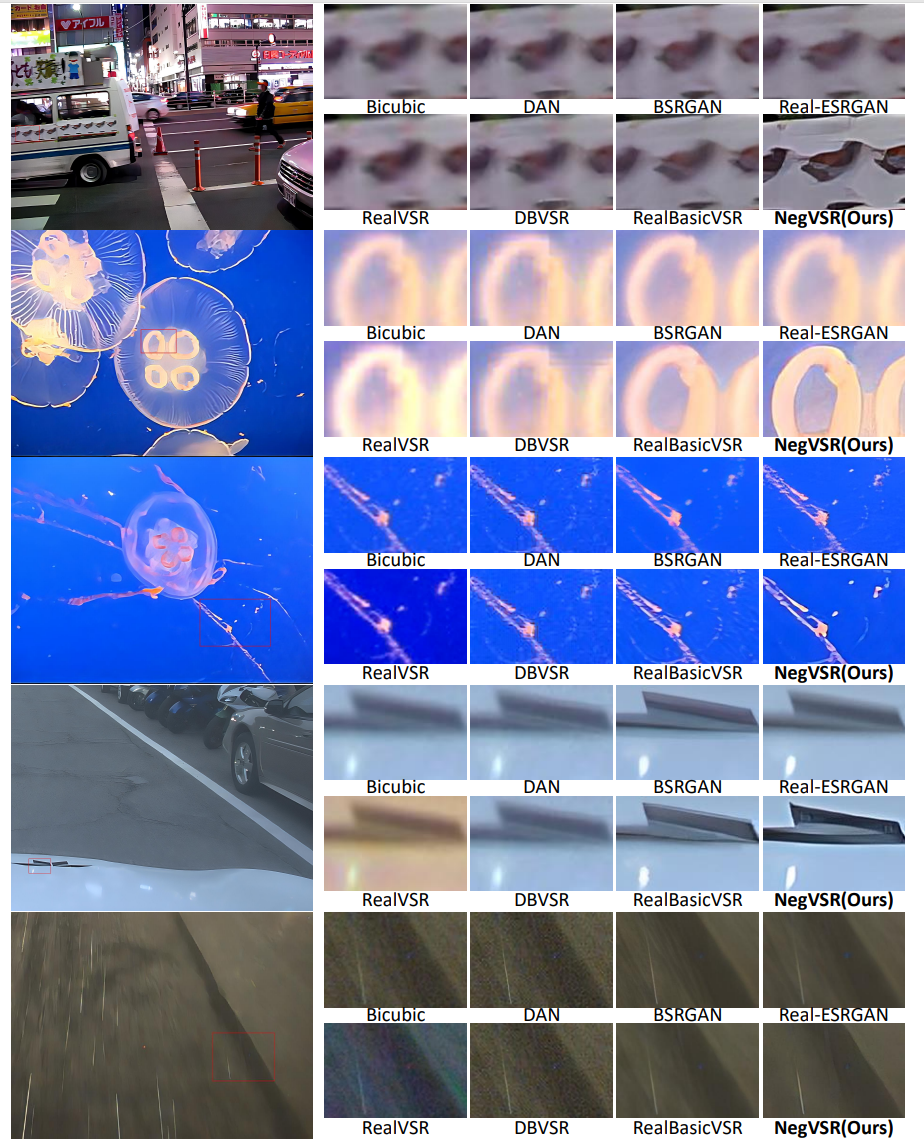

We propose a negative augmentation strategy for generalized noise modeling in real-world Video Super-Resolution (NegVSR). Specifically, we first propose sequential noise generation on real-world data to extract practical noise sequences. Then, the degradation domain is widely expanded by negative augmentation to build various yet challenging real-world noise sets. We further propose the augmented negative guidance loss to learn robust features among augmented negatives effectively. Extensive experiments on real-world datasets (e.g., VideoLQ and FLIR) show that our method outperforms state-of-the-art methods by clear margins.

- Follows RealBasicVSR

- Python == 3.8

- mmedit == 0.15.1 (recommended)

Clone repo

```bash
git clone https://github.com/NegVSR/NegVSR.git
cd NegVSR
```

Install

Install the dependencies following RealBasicVSR.

Download the pre-trained NegVSR models from [Baidu Drive] (code: 1234) and put them into the `weights` folder. The currently available pre-trained models are:

- `pretrain_wNegVSR_100000itr_finetune_woNegVSR_150000itr_VideoLQ.pth`: the sharper model for VideoLQ (Table 2).
- `pretrain_wNegVSR_100000itr_finetune_woNegVSR_150000itr_FLIR.pth`: the sharper model for FLIR (Table 3).
- `pretrain_wNegVSR_100000itr_finetune_wNegVSR_150000itr.pth`: both pretraining and fine-tuning use our method (better visual quality).
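You only need the checkpoint(s) you plan to use. As a convenience, here is a minimal sketch that reports which of the listed files are not yet in `weights`. It assumes the folder sits in the repository root, and `missing_checkpoints` is a hypothetical helper, not part of the repository.

```python
from pathlib import Path

# Checkpoint file names listed above.
EXPECTED_CHECKPOINTS = [
    "pretrain_wNegVSR_100000itr_finetune_woNegVSR_150000itr_VideoLQ.pth",
    "pretrain_wNegVSR_100000itr_finetune_woNegVSR_150000itr_FLIR.pth",
    "pretrain_wNegVSR_100000itr_finetune_wNegVSR_150000itr.pth",
]


def missing_checkpoints(weights_dir="weights"):
    """Return the expected checkpoint names not found in weights_dir (hypothetical helper)."""
    weights = Path(weights_dir)
    return [name for name in EXPECTED_CHECKPOINTS if not (weights / name).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing checkpoints:", *missing, sep="\n  ")
    else:
        print("All pre-trained NegVSR checkpoints found.")
```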

Inference on Frames

Replace `basicvsr_net.py` in mmedit (`[Your anaconda root]/envs/[Your env name]/lib/python3.x/site-packages/mmedit/models/backbones/sr_backbones/basicvsr_net.py`) with the `basicvsr_net.py` from our repository.
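The replacement above can be scripted. This sketch locates the installed mmedit package with `importlib.util.find_spec` (so mmedit itself is not imported), keeps a one-time `.bak` copy of the original file, and then overwrites it. The helper names are hypothetical, and the location of `basicvsr_net.py` inside the NegVSR repository is left as a placeholder because it is not stated here.

```python
import importlib.util
import shutil
from pathlib import Path

# Location of the backbone file inside the mmedit package, as given above.
RELATIVE_TARGET = Path("models", "backbones", "sr_backbones", "basicvsr_net.py")


def mmedit_backbone_path(package="mmedit"):
    """Find basicvsr_net.py inside the installed package without importing it."""
    spec = importlib.util.find_spec(package)
    if spec is None or not spec.submodule_search_locations:
        raise ModuleNotFoundError(f"{package} is not installed in this environment")
    package_dir = Path(list(spec.submodule_search_locations)[0])
    return package_dir / RELATIVE_TARGET


def replace_backbone(source, target):
    """Back up target to <target>.bak (only once), then overwrite it with source."""
    source, target = Path(source), Path(target)
    backup = target.with_name(target.name + ".bak")
    if target.exists() and not backup.exists():
        shutil.copy2(target, backup)  # keep the original mmedit file
    shutil.copy2(source, target)
    return backup
```

For example, run `replace_backbone("path/to/NegVSR/basicvsr_net.py", mmedit_backbone_path())` inside the conda environment that has mmedit installed, where the first argument is a placeholder for the file's actual location in your clone. To undo the change, copy the `.bak` file back.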

```bash
python codes/inference_realbasicvsr2.py
```

Usage:

- `--config`: the test config file path. Default: `configs/realbasicvsr_x4.py`
- `--checkpoint`: the pre-trained or custom-trained model path. Default: `weights/xxx.pth`
- `--input_dir`: the directory of the input videos, with one sub-folder of frames per video. Default: `VideoLQ`
  - `VideoLQ/`
    - `000/`
      - `00000000.png`
      - `00000001.png`
      - ...
      - `00000099.png`
    - `001/`
    - `002/`
- `--output_dir`: the output root.
- `--max_seq_len`: the maximum sequence length to be processed. Default: `30`
- `--is_save_as_png`: whether to save as PNG. Default: `True`
- `--fps`: the fps of the (possible) saved videos. Default: `25`

After running the above command, you will get the SR frames.
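To make `--input_dir` and `--max_seq_len` concrete, the sketch below lists the frames of each sequence in the layout above and splits them into consecutive chunks. It assumes the script, like RealBasicVSR's inference script, runs long sequences chunk by chunk with at most `max_seq_len` frames per chunk; `list_sequences` and `chunk_frames` are hypothetical helpers, not functions from the repository.

```python
from pathlib import Path


def list_sequences(input_dir):
    """Map each sub-folder (one video) to its sorted PNG frame paths.

    Zero-padded names such as 00000000.png sort in frame order.
    """
    root = Path(input_dir)
    return {
        seq.name: sorted(seq.glob("*.png"))
        for seq in sorted(root.iterdir())
        if seq.is_dir()
    }


def chunk_frames(frames, max_seq_len=30):
    """Split a frame list into consecutive chunks of at most max_seq_len frames."""
    if max_seq_len <= 0:
        raise ValueError("max_seq_len must be positive")
    return [frames[i:i + max_seq_len] for i in range(0, len(frames), max_seq_len)]
```

Under this assumption, a 100-frame sequence such as `000` with the default `--max_seq_len 30` is processed as chunks of 30, 30, 30 and 10 frames. A smaller value lowers GPU memory use, at the cost of a shorter temporal context within each chunk.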

Image to Video

We provide code for converting image frames into videos.

```bash
python codes/img2video.py
```

Usage:

- `--inputPath`: the output root of `inference_realbasicvsr2.py`, e.g.:
  - `VideoLQ/`
    - `000/`
      - `00000000.png`
      - `00000001.png`
      - ...
      - `00000099.png`
    - `001/`
    - `002/`
- `--savePath`: the output video root, e.g.:
  - `savePath/`
    - `000.mp4`
    - `001.mp4`
    - ...
- `--fps`: the fps of the saved videos.

We also provide code for video-to-image conversion in `video2img.py`.
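For readers who want to see what the conversion involves, here is a minimal OpenCV sketch of both directions. It is not the code in `img2video.py` or `video2img.py`: it assumes `opencv-python` is installed, and the `mp4v` codec, the `fps=25` default and all function names are assumptions. The OpenCV imports are kept inside the functions so the path helpers work without it.

```python
from pathlib import Path


def frame_name(index):
    """Frame file name in the 8-digit layout used above, e.g. 00000007.png."""
    return f"{index:08d}.png"


def plan_videos(input_path, save_path):
    """Pair each frame folder under input_path with <save_path>/<folder>.mp4."""
    input_path, save_path = Path(input_path), Path(save_path)
    return [
        (seq, save_path / f"{seq.name}.mp4")
        for seq in sorted(input_path.iterdir())
        if seq.is_dir()
    ]


def frames_to_video(frame_dir, video_path, fps=25):
    """Encode the sorted PNG frames of one folder into an MP4 file."""
    import cv2  # assumption: opencv-python is installed

    frames = sorted(Path(frame_dir).glob("*.png"))
    if not frames:
        raise FileNotFoundError(f"no PNG frames in {frame_dir}")
    height, width = cv2.imread(str(frames[0])).shape[:2]
    Path(video_path).parent.mkdir(parents=True, exist_ok=True)
    fourcc = cv2.VideoWriter_fourcc(*"mp4v")
    writer = cv2.VideoWriter(str(video_path), fourcc, fps, (width, height))
    try:
        for frame in frames:
            writer.write(cv2.imread(str(frame)))
    finally:
        writer.release()


def convert_all(input_path, save_path, fps=25):
    """Encode every frame folder under input_path into <save_path>/<folder>.mp4."""
    for frame_dir, video_path in plan_videos(input_path, save_path):
        frames_to_video(frame_dir, video_path, fps=fps)


def video_to_frames(video_path, frame_dir):
    """Decode a video into 00000000.png, 00000001.png, ... and return the frame count."""
    import cv2  # assumption: opencv-python is installed

    out_dir = Path(frame_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    capture = cv2.VideoCapture(str(video_path))
    count = 0
    try:
        while True:
            ok, frame = capture.read()
            if not ok:
                break
            cv2.imwrite(str(out_dir / frame_name(count)), frame)
            count += 1
    finally:
        capture.release()
    return count
```

Writing frames back with the same 8-digit names keeps decoded videos compatible with the `--input_dir` layout expected by the inference script.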

Collect noise sequences

```bash
# Run collect_noise_sequence_supplement.py directly
python codes/collect_noise_sequence/collect_noise_sequence_supplement.py
```

Negative augmentation

```bash
# Run rot_p.py directly
python codes/rot_PM/rot_p.py
```

We have also migrated the negative augmentation code into `basicvsr_net.py`.
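The two steps above can be illustrated with a small NumPy sketch. It is not the repository's implementation and rests on two labeled assumptions: (1) noise sequences are collected, as in variance-based noise-injection pipelines, by sliding a window over a real low-quality clip, keeping windows that are flat (low variance) in every frame, and subtracting each patch's mean; (2) negative augmentation rotates tiles of a noise sequence by random multiples of 90 degrees, which is what the `rot_p` name suggests but is not confirmed here. Window size, stride, variance threshold and helper names are hypothetical.

```python
import numpy as np


def collect_noise_sequences(frames, patch=32, stride=32, max_var=20.0):
    """Collect zero-mean noise sequences from flat regions of a clip.

    frames: array of shape (T, H, W, C) with values in [0, 255].
    A spatial window is kept only if its variance stays below max_var in every
    frame and channel; its per-frame, per-channel mean is then subtracted.
    """
    _, h, w, _ = frames.shape
    noises = []
    for y in range(0, h - patch + 1, stride):
        for x in range(0, w - patch + 1, stride):
            seq = frames[:, y:y + patch, x:x + patch, :].astype(np.float64)
            if seq.var(axis=(1, 2)).max() <= max_var:
                noises.append(seq - seq.mean(axis=(1, 2), keepdims=True))
    return noises


def rotate_patches(noise, patch=8, rng=None):
    """Rotate each patch x patch tile by a random multiple of 90 degrees.

    noise: array of shape (T, H, W, C) with H and W divisible by patch.
    A tile gets the same rotation in every frame (a design choice of this
    sketch), so the temporal structure is kept while the spatial layout changes.
    """
    rng = np.random.default_rng() if rng is None else rng
    _, h, w, _ = noise.shape
    if h % patch or w % patch:
        raise ValueError("H and W must be divisible by patch")
    out = noise.copy()
    for y in range(0, h, patch):
        for x in range(0, w, patch):
            k = int(rng.integers(4))
            out[:, y:y + patch, x:x + patch, :] = np.rot90(
                noise[:, y:y + patch, x:x + patch, :], k=k, axes=(1, 2)
            )
    return out
```

Rotating tiles preserves the noise statistics (the same values, only rearranged) while producing spatial patterns that were never observed, which is one simple way to widen the set of degradations seen during training.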

Selecting samples for evaluation

```bash
# For FLIR
python codes/flir_sub.py

# For VideoLQ
python codes/videolq_sub.py
```

No-reference metrics calculation

We recommend using IQA-PyTorch. The results reported in our paper can be found in Results.
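A minimal sketch of scoring the SR frames with IQA-PyTorch (the `pyiqa` package). The metric name `niqe` is only an example, and averaging frames within each sequence and then across sequences is an assumed protocol, not necessarily the one behind the paper's Results. Helper names are hypothetical; `pyiqa` and `torch` are imported lazily so the averaging logic runs without them.

```python
from pathlib import Path
from statistics import mean


def make_pyiqa_metric(name="niqe"):
    """Build a no-reference metric with IQA-PyTorch and return a path -> float scorer."""
    import pyiqa  # assumption: IQA-PyTorch is installed
    import torch

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    metric = pyiqa.create_metric(name, device=device)
    return lambda path: float(metric(str(path)))


def score_sequences(output_root, score_fn):
    """Average score_fn over the PNG frames of each sequence, then over sequences.

    Returns (per_sequence_scores, overall_mean).
    """
    per_seq = {}
    for seq in sorted(Path(output_root).iterdir()):
        frames = sorted(seq.glob("*.png")) if seq.is_dir() else []
        if frames:
            per_seq[seq.name] = mean(score_fn(f) for f in frames)
    if not per_seq:
        raise FileNotFoundError(f"no PNG frames under {output_root}")
    return per_seq, mean(per_seq.values())
```

For example, `per_seq, overall = score_sequences("results/VideoLQ", make_pyiqa_metric("niqe"))`, where `results/VideoLQ` stands for your `--output_dir`. NIQE is a lower-is-better metric, so check each metric's direction before comparing methods.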

- You can download VideoLQ and our FLIR test set from [Baidu Drive] (code: 1234).

- Follow the FLIR link for the full FLIR dataset.

If you find this project useful for your research, please consider citing our paper. 😃

```bibtex
@inproceedings{song2024negvsr,
  title={Negvsr: Augmenting negatives for generalized noise modeling in real-world video super-resolution},
  author={Song, Yexing and Wang, Meilin and Yang, Zhijing and Xian, Xiaoyu and Shi, Yukai},
  booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},
  volume={38},
  number={9},
  pages={10705--10713},
  year={2024}
}
```

This project is built on RealBasicVSR and VQD-SR. We thank the authors for sharing their code.