TensorFlow 2.x Implementation of the Original VGGNet Paper

Paper Link · Architecture · Data Loader · Model Trainer

- Table of Contents

- About The Project

- Quick Links

- Features

- Architectures

- Hardware Requirements

- Dataset Download & Setup

- References

- Contributing

- License

- Contact

VGGNet is a deep learning architecture introduced in a paper published in 2015 by the Visual Geometry Group, Department of Engineering Science, University of Oxford (hence the name). It was one of the first papers to explore very deep CNN-based architectures, with up to 144M trainable parameters.

This implementation is part of my learning process, in which I attempt to implement key deep learning papers using TensorFlow or PyTorch.

The original paper can be found here: VGGNet Paper

- The original paper can be found here: VGGNet Paper

- Data Loader: GitHub | nbViewer

- Model Architecture: GitHub | nbViewer

- Model Trainer: GitHub | nbViewer

- tf.data - Optimized TensorFlow data pipeline built with tf.data and TensorFlow Datasets

- Multiple VGG Architectures - All VGGNet variants from VGG 11 to VGG 19 are implemented

- Mixed Precision Training - The implementation uses FP16 mixed precision training, which takes advantage of the Tensor Cores on Nvidia GPUs with Compute Capability 7.0 or higher.

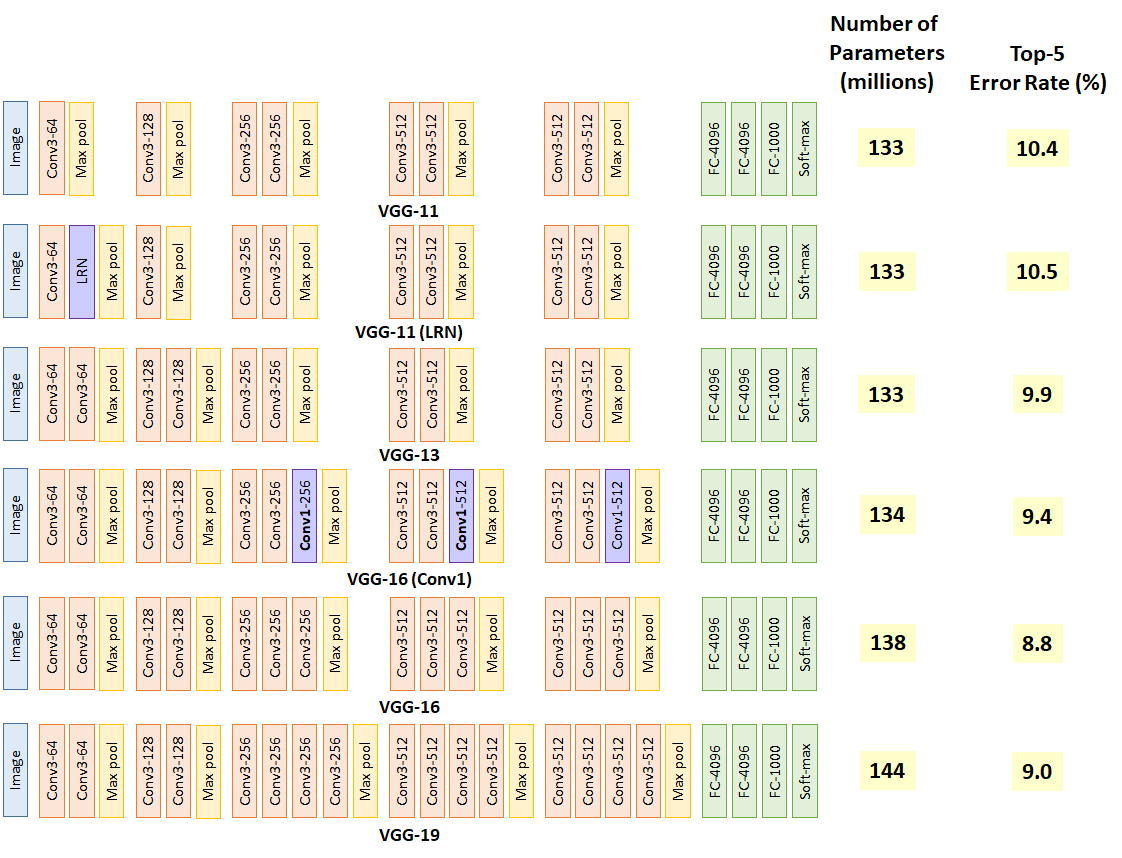

All the VGG architectures described in the original paper have been implemented as per the specification.

- Network A - VGG 11

- Network B - VGG 13

- Network C - VGG 16 with Conv 1x1

- Network D - VGG 16 with Conv 3x3 (Widely Used)

- Network E - VGG 19 (Widely Used)
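To make the differences between the five networks concrete, here is a minimal pure-Python sketch that writes out their layer layouts and counts the trainable parameters. It is not the code from this repository: the `VGG_CONFIGS` dictionary and the `count_parameters` helper are names made up for illustration, and the layouts assume the standard configuration from the paper (224x224 RGB input, 2x2 max-pooling, two 4096-unit fully connected layers and a 1000-class output).

```python
# Layer layouts for networks A-E (assumed from the configuration table in the paper).
# Each conv entry is (output_channels, kernel_size); "M" marks a 2x2 max-pool.
VGG_CONFIGS = {
    "A": [(64, 3), "M", (128, 3), "M", (256, 3), (256, 3), "M",
          (512, 3), (512, 3), "M", (512, 3), (512, 3), "M"],
    "B": [(64, 3), (64, 3), "M", (128, 3), (128, 3), "M", (256, 3), (256, 3), "M",
          (512, 3), (512, 3), "M", (512, 3), (512, 3), "M"],
    "C": [(64, 3), (64, 3), "M", (128, 3), (128, 3), "M",
          (256, 3), (256, 3), (256, 1), "M",
          (512, 3), (512, 3), (512, 1), "M",
          (512, 3), (512, 3), (512, 1), "M"],
    "D": [(64, 3), (64, 3), "M", (128, 3), (128, 3), "M",
          (256, 3), (256, 3), (256, 3), "M",
          (512, 3), (512, 3), (512, 3), "M",
          (512, 3), (512, 3), (512, 3), "M"],
    "E": [(64, 3), (64, 3), "M", (128, 3), (128, 3), "M",
          (256, 3), (256, 3), (256, 3), (256, 3), "M",
          (512, 3), (512, 3), (512, 3), (512, 3), "M",
          (512, 3), (512, 3), (512, 3), (512, 3), "M"],
}


def count_parameters(config, in_channels=3, input_size=224, num_classes=1000):
    """Count weights and biases for one VGG layout (hypothetical helper)."""
    total = 0
    channels = in_channels
    size = input_size
    for layer in config:
        if layer == "M":
            size //= 2  # each max-pool halves the spatial resolution
            continue
        out_channels, kernel = layer
        total += kernel * kernel * channels * out_channels + out_channels
        channels = out_channels
    # Classifier head: flatten, then FC-4096, FC-4096, FC-num_classes.
    features = channels * size * size
    for units in (4096, 4096, num_classes):
        total += features * units + units
        features = units
    return total


if __name__ == "__main__":
    for name, config in VGG_CONFIGS.items():
        print(f"Network {name}: {count_parameters(config) / 1e6:.1f}M parameters")
```

Under these assumptions Network E comes out at roughly 144M parameters, which matches the figure quoted in the introduction. Most of the parameters sit in the first fully connected layer, which is why the totals for A through E are so close together.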

An Nvidia GPU is recommended for training. It can work with CPUs as well, but that is not recommended: ImageNet is huge, and training would probably take over a year.

- CPU: AMD Ryzen 7 3700X - 8 Cores 16 Threads

- GPU: Nvidia GeForce RTX 2080 Ti 11 GB

- RAM: 32 GB DDR4 @ 3200 MHz

- Storage: 1 TB NVMe SSD

- OS: Ubuntu 20.04

- CPU: AMD/Intel 4 Core CPU (CPU will become a bottleneck here)

- GPU: Nvidia GeForce GTX 1660 6 GB (You can go lower, but I would not recommend it)

- RAM: 16 GB

- Storage: Minimum of 500 GB SSD (HDD is Not Recommended)

- OS: Any Linux Distribution

Enough HDD/SSD space is required for the following:

- Downloading Raw Dataset - 156.8 GB

- Convert to TFRecord and Store - 155.9 GB

- Total Storage Required - 312.7 GB

An SSD is recommended and a Mechanical HDD should be avoided since it will slow down the data loader significantly.
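Before starting the download, it may be worth checking that the target drive actually has this much room. The following is a small sketch using only the Python standard library; the helper names are hypothetical, and it assumes the sizes above are decimal gigabytes (10^9 bytes), which the figures do not specify.

```python
import shutil

# Sizes taken from the list above; assumed to be decimal GB (1 GB = 1e9 bytes).
RAW_DATASET_GB = 156.8
TFRECORD_GB = 155.9


def required_gb():
    """Total space needed for the raw archives plus the converted TFRecords."""
    return RAW_DATASET_GB + TFRECORD_GB


def has_enough_space(path=".", needed_gb=None):
    """Return (ok, free_gb) for the drive that holds `path`."""
    if needed_gb is None:
        needed_gb = required_gb()
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= needed_gb, free_gb


if __name__ == "__main__":
    ok, free_gb = has_enough_space(".")
    status = "enough" if ok else "NOT enough"
    print(f"Need {required_gb():.1f} GB, {free_gb:.1f} GB free: {status} space")
```

Point `path` at the drive where the dataset will live, since the current directory may be on a different disk.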

ImageNet Download Link: Download ImageNet Dataset

- Download Train Images (Required): ILSVRC2012_img_train.tar - Size 137.7 GB

- Download Val Images (Required): ILSVRC2012_img_val.tar - Size 6.3 GB

- Download Test Images (Optional): ILSVRC2012_img_test.tar - Size 12.7 GB
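Since the downloads are large and easy to interrupt, a quick check that the required archives are present can save a failed conversion run later. This is only a sketch: `missing_archives` is a hypothetical helper, it assumes all archives sit in a single folder, and it checks file names only, not sizes or checksums.

```python
from pathlib import Path

# Archive names from the list above.
REQUIRED_ARCHIVES = ("ILSVRC2012_img_train.tar", "ILSVRC2012_img_val.tar")
OPTIONAL_ARCHIVES = ("ILSVRC2012_img_test.tar",)


def missing_archives(folder, names=REQUIRED_ARCHIVES):
    """Return the archive names from `names` that are not files inside `folder`."""
    folder = Path(folder)
    return [name for name in names if not (folder / name).is_file()]


if __name__ == "__main__":
    # "imagenet2012" is an assumed folder name; change it to your download location.
    missing = missing_archives("imagenet2012")
    if missing:
        print("Missing required archives:", ", ".join(missing))
    else:
        print("All required archives found.")
```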

Download the dataset from the above link and place the archives in a folder as shown:

imagenet2012/

├── ILSVRC2012_img_test.tar

├── ILSVRC2012_img_train.tar

└── ILSVRC2012_img_val.tar

Create another folder containing the subfolders data, downloaded, and extracted as shown:

imagenet/

├── data/

├── downloaded/

└── extracted/

- Source Literature: VGGNet Paper

- Paper Explanation: ImageNet Classification with Deep Convolutional Neural Networks - YouTube

- Download the Dataset by Signing up with your Institution Email Address: Download ImageNet Dataset

- Tensorflow Datasets ImageNet2012 Dataset: API Docs

- Efficient Data Pipelining using: Tf.Data

- Tf.Data Training and Testing Split: Slice & Split Docs

- Data Loader Template taken from my own Repository: Zero Coding Tf Classifier

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

- Fork the Project

- Create your Feature Branch (git checkout -b feature/AmazingFeature)

- Commit your Changes (git commit -m 'Add some AmazingFeature')

- Push to the Branch (git push origin feature/AmazingFeature)

- Open a Pull Request

Distributed under the GNU AGPL V3 License. See LICENSE for more information.

- Website: Animikh Aich - Website

- LinkedIn: animikh-aich

- Email: animikhaich@gmail.com

- Twitter: @AichAnimikh