Project | ArXiv | Paper | Poster | Demo

Ankan Kumar Bhunia, Salman Khan, Hisham Cholakkal, Rao Muhammad Anwer, Fahad Shahbaz Khan, Jorma Laaksonen & Michael Felsberg

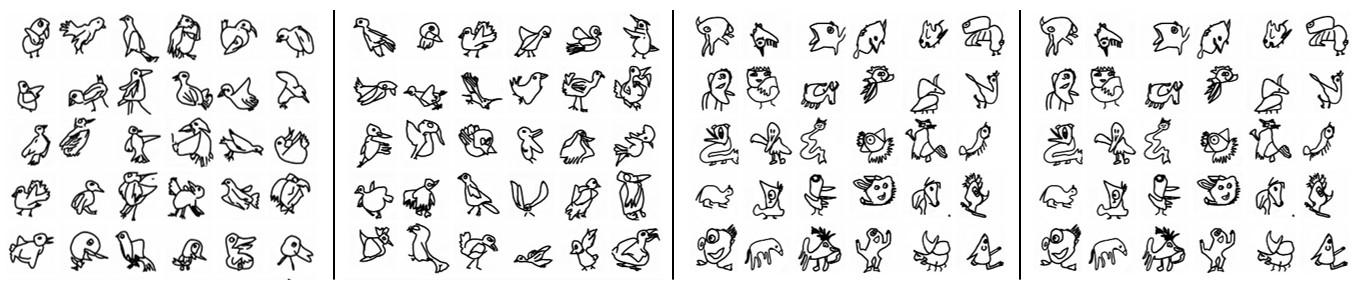

Abstract: Creative sketching or doodling is an expressive activity in which imaginative and previously unseen depictions of everyday visual objects are drawn. Creative sketch image generation is a challenging vision problem, where the task is to generate diverse yet realistic creative sketches that possess unseen compositions of visual-world objects. Here, we propose DoodleFormer, a novel coarse-to-fine two-stage framework that decomposes the creative sketch generation problem into the creation of a coarse sketch composition followed by the incorporation of fine details in the sketch. We introduce graph-aware transformer encoders that effectively capture global dynamic as well as local static structural relations among different body parts. To ensure the diversity of the generated creative sketches, we introduce a probabilistic coarse sketch decoder that explicitly models the variations of each sketch body part to be drawn. Experiments are performed on two creative sketch datasets: Creative Birds and Creative Creatures. Our qualitative, quantitative and human-based evaluations show that DoodleFormer outperforms the state-of-the-art on both datasets, yielding realistic and diverse creative sketches. On Creative Creatures, DoodleFormer achieves an absolute gain of 25 in terms of Fréchet Inception Distance (FID) over the state-of-the-art. We also demonstrate the effectiveness of DoodleFormer for the related applications of text-to-creative-sketch generation and sketch completion.
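
For reference, FID compares the mean and covariance of image features from the real and generated sets: d² = ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^{1/2}), where lower is better. Below is a minimal NumPy sketch of that formula, assuming the feature vectors have already been extracted (standard FID uses activations of a pretrained Inception network; the features behind the paper's numbers may differ). `frechet_distance` is a hypothetical helper, not the evaluation script used in this repository.

```python
import numpy as np


def _sqrtm_psd(mat: np.ndarray) -> np.ndarray:
    """Matrix square root of a symmetric positive semi-definite matrix."""
    sym = (mat + mat.T) / 2.0                 # remove round-off asymmetry
    vals, vecs = np.linalg.eigh(sym)
    vals = np.clip(vals, 0.0, None)           # tiny negative eigenvalues -> 0
    return (vecs * np.sqrt(vals)) @ vecs.T    # V diag(sqrt(vals)) V^T


def frechet_distance(feats_real: np.ndarray, feats_fake: np.ndarray) -> float:
    """Fréchet distance between Gaussians fitted to two (N, D) feature sets."""
    mu_r, mu_f = feats_real.mean(axis=0), feats_fake.mean(axis=0)
    cov_r = np.atleast_2d(np.cov(feats_real, rowvar=False))
    cov_f = np.atleast_2d(np.cov(feats_fake, rowvar=False))
    # Tr((S_r S_f)^{1/2}) == Tr((S_r^{1/2} S_f S_r^{1/2})^{1/2}); the right-hand
    # form only needs square roots of symmetric PSD matrices.
    root_r = _sqrtm_psd(cov_r)
    cross = _sqrtm_psd(root_r @ cov_f @ root_r)
    diff = mu_r - mu_f
    return float(diff @ diff + np.trace(cov_r) + np.trace(cov_f) - 2.0 * np.trace(cross))
```

Rewriting the cross term as a symmetric product avoids a general (non-symmetric) matrix square root, so only `numpy.linalg.eigh` is needed.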

If you use the code for your research, please cite our paper:

    @article{bhunia2021doodleformer,
      title={Doodleformer: Creative sketch drawing with transformers},
      author={Bhunia, Ankan Kumar and Khan, Salman and Cholakkal, Hisham and Anwer, Rao Muhammad and Khan, Fahad Shahbaz and Laaksonen, Jorma and Felsberg, Michael},
      journal={ECCV},
      year={2022}
    }

- Python 3.7

- PyTorch >=1.4
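
A quick way to confirm that an environment meets these requirements is to compare version numbers field by field rather than as text (as plain strings, "1.10" sorts before "1.4"). The helpers below are a hypothetical sketch, not part of the repository:

```python
import re
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn strings such as '1.9.1+cu102' or '1.10.0a0' into (1, 9, 1) / (1, 10, 0)."""
    core = version.split("+")[0]              # drop local build tags like '+cu102'
    numbers = []
    for piece in core.split(".")[:3]:
        match = re.match(r"\d+", piece)       # keep the leading digits of each field
        if match is None:
            break
        numbers.append(int(match.group()))
    return tuple(numbers)


def meets_minimum(version: str, minimum: Tuple[int, ...]) -> bool:
    """True if `version` is at least `minimum`, compared field by field."""
    return parse_version(version) >= minimum
```

In a working environment, `meets_minimum(torch.__version__, (1, 4))` should return `True` and `sys.version_info[:2]` should be `(3, 7)`.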

Please see INSTALL.md for installing the required libraries. First, create the environment with Anaconda, then install PyTorch and the other packages listed in requirements.txt. The code has been tested with PyTorch 1.9 and CUDA 10.2:

    git clone https://github.com/ankanbhunia/doodleformer
    cd doodleformer
    conda create -n doodleformer python=3.7
    conda activate doodleformer
    conda install pytorch==1.9.1 torchvision==0.10.1 torchaudio==0.9.1 -c pytorch
    pip install -r requirements.txt

Next, download the processed Creative Birds and Creative Creatures datasets from Google Drive: https://drive.google.com/drive/folders/14ZywlSE-khagmSz23KKFbLCQLoMOxPzl?usp=sharing and unzip the folders under the data/ directory. For more information about the datasets, visit the dataset site: https://songweige.github.io/projects/creative_sketech_generation/home.html
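
The example training commands in this README read data/doodledata.npy (the value passed as --data_path). A small pre-flight check such as the hypothetical helper below can catch a misplaced folder before training starts. Only that one file name appears in this README; the Creative Creatures file names are not listed here, so they would need to be added by hand.

```python
from pathlib import Path
from typing import Iterable, List

# Hypothetical list: only data/doodledata.npy is named in this README (as --data_path).
# Add the Creative Creatures files after checking the unzipped folder yourself.
EXPECTED_FILES = ["data/doodledata.npy"]


def missing_files(repo_root: str, expected: Iterable[str] = EXPECTED_FILES) -> List[str]:
    """Return the expected dataset files that are absent under repo_root."""
    root = Path(repo_root)
    return [rel for rel in expected if not (root / rel).is_file()]
```

Run from the repository root, `missing_files(".")` should return an empty list once the data is in place.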

To process the raw data from scratch, see the scripts in data_process.py.

The first stage, PL-Net, takes the initial stroke points as the conditional input and learns to predict a bounding box for each body part to be drawn, i.e. the coarse structure of the sketch. To train PL-Net, run the following command:
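
To make the notion of a per-part bounding box concrete, the sketch below derives a tight axis-aligned box from the stroke points of each body part. The input format (a dict mapping part names to lists of strokes, each a list of (x, y) points) and the `part_boxes` name are assumptions for illustration; this is not necessarily how data_process.py builds its targets.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, width, height)


def part_boxes(parts: Dict[str, List[List[Point]]]) -> Dict[str, Box]:
    """Tight axis-aligned box around all stroke points of each body part (hypothetical format)."""
    boxes = {}
    for name, strokes in parts.items():
        xs = [x for stroke in strokes for x, _ in stroke]
        ys = [y for stroke in strokes for _, y in stroke]
        if not xs:  # this part was not drawn in the sketch, so it gets no box
            continue
        x0, y0 = min(xs), min(ys)
        boxes[name] = (x0, y0, max(xs) - x0, max(ys) - y0)
    return boxes
```

For example, a "head" part with strokes `[[(1, 2), (3, 5)], [(0, 4)]]` gets the box `(0, 2, 3, 3)`.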

    python PL-Net.py --dataset='sketch-bird' \
      --data_path='data/doodledata.npy' \
      --exp_name='ztolayout' \
      --batch_size=32 \
      --beta=1 \
      --model_dir='models' \
      --save_per_epoch=20 \
      --vis_per_step=200 \
      --learning_rate=0.0001
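
The command sets --beta=1 without describing it. Since the abstract mentions a probabilistic coarse sketch decoder, one plausible reading, which is an assumption and should be checked against PL-Net.py, is that beta weights a KL-divergence term as in a β-VAE. Under that assumption, the objective would look like the sketch below (helper names are hypothetical):

```python
import math
from typing import Sequence


def gaussian_kl(mu: Sequence[float], logvar: Sequence[float]) -> float:
    """KL(N(mu, exp(logvar)) || N(0, 1)) for a diagonal Gaussian, summed over dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))


def beta_vae_loss(recon_loss: float, mu: Sequence[float], logvar: Sequence[float],
                  beta: float = 1.0) -> float:
    """Reconstruction term plus beta-weighted KL term (assumed role of --beta)."""
    return recon_loss + beta * gaussian_kl(mu, logvar)
```

With beta = 1 this is the ordinary VAE evidence lower bound (negated); larger values push the latent distribution more strongly towards the prior.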

The second stage, PS-Net, takes the predicted box locations together with the conditional input C (the initial stroke points) and generates the final sketch image. To train PS-Net, run the following command:
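
A common way to give an image generator a box layout is to rasterise each box into its own binary mask channel. The sketch below shows that technique for normalised (x0, y0, w, h) boxes. It is an illustration only: the box format and the `boxes_to_masks` name are assumptions, and PS-Net may encode the layout differently.

```python
import numpy as np


def boxes_to_masks(boxes, height: int, width: int) -> np.ndarray:
    """Rasterise normalised (x0, y0, w, h) boxes into a (num_parts, H, W) binary layout."""
    masks = np.zeros((len(boxes), height, width), dtype=np.float32)
    for i, (x0, y0, w, h) in enumerate(boxes):
        # Convert normalised coordinates to pixel indices, covering partial pixels.
        c0 = int(np.floor(x0 * width))
        r0 = int(np.floor(y0 * height))
        c1 = int(np.ceil((x0 + w) * width))
        r1 = int(np.ceil((y0 + h) * height))
        # Clip to the canvas so boxes that spill over the border stay valid.
        masks[i, max(r0, 0):min(r1, height), max(c0, 0):min(c1, width)] = 1.0
    return masks
```

One channel per part keeps overlapping parts (for example, a wing drawn over the body) separable.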

    python PS-Net.py --dataset='sketch-bird' \
      --data_path='data/doodledata.npy' \
      --exp_name='layouttosketch' \
      --batch_size=32 \
      --model_dir='models' \
      --learning_rate=0.0001 \
      --total_epoch=200 \
      --d_lr=0.0001 \
      --g_lr=0.0001
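
When launching several runs (for example, with different batch sizes), it can be convenient to build the command from Python. The sketch below only uses the flags shown in the PS-Net command above. `build_command` and `PS_NET_FLAGS` are hypothetical names, not part of the repository.

```python
import sys
from typing import Dict, List

# Flag values copied from the PS-Net command in this README.
PS_NET_FLAGS: Dict[str, object] = {
    "dataset": "sketch-bird",
    "data_path": "data/doodledata.npy",
    "exp_name": "layouttosketch",
    "batch_size": 32,
    "model_dir": "models",
    "learning_rate": 0.0001,
    "total_epoch": 200,
    "d_lr": 0.0001,
    "g_lr": 0.0001,
}


def build_command(script: str, flags: Dict[str, object]) -> List[str]:
    """Return an argv list such as [python, 'PS-Net.py', '--batch_size=32', ...]."""
    # sys.executable is the interpreter of the active (e.g. conda) environment.
    return [sys.executable, script] + [f"--{name}={value}" for name, value in flags.items()]
```

For example, `subprocess.run(build_command("PS-Net.py", {**PS_NET_FLAGS, "batch_size": 16}), check=True)` reruns training with one flag overridden. Passing an argument list avoids shell quoting. The quotes in `--dataset='sketch-bird'` are shell syntax, so the script receives `sketch-bird` either way.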
