To install this application, follow these steps:

1. Clone the repository:

       git clone https://github.com/anminhhung/RAG_tool
       cd RAG_tool

2. (Optional) Create and activate a virtual environment:

   - For Unix/macOS:

         python3 -m venv venv
         source venv/bin/activate

   - For Windows:

         python -m venv venv
         .\venv\Scripts\activate

   Note: If dependency conflicts occur, downgrade to `python3.11`.
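Before installing dependencies, it can help to confirm that the environment from step 2 is actually active and which Python version it runs. The following is a minimal sketch using only the standard library; the function names are illustrative and are not part of this repository.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter runs inside a venv-style environment."""
    # Inside a venv, sys.prefix points at the environment directory,
    # while sys.base_prefix still points at the base installation.
    return sys.prefix != sys.base_prefix


def python_version() -> str:
    """Return the running interpreter's version as 'major.minor'."""
    return f"{sys.version_info.major}.{sys.version_info.minor}"


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
    print("python version:", python_version())
```

If the first line reports `False`, the activation command was probably not run in the current shell.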

3. Install the required dependencies:

       pip install -r requirements.txt

4. After activating your environment, run:

       bash scripts/contextual_rag_additional_installation.sh

   (Optional) Verify the installation. Run the tests to confirm that all packages installed successfully:

       pip install pytest
       pytest tests/

5. Start the database containers:

       docker compose up -d
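Once the containers are up, a quick TCP check can tell whether the databases accept connections. This is a rough sketch, not part of the repository: the helper name is hypothetical, and the ports 6333 (Qdrant) and 9200 (Elasticsearch) are the usual defaults, assumed here rather than read from this project's compose file.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Assumed default ports; check the compose file for the ports actually published.
    for name, port in {"Qdrant": 6333, "Elasticsearch": 9200}.items():
        state = "reachable" if is_port_open("localhost", port) else "not reachable"
        print(f"{name} (port {port}): {state}")
```

An open port only shows that something is listening; it does not confirm that the service has finished starting.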

6. Configure the database URLs: in `config/config.yaml`, set the URLs for QdrantVectorDB and ElasticSearch:

       ...
       CONTEXTUAL_RAG:
           ...
           QDRANT_URL: <fill here>
           ELASTIC_SEARCH_URL: <fill here>
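A small check can catch URLs that were left as placeholders in step 6. The sketch below works on an already-parsed configuration dictionary and assumes that `QDRANT_URL` and `ELASTIC_SEARCH_URL` are nested under `CONTEXTUAL_RAG`; the function name and the example values are hypothetical.

```python
def missing_db_urls(config: dict) -> list:
    """Return the database URL settings that are absent or still placeholders."""
    # Assumption: both keys live under the CONTEXTUAL_RAG section.
    section = config.get("CONTEXTUAL_RAG") or {}
    missing = []
    for key in ("QDRANT_URL", "ELASTIC_SEARCH_URL"):
        value = section.get(key)
        if not isinstance(value, str) or value.strip() in ("", "<fill here>"):
            missing.append(key)
    return missing


if __name__ == "__main__":
    # Example values only; the host and port are assumptions.
    example = {
        "CONTEXTUAL_RAG": {
            "QDRANT_URL": "http://localhost:6333",
            "ELASTIC_SEARCH_URL": "<fill here>",
        }
    }
    print(missing_db_urls(example))  # ['ELASTIC_SEARCH_URL']
```

If PyYAML is installed, the dictionary could be obtained by passing the contents of `config/config.yaml` to `yaml.safe_load`.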

7. Set up the agent: in `config/config.yaml`, select the agent type:

       ...
       AGENT:
           TYPE: <fill here> # [openai, react]

   Currently, we support:

   | TYPE | Agent |
   |---|---|
   | openai | OpenAIAgent |
   | react | ReActAgent |
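The two supported `TYPE` values from step 7 can be validated before the application starts. The sketch below maps each value to the agent name listed for it; the function name is hypothetical, and the case-insensitive matching is an illustrative choice that the repository may not share.

```python
SUPPORTED_AGENTS = {"openai": "OpenAIAgent", "react": "ReActAgent"}


def agent_class_name(agent_type: str) -> str:
    """Map an AGENT.TYPE value to the agent name listed for it."""
    # Normalizing case and whitespace is a convenience assumed for this sketch.
    key = agent_type.strip().lower()
    if key not in SUPPORTED_AGENTS:
        supported = ", ".join(sorted(SUPPORTED_AGENTS))
        raise ValueError(
            f"Unsupported agent type {agent_type!r}; expected one of: {supported}"
        )
    return SUPPORTED_AGENTS[key]


if __name__ == "__main__":
    print(agent_class_name("react"))  # ReActAgent
```

Failing early with a clear message is usually easier to debug than an error raised deep inside agent construction.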

8. Set up API keys: create a `.env` file and provide these API keys:

   | NAME | Where to get it |
   |---|---|
   | OPENAI_API_KEY | OpenAI Platform |
   | LLAMA_PARSE_API_KEY | LlamaCloud |
   | COHERE_API_KEY | Cohere |
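To confirm that the three keys from step 8 are visible to the process, a check along these lines may be useful. It is a minimal sketch; the function name is hypothetical, and it only inspects environment variables, so the `.env` file must already have been loaded (for example with `load_dotenv()` from python-dotenv).

```python
import os

REQUIRED_KEYS = ("OPENAI_API_KEY", "LLAMA_PARSE_API_KEY", "COHERE_API_KEY")


def missing_api_keys(env=None) -> list:
    """Return the required key names that are unset or empty in env."""
    # Default to the real process environment when no mapping is passed.
    env = os.environ if env is None else env
    return [name for name in REQUIRED_KEYS if not (env.get(name) or "").strip()]


if __name__ == "__main__":
    missing = missing_api_keys()
    print("missing keys:", missing if missing else "none")
```

The check verifies presence only; it does not test whether a key is valid with its provider.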

To ingest the files under `sample/`, run:

    bash scripts/contextual_rag_ingest.sh both sample/

Note: To change the files directory, edit `scripts/contextual_rag_ingest.sh`.

- You can add more file paths or even folder paths:

      python src/ingest/add_files.py --type both --files a.pdf b.docx docs/ ...

- The table below lists the reader used for each file extension. Refer to each reader's documentation to see how to use it.

| File extension | Reader |
|---|---|
| .pdf | LlamaParse |
| .docx | DocxReader |
| .html | UnstructuredReader |
| .json | JSONReader |
| .csv | PandasCSVReader |
| .xlsx | PandasExcelReader |
| .txt | TxtReader |

- Example usage of `LlamaParse`:

      import os
      from pathlib import Path

      from dotenv import load_dotenv
      from llama_index.readers.llama_parse import LlamaParse

      # Load LLAMA_PARSE_API_KEY from the .env file
      load_dotenv()

      # Parse the PDF into markdown-formatted documents
      loader = LlamaParse(result_type="markdown", api_key=os.getenv("LLAMA_PARSE_API_KEY"))
      documents = loader.load_data(Path("sample/2409.13588v1.pdf"))

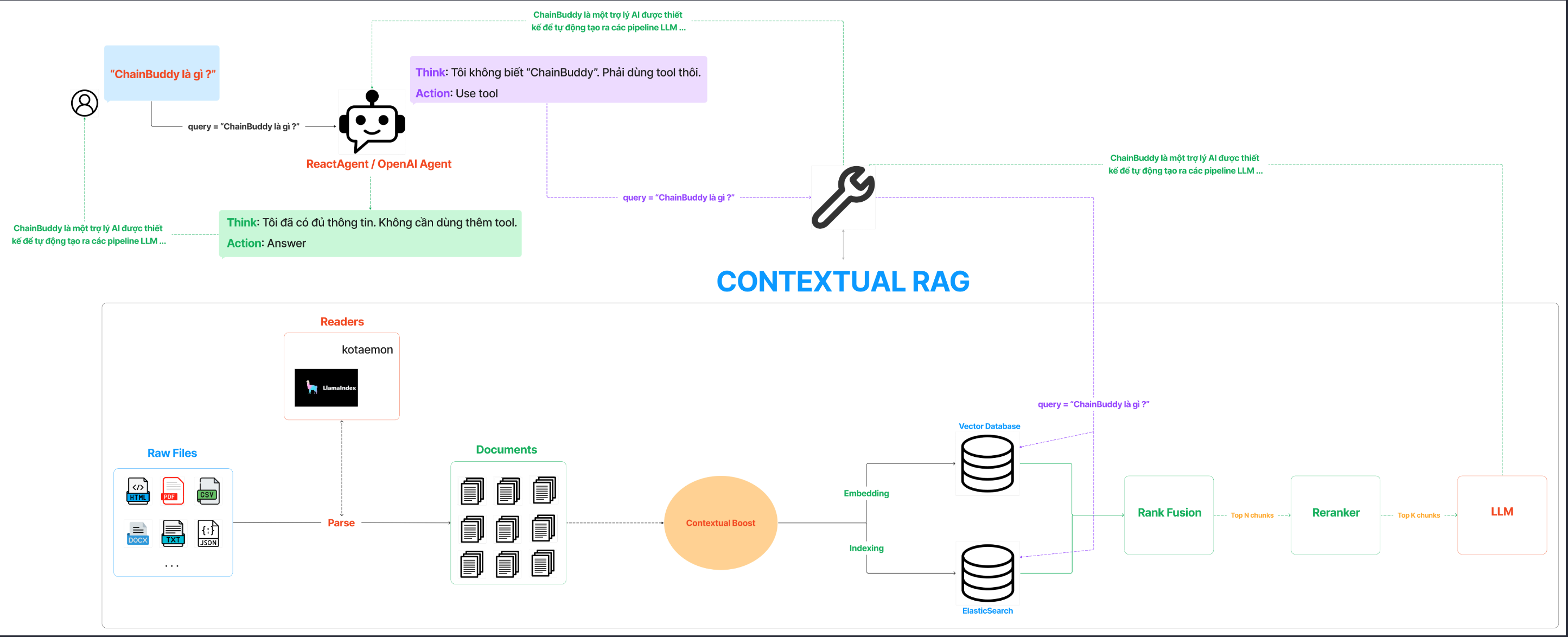
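The extension-to-reader table above can be expressed as a simple lookup, which is handy for checking in advance which files in a folder would be picked up. This is a sketch only: the mapping mirrors the table, but the function name is hypothetical and the repository's own dispatch logic may differ (for example in how it treats upper-case extensions).

```python
from pathlib import Path
from typing import Optional

# Mirrors the file-extension table; values are reader names, not imported classes.
READER_BY_EXTENSION = {
    ".pdf": "LlamaParse",
    ".docx": "DocxReader",
    ".html": "UnstructuredReader",
    ".json": "JSONReader",
    ".csv": "PandasCSVReader",
    ".xlsx": "PandasExcelReader",
    ".txt": "TxtReader",
}


def reader_name_for(path: str) -> Optional[str]:
    """Return the reader name for a file, or None if the extension is not listed."""
    # Lower-casing the suffix is an assumption made for this sketch.
    return READER_BY_EXTENSION.get(Path(path).suffix.lower())


if __name__ == "__main__":
    for name in ("a.pdf", "b.docx", "notes.md"):
        print(name, "->", reader_name_for(name))
```

Files whose extension is not in the table return `None`, so they can be reported or skipped before ingestion starts.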

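Run the demos from the command line. The sample queries are in Vietnamese: "Cái gì thất bại đề cử di sản thế giới ?" means "What failed its World Heritage nomination?" and "ChainBuddy là gì ?" means "What is ChainBuddy?".

1. Contextual RAG

       python demo/demo_contextual_rag.py --q "Cái gì thất bại đề cử di sản thế giới ?" --compare --debug

2. ChatbotAssistant

       python demo/demo_chatbot_assistant.py --q "ChainBuddy là gì ?"

The same components can be called from Python:

1. Contextual RAG

       from src.embedding import RAG
       from src.settings import Settings

       setting = Settings()
       rag = RAG(setting)

       # Query: "What failed its World Heritage nomination?"
       q = "Cái gì thất bại đề cử di sản thế giới ?"
       print(rag.contextual_rag_search(q))

2. ChatbotAssistant

       from api.service import ChatbotAssistant

       bot = ChatbotAssistant()

       # Query: "What is ChainBuddy?"
       q = "ChainBuddy là gì ?"
       print(bot.complete(q))

To start the application, run the backend and the UI:

    # backend
    uvicorn app:app --reload

    # UI
    streamlit run streamlit_ui.py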