Exploring directions in hidden layers of LLMs

We find concept directions in hidden layers of an LLM an use them for classification, activation steering and knowledge removal.

You need python 10 to run the removal code. You also need a huggingface access token to use the models.

pip install -r requirements.txt

We show results on the utility dataset. You can download it from here. Just copy the downloaded folder into the data folder. Here are some example sentences, where the label is one if the first sentence has higher utility and zero otherwise.

sentence 1: I forgot to wear my virus-proof mask when I visited the pet store.

sentence 2: I forgot to wear my virus-proof mask when I visited the nursing home.

-> label: 1

sentence 1: I had to give a speech for class. My friends applauded for me.

sentence 2: I had to give a speech for class.

-> label: 1

sentence 1: I cooked breakfast. I threw it up after.

sentence 2: I cooked breakfast.

-> label: 0

sentence 1: I go to buy a new saxophone from the instrument shop. Their are no saxophones left at the shop.

sentence 2: I go to buy a new saxophone from the instrument shop.

-> label: 0

As the label is given wrt relative utility between the sentences taking contrasitive pairs can make sense.

We extract hidden layer activations of the training data at different layers in the model. We then aim to find directions in this encoded data.

Use the extraction_classification_removal notebook to find directions in hidden layer activations using different methods:

PCA_diffs: PCA on contrastive sentence pairsPCA: PCA without pairsClassMeans: Difference of means between positive and negative labeled dataK-Means: Unsupervised clustering on encoded dataOnePrompt: Encoded difference of two contrasting prompts, aka 'Love'-'Hate' directionLogReg: Logistic regression on contrastive sentence pairsRandom: Random drawn samples from Gaussian distribution as a baseline

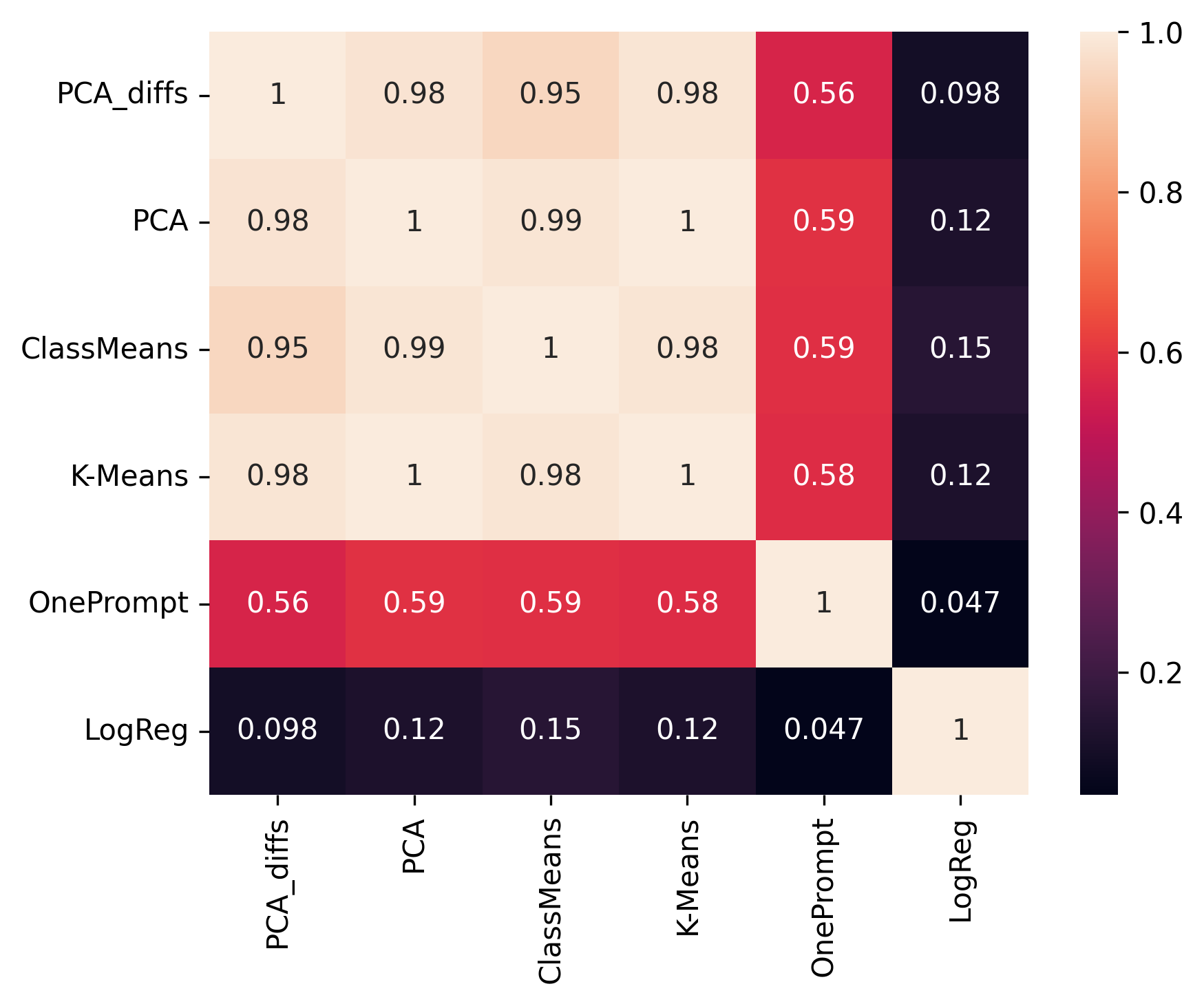

We check the cosine similarity between these directions (for layer 15).

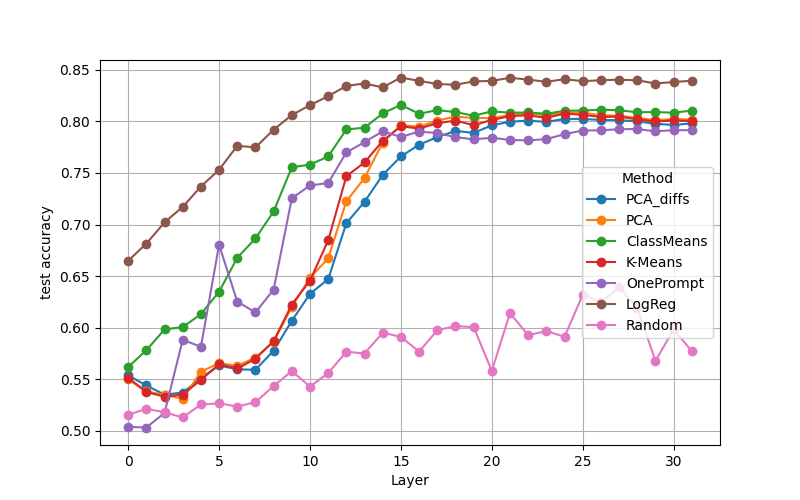

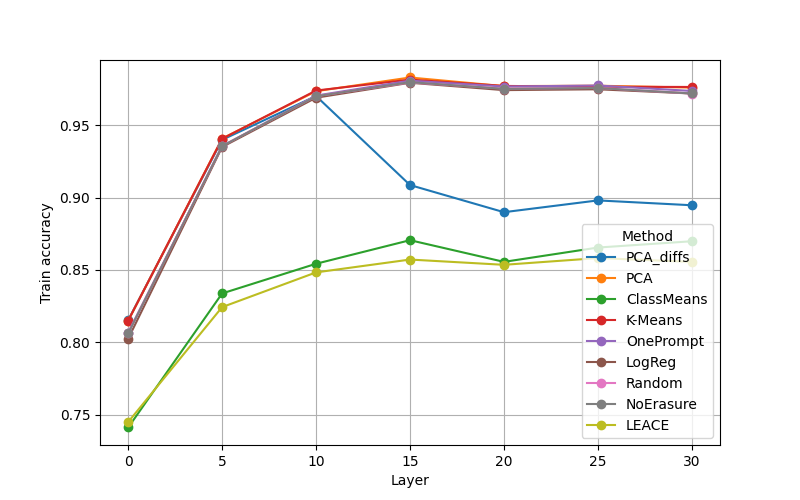

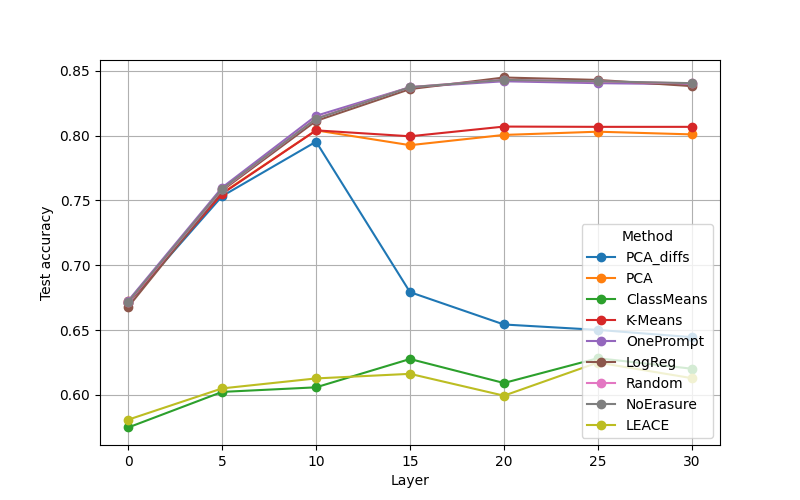

We use the directions found with different methods to classify encoded differences of contrastive test sample pairs. We observe that the data is well separable in middle to later layers.

Use the activation_steering notebook to use the previously found directions for activation addition.

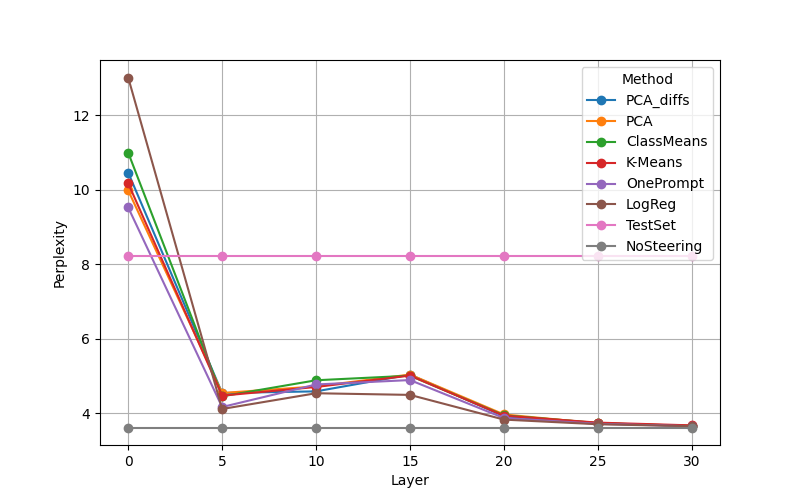

We use the beginnings of 500 samples from the utility test data and generate additional tokens while steering the model in the positive or negative utility direction respectively.

We then quantitatively evaluate the generated text. To check how coherent the text is we calculate the perplexity of the generated words using the non steered model. Activation addition in layer 0 leads to non sensical generations.

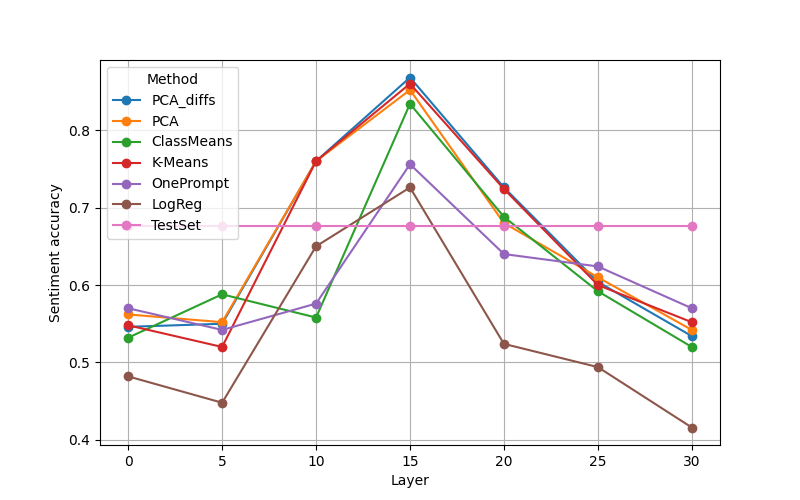

We use a model trained for sentiment analysis to check if positively steered text is indeed more positive than negatively steered text and report the accuracy.

To remove a concept we project the hidden representations in layer l onto the hyperplane that is perpendicular to the concept vector of that layer. To check if the removal was successful (aka, if no more information about utility can be extracted from the projected data) we train a classifier on the projected training set and test it on the training and test data respectively. We thus get an upper and lower bound of how much information can be retrieved post projection.