This repository is the official PyTorch implementation of ACC-UNet: A Completely Convolutional UNet Model for the 2020s.

Ibtehaz, N., Kihara, D. (2023). ACC-UNet: A Completely Convolutional UNet Model for the 2020s. In: Greenspan, H., et al. Medical Image Computing and Computer Assisted Intervention – MICCAI 2023. MICCAI 2023. Lecture Notes in Computer Science, vol 14222. Springer, Cham. https://doi.org/10.1007/978-3-031-43898-1_66

    @InProceedings{10.1007/978-3-031-43898-1_66,
      author="Ibtehaz, Nabil and Kihara, Daisuke",
      editor="Greenspan, Hayit and Madabhushi, Anant and Mousavi, Parvin and Salcudean, Septimiu and Duncan, James and Syeda-Mahmood, Tanveer and Taylor, Russell",
      title="ACC-UNet: A Completely Convolutional UNet Model for the 2020s",
      booktitle="Medical Image Computing and Computer Assisted Intervention -- MICCAI 2023",
      year="2023",
      publisher="Springer Nature Switzerland",
      pages="692--702",
      isbn="978-3-031-43898-1"
    }

This decade is marked by the introduction of the Vision Transformer, a radical paradigm shift across computer vision. Medical imaging has followed a similar trend: UNet, one of the most influential architectures, has been redesigned with transformers. Recently, the efficacy of convolutional models in vision has been reinvestigated by seminal works such as ConvNeXt, which elevates a ResNet to Swin Transformer level. Deriving inspiration from this, we aim to improve a purely convolutional UNet model so that it can be on par with transformer-based models, e.g., Swin-Unet or UCTransNet. We examined several advantages of the transformer-based UNet models, primarily long-range dependencies and cross-level skip connections. We attempted to emulate them through convolution operations and thus propose ACC-UNet, a completely convolutional UNet model that brings the best of both worlds: the inherent inductive biases of convnets and the design decisions of transformers. ACC-UNet was evaluated on 5 different medical image segmentation benchmarks and consistently outperformed convnets, transformers, and their hybrids. Notably, ACC-UNet outperforms state-of-the-art models Swin-Unet and UCTransNet by

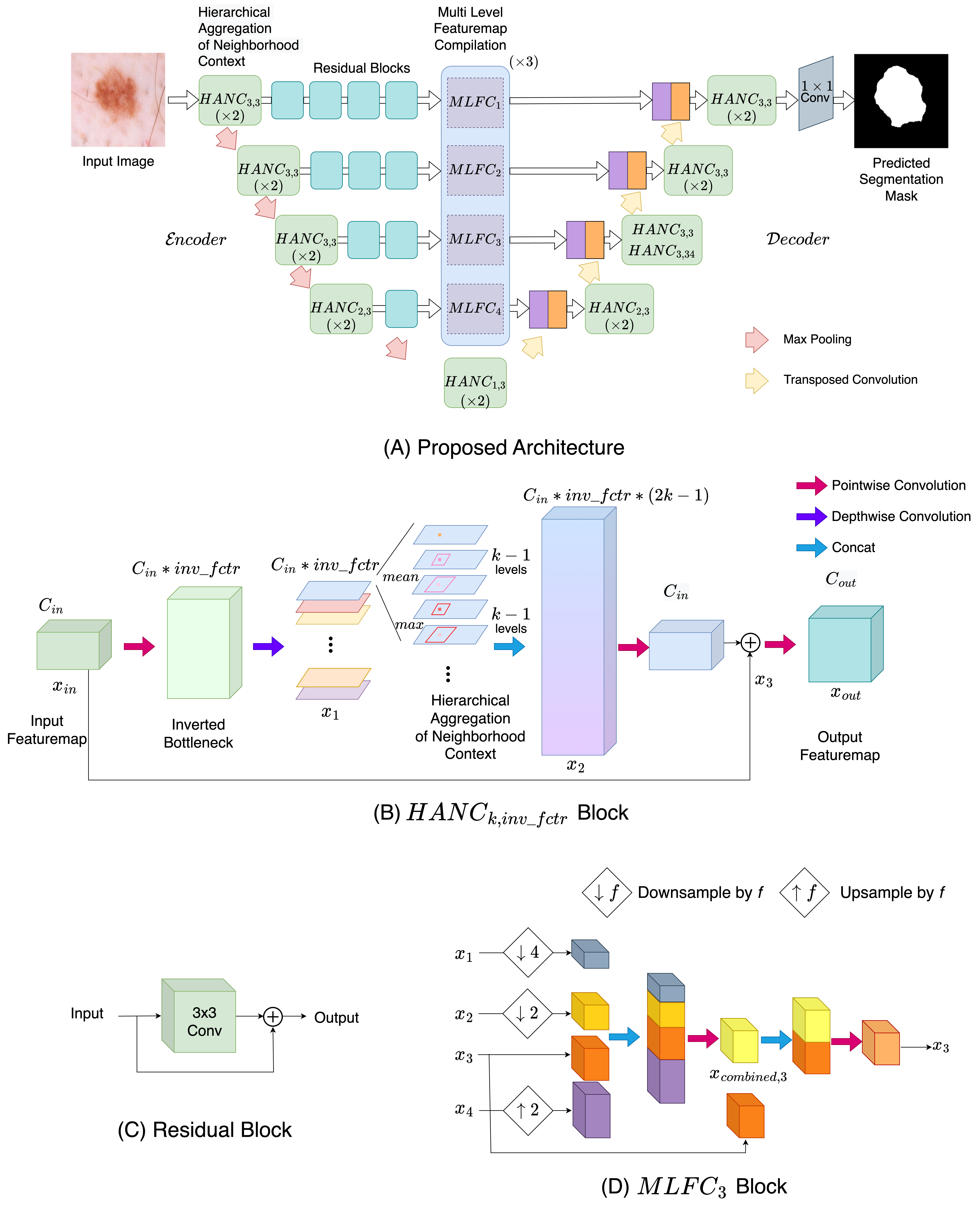

We propose a convolutional UNet, ACC-UNet (Fig. A). We started with a vanilla UNet model and reduced the number of filters in all the layers by half. Then, we replaced the convolutional blocks from the encoder and decoder with our proposed HANC blocks (Fig. B). For all the blocks, we considered

To summarize, in a UNet model, we replaced the classical convolutional blocks with our proposed HANC blocks, which perform an approximate version of self-attention, and modified the skip connections with MLFC blocks, which consider the feature maps from different encoder levels.
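To make the idea of a skip connection that looks at several encoder levels more concrete, here is a minimal sketch. It is not the MLFC block from this repository: the class name `MultiLevelFusionSketch`, the bilinear resizing, and the single 1x1 convolution per level are all assumptions chosen for illustration. The channel widths assume the usual vanilla UNet encoder widths (64, 128, 256, 512), halved as described above.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Assumed vanilla UNet encoder widths for the four levels that feed skip
# connections, halved as the text describes.
VANILLA_WIDTHS = [64, 128, 256, 512]
HALVED_WIDTHS = [w // 2 for w in VANILLA_WIDTHS]  # [32, 64, 128, 256]


class MultiLevelFusionSketch(nn.Module):
    """Hypothetical cross-level skip connection (NOT the repository's MLFC block)."""

    def __init__(self, widths):
        super().__init__()
        total = sum(widths)
        # One 1x1 convolution per level maps the concatenated features
        # back to that level's own channel count.
        self.mix = nn.ModuleList([nn.Conv2d(total, w, kernel_size=1) for w in widths])

    def forward(self, feats):
        fused = []
        for level, conv in enumerate(self.mix):
            size = tuple(feats[level].shape[-2:])
            # Bring every level's feature map to the current level's resolution.
            resized = [
                f if tuple(f.shape[-2:]) == size
                else F.interpolate(f, size=size, mode="bilinear", align_corners=False)
                for f in feats
            ]
            fused.append(conv(torch.cat(resized, dim=1)))
        return fused


def demo_shapes():
    # Fake encoder outputs: the spatial size halves at each level.
    feats = [
        torch.randn(1, w, 64 // (2 ** i), 64 // (2 ** i))
        for i, w in enumerate(HALVED_WIDTHS)
    ]
    block = MultiLevelFusionSketch(HALVED_WIDTHS)
    with torch.no_grad():
        out = block(feats)
    return [tuple(o.shape) for o in out]
```

Calling `demo_shapes()` returns one output per encoder level, each with the same shape as that level's input, so the fused maps could be passed to a decoder in place of the plain skip features. The actual blocks in this repository may differ substantially from this sketch.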

The proposed model has

A PyTorch implementation of the model can be found in the /ACC_UNet directory.

Please refer to the /Experiments directory.