PyTorch implementation of “Auto-Embedding Generative Adversarial Networks for High Resolution Image Synthesis”

Python 2.7

PyTorch

In our paper, we train the model separately on each of the following five datasets to synthesize different kinds of images.

- Download Oxford-102 Flowers dataset.
- Download Caltech-UCSD Birds (CUB) dataset.
- Download Large-scale CelebFaces Attributes (CelebA) dataset.
- Download Large-scale Scene Understanding (LSUN) dataset.
- Download ImageNet dataset.

- Train AEGAN on the Oxford-102 Flowers dataset:

python train.py --dataset flowers --dataroot your_images_folder --batchSize 16 --imageSize 512 --niter_stage1 100 --niter_stage2 1000 --cuda --outf your_images_output_folder --gpu 3
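The flags in the command above can be read as an ordinary `argparse` interface. The following is only a sketch of how such a parser might look: the flag names are taken from the command, while the types, defaults, help strings, and the `build_parser` helper are assumptions and may differ from what `train.py` actually defines.

```python
import argparse


def build_parser():
    # Hypothetical reconstruction: only the flag names come from the README
    # command; types, defaults and help texts are assumptions.
    parser = argparse.ArgumentParser(description="AEGAN training options (sketch)")
    parser.add_argument("--dataset", required=True, help="dataset name, e.g. flowers")
    parser.add_argument("--dataroot", required=True, help="path to the image folder")
    parser.add_argument("--batchSize", type=int, default=16, help="mini-batch size")
    parser.add_argument("--imageSize", type=int, default=512, help="image height/width")
    parser.add_argument("--niter_stage1", type=int, default=100, help="stage-1 iterations/epochs")
    parser.add_argument("--niter_stage2", type=int, default=1000, help="stage-2 iterations/epochs")
    parser.add_argument("--cuda", action="store_true", help="run on GPU")
    parser.add_argument("--outf", default=".", help="output folder")
    parser.add_argument("--gpu", type=int, default=0, help="GPU index")
    return parser


# Parse the same arguments as the README command.
example = (
    "--dataset flowers --dataroot your_images_folder --batchSize 16 "
    "--imageSize 512 --niter_stage1 100 --niter_stage2 1000 "
    "--cuda --outf your_images_output_folder --gpu 3"
).split()
opt = build_parser().parse_args(example)
print(opt)
```

Read this way, switching datasets only requires changing `--dataset` and `--dataroot`; the remaining options keep whatever defaults the real script defines.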

- To train the model on Caltech-UCSD Birds (CUB), Large-scale CelebFaces Attributes (CelebA), Large-scale Scene Understanding (LSUN), or your own dataset, replace the dataset arguments like this:

python train.py --dataset name_of_dataset --dataroot path_of_dataset

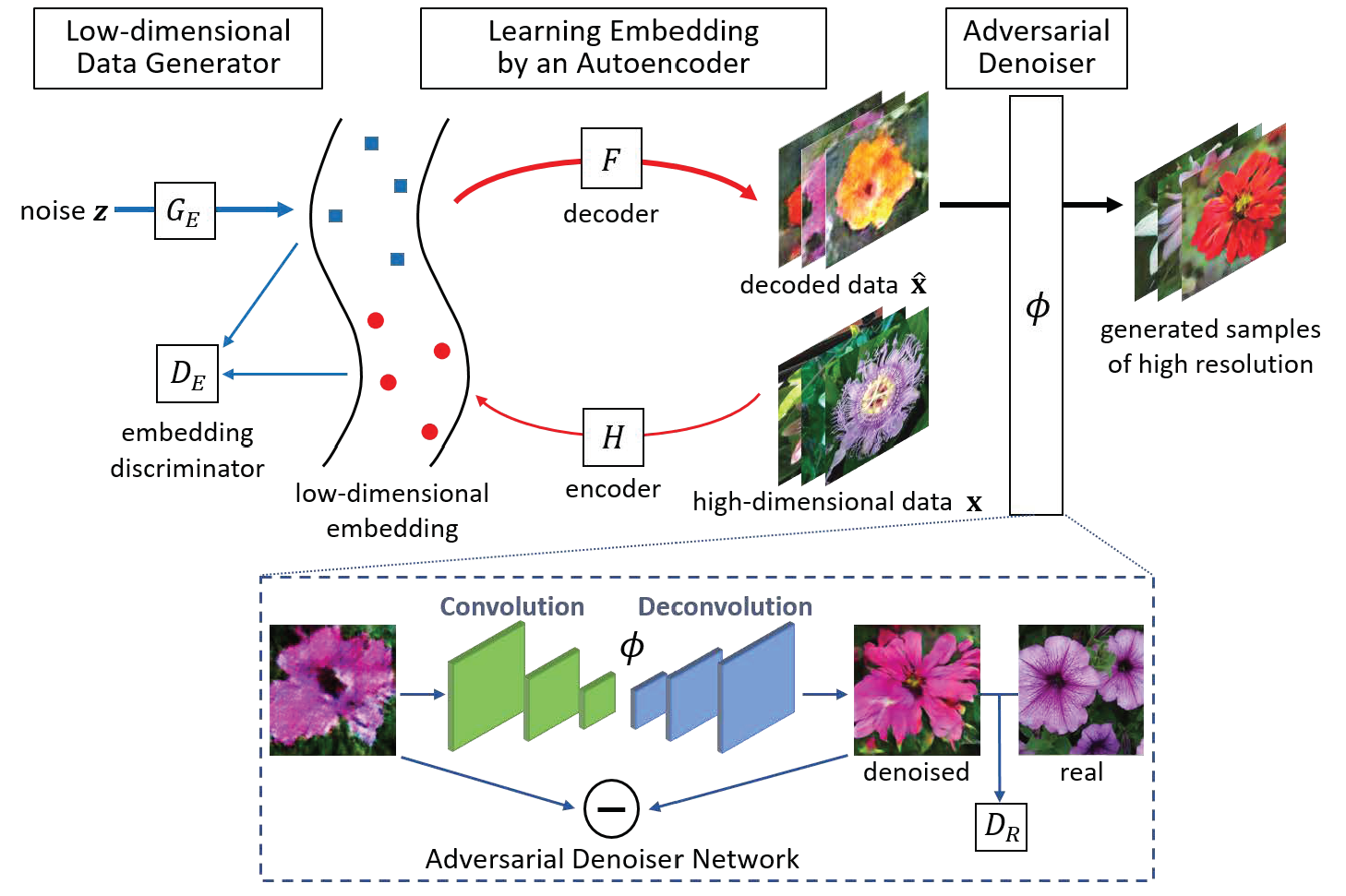

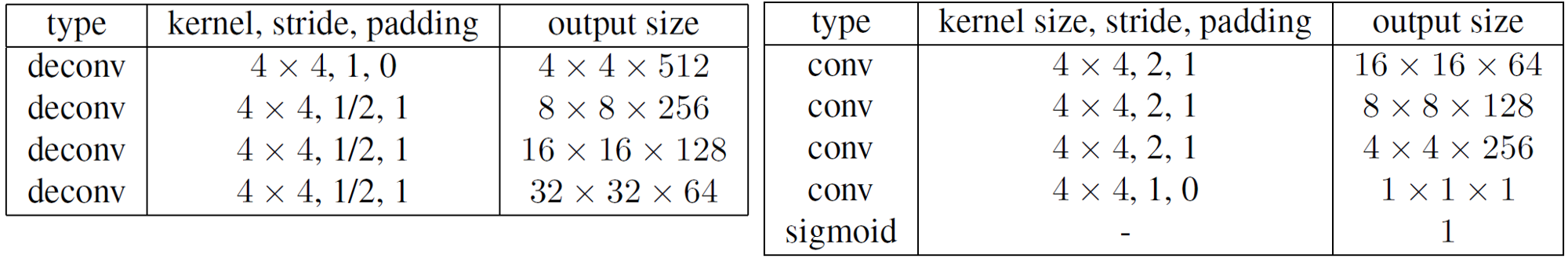

To synthesize an embedding of size 32x32x64, we use a generator GE (left) and a discriminator DE (right), each with four convolution layers.

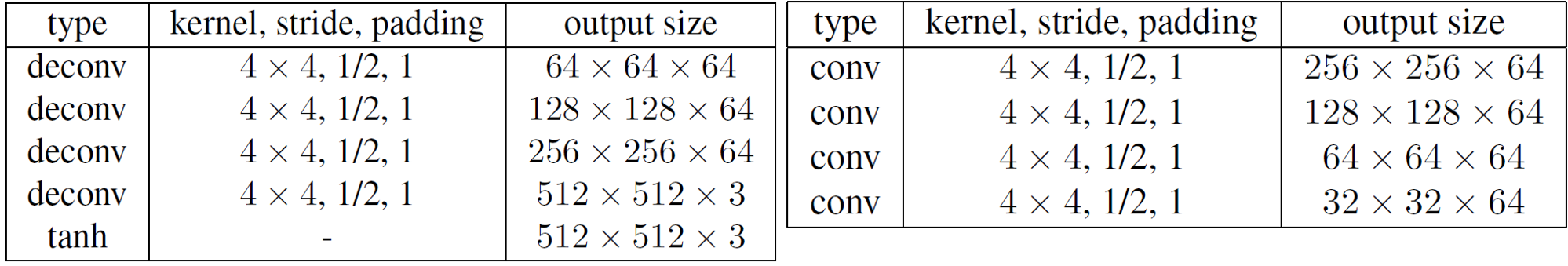

The auto-encoder consists of an encoder H (right) and a decoder F (left).
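To see how a 512x512 image (the `--imageSize` used in the training command) can relate to a 32x32 embedding, the spatial sizes can be traced layer by layer. The sketch below assumes four stride-2 layers with kernel size 4 and padding 1 in both H and F; these layer settings and the helper names are illustrative assumptions, not values stated in this README.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    # Standard output-size formula for a convolution without dilation.
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel=4, stride=2, padding=1):
    # Output-size formula for a transposed convolution (no output padding).
    return (size - 1) * stride - 2 * padding + kernel


def trace(size, step, n_layers):
    # Record the spatial size before the first layer and after every layer.
    sizes = [size]
    for _ in range(n_layers):
        size = step(size)
        sizes.append(size)
    return sizes


# Assumed: four downsampling layers in H, four upsampling layers in F.
encoder_sizes = trace(512, conv_out, 4)
decoder_sizes = trace(32, deconv_out, 4)
print(encoder_sizes)  # [512, 256, 128, 64, 32]
print(decoder_sizes)  # [32, 64, 128, 256, 512]
```

Under these assumptions each layer halves (or doubles) the resolution, so four layers are exactly enough to go between 512 and 32; a different `--imageSize` would need a different number of layers or strides to reach the same embedding size.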

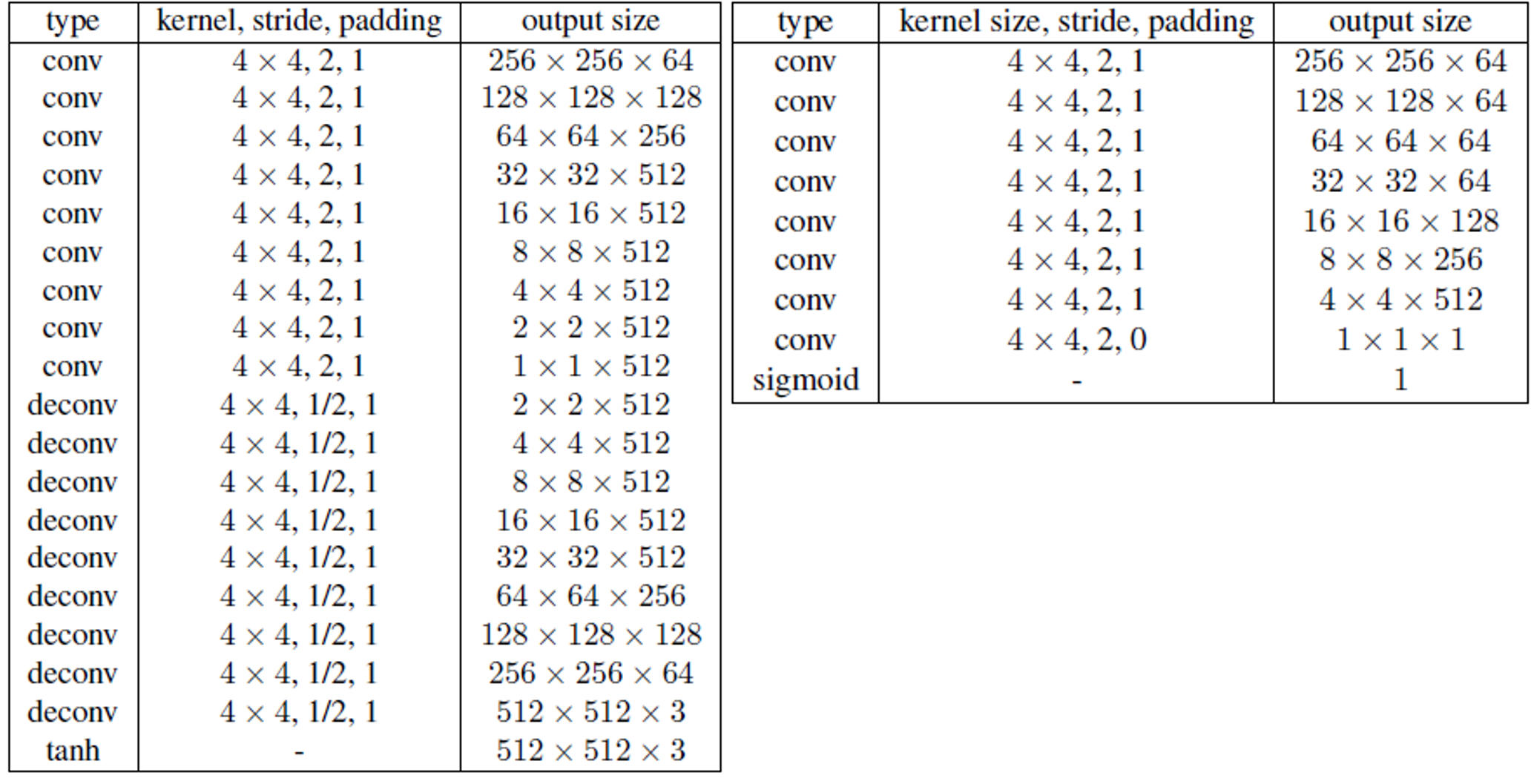

The denoiser network consists of an encoder-decoder network (left) and a discriminator DR (right).