Scribengin

Pronounced Scribe Engine

Scribengin is a highly reliable, highly available (HA), and performant event/logging transport that registers data under defined schemas in a variety of end systems. It lets you run multiple flows of data, each from a source to a sink. Scribengin tolerates failures of individual nodes and recovers completely after a full system failure.

Reads data from sources:

- Kafka

- AWS Kinesis

Writes data to sinks:

- HDFS, HBase, Hive (with HCat integration), and Elasticsearch

Additional:

- Monitoring with Ganglia

- Heartbeat alerting with Nagios

This is part of NeverwinterDP, the Data Pipeline for Hadoop.

Running

To get your VM up and running:

git clone git://github.com/DemandCube/Scribengin

cd Scribengin/vagrant

vagrant up

For more information on how it all works, see [The DevSetup Guide](https://github.com/DemandCube/Scribengin/blob/master/DevSetup.md).

Community

- Mailing List

- IRC channel #Scribengin on irc.freenode.net

Contributing

See the [NeverwinterDP Guide to Contributing](https://github.com/DemandCube/NeverwinterDP#how-to-contribute)

The Problem

The core problem is how to have a distributed application write data to multiple destination data systems reliably and at scale. This requires the ability to do data mapping and partitioning, with optional filtering, for each destination system.
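As a rough illustration of the mapping, filtering, and partitioning steps described above, the sketch below routes one stream of events to two in-memory destinations. It is not Scribengin code: `Route`, `dispatch`, and the record fields are hypothetical names chosen for this example, and plain dictionaries stand in for real destination systems.

```python
import zlib
from dataclasses import dataclass, field
from typing import Callable, Optional

Record = dict


@dataclass
class Route:
    """Hypothetical per-destination rules: filter, then map, then partition."""
    name: str
    mapper: Callable[[Record], Record]
    partition_key: Callable[[Record], str]
    num_partitions: int
    keep: Optional[Callable[[Record], bool]] = None   # optional filter
    partitions: dict = field(default_factory=dict)    # stand-in for a real sink

    def accept(self, record: Record) -> None:
        if self.keep is not None and not self.keep(record):
            return
        mapped = self.mapper(record)
        # crc32 is stable across runs, unlike the built-in hash() for strings.
        key = self.partition_key(mapped).encode("utf-8")
        index = zlib.crc32(key) % self.num_partitions
        self.partitions.setdefault(index, []).append(mapped)


def dispatch(records, routes):
    """Offer every record to every route; each route applies its own rules."""
    for record in records:
        for route in routes:
            route.accept(record)


events = [
    {"host": "web1", "level": "INFO", "msg": "started"},
    {"host": "web2", "level": "ERROR", "msg": "disk full"},
    {"host": "web1", "level": "ERROR", "msg": "timeout"},
]

# Destination 1: keep everything unchanged, partitioned by host.
archive = Route(
    name="archive",
    mapper=lambda r: dict(r),
    partition_key=lambda r: r["host"],
    num_partitions=4,
)

# Destination 2: errors only, reduced to two fields.
errors = Route(
    name="errors",
    mapper=lambda r: {"host": r["host"], "msg": r["msg"]},
    partition_key=lambda r: r["host"],
    num_partitions=2,
    keep=lambda r: r["level"] == "ERROR",
)

dispatch(events, [archive, errors])
```

Keeping the filter, mapper, and partitioner as separate per-destination functions means a new destination can be added without touching the rules of the existing ones.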

Status

We are currently reorganizing the code for V2 of Scribengin to make it more modular.

Definitions

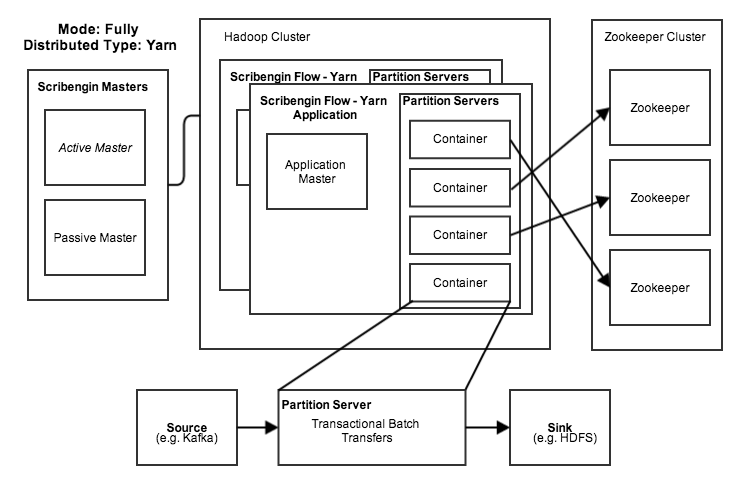

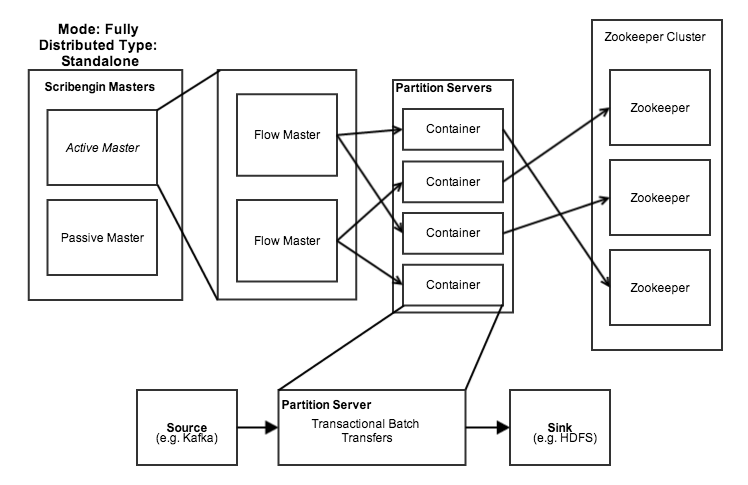

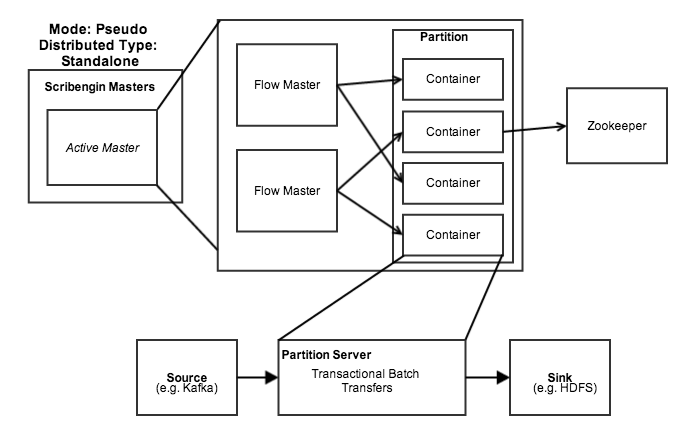

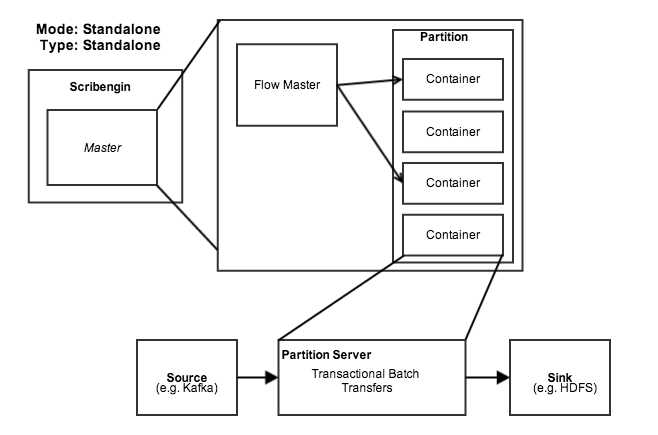

- Flow - data being moved from a single source to a single sink

- Source - a system that data is read from (e.g. Kafka, Kinesis)

- Sink - a destination system that data is written to (e.g. HDFS, HBase, Hive)

- Tributary - a portion or partition of the data in a Flow
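The relationship between these terms can be sketched as a small data model. This is an illustrative assumption, not the project's actual classes: the names `Endpoint`, `Flow`, and `Tributary`, and the idea that each source partition becomes one tributary, are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Endpoint:
    """A source or sink system (hypothetical representation)."""
    system: str    # e.g. "kafka" or "hdfs"
    location: str  # e.g. a topic name or a path


@dataclass(frozen=True)
class Tributary:
    """One partition of the data moving through a flow."""
    flow_name: str
    partition: int


@dataclass(frozen=True)
class Flow:
    """Data moving from exactly one source to exactly one sink."""
    name: str
    source: Endpoint
    sink: Endpoint
    source_partitions: int  # assumption: one tributary per source partition

    def tributaries(self) -> List[Tributary]:
        return [Tributary(self.name, p) for p in range(self.source_partitions)]


flow = Flow(
    name="clicks-to-hdfs",
    source=Endpoint("kafka", "clicks"),
    sink=Endpoint("hdfs", "/data/clicks"),
    source_partitions=3,
)
tribs = flow.tributaries()
```

Under this model, moving the same source data to a second sink would be a second `Flow`, since a flow has exactly one source and one sink.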

Yarn

See the [NeverwinterDP Guide to Yarn](https://github.com/DemandCube/NeverwinterDP#Yarn)

Potential Implementation Strategies

PoC

- Storm

- Spark-streaming

- Yarn

- Local Mode (Single Node No Yarn)

- Distributed Standalone Cluster (No-Yarn)

- Hadoop Distributed (Yarn)

There is an open question of how to implement guaranteed delivery of logs to end systems.

- Storm to HCat

- Storm to HBase

- Create a framework for choosing other destination systems
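The guaranteed-delivery question above is left open here. One common pattern, shown below purely as a sketch, is at-least-once delivery: the read position is advanced ("committed") only after the sink write succeeds, and the sink is keyed by offset so a replayed write overwrites rather than duplicates. `FlakySink` and `run_flow` are hypothetical names, and nothing here reflects how Scribengin, Storm, or HCat actually behave.

```python
class FlakySink:
    """In-memory stand-in for a destination that fails on chosen attempts."""

    def __init__(self, fail_on_attempts):
        self.rows = {}      # keyed by offset, so a replay overwrites
        self.attempts = 0
        self.fail_on_attempts = set(fail_on_attempts)

    def write(self, offset, record):
        self.attempts += 1
        if self.attempts in self.fail_on_attempts:
            raise IOError("simulated sink failure")
        self.rows[offset] = record


def run_flow(log, sink, committed=0, max_retries=3):
    """Deliver log[committed:] to sink and return the new committed offset."""
    offset = committed
    while offset < len(log):
        for _ in range(max_retries):
            try:
                sink.write(offset, log[offset])
                break
            except IOError:
                continue
        else:
            # All retries failed: stop without committing this offset,
            # so a later run resumes from exactly this record.
            return offset
        offset += 1  # commit only after the write succeeded
    return offset


log = ["a", "b", "c", "d"]
sink = FlakySink(fail_on_attempts=[2, 3])
committed = run_flow(log, sink)
```

Because the offset is never advanced past a failed write, a crash or exhausted retry leaves the flow resumable by calling `run_flow` again with the last returned offset.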

Architecture

Milestones

- Architecture Proposal

- Kafka -> HCatalog

- Notification API

- Notification API Close Partitions HCatalog

- Ganglia Integration

- Nagios Integration

- Unix Man page

- Guide

- Untar and Deploy - Work out of the box

- CentOS Package

- CentOS Repo Setup and Deploy of CentOS Package

- RHEL Package

- RHEL Repo Setup and Deploy of RHEL Package

- Scribengin/Ambari Deployment

- Scribengin/Ambari Monitoring/Ganglia

- Scribengin/Ambari Notification/Nagios

Contributors

Related Project

Research

Yarn Documentation

Keep your fork updated

- Add the remote, call it "upstream":

git remote add upstream git@github.com:DemandCube/Scribengin.git

- Fetch all the branches of that remote into remote-tracking branches, such as upstream/master:

git fetch upstream

- Make sure that you're on your master branch:

git checkout master

- Merge upstream changes into your master branch:

git merge upstream/master