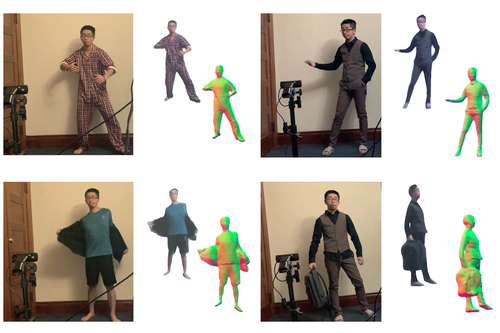

Our volumetric capture system captures a fully clothed human body (including the back) in real time using a single RGB webcam.

- Python 3.7

- PyOpenGL 3.1.5 (requires an X server on Ubuntu)

- PyTorch (tested on 1.4.0)

- ImplicitSegCUDA

- human_inst_seg

- streamer_pytorch

- human_det
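The versions listed above can be compared against a local environment before installing the rest. The following is a minimal sketch, not part of this repo: the helper names `parse_version` and `check_versions` and the `"python"` key are hypothetical, and the installed versions are passed in by hand so the check itself does not depend on PyTorch or PyOpenGL being importable.

```python
import re

# Versions taken from the requirements list above.
TESTED = {"python": "3.7", "PyOpenGL": "3.1.5", "torch": "1.4.0"}


def parse_version(text):
    """Turn a string such as '1.4.0+cu100' into (1, 4, 0)."""
    parts = []
    # Drop any local suffix after '+', then read the leading digits of each piece.
    for piece in text.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def check_versions(installed, tested=TESTED):
    """Return a list of readable mismatches; the list is empty if all match."""
    problems = []
    for name, want in tested.items():
        have = installed.get(name)
        if have is None:
            problems.append(f"{name}: not found (tested on {want})")
            continue
        want_v = parse_version(want)
        # Compare only as many components as the tested version specifies.
        if parse_version(have)[:len(want_v)] != want_v:
            problems.append(f"{name}: found {have}, tested on {want}")
    return problems
```

A mismatch does not necessarily mean the demo will fail; it only indicates that the environment differs from the one the authors report.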

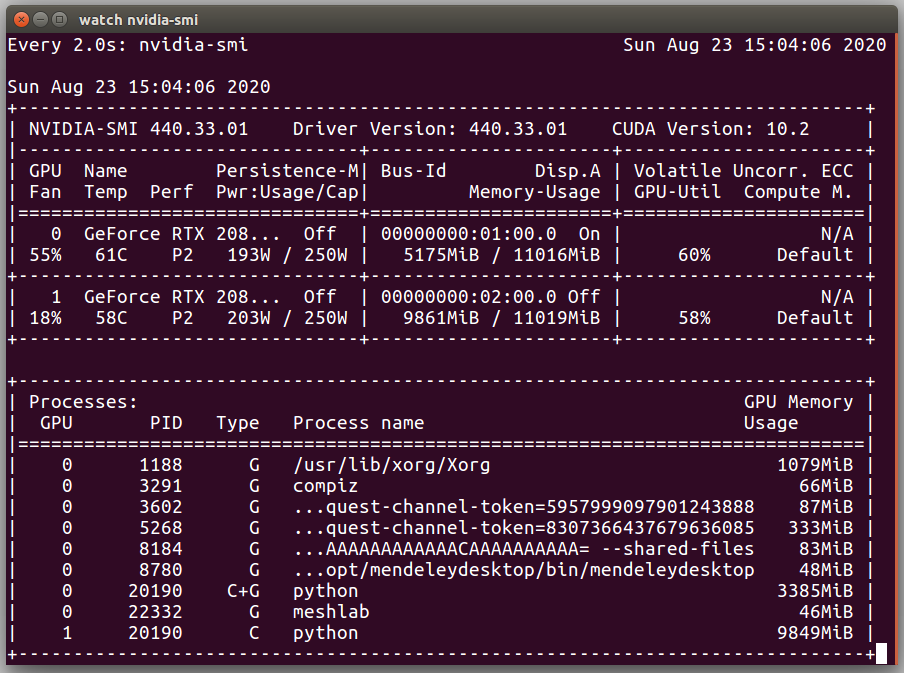

We run the demo on 2 GeForce RTX 2080Ti GPUs; the memory usage is as follows (~3.4GB on GPU1, ~9.7GB on GPU2):

Note: The last four dependencies are also developed by our team and are all under active maintenance. If you run into installation problems specific to those tools, we recommend filing an issue in the corresponding repo. (You don't need to install them manually here, as they are included in the requirements.txt.)
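To see whether your own GPUs have headroom comparable to the memory figures quoted above, one option is to parse the output of `nvidia-smi`. This is a sketch under assumptions: the helper names are hypothetical, the sample numbers in the usage note are illustrative rather than measured, and `query_gpu_memory` only works on a machine with an NVIDIA driver installed.

```python
import subprocess

# Ask nvidia-smi for one CSV row per GPU: index, used MiB, total MiB.
QUERY = ["nvidia-smi", "--query-gpu=index,memory.used,memory.total",
         "--format=csv,noheader,nounits"]


def parse_gpu_memory(csv_text):
    """Parse 'index, used, total' rows (in MiB) into a list of dicts."""
    rows = []
    for line in csv_text.strip().splitlines():
        index, used, total = (field.strip() for field in line.split(","))
        rows.append({"index": int(index),
                     "used_mib": int(used),
                     "total_mib": int(total)})
    return rows


def gpus_with_free_memory(rows, needed_mib):
    """Return the indices of GPUs with at least `needed_mib` MiB unused."""
    return [r["index"] for r in rows
            if r["total_mib"] - r["used_mib"] >= needed_mib]


def query_gpu_memory():
    """Run nvidia-smi and parse its output (requires an NVIDIA driver)."""
    result = subprocess.run(QUERY, check=True, stdout=subprocess.PIPE,
                            universal_newlines=True)
    return parse_gpu_memory(result.stdout)
```

For example, `gpus_with_free_memory(query_gpu_memory(), 9700)` would list the GPUs that currently have roughly enough free memory for the heavier of the two loads reported above.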

First you need to download the model:

sh scripts/download_model.sh

Then install all the dependencies:

pip install -r requirements.txt

# if you want to use the input from a webcam:

python RTL/main.py --use_server --ip <YOUR_IP_ADDRESS> --port 5555 --camera -- netG.ckpt_path ./data/PIFu/net_G netC.ckpt_path ./data/PIFu/net_C

# or if you want to use the input from an image folder:

python RTL/main.py --use_server --ip <YOUR_IP_ADDRESS> --port 5555 --image_folder <IMAGE_FOLDER> -- netG.ckpt_path ./data/PIFu/net_G netC.ckpt_path ./data/PIFu/net_C

# or if you want to use the input from a video:

python RTL/main.py --use_server --ip <YOUR_IP_ADDRESS> --port 5555 --videos <VIDEO_PATH> -- netG.ckpt_path ./data/PIFu/net_G netC.ckpt_path ./data/PIFu/net_C
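The three commands above differ only in the input-source flag. If you launch the demo from a script, the variants can be assembled programmatically. The sketch below uses only the flags and checkpoint paths shown above; the function `build_command` and its parameter names are hypothetical and not part of the repo.

```python
def build_command(ip, port=5555, camera=False, image_folder=None, video=None):
    """Build the argument list for RTL/main.py with exactly one input source."""
    chosen = [bool(camera), image_folder is not None, video is not None]
    if sum(chosen) != 1:
        raise ValueError("choose exactly one of camera, image_folder, video")

    cmd = ["python", "RTL/main.py", "--use_server",
           "--ip", ip, "--port", str(port)]
    if camera:
        cmd.append("--camera")
    elif image_folder is not None:
        cmd += ["--image_folder", image_folder]
    else:
        cmd += ["--videos", video]

    # Everything after the bare "--" is passed as config overrides,
    # mirroring the commands above.
    cmd += ["--", "netG.ckpt_path", "./data/PIFu/net_G",
            "netC.ckpt_path", "./data/PIFu/net_C"]
    return cmd
```

The resulting list can be handed to `subprocess.run(cmd, check=True)` from the repository root, which avoids shell quoting issues with paths that contain spaces.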

If everything goes well, you should see the following logs after a few seconds:

loading networkG from ./data/PIFu/net_G ...

loading networkC from ./data/PIFu/net_C ...

initialize data streamer ...

Using cache found in /home/rui/.cache/torch/hub/NVIDIA_DeepLearningExamples_torchhub

Using cache found in /home/rui/.cache/torch/hub/NVIDIA_DeepLearningExamples_torchhub

* Serving Flask app "main" (lazy loading)

* Environment: production

WARNING: This is a development server. Do not use it in a production deployment.

Use a production WSGI server instead.

* Debug mode: on

* Running on http://<YOUR_IP_ADDRESS>:5555/ (Press CTRL+C to quit)

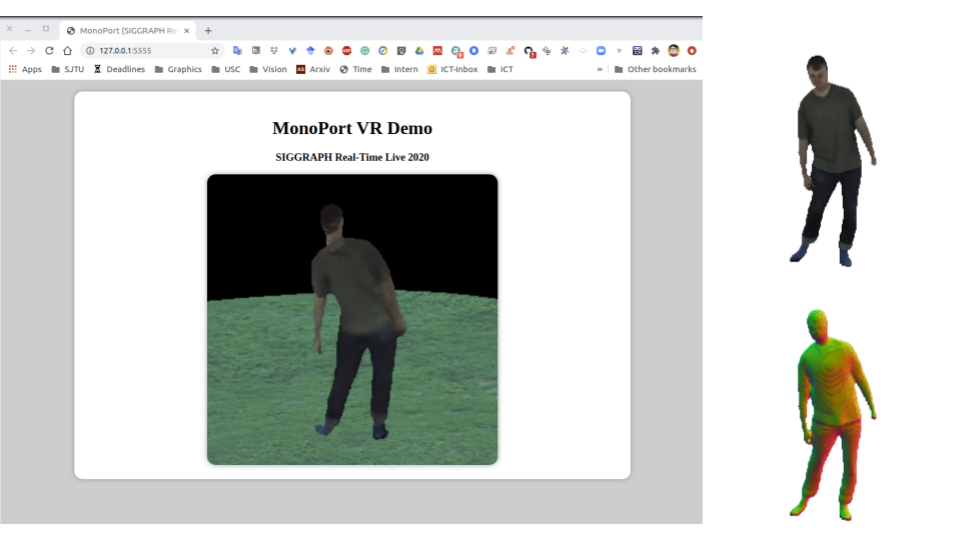

Open the page http://<YOUR_IP_ADDRESS>:5555/ in a web browser on any device (desktop/iPad/iPhone). You should see the MonoPort VR Demo page on that device, and at the same time a window should pop up on your desktop showing the reconstructed normal and texture images.
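If the page does not load from another device, it can help to confirm first that the server port is reachable over the network. The following is a minimal sketch using only the Python standard library; the helper `wait_for_port` is hypothetical and only checks that a TCP connection can be opened, not that the demo page itself renders.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused, unreachable host, or connect timeout: retry.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Calling `wait_for_port("<YOUR_IP_ADDRESS>", 5555)` from the client machine (with your actual address substituted) distinguishes a firewall or addressing problem from a problem in the browser.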

MonoPort is based on Monocular Real-Time Volumetric Performance Capture (ECCV'20), authored by Ruilong Li*(@liruilong940607), Yuliang Xiu*(@yuliangxiu), Shunsuke Saito(@shunsukesaito), Zeng Huang(@ImaginationZ) and Kyle Olszewski(@kyleolsz). Hao Li is the corresponding author.

@article{li2020monocular,

title={Monocular Real-Time Volumetric Performance Capture},

author={Li, Ruilong and Xiu, Yuliang and Saito, Shunsuke and Huang, Zeng and Olszewski, Kyle and Li, Hao},

journal={arXiv preprint arXiv:2007.13988},

year={2020}

}

@inproceedings{10.1145/3407662.3407756,

author = {Li, Ruilong and Olszewski, Kyle and Xiu, Yuliang and Saito, Shunsuke and Huang, Zeng and Li, Hao},

title = {Volumetric Human Teleportation},

year = {2020},

isbn = {9781450380607},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3407662.3407756},

doi = {10.1145/3407662.3407756},

booktitle = {ACM SIGGRAPH 2020 Real-Time Live!},

articleno = {9},

numpages = {1},

location = {Virtual Event, USA},

series = {SIGGRAPH 2020}

}

PIFu: Pixel-Aligned Implicit Function for High-Resolution Clothed Human Digitization (ICCV 2019)

Shunsuke Saito*, Zeng Huang*, Ryota Natsume*, Shigeo Morishima, Angjoo Kanazawa, Hao Li

The original work on Pixel-Aligned Implicit Function for geometry and texture reconstruction, unifying single-view and multi-view methods.

PIFuHD: Multi-Level Pixel-Aligned Implicit Function for High-Resolution 3D Human Digitization (CVPR 2020)

Shunsuke Saito, Tomas Simon, Jason Saragih, Hanbyul Joo

They further improve the quality of reconstruction by leveraging a multi-level approach!

ARCH: Animatable Reconstruction of Clothed Humans (CVPR 2020)

Zeng Huang, Yuanlu Xu, Christoph Lassner, Hao Li, Tony Tung

Learning PIFu in canonical space for animatable avatar generation!

Robust 3D Self-portraits in Seconds (CVPR 2020)

Zhe Li, Tao Yu, Chuanyu Pan, Zerong Zheng, Yebin Liu

They extend PIFu to RGBD and introduce "PIFusion", which utilizes PIFu reconstruction for non-rigid fusion.

Real-time VR PhD Defense

Dr. Zeng Huang defended his PhD virtually using our system. (Media in Chinese)

Copyright (c) 2020 Ruilong Li, University of Southern California

Please read carefully the following terms and conditions and any accompanying documentation before you download and/or use this software and associated documentation files (the "Software").

The authors hereby grant you a non-exclusive, non-transferable, free of charge right to copy, modify, merge, publish, distribute, and sublicense the Software for the sole purpose of performing non-commercial scientific research, non-commercial education, or non-commercial artistic projects.

Any other use, in particular any use for commercial purposes, is prohibited. This includes, without limitation, incorporation in a commercial product, use in a commercial service, or production of other artefacts for commercial purposes.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

You understand and agree that the authors are under no obligation to provide either maintenance services, update services, notices of latent defects, or corrections of defects with regard to the Software. The authors nevertheless reserve the right to update, modify, or discontinue the Software at any time.

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. You agree to cite the Volumetric Human Teleportation and Monocular Real-Time Volumetric Performance Capture papers in documents and papers that report on research using this Software.