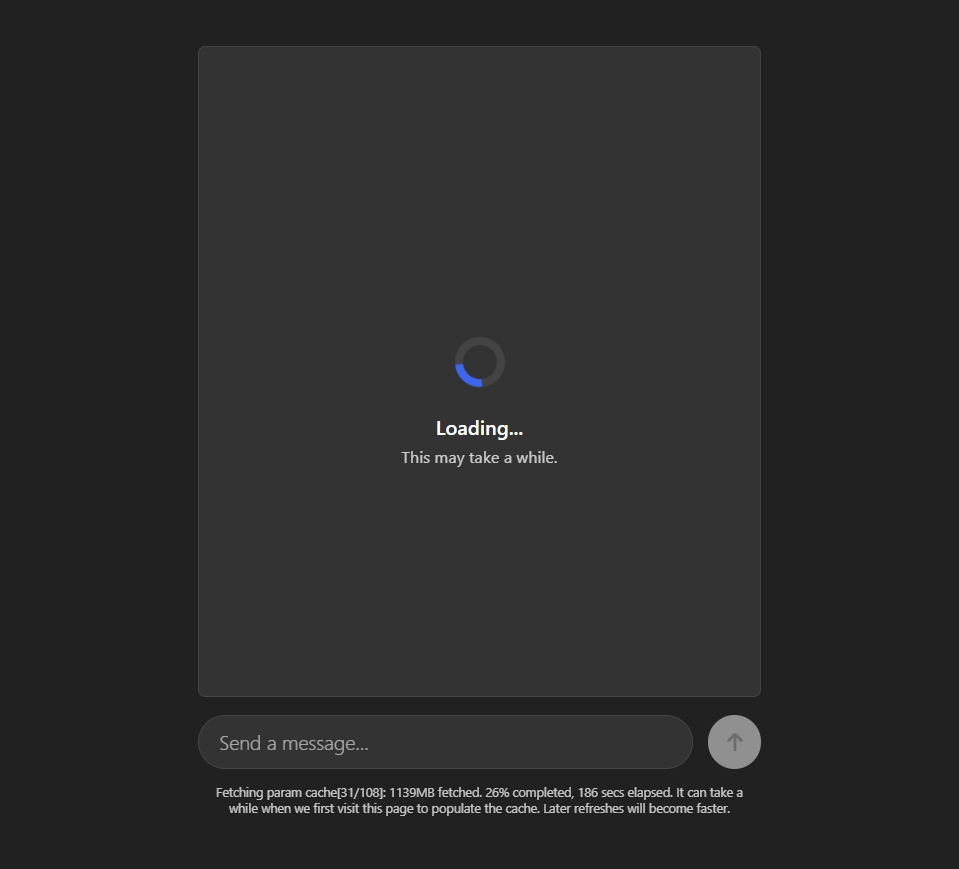

Local-LLM runs the LLaMA-3-8B-Instruct-q4f32_1-MLC-1k language model from WebLLM locally in the browser, which improves privacy and reduces dependence on a server. All model data is cached in the browser, so later visits do not need to download it again.

To use Local-LLM, web storage must be enabled in your browser. Local-LLM relies on it to cache the model and will not work correctly without it.

For example, in Microsoft Edge (whose settings use the labels below) you can enable it as follows:

- Click the menu button (three dots) in the top-right corner of the browser window.

- Select “Settings” from that menu.

- Click “Cookies and site permissions”.

- Click “Manage and delete cookies and site data”.

- Turn on “Allow sites to save and read cookie data (recommended)”.
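Once storage is enabled, the page can check at startup that it is actually usable and show a hint pointing to these settings if it is not. The sketch below uses the common feature-detection pattern of writing and then removing a test key. It assumes `localStorage` is a reasonable proxy for the browser storage that holds the cached model; the `isStorageUsable` name and the probe key are hypothetical and not part of Local-LLM.

```typescript
// Minimal subset of the Web Storage API that the check needs.
interface StorageLike {
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

// Hypothetical probe key; any name unlikely to collide with real data works.
const PROBE_KEY = "__local_llm_storage_probe__";

/**
 * Returns true if `getStorage` yields a storage object that accepts writes.
 * Browsers that block site data can throw as soon as `localStorage` is
 * accessed, so the getter itself is called inside the try block.
 */
function isStorageUsable(getStorage: () => StorageLike | undefined): boolean {
  try {
    const storage = getStorage();
    if (!storage) return false;
    storage.setItem(PROBE_KEY, PROBE_KEY);
    storage.removeItem(PROBE_KEY); // leave no trace behind
    return true;
  } catch {
    // Typically a SecurityError (storage blocked) or QuotaExceededError (storage full).
    return false;
  }
}
```

In the page itself this would be called as `isStorageUsable(() => window.localStorage)`. Passing a getter rather than the object lets the check catch errors thrown on access as well as on write.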

Developing Local-LLM provided hands-on experience with the following:

- Using ECMAScript modules to structure the code.

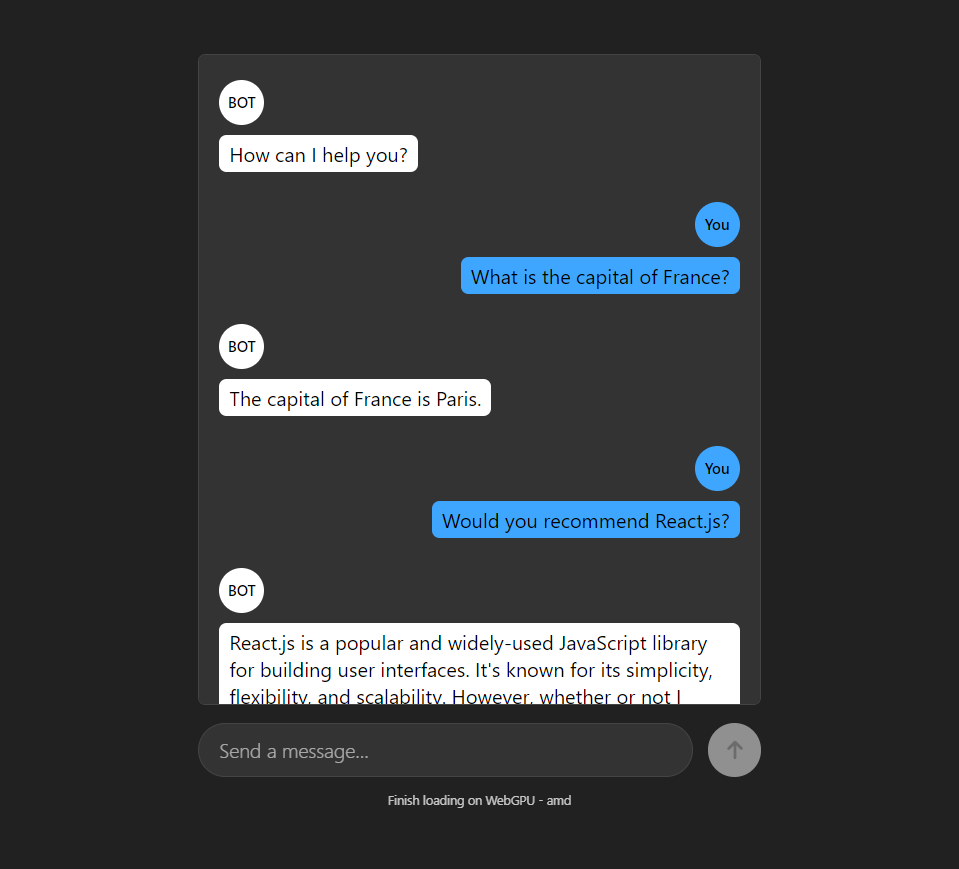

- Handling form events to respond to user input.

- Using HTML templates to create and update DOM content.

- Using Web Workers to run intensive tasks off the main thread, keeping the interface responsive.

- Running a language model directly in the browser and evaluating its feasibility and performance.
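Offloading work to a Web Worker comes down to exchanging messages with `postMessage`, so the page needs a way to match each reply to the request that caused it. The sketch below shows one such request/response scheme using numeric ids. It is not Local-LLM's actual code: the message shapes and the `createRequester` and `handleRequest` helpers are hypothetical, and `generate` stands in for the model call that would run inside the worker.

```typescript
// Hypothetical message shapes exchanged between the page and the worker.
type WorkerRequest = { id: number; prompt: string };
type WorkerReply = { id: number; text?: string; error?: string };

/**
 * Page side: tags each request with an id and settles the matching promise
 * when a reply with that id arrives, even if replies come back out of order.
 */
function createRequester(send: (message: WorkerRequest) => void) {
  let nextId = 0;
  const pending = new Map<number, { resolve: (text: string) => void; reject: (err: unknown) => void }>();

  return {
    request(prompt: string): Promise<string> {
      const id = nextId++;
      return new Promise<string>((resolve, reject) => {
        pending.set(id, { resolve, reject });
        try {
          send({ id, prompt });
        } catch (err) {
          pending.delete(id); // do not leak an entry for a message never sent
          reject(err);
        }
      });
    },
    handleReply(reply: WorkerReply): void {
      const entry = pending.get(reply.id);
      if (!entry) return; // unknown or already-settled id: ignore
      pending.delete(reply.id);
      if (reply.error !== undefined) entry.reject(new Error(reply.error));
      else entry.resolve(reply.text ?? "");
    },
  };
}

/**
 * Worker side: runs `generate` and posts back a reply carrying the same id,
 * converting any thrown error into an error message.
 */
async function handleRequest(
  request: WorkerRequest,
  generate: (prompt: string) => Promise<string>,
  reply: (message: WorkerReply) => void,
): Promise<void> {
  let response: WorkerReply;
  try {
    response = { id: request.id, text: await generate(request.prompt) };
  } catch (err) {
    response = { id: request.id, error: err instanceof Error ? err.message : String(err) };
  }
  reply(response);
}
```

In a browser, assuming a hypothetical module worker file `worker.js`, the page would create `new Worker(new URL("./worker.js", import.meta.url), { type: "module" })`, pass `(m) => worker.postMessage(m)` as `send`, and forward `event.data` from the worker's `message` event to `handleReply`; inside the worker, each incoming `event.data` would go to `handleRequest` with `(m) => self.postMessage(m)` as `reply`. Keeping the helpers independent of `Worker` makes the protocol testable without a browser.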