Ondrej Sika (sika.io) | ondrej@sika.io

Ondrej Sika <ondrej@ondrejsika.com>

https://github.com/ondrejsika/docker-training

My Docker course with code examples.

Write me an email at ondrej@sika.io

- https://github.com/ondrejsika/kubernetes-training-examples

- https://github.com/ondrejsika/bare-metal-kubernetes

./pull-images.sh

If you want to update the list of used images in the file images.txt, run ./save-image-list.sh and then remove the locally built images from the list.

Freelance DevOps Engineer, Consultant & Lecturer

- Complete DevOps Pipeline

- Open Source / Linux Stack

- Cloud & On-Premise

- Technologies: Git, Gitlab, Gitlab CI, Docker, Kubernetes, Terraform, Prometheus, ELK / EFK, Rancher, Proxmox

Feel free to star this repository or fork it.

If you find a bug, create an issue or a pull request.

Also feel free to propose improvements by creating issues.

For sharing links & "secrets".

Docker is an open-source project that automates the deployment of applications inside software containers ...

Docker containers wrap up a piece of software in a complete filesystem that contains everything it needs to run: code, runtime, system tools, system libraries – anything you can install on a server.

A VM is an abstraction of physical hardware. Each VM has a full server hardware stack from virtualized BIOS to virtualized network adapters, storage, and CPU.

That stack allows you to run any OS on your host, but it costs some performance.

Containers are an abstraction in the Linux kernel: just processes isolated by kernel namespaces (process, network, mount, …) and limited by cgroups (CPU, memory, …).

Containers run on the same kernel as the host, so it is not possible to use a different OS or kernel version, but containers are much faster than VMs.

- Performance

- Management

- Application (image) distribution

- Security

- One kernel / "Linux only"

- Almost everywhere

- Development, Testing, Production

- Better (easier, faster) deployment process

- Separates running applications

- Kubernetes

Docker CE is ideal for individual developers and small teams looking to get started with Docker and experimenting with container-based apps.

Docker Engine - Enterprise is designed for enterprise development of a container runtime with security and an enterprise grade SLA in mind.

Docker Enterprise is designed for enterprise development and IT teams who build, ship, and run business critical applications in production at scale.

Source: https://docs.docker.com/install/overview/

A set of 12 rules on how to write modern applications.

- Official installation - https://docs.docker.com/engine/installation/

- My install instructions (in Czech) - https://ondrej-sika.cz/docker/instalace/

- Bash Completion on Mac - https://blog.alexellis.io/docker-mac-bash-completion/

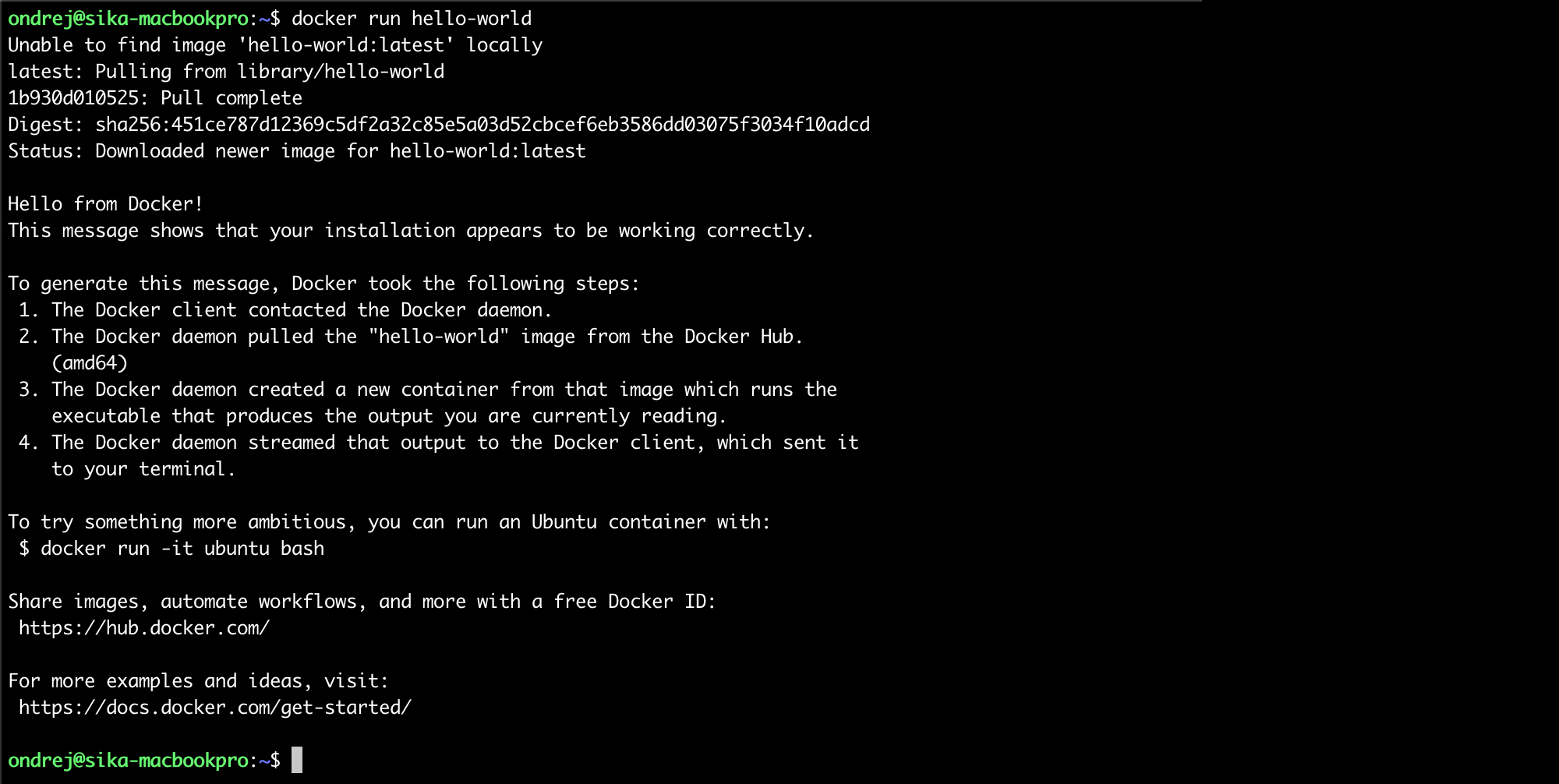

docker run hello-world

You can use a remote Docker daemon over SSH. Just export the DOCKER_HOST variable with the value ssh://root@docker.sikademo.com and your local Docker client will run all commands on the docker.sikademo.com server.

export DOCKER_HOST=ssh://root@docker.sikademo.com

docker version

docker info

An image is an inert, immutable file that's essentially a snapshot of a container. Images are created with the build command, and they produce a container when started with run. Images are stored in a Docker registry.

- docker version - print version

- docker info - system-wide information

- docker system df - Docker disk usage

- docker system prune - clean up unused data

- docker pull <image> - download an image

- docker image ls - list all images

- docker image ls -q - quiet output, just IDs

- docker image ls <image> - list image versions

- docker image rm <image> - remove an image

- docker image history <image> - show image history

- docker image inspect <image> - show image properties

A Docker image name also contains the location of its source. These names can be used:

- debian - official images on Docker Hub

- ondrejsika/debian - user (custom) images on Docker Hub

- reg.istry.cz/debian - image in my own registry
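To make the naming rules concrete, here is a minimal POSIX shell sketch. parse_image is a hypothetical helper, not part of Docker. It splits a name into registry, repository and tag using a simplified form of Docker's rules: the first path component counts as a registry only if it contains "." or ":" or is "localhost", the tag defaults to latest, and official Docker Hub images live under library/. Digests (@sha256:...) are deliberately not handled.

```shell
#!/bin/sh
# parse_image: hypothetical helper that prints "registry repository tag".
# Simplified: digests (@sha256:...) are not supported.
parse_image() {
  ref=$1
  registry=docker.io
  rest=$ref
  # The first path component is a registry only if it looks like a host.
  case $ref in
    */*)
      first=${ref%%/*}
      case $first in
        *.*|*:*|localhost) registry=$first; rest=${ref#*/} ;;
      esac
      ;;
  esac
  # The tag follows the last ":" of the last path component (default: latest).
  case ${rest##*/} in
    *:*) tag=${rest##*:}; repo=${rest%:*} ;;
    *)   tag=latest; repo=$rest ;;
  esac
  # Official images on Docker Hub live in the "library/" namespace.
  case $registry/$repo in
    docker.io/*/*) ;;
    docker.io/*) repo=library/$repo ;;
  esac
  echo "$registry $repo $tag"
}

parse_image debian                  # docker.io library/debian latest
parse_image ondrejsika/debian       # docker.io ondrejsika/debian latest
parse_image reg.istry.cz/debian:10  # reg.istry.cz debian 10
```

Note that a port in the registry part (for example localhost:5000/app:1) is not mistaken for a tag, because the registry is stripped off before the tag is looked for.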

A Docker registry is built into Gitlab and Github at no additional cost. You can find it in the Packages section.

You can run registry manually using this command:

docker run -d -p 5000:5000 --restart=always --name registry registry:2

See full deployment configuration here: https://docs.docker.com/registry/deploying/

You have to add registry_external_url to your Gitlab config and reconfigure.

echo "registry_external_url 'https://registry.example.com'" >> /etc/gitlab/gitlab.rb

gitlab-ctl reconfigure

See: https://reg.istry.cz/v2/_catalog

Deployed into Kubernetes using the sikalabs/simple-registry chart.

Set up SikaLabs Charts

helm repo add sikalabs https://helm.sikalabs.io

helm repo update

Install sikalabs/simple-registry chart

helm install registry sikalabs/simple-registry --set host=reg.istry.cz

I use reg as a CLI and web client. It works only with the open-source registry; Docker Hub uses a different API.

Install it from the release page on Github: https://github.com/genuinetools/reg/releases

reg ls <registry>

Example

reg ls reg.istry.cz

reg server -r <registry>

Example

reg server -r reg.istry.cz

docker run [ARGS] <image> [<command>]

Examples

# Basic Docker Run

docker run hello-world

# With custom command

docker run debian cat /etc/os-release

docker run ubuntu cat /etc/os-release

# With TTY & Standard Input

docker run -ti debian

- docker ps - list containers

- docker start <container>

- docker stop <container>

- docker restart <container>

- docker rm <container> - remove container

- --name <name> - container name

- --rm - remove container after stop

- -d - run in detached mode

- -ti - map TTY and STDIN (e.g. for bash)

- -e <variable>=<value> - set ENV variable

- --env-file=<env_file> - load all variables defined in the ENV file

By default, when the container's main process stops (or fails), the container stops and is not restarted.

You can choose another behavior using the argument --restart <restart policy>.

- --restart on-failure - restart only when the container exits with a non-zero exit code

- --restart always - always restart, even after a Docker daemon restart (or server restart)

- --restart unless-stopped - similar to always, but a container that was stopped manually stays stopped after a Docker daemon restart (or server restart)

If you want to set a maximum restart count for the on-failure restart policy, you can use: --restart on-failure:<count>
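The accepted restart values form a small grammar, which a few lines of POSIX shell can spell out. valid_restart_policy is a hypothetical helper, not part of Docker. It accepts no (Docker's default policy), always, unless-stopped, on-failure and on-failure:<count>.

```shell
#!/bin/sh
# valid_restart_policy: hypothetical helper that returns 0 for values
# accepted by --restart and 1 otherwise.
valid_restart_policy() {
  case $1 in
    no|always|unless-stopped|on-failure) return 0 ;;
    on-failure:*)
      count=${1#on-failure:}
      # The maximum restart count must be a non-empty number.
      case $count in
        ''|*[!0-9]*) return 1 ;;
        *) return 0 ;;
      esac
      ;;
    *) return 1 ;;
  esac
}

for policy in always on-failure:5 unless-stopped on-failure:x sometimes; do
  if valid_restart_policy "$policy"; then
    echo "ok:      docker run -d --restart $policy <image>"
  else
    echo "invalid: $policy"
  fi
done
```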

- docker ps - list running containers

- docker ps -a - list all containers

- docker ps -a -q - list IDs of all containers

Example of -q

docker rm -f $(docker ps -a -q)

or my dra (docker remove all) alias

alias dra='docker ps -a -q | xargs -r docker rm -f'

dra

docker exec <container> <command>

Arguments

- -d - run in detached mode

- -e <variable>=<value> - set ENV variable

- -ti - map TTY and STDIN (e.g. for bash)

- -u <user> - run the command as a specific user

Example

docker run --name pg11 -e POSTGRES_PASSWORD=pg -d postgres:11

docker exec -ti -u postgres pg11 psql

docker run --name pg12 -e POSTGRES_PASSWORD=pg -d postgres:12

docker exec -ti -u postgres pg12 psql

docker logs [-f] [-t] <container>

Args

- -f - follow output (similar to tail -f ...)

- -t - show timestamps

Examples

docker run --name loop -d ondrejsika/infinite-counter

docker logs loop

docker logs -t loop

docker logs -f loop

docker logs -ft loop

You can use the default Docker logging (the json-file driver) or other log drivers.

For example, if you want to log into syslog, you can use --log-driver syslog.

You can send logs directly to ELK (EFK) or Graylog using GELF. For ELK logging, use --log-driver gelf --log-opt gelf-address=udp://1.2.3.4:12201.

See the logging docs: https://docs.docker.com/config/containers/logging/configure/

Log Driver options:

- max-size - maximum size of a log file before it is rotated (default -1, unlimited); use, for example, 100k for kB, 10m for MB or 1g for GB.

- max-file - number of rotated log files to keep (default 1); only effective when max-size is also set.

- compress - compression of rotated logs (default disabled).

Example:

docker run --name log-rotation -d --log-opt max-size=1k --log-opt max-file=5 ondrejsika/log-rotation
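The --log-opt flags above apply to a single container. The same options can also be set as daemon-wide defaults in /etc/docker/daemon.json. Below is a sketch that writes such a file. DAEMON_JSON is a hypothetical variable that lets you write and review the file locally first. The 10m and 3 values are example choices.

```shell
#!/bin/sh
# Sketch: daemon-wide log defaults matching the --log-opt flags above.
# DAEMON_JSON is a hypothetical variable; on a real host the target is
# /etc/docker/daemon.json. Caution: this overwrites the file.
DAEMON_JSON=${DAEMON_JSON:-./daemon.json}

# In daemon.json, log-opts values must be strings, so numbers are quoted.
cat > "$DAEMON_JSON" <<'EOF'
{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  }
}
EOF

cat "$DAEMON_JSON"
# Then restart the Docker daemon (e.g. systemctl restart docker on systemd hosts);
# the defaults apply only to containers created afterwards.
```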

Get detailed information about a container as JSON.

docker inspect <container>

You can format the output with the Go template language (--format).

Examples:

docker inspect log-rotation --format "{{.NetworkSettings.IPAddress}}"

docker inspect log-rotation --format "{{.LogPath}}"

- Volumes are persistent data storage for containers.

- Volumes can be shared between containers and data are written directly to host.

CLI

- docker volume - all volume management commands

- docker volume ls - list all volumes

- docker volume rm <volume> - remove a volume

- docker volume prune - remove all unused volumes (not bound to any container)

Examples

# Anonymous volume
docker run -ti -v /data debian

# Named volume
docker run -ti -v my-volume:/data debian

# Bind mount of a host directory
docker run -ti -v $(pwd)/my-data:/data debian

docker image inspect redis --format "{{.Config.Volumes|json}}"

Install plugin

docker plugin install vieux/sshfs

Create SSH FS volume

docker volume create --driver vieux/sshfs \

-o sshcmd=root@sshfs.sikademo.com:/sshfs \

-o password=asdfasdf \

sshvolume

Use SSH FS volume

docker run -ti -v sshvolume:/data debian

Create NFS volume

docker volume create --driver local --opt type=nfs --opt o=addr=nfs.sikademo.com,rw --opt device=:/nfs nfsvolume

Use NFS volume

docker run -ti -v nfsvolume:/data debian

If you want to mount a volume read-only, add :ro to the volume argument.

Examples

docker run -ti -v my-volume:/data:ro debian

docker run -ti -v $(pwd)/my-data:/data:ro debian

The first example doesn't make much sense read-only: a new named volume is empty, and the container can't write anything into it.

docker ps -a --format '{{ .ID }}' | xargs -I {} docker inspect -f '{{ .Name }}{{ printf "\n" }}{{ range .Mounts }}{{ printf "\n\t" }}{{ .Type }} {{ if eq .Type "bind" }}{{ .Source }}{{ end }}{{ .Name }} => {{ .Destination }}{{ end }}{{ printf "\n" }}' {}

docker ps -a --filter volume=<volume>

Example

docker ps -a --filter volume=my-volume

If you want to forward a socket into a container, you can also use a volume. With sockets, the read-only parameter (:ro) doesn't restrict anything: the container can still connect to the socket and send requests.

docker run -v /var/run/docker.sock:/var/run/docker.sock docker docker ps

or

docker run -v /var/run/docker.sock:/var/run/docker.sock -ti docker

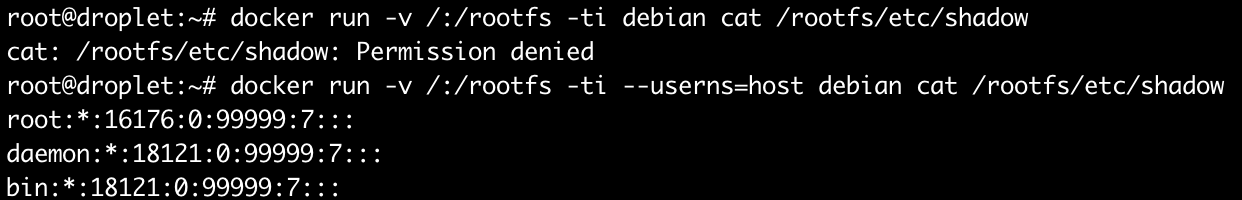

You can mount your host's root filesystem into a container with root privileges. Everybody who has access to Docker (or to the Docker socket) effectively has root privileges on your host.

userns-remap can fix that

docker run -v /:/rootfs -ti debian

Docker can remap the root user in a container to a high-numbered, unprivileged user on the host.

More: https://docs.docker.com/engine/security/userns-remap/

dockerd argument

dockerd --userns-remap="default"

Config /etc/docker/daemon.json

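{
  "userns-remap": "default"
}

# With userns-remap enabled, reading the host's /etc/shadow fails (permission denied)
docker run -v /:/rootfs -ti debian cat /rootfs/etc/shadow

# --userns=host disables the remapping for this container, so it works again
docker run -v /:/rootfs -ti --userns=host debian cat /rootfs/etc/shadow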
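The remapping is plain arithmetic over a subordinate ID range. Below is a sketch assuming a single range. SUBUID_ENTRY is a typical /etc/subuid line for the dockremap user that Docker uses with "default". It is an example value and is not read from your host. map_uid is a hypothetical helper.

```shell
#!/bin/sh
# Sketch of userns-remap ID arithmetic, assuming one subordinate range.
# Example /etc/subuid entry (format: user:start:count), not read from the host.
SUBUID_ENTRY="dockremap:100000:65536"

# map_uid <container uid>: print the host uid, fail if outside the range.
map_uid() {
  start=$(echo "$SUBUID_ENTRY" | cut -d: -f2)
  count=$(echo "$SUBUID_ENTRY" | cut -d: -f3)
  if [ "$1" -ge 0 ] && [ "$1" -lt "$count" ]; then
    echo $((start + $1))
  else
    return 1
  fi
}

map_uid 0      # 100000: root in the container is an unprivileged uid on the host
map_uid 1000   # 101000
```

This is why the first command above is denied: the file is owned by host root (uid 0), while the container's root acts as uid 100000.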

Run Docker in Docker

docker run --name docker -d --privileged docker:dind

Try running any Docker command in this container:

docker exec docker docker info

docker exec docker docker image ls

docker exec docker docker run hello-world

docker exec -ti docker sh

Docker can forward a specific port from a container to the host

docker run -p <host port>:<cont. port> <image>

You can specify an address on the host as well

docker run -p <host address>:<host port>:<cont. port> <image>

Examples

docker run -ti -p 8080:80 nginx

docker run -ti -p 127.0.0.1:8080:80 nginx

The latter makes the port reachable only from localhost.
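The -p value is read from the right: the last field is the container port, the one before it is the host port, and anything left over is the host address. parse_port is a hypothetical helper that sketches this split. It assumes IPv4 addresses, both ports given, no /udp suffix and no port ranges.

```shell
#!/bin/sh
# parse_port: hypothetical helper splitting [<host address>:]<host port>:<cont. port>
# into "address host_port container_port".
parse_port() {
  cport=${1##*:}        # after the last ":"  -> container port
  rest=${1%:*}          # everything before it
  hport=${rest##*:}     # after the next ":" from the right -> host port
  case $rest in
    *:*) haddr=${rest%:*} ;;   # an explicit host address was given
    *)   haddr=0.0.0.0 ;;      # no address: published on all interfaces
  esac
  echo "$haddr $hport $cport"
}

parse_port 8080:80            # 0.0.0.0 8080 80
parse_port 127.0.0.1:8080:80  # 127.0.0.1 8080 80
```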

A Dockerfile is the preferred way to create images.

Each instruction in a Dockerfile defines one step of the image; RUN, COPY and ADD each create a new layer.

To build an image, use the docker build command.

- FROM <image> - define the base image

- RUN <command> - run a command and save the result as a layer

- COPY <local path> <image path> - copy a file or directory into an image layer

- ADD <source> <image path> - like COPY, but local tar archives added by ADD are extracted

- ENV <variable> <value> - set an ENV variable

- USER <user> - switch user

- WORKDIR <path> - change the working directory

- VOLUME <path> - define a volume

- CMD <command> - the command to run on container start-up; the difference between CMD and ENTRYPOINT is explained later

- EXPOSE <port> - document the port the container listens on (publishing it still requires -p)
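Here is a sketch that writes a small Dockerfile using most of these instructions. The base image python:3.7-slim, requirements.txt, app.py and the app user are assumptions for illustration.

```shell
#!/bin/sh
# Sketch: write a Dockerfile that uses most of the instructions listed above.
cat > Dockerfile.example <<'EOF'
# Base image
FROM python:3.7-slim
# Environment variable set in the image
ENV PYTHONUNBUFFERED=1
WORKDIR /app
# Copy the dependency list first so this layer stays cached when only code changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
# Unprivileged user that owns the volume directory
RUN useradd --create-home app && mkdir /data && chown app /data
USER app
VOLUME /data
EXPOSE 80
CMD ["python", "app.py"]
EOF

cat Dockerfile.example
```

In a directory with requirements.txt and app.py, you can build it with docker build -f Dockerfile.example -t example .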

- .dockerignore lists files to ignore in the docker build process (they are not sent to the build context).

- Similar to .gitignore

Example of .dockerignore for Next.js (Node) project

Dockerfile

out

node_modules

.DS_Store

- docker build <path> -t <image> - build an image

- docker build <path> -f <dockerfile> -t <image> - build an image from a specific Dockerfile

- docker tag <source image> <target image> - rename (tag) an image

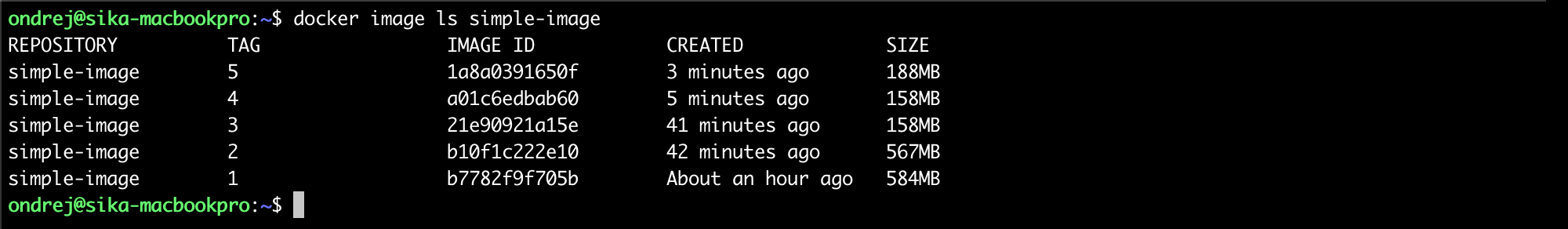

See Simple Image example

git clone https://github.com/ondrejsika/docker-training

cd docker-training/examples/simple-image

rm Dockerfile Dockerfile.debian

FROM debian:10

WORKDIR /app

RUN apt-get update && \

apt-get install -y --no-install-recommends python3 python3-pip && \

rm -rf /var/lib/apt/lists/*

COPY requirements.txt .

RUN pip3 install -r requirements.txt

COPY . .

CMD ["python3", "app.py"]

EXPOSE 80

docker build -t simple-image .

docker run --name simple-image -d -p 8000:80 simple-image

docker rm -f simple-image

FROM python:3.7-slim

WORKDIR /app

COPY requirements.txt .

RUN pip3 install -r requirements.txt

COPY . .

CMD ["python3", "app.py"]

EXPOSE 80

docker build -t simple-image .

docker run --name simple-image -d -p 8000:80 simple-image

docker rm -f simple-image

List images and see the difference in image sizes

docker image ls simple-image

Hadolint is a Dockerfile linter.

Github: https://github.com/hadolint/hadolint

Install on Mac

brew install hadolint

Use hadolint

hadolint <dockerfile>

You can ignore checks & specify trusted registries:

hadolint --ignore DL3003 --ignore DL3006 <dockerfile> # exclude specific rules

hadolint --trusted-registry registry.sikademo.com <dockerfile>

You can also use Hadolint from Docker:

docker run --rm -i hadolint/hadolint < Dockerfile

docker run --rm -i hadolint/hadolint hadolint --ignore DL3006 - < Dockerfile

or (for PowerShell)

cat Dockerfile | docker run --rm -i hadolint/hadolint

cat Dockerfile | docker run --rm -i hadolint/hadolint hadolint --ignore DL3006 -

Example in Dockerfile

# Choose the base image with a build arg
ARG FROM_IMAGE=debian:9
FROM $FROM_IMAGE

# Choose a package version with a build arg
FROM debian
ARG PYTHON_VERSION=3.7
RUN apt-get update && \
    apt-get install -y python$PYTHON_VERSION

Build using

docker build \
    --build-arg FROM_IMAGE=python .

docker build .

docker build \
    --build-arg PYTHON_VERSION=3.6 .

See Build Args example.
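One detail is easy to miss: an ARG declared before FROM can be used only in FROM lines. To use it inside the stage, redeclare it without a value. Below is a sketch that writes such a Dockerfile. GREETING and /greeting.txt are illustrative names.

```shell
#!/bin/sh
# Sketch: build args before and after FROM.
cat > Dockerfile.args <<'EOF'
ARG FROM_IMAGE=debian:10
FROM $FROM_IMAGE
# Redeclare (without a value) to make the pre-FROM arg visible in this stage
ARG FROM_IMAGE
ARG GREETING=hello
RUN echo "$GREETING from $FROM_IMAGE" > /greeting.txt
CMD ["cat", "/greeting.txt"]
EOF

# Build with defaults, then override both args:
#   docker build -f Dockerfile.args -t args-example .
#   docker build -f Dockerfile.args -t args-example \
#       --build-arg FROM_IMAGE=debian:11 --build-arg GREETING=ahoj .
```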

FROM java-jdk as build

RUN gradle assemble

FROM java-jre

COPY --from=build /build/demo.jar .

# By default, the last stage is used
docker build -t <image> <path>

# Select output stage
docker build -t <image> --target <stage> <path>

Examples

docker build -t app .

docker build -t build --target build .

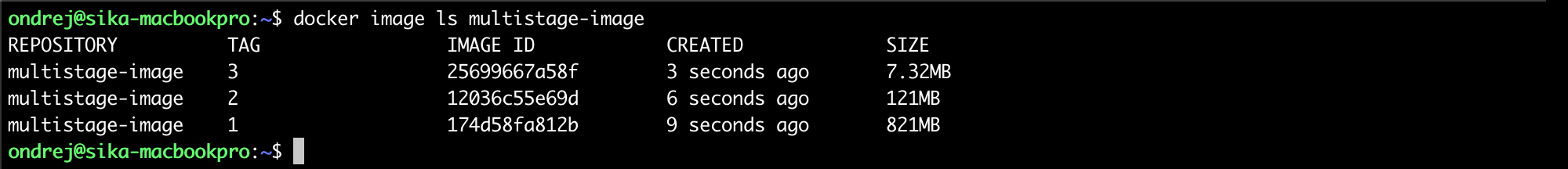

See Multistage Image example

cd ../multistage-image

rm Dockerfile

FROM golang

WORKDIR /app

COPY app.go .

RUN go build app.go

CMD ["./app"]

EXPOSE 80

Build & Run

docker build -t multistage-image:1 .

docker run --name multistage-image -d -p 8000:80 multistage-image:1

Stop & remove container

docker rm -f multistage-image

See the image size

docker image ls multistage-image

FROM golang as build

WORKDIR /build

COPY app.go .

RUN go build app.go

FROM debian:10

COPY --from=build /build/app .

CMD ["/app"]

EXPOSE 80

Build & Run

docker build -t multistage-image:2 .

docker run --name multistage-image -d -p 8000:80 multistage-image:2

Stop & remove container

docker rm -f multistage-image

See the image size

docker image ls multistage-image

If you build your Go app as a static binary (no dynamic dependencies), you can create the image from scratch, without any OS.

FROM golang as build

WORKDIR /build

COPY app.go .

ENV CGO_ENABLED=0

RUN go build -a -ldflags \

'-extldflags "-static"' app.go

FROM scratch

COPY --from=build /build/app .

CMD ["/app"]

EXPOSE 80

Build & Run

docker build -t multistage-image:3 .

docker run --name multistage-image -d -p 8000:80 multistage-image:3

Stop & remove container

docker rm -f multistage-image

See the image size

docker image ls multistage-image

Source: https://github.com/dotnet/dotnet-docker/tree/master/samples/aspnetapp

git clone https://github.com/dotnet/dotnet-docker.git

cd dotnet-docker/samples/aspnetapp

docker build -t dotnet-example .

docker run -ti -p 8000:80 dotnet-example

Docker has a new build backend called BuildKit, which can speed up your builds; for example, it builds independent stages in parallel. It also extends Dockerfile functionality with caches, mounts, ...

To enable BuildKit, just set environment variable DOCKER_BUILDKIT to 1.

Example

export DOCKER_BUILDKIT=1

docker build .

or

DOCKER_BUILDKIT=1 docker build .

If you are on Windows, you can set the variable in PowerShell

$env:DOCKER_BUILDKIT = "1"

and in CMD

SET DOCKER_BUILDKIT=1

You can enable BuildKit by default in the Docker config file /etc/docker/daemon.json:

{ "features": { "buildkit": true } }

BuildKit has interactive output by default. If you want plain output, for example in CI, use:

docker build --progress=plain .

BuildKit comes with a new Dockerfile syntax.

Here is a description of Dockerfile frontend syntax - https://github.com/moby/buildkit/blob/master/frontend/dockerfile/docs/syntax.md

Example of BuildKit's parallelism: the sikalabs/ci image

https://github.com/sikalabs/sikalabs-container-images/tree/master/ci

Example

cat > Dockerfile.1 <<EOF

# syntax = docker/dockerfile:1.3

FROM ubuntu

RUN rm -f /etc/apt/apt.conf.d/docker-clean; echo 'Binary::apt::APT::Keep-Downloaded-Packages "true";' > /etc/apt/apt.conf.d/keep-cache

RUN --mount=type=cache,target=/var/cache/apt --mount=type=cache,target=/var/lib/apt \

apt-get update && apt-get install -y python3 gcc

EOF

Build

docker build -t buildkit-example-1 -f Dockerfile.1 .

Another Dockerfile that uses the cached APT packages

cat > Dockerfile.2 <<EOF

# syntax = docker/dockerfile:1.3

FROM ubuntu

RUN rm -f /etc/apt/apt.conf.d/docker-clean; echo 'Binary::apt::APT::Keep-Downloaded-Packages "true";' > /etc/apt/apt.conf.d/keep-cache

RUN --mount=type=cache,target=/var/cache/apt --mount=type=cache,target=/var/lib/apt \

apt-get update && apt-get install -y gcc

EOF

Build

docker build -t buildkit-example-2 -f Dockerfile.2 .

The packages are already in the APT cache, so no download is needed.

More about BuildKit: https://docs.docker.com/develop/develop-images/build_enhancements/

You have a tool (echo) with a default configuration (its arguments) in CMD.
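The examples below follow a simple rule. Docker runs ENTRYPOINT followed by either the arguments given to docker run or, if there are none, CMD. effective_command is a hypothetical helper that models this rule for the exec form only. Shell-form instructions and --entrypoint overrides are ignored.

```shell
#!/bin/sh
# effective_command <entrypoint> <cmd> <run args>: hypothetical model of the
# final command line (exec form only).
effective_command() {
  entrypoint=$1   # ENTRYPOINT words (may be empty)
  cmd=$2          # CMD words
  run_args=$3     # words after the image name in `docker run`
  if [ -n "$run_args" ]; then
    cmd=$run_args # run arguments replace CMD, never ENTRYPOINT
  fi
  echo "${entrypoint:+$entrypoint }$cmd"
}

# echo:1 (CMD only): run arguments replace the whole command
effective_command "" "echo hello world" ""            # echo hello world
effective_command "" "echo hello world" "ahoj svete"  # ahoj svete (not an executable)
# echo:2 (ENTRYPOINT + CMD): run arguments replace only CMD
effective_command "echo" "hello world" "ahoj svete"   # echo ahoj svete
```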

cat > Dockerfile.1 <<EOF

FROM debian:10

CMD ["echo", "hello", "world"]

EOF

Build

docker build -t echo:1 -f Dockerfile.1 .

Run

# default command

docker run --rm echo:1

# updated command (doesn't work)

docker run --rm echo:1 ahoj svete

# properly updated command

docker run --rm echo:1 echo ahoj svete

You can split the command array into ENTRYPOINT and CMD like this:

cat > Dockerfile.2 <<EOF

FROM debian:10

ENTRYPOINT ["echo"]

CMD ["hello", "world"]

EOF

Build

docker build -t echo:2 -f Dockerfile.2 .

Run

# default command (same)

docker run --rm echo:2

# updated command (works)

docker run --rm echo:2 ahoj svete

Docker supports these network drivers:

- bridge (default)

- host

- none

- custom (bridge)

docker run debian ip a

docker run --net host debian ip a

docker run --net none debian ip a

- docker network ls

- docker network create <network>

- docker network rm <network>

Example:

docker network create -d bridge my_bridge

Run & Add Containers:

# Run on network

docker run -d --net=my_bridge --name nginx nginx

docker run -d --net=my_bridge --name apache httpd

# Connect to network

docker run -d --name nginx2 nginx

docker network connect my_bridge nginx2

Test the network

docker run -ti --net my_bridge ondrejsika/host nginx

docker run -ti --net my_bridge ondrejsika/host apache

docker run -ti --net my_bridge ondrejsika/curl nginx

docker run -ti --net my_bridge ondrejsika/curl apache

If you need to assign IP addresses from your local network directly to containers, you have to use Macvlan.

https://docs.docker.com/network/macvlan/

docker network create -d macvlan \

--subnet=192.168.101.0/24 \

--ip-range=192.168.101.128/25 \

--gateway=192.168.101.1 \

-o parent=eth0 macvlan

Portainer is a web UI for Docker.

Homepage: portainer.io

docker run -d --name portainer -p 8000:8000 -p 9000:9000 -v /var/run/docker.sock:/var/run/docker.sock -v portainer_data:/data portainer/portainer

Nixery.dev provides ad-hoc container images that contain packages from the Nix package manager. Images with arbitrary packages can be requested via the image name.

More at https://nixery.dev/

docker run nixery.dev/hello hello

docker run -ti nixery.dev/htop htop

docker run -ti nixery.dev/shell/git/curl/mc bash

google/cadvisor (homepage)

cAdvisor (Container Advisor) provides container users an understanding of the resource usage and performance characteristics of their running containers. It is a running daemon that collects, aggregates, processes, and exports information about running containers. Specifically, for each container it keeps resource isolation parameters, historical resource usage, histograms of complete historical resource usage and network statistics. This data is exported by container and machine-wide.

Install:

# use the latest release version from https://github.com/google/cadvisor/releases

VERSION=v0.36.0

docker run \

--volume=/:/rootfs:ro \

--volume=/var/run:/var/run:ro \

--volume=/sys:/sys:ro \

--volume=/var/lib/docker/:/var/lib/docker:ro \

--volume=/dev/disk/:/dev/disk:ro \

--publish=8080:8080 \

--detach=true \

--name=cadvisor \

--privileged \

--device=/dev/kmsg \

gcr.io/google-containers/cadvisor:$VERSION

Check out:

- Web UI - http://127.0.0.1:8080/

- /metrics (prometheus) - http://127.0.0.1:8080/metrics

That's it. Do you have any questions? Let's go for a beer!

Docker Compose is a tool for defining and running multi-container Docker applications.

With Docker Compose, you use a Compose file to configure your application's services.

Docker Compose is part of Docker Desktop (Mac, Windows). On Linux, you have to install it separately.

version: "3.7"
services:
  app:
    build: .
    ports:
      - 8000:80
  redis:
    image: redis

Here is a compose file reference: https://docs.docker.com/compose/compose-file/compose-file-v3/

Here is a nice tutorial for YAML: https://learnxinyminutes.com/docs/yaml/

Service is a container running and managed by Docker Compose.

docker-compose up [ARGS] [<service>, ...]

Example

docker-compose up

Just build, don't run

docker-compose build

Build without cache

docker-compose build --no-cache

Build with args

docker-compose build --build-arg BUILD_NO=53

Just pull & run image

services:
  app:
    image: redis

Simple, just build path

services:
  app:
    build: .

Extended form with every build configuration

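services:
  app:
    build:
      context: ./app
      # relative to the build context, i.e. ./app/docker/Dockerfile
      dockerfile: docker/Dockerfile
      args:
        BUILD_NO: 1
    image: reg.istry.cz/app

services:
  app:
    ports:
      - 8000:80
      - 127.0.0.1:80:80

Volumes work like docker run -v, with one difference: named volumes must also be declared in the top-level volumes section.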

services:
  app:
    volumes:
      - /data1
      - data:/data2
      - ./data:/data3
volumes:
  data:

services:
  app:
    command: ["python", "app.py"]

services:
  app:
    environment:
      RACK_ENV: development
      SHOW: "true"
      SESSION_SECRET:

ENV Files

services:
  app:
    env_file:
      - default.env
      - prod.env

Docker Compose uses standard bash-style variable substitution

services:
  app:
    image: ${IMAGE:-ondrejsika/go-hello-world:3}

services:
  app:
    image: ${IMAGE?Environment variable IMAGE is required}
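Compose supports a subset of shell-style interpolation, including the two forms used above. Plain sh evaluates them the same way, so a shell session is a quick way to preview the result. The value reg.istry.cz/app:1 is illustrative.

```shell
#!/bin/sh
unset IMAGE
# Unset (or empty) variable: the default after ":-" is used.
echo "image: ${IMAGE:-ondrejsika/go-hello-world:3}"   # image: ondrejsika/go-hello-world:3

IMAGE=reg.istry.cz/app:1
echo "image: ${IMAGE:-ondrejsika/go-hello-world:3}"   # image: reg.istry.cz/app:1

unset IMAGE
# "?" makes the variable mandatory: a non-interactive shell aborts with the
# message. The check runs in a subshell so only the subshell exits.
if ( : "${IMAGE?Environment variable IMAGE is required}" ) 2>/dev/null; then
  echo "IMAGE is set"
else
  echo "IMAGE is missing"   # printed here, because IMAGE is unset
fi
```

Compose behaves the same way when the variable is missing: it stops with the given error message.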

x-base: &base
  image: debian
  command: ["env"]

services:
  en:
    <<: *base
    environment:
      HELLO: Hello
  cs:
    <<: *base
    environment:
      HELLO: Ahoj

services:
  app:
    deploy:
      replicas: 4

git clone https://github.com/ondrejsika/docker-training.git example--simple-compose

cd example--simple-compose/examples/simple-compose

rm Dockerfile docker-compose.yml

Now we can create the Dockerfile and the Compose file manually.

Create Dockerfile:

FROM python:3.7-slim

WORKDIR /app

COPY requirements.txt .

RUN pip install -r requirements.txt

COPY . .

CMD ["python", "app.py"]

EXPOSE 80

Try without Docker Compose

docker build -t counter .

docker network create counter

docker run --name redis -d --net counter redis

docker run --name counter -d --net counter -p 8000:80 counter

docker stop counter redis

docker rm counter redis

docker network rm counter

Create docker-compose.yml:

version: '3.7'
services:
  counter:
    build: .
    image: reg.istry.cz/examples/simple-compose/counter
    ports:
      - ${PORT:-80}:80
    depends_on:
      - redis
  redis:
    image: redis

- docker-compose config - validate & see the final Compose YAML

- docker-compose ps - see all containers of the composition

- docker-compose exec <service> <command> - run something in a container

- docker-compose version - see the version of the docker-compose binary

- docker-compose logs [-f] [<service>] - see logs

- -d - run in detached mode

- --force-recreate - always create new containers

- --build - build on every run

- --no-build - don't build, even if images don't exist

- --remove-orphans - remove containers for services not defined in the Compose file

- docker-compose start [<service>]

- docker-compose stop [<service>]

- docker-compose restart [<service>]

- docker-compose kill [<service>]

docker-compose up

- run all services (or multiple selected services)

- you can't specify command, volumes, environment from CLI arguments

docker-compose run

- run only one service

- run dependencies in the background

- you can specify command, volumes, environment from CLI arguments

docker-compose down

docker-compose up --scale <service>=<n>

If you want to override your docker-compose.yml, you can use the -f parameter with multiple compose files. You can also create docker-compose.override.yml, which is used automatically.

See compose-override example.
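Compose merges an override into the base file. Single-value keys are replaced, while mappings such as environment are merged key by key. Below is a sketch of a development override for the counter service defined above. The DEBUG variable and the ./:/app bind mount are hypothetical. The version matches the base file.

```shell
#!/bin/sh
# Sketch: development override for the "counter" service.
cat > docker-compose.override.yml <<'EOF'
version: '3.7'
services:
  counter:
    environment:
      DEBUG: "1"
    volumes:
      - ./:/app
EOF

cat docker-compose.override.yml
# Without -f, docker-compose reads docker-compose.yml and the override automatically:
#   docker-compose config
# The explicit equivalent:
#   docker-compose -f docker-compose.yml -f docker-compose.override.yml config
```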

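Docker Compose doesn't support BuildKit natively yet. They are working on it.

It's because Docker Compose is written in Python, and the Python Docker client doesn't support BuildKit yet. Since docker-compose 1.25.0, you can work around this by setting COMPOSE_DOCKER_CLI_BUILD=1 (together with DOCKER_BUILDKIT=1), which makes Compose build images with the docker CLI.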

See:

That's it. Do you have any questions? Let's go for a beer!

- email: ondrej@sika.io

- web: https://sika.io

- twitter: @ondrejsika

- linkedin: /in/ondrejsika/

- Newsletter, Slack, Facebook & Linkedin Groups: https://join.sika.io

Do you like the course? Write me a recommendation on Twitter (with the handle @ondrejsika) and LinkedIn (add me /in/ondrejsika and I'll send you a request for a recommendation). Thanks.

Want to go for a beer or do some work together? Just book me :)

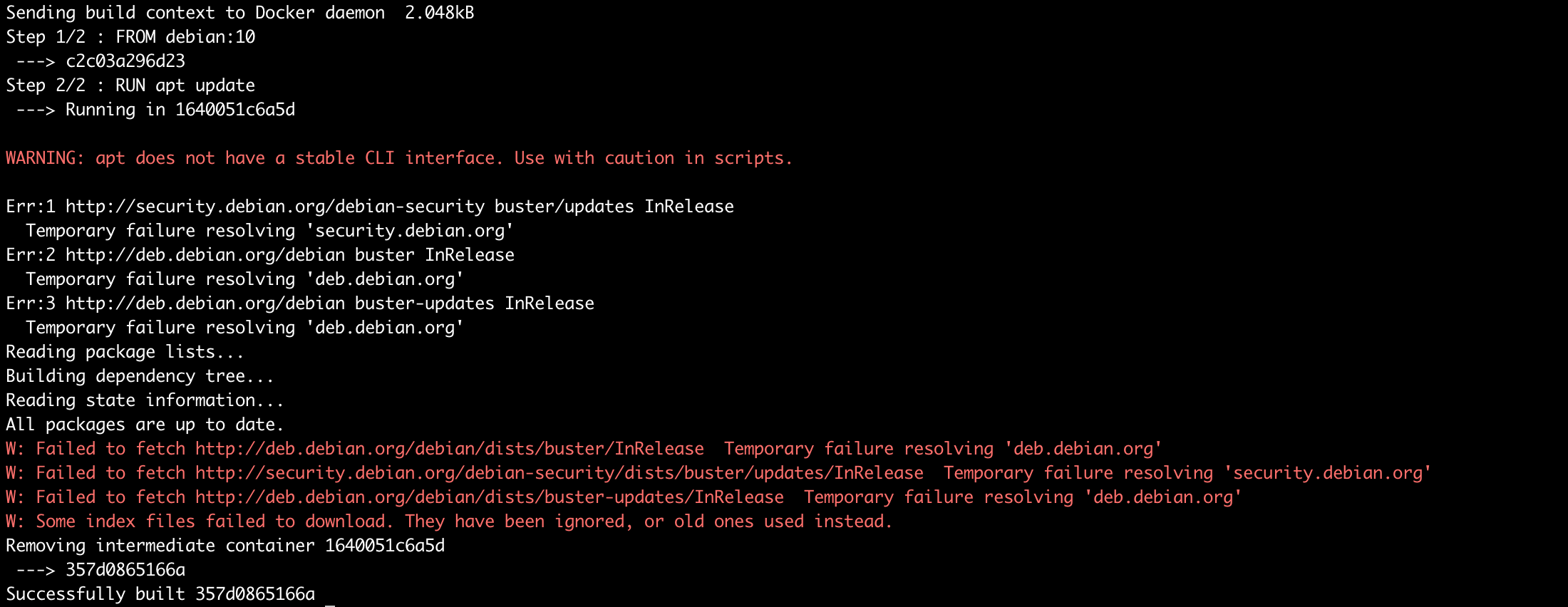

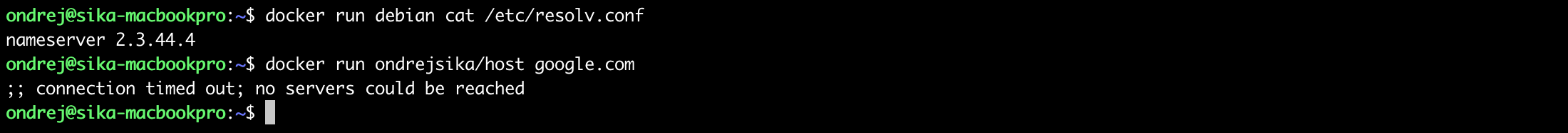

If you see something like that, it may be caused by DNS server trouble.

You can check your DNS server using:

docker run debian cat /etc/resolv.conf

Or check if it works:

docker run ondrejsika/host google.com

You can fix it by setting Google or Cloudflare DNS to /etc/docker/daemon.json:

{ "dns": ["1.1.1.1", "8.8.8.8"] }

No. But they are working on it...