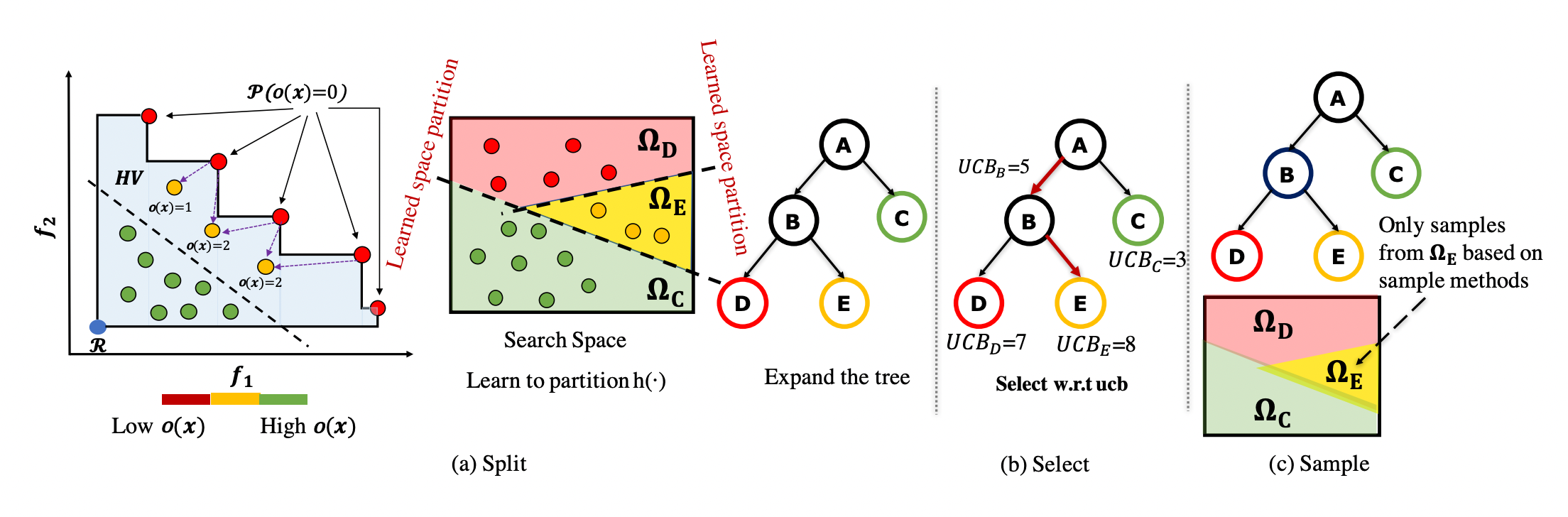

LaMOO is a novel multi-objective optimizer that learns a model from observed samples to partition the search space and then focus on promising regions that are likely to contain a subset of the Pareto frontier. So that existing solvers like Bayesian Optimizations (BO) can focus on promising subregions to mitigate the over-exploring issue.

python >= 3.8, PyTorch >= 1.8.1, gpytorch >= 1.5.1

scipy, Botorch, Numpy, cma

Extra Requirements for Molecule Discovery

networkx, RDKit >= 2019.03, Chemprop >= 1.2.0, scikit-learn==0.21.3

Please follow the installation instructions here(including the Chemprop installation https://chemprop.readthedocs.io/en/latest/installation.html, using version ==1.2.0). Then go to this folder with pip install -e . . If there is cuda unavailable on your side, you can delete all the .cuda() conversions in this directory.

Open the folder corresponding to the problem's name (including branincurrin, vehiclesafety, Nasbench201, molecule, molecule_obj3, and molecule_obj4).

python MCTS.py --data_id 0

You can change the data_id with different initializations to have different runs. All the data come from differnet trials random sampling in the search space.

You are able to run your own problems here by following several steps below.

- Please wrap your own function here and follow the defination and format of this class to customize your own function. Here is an example:

class MyProblem:

_max_hv = None ## Type the maximum hypervolume here if it is known

def __init__(self, dims:int, num_objectives:int, ref_point:torch.tensor, bounds:torch.tensor):

self.dim = dims # problem dimensions, int

self.num_objectives = num_objectives # number of objective, int

self.ref_point = ref_point # reference point, torch tensor

self.bounds = bounds # bound for dimensions, torch.tensor

#### Take two dimensions problem for example

# bounds = [(0.0, 0.99999)] * self.dim

# self.bounds = torch.tensor(bounds, dtype=torch.float).transpose(-1, -2)

def __call__(self, x):

res = MyProblem(x) # Here is to customize your function

return res

- We already pluged this class in our optimizer in MCTS.py and you are also free to initialize your function in MCTS.py line 561. Note that we only support to maximize f(x). If you would like to minimize f(x), please add a negative operator on your function.

python MCTS.py --cp "your value"

We usually set Cp = 0.1 * max of Hypervolume of the problem .

Cp controls the balance of exploration and exploitation. A larger Cp guides LaMOO to visit the sub-optimal regions more often. a "rule of thumb" is to set the Cp to be roughly 10% of the maximum hypervolume. When maximum hypervolume is unknown, Cp can be dynamically set to 10% of the hypervolume of current samples in each search iteration.

python MCTS.py --node_select "mcts"/"leaf"

Path: Traverse down the search tree to trace the current most promising search path and select the leaf node(Algorithm 1 in the paper).

Leaf: LaMOO directly select the leaf node with the highest UCB value(Algorithm 2 in the paper). This variation may reduce the optimization cost especially for the problem with large numbers of objectives.

python MCTS.py --kernel "linear"/"poly"/rbf

From our experiments, we find that the RBF kernel performs slightly better than others. Thanks to the non-linearity of the polynomial and RBF kernels, their region partitions perform better compared to a linear one.

--degree (svm degree)

--gamma (defination)

Leaf Size (Control the speed of tree growth, set here)

--sample_num (sample numsbers per iteration)

Please reference the following publication when using this package. OpenReview link.

@inproceedings{

zhao2022multiobjective,

title={Multi-objective Optimization by Learning Space Partition},

author={Yiyang Zhao and Linnan Wang and Kevin Yang and Tianjun Zhang and Tian Guo and Yuandong Tian},

booktitle={International Conference on Learning Representations},

year={2022},

url={https://openreview.net/forum?id=FlwzVjfMryn}

}