GeneidX provides a fast annotation of the protein-coding genes in a eukaryotic genome, taking as input the genome assembly and the taxonomic ID of the species to annotate.

Below you can find our preliminary results, a schema of our method, and a description of the minimal requirements and commands for running it.

Stay tuned for an article with detailed descriptions, and feel free to contact us if you try it and run into any problems.

The results of an initial benchmark on vertebrate genomes annotated in Ensembl show that our method is as accurate as the top ab initio gene predictors while being between 10 and 100 times faster.

Make sure that Nextflow and either Docker or Singularity are installed on your computer.
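A quick way to verify these prerequisites before launching the pipeline is sketched below. It assumes the executables are on your PATH under their usual names (`nextflow`, `docker`, `singularity`); the helper names `has_tool` and `check_prerequisites` are made up for this example and are not part of GeneidX.

```shell
# Succeed if the given command is found on PATH.
has_tool() {
    command -v "$1" >/dev/null 2>&1
}

# Succeed if Nextflow and at least one container engine are available.
# Executable names are assumptions; adjust them if your installation differs.
check_prerequisites() {
    has_tool nextflow || { echo "nextflow not found" >&2; return 1; }
    has_tool docker || has_tool singularity || {
        echo "neither docker nor singularity found" >&2
        return 1
    }
}
```

Running `check_prerequisites && echo "ready"` in the shell where you plan to start the pipeline should print `ready` only when both requirements are met.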

Required parameters to run GeneidX

The absolute path of the input TSV file.

The tsv file can contain multiple assemblies of different species, thus allowing the pipeline to annotate in parallel all the species contained in the tsv file. See data/assemblies.tsv for an example.

| ID | PATH | TAXID |
|---|---|---|
| GCA_951394045.1 | https://www.ebi.ac.uk/ena/browser/api/fasta/GCA_946965045.2?download=true&gzip=true | 2809013 |
| humanAssembly | /absolutePath/humanAssembly.fa.gz | 9606 |

IMPORTANT

- the provided paths must point to gzipped files (.gz extension is expected from the pipeline's processes)

- the path can also be a URL

The name of the column holding the row ID (ID in the example above). The values in this column must be unique.

The name of the column holding the path to the FASTA file (PATH in the example above).

The name of the column holding the taxid value (TAXID in the example above).
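The constraints on the input table (unique IDs, gzipped files) can be checked before starting a run. The following is a minimal sketch, not part of the pipeline: `check_tsv` is a hypothetical helper, and the rule that only local paths must end in `.gz` while URLs are left unchecked is an assumption made for this example.

```shell
# check_tsv FILE ID_COLUMN PATH_COLUMN
# Returns 0 when the table passes the checks, 1 otherwise (problems go to stderr).
check_tsv() {
    awk -F '\t' -v id_col="$2" -v path_col="$3" '
        NR == 1 {
            # Locate the requested columns by their header names.
            for (i = 1; i <= NF; i++) {
                if ($i == id_col)   id_idx = i
                if ($i == path_col) path_idx = i
            }
            if (!id_idx || !path_idx) {
                print "missing column " id_col " or " path_col > "/dev/stderr"
                bad = 1
                exit
            }
            next
        }
        $0 == "" { next }   # ignore empty lines
        {
            # Row identifiers must be unique.
            if (seen[$id_idx]++) {
                print "duplicate id: " $id_idx > "/dev/stderr"
                bad = 1
            }
            # Assumption: local paths must end in .gz; URLs are not checked.
            if ($path_idx !~ /^(https?|ftp):\/\// && $path_idx !~ /\.gz$/) {
                print "not gzipped: " $path_idx > "/dev/stderr"
                bad = 1
            }
        }
        END { exit bad + 0 }
    ' "$1"
}
```

For the example table above, this would be called as `check_tsv data/assemblies.tsv ID PATH`.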

Whether to mask the genomes or not -> boolean

Whether to store the generated geneid param file in the target species folder -> boolean

Path to the directory where the downloaded assemblies will be stored. In case of many assemblies -> string

Refers to the level of homology of the proteins taken from UniRef. Can take values 0.5, 0.9 or 1.0

Proteins are automatically retrieved from UniRef. See here for more information. The protein set is non-redundant at the chosen identity threshold: UniRef50 uses the lowest threshold (fewer proteins, and the proteins in the set differ substantially from one another), while UniRef100 uses the highest (more proteins, "more redundant").

Ensure that the set of proteins downloaded from UniRef has at least this many proteins.

Ensure that the set of proteins downloaded from UniRef has at most this many proteins.

The downloader module will keep iterating and adjusting the query parameters until the number of proteins is within this range.

Template for the basic parameters of the gene structures predicted by geneid.

This is the minimal score for a DIAMOND alignment to be used in the process of automatically training the coding potential estimation matrices.

This is the minimal length of an ORF derived from DIAMOND protein alignments to be used in the process of automatically training the coding potential estimation matrices.

To make sure that what we use as introns is truly non-coding sequence, this margin is left between the end of each predicted exon and the position where the sequence is assumed to be intronic.

These set the lower and upper boundaries of the size of introns that are being used for building the coding potential estimation matrices.
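To illustrate how the margin and the intron size boundaries interact, here is a rough sketch. It is not the pipeline's actual code. It assumes a tab-separated input with the columns protein, start and end for matches on a single sequence and strand, sorted by protein and then by start. It also assumes that the size limits are applied after trimming the margin on both sides. The function name and its arguments are hypothetical.

```shell
# intron_candidates MARGIN MIN_SIZE MAX_SIZE < matches.tsv
# Input (assumed layout): protein <TAB> start <TAB> end, sorted by protein, then start.
# Output: protein <TAB> intron_start <TAB> intron_end
intron_candidates() {
    awk -F '\t' -v OFS='\t' -v margin="$1" -v min="$2" -v max="$3" '
        $1 == prev_protein {
            # Shrink the gap between consecutive matches by the margin on both sides.
            s = prev_end + margin
            e = $2 - margin
            len = e - s + 1
            # Keep only candidates whose trimmed size is within the boundaries.
            if (len >= min && len <= max) print $1, s, e
        }
        { prev_protein = $1; prev_end = $3 + 0 }
    '
}
```

With a margin of 10 and boundaries of 20 and 10000, two matches of the same protein at 100-200 and 1000-1100 would yield one candidate intron spanning 210-990, while matches only a few bases apart would yield none.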

A boolean variable indicating whether the input proteins come from UniProt/UniRef. This information is used to produce a first "functional annotation" of the output GFF3: the predictions are intersected with the file of protein-to-genome matches so that, for each predicted gene, we provide a list of the UniRef proteins that are highly homologous to it.

    nextflow run guigolab/geneidx -profile <docker/singularity> \
        --tsv <PATH_TO_TSV> \
        --column_taxid_value <TAXID_COLUMN_NAME> \
        --column_path_value <PATH_COLUMN_NAME> \
        --column_id_value <ID_COLUMN_NAME> \
        --outdir <OUTPUT_directory>

Alternatively, clone the repository and run the pipeline from there (highly recommended):

    git clone https://github.com/guigolab/geneidx.git
    cd geneidx
    git checkout dev/v2-fixes
    nextflow run main.nf -profile <docker/singularity> \
        --tsv <PATH_TO_TSV> \
        --column_taxid_value <TAXID_COLUMN_NAME> \
        --column_path_value <PATH_COLUMN_NAME> \
        --column_id_value <ID_COLUMN_NAME> \
        --outdir <OUTPUT_directory>

See the DETAILS section below for the specifics of each parameter.

Which steps are taking place as part of this pipeline?

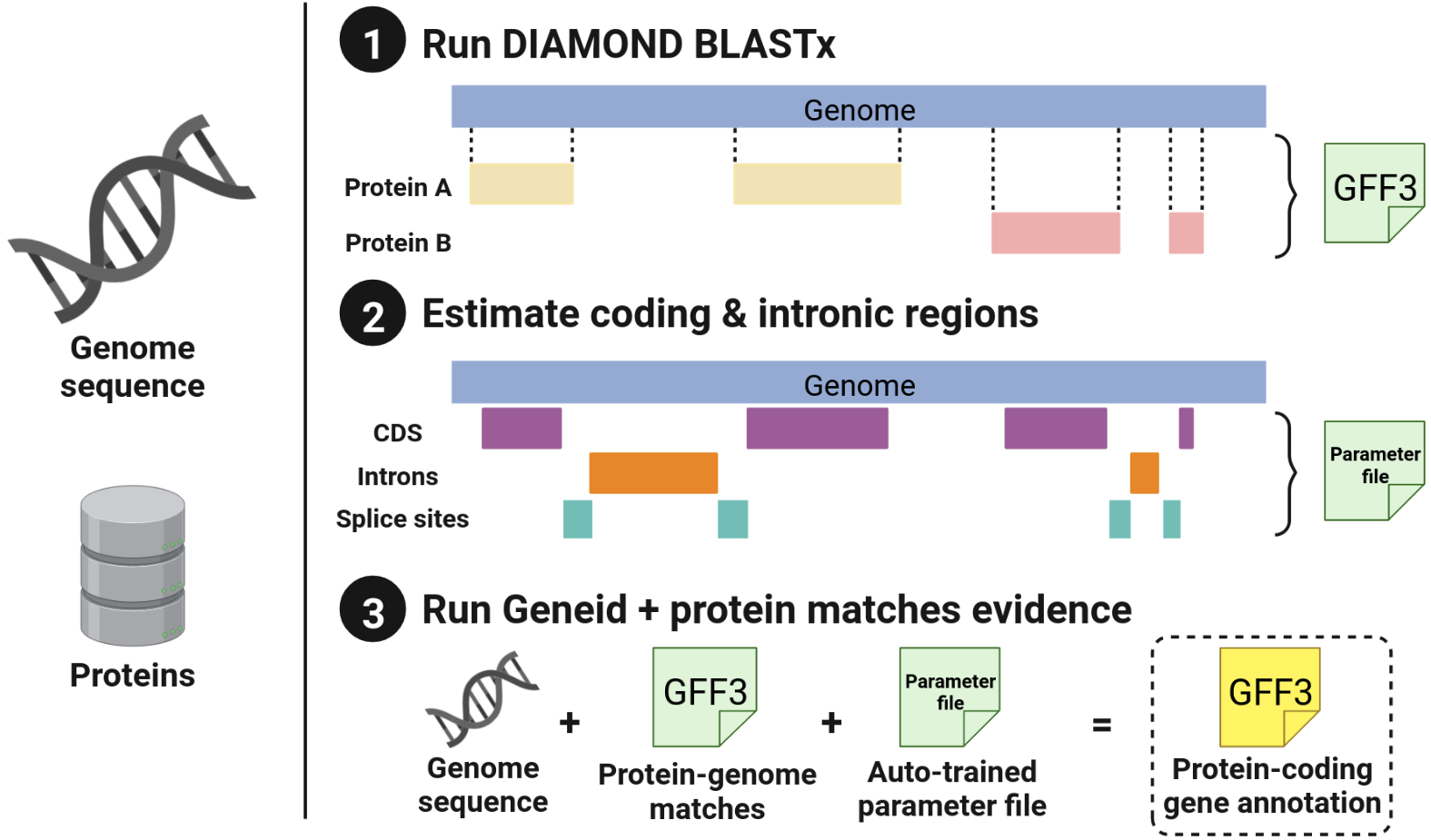

This is a graphical summary, and the specific steps are outlined below.

- Get the set of proteins to be used for the protein-to-genome alignments.
- Get the closest Geneid parameter file to use as the source of the parameters when it is not indicated by the user.
- Create the protein database for DIAMOND.
- Align the provided genome against this database using DIAMOND in BLASTx mode.
- Run the auto-training process:
    - Use the matches to estimate the coding sections, looking for open reading frames between stop codons.
    - Use matches from the same protein to predict the potential introns.
    - From the sequences obtained in the two previous steps, compute the initial and transition probability matrices required to compute the coding potential of the genome that will be annotated.
- Update the parameter file with the parameters indicated in the params.config file and with the matrices automatically generated from the protein-DNA matches.
- Run Geneid with the new parameter file and the protein-DNA matches from the previous steps as additional evidence. This is done in parallel for each independent sequence inside the genome FASTA file, and it consists of the following steps:
    - Pre-process the matches to join those that overlap and re-score them appropriately.
    - Run Geneid using the evidence and obtain the GFF3 file.
    - Remove the files from the internal steps.
- Concatenate all the outputs into a single GFF3 file that is sorted by coordinates.
- Add information from proteins matching the predicted genes.
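The pre-processing step that joins overlapping matches can be pictured with the sketch below. It is only an illustration under stated assumptions, not the code used by the pipeline. The input is assumed to be tab-separated with the columns sequence, start, end and score. The merged match keeps the highest score of its members, which stands in for the actual re-scoring rule (not described here). `merge_matches` is a hypothetical name.

```shell
# merge_matches < matches.tsv
# Input (assumed layout): sequence <TAB> start <TAB> end <TAB> score
# Output: the same columns, with overlapping matches on a sequence joined.
merge_matches() {
    sort -t "$(printf '\t')" -k1,1 -k2,2n |
    awk -F '\t' -v OFS='\t' '
        # Extend the current interval while the next match overlaps it.
        NR > 1 && $1 == seq && $2 + 0 <= last {
            if ($3 + 0 > last)  last = $3 + 0
            if ($4 + 0 > score) score = $4 + 0   # assumption: keep the best score
            next
        }
        # Otherwise emit the finished interval and start a new one.
        NR > 1 { print seq, start, last, score }
        { seq = $1; start = $2 + 0; last = $3 + 0; score = $4 + 0 }
        END { if (NR > 0) print seq, start, last, score }
    '
}
```

Because the input is sorted by sequence and start coordinate first, a single pass is enough: each match either extends the interval currently being built or closes it.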

- The names of the sequences in the FASTA file cannot contain unusual characters.
- The input genome file must be a gzip-compressed FASTA file (.fa.gz).
- Auto-train the parameter file always.
- It is recommended to clone the repository and then run the pipeline from there.
- The output of the predictions is stored in the path indicated when running the pipeline, with the following structure: {provided outdir}/species/{taxid of the species}.
- If you are running the pipeline multiple times, it is recommended that you define a directory for the Docker/Singularity images to avoid downloading them every time. See the singularity.cacheDir variable in nextflow.config.
- If you have used Geneid in the past and have manually trained a parameter file, we are open to receiving such files and sharing them in our repositories, giving credit to the users who generated them: to view the complete list of the available parameter files see

Contact us at ferriol.calvet@crg.eu.

Follow us on Twitter (@GuigoLab) for updates on the article describing our method, and contact us with any questions or suggestions.