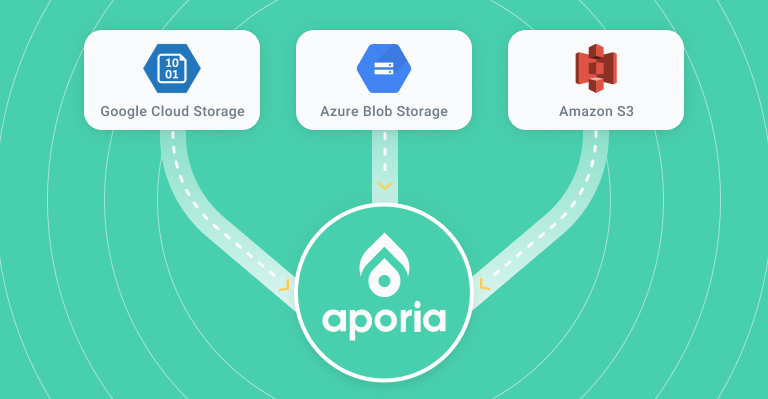

A small utility to import ML production data from your cloud storage provider and monitor it using Aporia's monitoring platform.

```bash
pip install "aporia-importer[all]"
```

To install only the dependencies for a specific cloud provider, use that provider's extra instead, e.g. for S3:

```bash
pip install "aporia-importer[s3]"
```

```bash
aporia-importer /path/to/config.yaml
```

aporia-importer requires the path to a configuration file as its argument; see the configuration details below.

aporia-importer uses a YAML configuration file.

There are sample configurations in the examples directory.

Currently, the model version schema must be defined manually in the configuration: the schema is a mapping of field names to field types (see here). You can find more details in our docs.
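To illustrate what the schema mappings look like, the Python sketch below builds a configuration using the field names from the configuration table and writes it to disk. Every value (bucket, token placeholder, model ID, feature and prediction names) is hypothetical, the field type names `numeric`, `categorical` and `boolean` are assumptions (check the docs for the types Aporia actually accepts), and treating `model_version.name`/`model_version.type` as a nested mapping is an assumption based on the dotted names. It writes JSON because YAML parsers generally accept JSON documents, which avoids a third-party YAML dependency; the sample configurations in the examples directory remain the reference for the exact layout.

```python
import json
from pathlib import Path

# Hypothetical example configuration; keys follow the configuration table.
config = {
    "source": "s3://my-bucket/my_file.csv",
    "format": "csv",
    "token": "<YOUR_APORIA_TOKEN>",
    "environment": "production",
    "model_id": "my-model",
    # Assumption: the dotted names model_version.name / .type denote nesting.
    "model_version": {"name": "v1", "type": "binary"},
    # Schema mappings: field name -> field type (type names are assumptions).
    "features": {"age": "numeric", "country": "categorical"},
    "predictions": {"will_churn": "boolean"},
    # "raw_inputs" is optional and omitted here.
}


def write_config(cfg: dict, path: Path) -> None:
    """Write cfg as indented JSON, which YAML parsers generally accept."""
    path.write_text(json.dumps(cfg, indent=2))


if __name__ == "__main__":
    write_config(config, Path("config.yaml"))
```

The resulting file can then be passed to the importer as `aporia-importer config.yaml`.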

The following table describes all of the configuration fields in detail:

| Field | Required | Description |
|---|---|---|
| source | True | The path to the files you wish to upload, e.g. `s3://my-bucket/my_file.csv`. Glob patterns are supported. |
| format | True | The format of the files you wish to upload (`csv` or `parquet`) |
| token | True | Your Aporia authentication token |
| environment | True | The environment in which Aporia will be initialized (e.g. production, staging) |
| model_id | True | The ID of the model that the data is associated with |
| model_version.name | True | A name for the model version to create |
| model_version.type | True | The type of the model (regression, binary, multiclass) |
| predictions | True | A mapping of prediction fields to their field types |
| features | True | A mapping of feature fields to their field types |
| raw_inputs | False | A mapping of raw input fields to their field types |
| aporia_host | False | Aporia server URL. Defaults to `app.aporia.com` |
| aporia_port | False | Aporia server port. Defaults to `443` |
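Before running the importer, a configuration can be checked against the table above. The following is a minimal, hypothetical pre-flight check, not part of aporia-importer: it reports missing required fields, validates `model_version.type` against the three documented model types, checks that the schema sections are mappings, and applies the documented `aporia_host` and `aporia_port` defaults. It assumes the YAML file has already been parsed into a Python dict and that `model_version` is a nested mapping; the function names are illustrative only.

```python
from typing import Any

# Required top-level fields, taken from the configuration table.
REQUIRED_FIELDS = ("source", "format", "token", "environment", "model_id",
                   "predictions", "features")
MODEL_TYPES = {"regression", "binary", "multiclass"}


def find_config_errors(cfg: dict[str, Any]) -> list[str]:
    """Return human-readable problems found in cfg (an empty list if none)."""
    errors = [f"missing required field: {name}"
              for name in REQUIRED_FIELDS if name not in cfg]

    # Assumption: model_version.name and model_version.type live under model_version.
    version = cfg.get("model_version")
    if not isinstance(version, dict):
        errors.append("missing required field: model_version")
    else:
        if "name" not in version:
            errors.append("missing required field: model_version.name")
        if version.get("type") not in MODEL_TYPES:
            errors.append("model_version.type must be one of "
                          + ", ".join(sorted(MODEL_TYPES)))

    # Schema sections map field names to field types.
    for section in ("predictions", "features", "raw_inputs"):
        value = cfg.get(section)
        if value is not None and not isinstance(value, dict):
            errors.append(f"{section} must be a mapping of field names to types")
    return errors


def connection_settings(cfg: dict[str, Any]) -> tuple[str, int]:
    """Return (host, port), falling back to the documented defaults."""
    return cfg.get("aporia_host", "app.aporia.com"), int(cfg.get("aporia_port", 443))
```

An empty list from `find_config_errors` only means the fields documented here are present; it does not guarantee that Aporia will accept the schema.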

Supported data sources:

- Local files
- S3

Supported file formats:

- csv
- parquet
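A similar hypothetical check can reject a `source` or `format` outside the supported lists above. This sketch classifies `source` by its URL scheme (`s3://` for S3, no scheme for a local path, with a one-letter scheme treated as a Windows drive letter). How aporia-importer itself dispatches sources is not documented here, so this scheme-based rule is an assumption, and both function names are illustrative.

```python
from urllib.parse import urlparse

# Taken from the supported formats list.
SUPPORTED_FORMATS = ("csv", "parquet")


def source_kind(source: str) -> str:
    """Return "s3" or "local" for a `source` value; raise ValueError otherwise."""
    scheme = urlparse(source).scheme  # urlparse lowercases the scheme
    if scheme == "s3":
        return "s3"
    # No scheme means a plain path; a one-letter scheme is a Windows drive (C:\...).
    if scheme == "" or len(scheme) == 1:
        return "local"
    raise ValueError(f"unsupported source {source!r}; expected a local path or s3:// URL")


def check_format(fmt: str) -> str:
    """Return fmt unchanged if it is a supported format; raise ValueError otherwise."""
    if fmt not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported format {fmt!r}; expected one of {SUPPORTED_FORMATS}")
    return fmt
```

Glob patterns pass through unchanged, since only the scheme is inspected: `source_kind("s3://my-bucket/*.csv")` returns `"s3"`.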

aporia-importer uses dask to load data from local files and cloud storage providers, and the Aporia SDK to report the data to Aporia.
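The load-then-report flow can be sketched without either dependency. This is not how aporia-importer is implemented: it swaps dask for the standard `glob` and `csv` modules (so it handles local CSV files only, where dask would split the data into partitions) and replaces the Aporia SDK with a hypothetical `report_batch` callback. It only shows the shape of the pipeline: expand the glob, read rows in bounded batches, and hand each batch to a reporter.

```python
import csv
import glob
from typing import Callable, Iterator

Row = dict[str, str]


def iter_batches(pattern: str, batch_size: int = 1000) -> Iterator[list[Row]]:
    """Yield rows from every local CSV file matching pattern, at most batch_size per batch."""
    batch: list[Row] = []
    for path in sorted(glob.glob(pattern)):
        with open(path, newline="") as f:
            for row in csv.DictReader(f):
                batch.append(row)
                if len(batch) == batch_size:
                    yield batch
                    batch = []
    if batch:  # flush the final, possibly smaller, batch
        yield batch


def run_import(pattern: str, report_batch: Callable[[list[Row]], None],
               batch_size: int = 1000) -> int:
    """Load batches and pass each to report_batch; return the number of rows reported."""
    total = 0
    for batch in iter_batches(pattern, batch_size):
        report_batch(batch)  # hypothetical stand-in for the Aporia SDK reporting call
        total += len(batch)
    return total
```

Batching keeps memory bounded regardless of file size, which is the same reason a partitioned loader such as dask suits large production datasets.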