This project accompanies the research paper,

SlowFast-LLaVA: A Strong Training-Free Baseline for Video Large Language Models

Mingze Xu*, Mingfei Gao*, Zhe Gan, Hong-You Chen, Zhengfeng Lai, Haiming Gang, Kai Kang, Afshin Dehghan

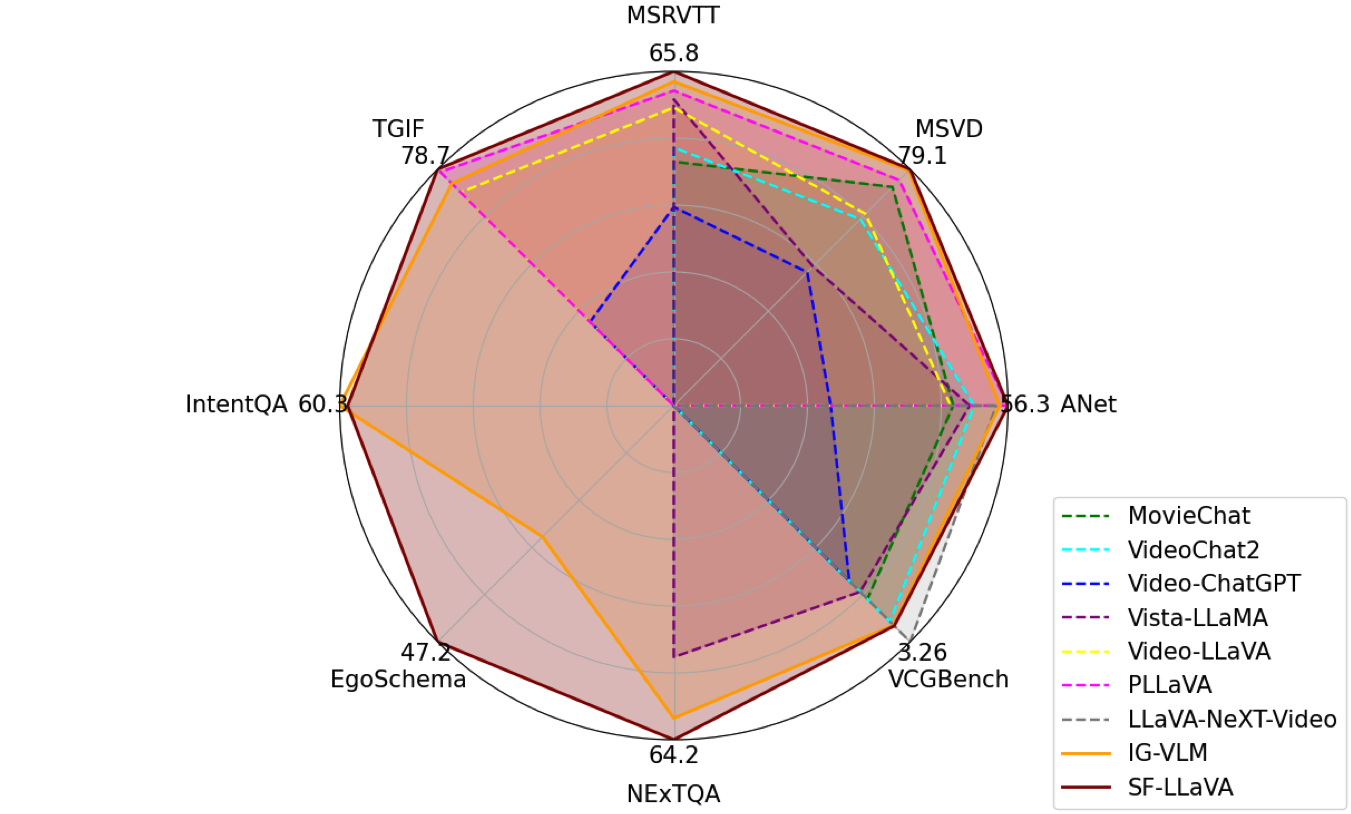

SlowFast-LLaVA is a training-free multimodal large language model (LLM) for video understanding and reasoning. Without requiring fine-tuning on any data, it achieves performance comparable to, or even better than, state-of-the-art Video LLMs on a wide range of VideoQA tasks and benchmarks, as shown in the figure.


The code was developed with CUDA 11.7, Python >= 3.10.12, and PyTorch >= 2.1.0.


[Optional but recommended] Create a new conda environment:

`conda create -n sf_llava python=3.10.12`

Then activate it:

`conda activate sf_llava`

Install the requirements:

`bash setup_env.sh`
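Once the environment is set up, a quick check of the versions listed above can save a failed run later. The sketch below is a minimal, hypothetical helper (`parse_version` and `meets_minimum` are our names, not part of this repo). It compares only the leading numeric part of each version string, so suffixes such as `+cu117` are ignored, and it reports the CUDA version PyTorch was built against, which is not necessarily the system CUDA.

```python
from __future__ import annotations

import re
import sys


def parse_version(text: str) -> tuple[int, ...]:
    """Extract the leading numeric part, e.g. '2.1.0+cu117' -> (2, 1, 0)."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"cannot parse version string: {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(installed: str, minimum: str) -> bool:
    """Return True if `installed` >= `minimum`, padding missing parts with zeros."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    py_version = ".".join(str(part) for part in sys.version_info[:3])
    print("Python", py_version, "ok" if meets_minimum(py_version, "3.10.12") else "too old")
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this environment")
    else:
        ok = meets_minimum(torch.__version__, "2.1.0")
        print("PyTorch", torch.__version__, "ok" if ok else "too old")
        # CUDA version PyTorch was built with (None for CPU-only builds)
        print("PyTorch CUDA build:", torch.version.cuda)
```

Run it inside the activated `sf_llava` environment so it inspects the interpreter and PyTorch install you will actually use.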

Add your OpenAI API key and, optionally, your organization to the system environment; they are needed to use GPT-3.5-turbo for model evaluation.

`export OPENAI_API_KEY=$YOUR_OPENAI_API_KEY`

`export OPENAI_ORG=$YOUR_OPENAI_ORG  # optional`
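A minimal sketch for failing fast when the key is missing, assuming the evaluation code reads exactly these two variable names (we have not checked how it consumes them). `read_openai_settings` is a hypothetical helper, and the keys of the returned dictionary are only illustrative.

```python
from __future__ import annotations

import os
from collections.abc import Mapping


def read_openai_settings(environ: Mapping[str, str] = os.environ) -> dict[str, str]:
    """Collect the OpenAI settings exported above; OPENAI_ORG is optional."""
    api_key = environ.get("OPENAI_API_KEY")
    if not api_key:
        raise RuntimeError("OPENAI_API_KEY is not set; GPT-3.5-turbo evaluation cannot run")
    settings = {"api_key": api_key}
    organization = environ.get("OPENAI_ORG")
    if organization:
        settings["organization"] = organization
    return settings


if __name__ == "__main__":
    try:
        settings = read_openai_settings()
        # Print only the key names, never the secret itself
        print("OpenAI settings found:", sorted(settings))
    except RuntimeError as err:
        print(err)
```

Passing a plain dictionary instead of `os.environ` makes the helper easy to test without touching real credentials.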

Download the pre-trained LLaVA-NeXT weights from HuggingFace and put them under the `ml-slowfast-llava` folder:

`git lfs clone https://huggingface.co/liuhaotian/llava-v1.6-vicuna-7b liuhaotian/llava-v1.6-vicuna-7b`

`git lfs clone https://huggingface.co/liuhaotian/llava-v1.6-34b liuhaotian/llava-v1.6-34b`
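To confirm both clones landed where expected, here is a hypothetical check (`missing_weights` and `LLAVA_NEXT_REPOS` are our own names). It assumes the folder names from the `git lfs clone` commands above, relative to `ml-slowfast-llava`, and only tests that each folder exists and is non-empty; it cannot tell whether Git LFS replaced the pointer files with the real weights.

```python
from __future__ import annotations

from pathlib import Path

# Target folders from the git lfs clone commands above, relative to ml-slowfast-llava
LLAVA_NEXT_REPOS = [
    "liuhaotian/llava-v1.6-vicuna-7b",
    "liuhaotian/llava-v1.6-34b",
]


def missing_weights(project_root: str | Path, repo_ids: list[str] = LLAVA_NEXT_REPOS) -> list[str]:
    """Return the repo IDs whose local folder is absent or empty under project_root."""
    root = Path(project_root)
    missing = []
    for repo_id in repo_ids:
        local_dir = root / repo_id
        if not local_dir.is_dir() or not any(local_dir.iterdir()):
            missing.append(repo_id)
    return missing


if __name__ == "__main__":
    missing = missing_weights(".")  # run from inside ml-slowfast-llava
    print("all weights present" if not missing else f"missing: {missing}")
```

If you only plan to run the 7B model, pass `repo_ids=["liuhaotian/llava-v1.6-vicuna-7b"]` so the absent 34B folder is not reported.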


We prepare the ground-truth question and answer files based on IG-VLM and put them under `playground/gt_qa_files`.

- MSVD-QA

  - Download `MSVD_QA.csv` from here.
  - Reformat the files by running `python scripts/data/prepare_msvd_qa_file.py --qa_file $PATH_TO_CSV_FILE`.

- MSRVTT-QA

  - Download `MSRVTT_QA.csv` from here.
  - Reformat the files by running `python scripts/data/prepare_msrvtt_qa_file.py --qa_file $PATH_TO_CSV_FILE`.

- TGIF-QA

  - Download `TGIF_FrameQA.csv` from here.
  - Reformat the files by running `python scripts/data/prepare_tgif_qa_file.py --qa_file $PATH_TO_CSV_FILE`.

- ActivityNet-QA

  - Download `Activitynet_QA.csv` from here.
  - Reformat the files by running `python scripts/data/prepare_activitynet_qa_file.py --qa_file $PATH_TO_CSV_FILE`.

- NExT-QA

  - Download `NExT_QA.csv` from here.
  - Reformat the files by running `python scripts/data/prepare_nextqa_qa_file.py --qa_file $PATH_TO_CSV_FILE`.

- EgoSchema

  - Download `EgoSchema.csv` from here.
  - Reformat the files by running `python scripts/data/prepare_egoschema_qa_file.py --qa_file $PATH_TO_CSV_FILE`.

- IntentQA

  - Download `IntentQA.csv` from here.
  - Reformat the files by running `python scripts/data/prepare_intentqa_qa_file.py --qa_file $PATH_TO_CSV_FILE`.

- VCGBench

  - Download all files under `text_generation_benchmark`.
  - Reformat the files by running `python scripts/data/prepare_vcgbench_qa_file.py --qa_folder $TEXT_GENERATION_BENCHMARK`.

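The reformatting steps above follow one pattern per dataset, so they can be scripted. This is a sketch assuming each script is run from the `ml-slowfast-llava` folder; the script names and flags are copied from the list, while `PREPARE_SCRIPTS`, `prepare_command`, and the download paths are hypothetical.

```python
from __future__ import annotations

import shlex

# Dataset -> (reformatting script, CLI flag), as listed in the steps above
PREPARE_SCRIPTS = {
    "MSVD-QA": ("prepare_msvd_qa_file.py", "--qa_file"),
    "MSRVTT-QA": ("prepare_msrvtt_qa_file.py", "--qa_file"),
    "TGIF-QA": ("prepare_tgif_qa_file.py", "--qa_file"),
    "ActivityNet-QA": ("prepare_activitynet_qa_file.py", "--qa_file"),
    "NExT-QA": ("prepare_nextqa_qa_file.py", "--qa_file"),
    "EgoSchema": ("prepare_egoschema_qa_file.py", "--qa_file"),
    "IntentQA": ("prepare_intentqa_qa_file.py", "--qa_file"),
    "VCGBench": ("prepare_vcgbench_qa_file.py", "--qa_folder"),
}


def prepare_command(dataset: str, source_path: str) -> list[str]:
    """Build the argv that reformats one dataset's downloaded QA file or folder."""
    script, flag = PREPARE_SCRIPTS[dataset]
    return ["python", f"scripts/data/{script}", flag, source_path]


if __name__ == "__main__":
    # Hypothetical download locations; replace them with your own paths
    downloads = {
        "MSVD-QA": "downloads/MSVD_QA.csv",
        "VCGBench": "downloads/text_generation_benchmark",
    }
    for name, path in downloads.items():
        print(shlex.join(prepare_command(name, path)))
```

The commands are printed rather than executed so they can be reviewed first; passing a returned list to `subprocess.run(cmd, check=True)` would run it.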


Download the raw videos from the official websites.

- Openset VideoQA

  - [Recommended] Option 1: Follow the instructions in Video-LLaVA to download the raw videos.
  - Option 2: Download the videos from the data owners.

- Multiple Choice VideoQA

- Text Generation

  - The videos are based on ActivityNet, so you can reuse the ones from Openset VideoQA.


Organize the raw videos under `playground/data`.


To directly use our data loaders without changing paths, please organize your datasets as follows:

- `ml-slowfast-llava/playground/data`
  - `video_qa`
    - `MSVD_Zero_Shot_QA`
      - `videos`
        - ...
    - `MSRVTT_Zero_Shot_QA`
      - `videos`
        - `all`
          - ...
    - `TGIF_Zero_Shot_QA`
      - `mp4`
        - ...
    - `Activitynet_Zero_Shot_QA`
      - `all_test`
        - ...
  - `multiple_choice_qa`
    - `NExTQA`
      - `video`
        - ...
    - `EgoSchema`
      - `video`
        - ...
    - `IntentQA`
      - `video`
        - ...
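A hypothetical layout check written against the directory tree above: `EXPECTED_VIDEO_DIRS` transcribes the folders that hold the videos (our reading of the tree's nesting), and `check_layout` counts the entries in each or marks it missing with `-1`. It is a convenience sketch, not part of the repo's data loaders, and it does not validate individual video files.

```python
from __future__ import annotations

from pathlib import Path

# Video folders under ml-slowfast-llava/playground/data, transcribed from the tree above
EXPECTED_VIDEO_DIRS = [
    "video_qa/MSVD_Zero_Shot_QA/videos",
    "video_qa/MSRVTT_Zero_Shot_QA/videos/all",
    "video_qa/TGIF_Zero_Shot_QA/mp4",
    "video_qa/Activitynet_Zero_Shot_QA/all_test",
    "multiple_choice_qa/NExTQA/video",
    "multiple_choice_qa/EgoSchema/video",
    "multiple_choice_qa/IntentQA/video",
]


def check_layout(data_root: str | Path) -> dict[str, int]:
    """Map each expected folder to its number of entries, or -1 if it is missing."""
    root = Path(data_root)
    report = {}
    for rel in EXPECTED_VIDEO_DIRS:
        folder = root / rel
        report[rel] = sum(1 for _ in folder.iterdir()) if folder.is_dir() else -1
    return report


if __name__ == "__main__":
    for rel, count in check_layout("playground/data").items():
        print(f"{rel}: {'MISSING' if count < 0 else f'{count} entries'}")
```

Only the datasets you intend to evaluate need to be present, so a `MISSING` line for an unused benchmark is harmless.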


We use YAML config files to control the design choices of SlowFast-LLaVA. Taking the config of SlowFast-LLaVA-7B as an example, the most important parameters are:

- `SCRIPT`: the tasks that you want to run.
- `DATA_DIR` and `CONV_MODE`: the data directories and prompts for the different tasks. Each can be either a string or a list of strings, but must match `SCRIPT`.
- `NUM_FRAMES`: the total number of sampled video frames.
- `TEMPORAL_AGGREGATION`: the setting of the Slow and Fast pathways. It should be a string with the pattern `slowfast-slow_{$S_FRMS}frms_{$S_POOL}-fast_{$F_OH}x{$F_OW}`, where `$S_FRMS` is an integer giving the number of frames in the Slow pathway, `$S_POOL` is a string naming the pooling operation for the Slow pathway, and `$F_OH` and `$F_OW` are integers giving the height and width of the output tokens in the Fast pathway.
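To catch config typos before launching a long run, the `TEMPORAL_AGGREGATION` pattern can be parsed with a regular expression. This is a hedged sketch: `parse_temporal_aggregation`, `SlowFastSetting`, and `check_task_fields` are hypothetical names, and `check_task_fields` reads "must match `SCRIPT`" as "has the same number of entries", which is our assumption. The `config` dict could come from, for example, `yaml.safe_load` on the config file.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# slowfast-slow_{$S_FRMS}frms_{$S_POOL}-fast_{$F_OH}x{$F_OW}
_PATTERN = re.compile(r"^slowfast-slow_(\d+)frms_(.+)-fast_(\d+)x(\d+)$")


@dataclass(frozen=True)
class SlowFastSetting:
    slow_frames: int  # $S_FRMS: number of frames in the Slow pathway
    slow_pool: str    # $S_POOL: pooling operation for the Slow pathway
    fast_out_h: int   # $F_OH: output token height in the Fast pathway
    fast_out_w: int   # $F_OW: output token width in the Fast pathway


def parse_temporal_aggregation(value: str) -> SlowFastSetting:
    """Split a TEMPORAL_AGGREGATION string into its four fields."""
    match = _PATTERN.match(value)
    if match is None:
        raise ValueError(f"TEMPORAL_AGGREGATION does not follow the pattern: {value!r}")
    s_frms, s_pool, f_oh, f_ow = match.groups()
    return SlowFastSetting(int(s_frms), s_pool, int(f_oh), int(f_ow))


def _count(value: str | list[str]) -> int:
    """A single string counts as one entry; a list counts its items."""
    return 1 if isinstance(value, str) else len(value)


def check_task_fields(config: dict) -> None:
    """Raise if DATA_DIR or CONV_MODE does not have one entry per SCRIPT entry."""
    n_scripts = _count(config["SCRIPT"])
    for key in ("DATA_DIR", "CONV_MODE"):
        n = _count(config[key])
        if n != n_scripts:
            raise ValueError(f"{key} has {n} entries but SCRIPT has {n_scripts}")
```

For a made-up value such as `slowfast-slow_10frms_spatial_1d_max_pool-fast_4x4`, the parser yields 10 Slow frames, the pooling string `spatial_1d_max_pool`, and a 4x4 Fast output grid. The `$S_POOL` group is matched greedily up to the last `-fast_`, so pooling names containing underscores still parse.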

Since SlowFast-LLaVA is training-free, inference and evaluation can be run directly without any model training.

By default, we use 8 GPUs for model inference. You can modify `CUDA_VISIBLE_DEVICES` in the config file to match your own setup. Please note that inference with SlowFast-LLaVA-34B requires GPUs with at least 80GB of memory.

`cd ml-slowfast-llava`

`python run_inference.py --exp_config $PATH_TO_CONFIG_FILE`

- Optionally, run `export PYTHONWARNINGS="ignore"` if you want to suppress warnings.
- The inference outputs are stored under `outputs/artifacts`.
- The intermediate outputs of GPT-3.5-turbo are stored under `outputs/eval_save_dir`.
- The evaluation results are stored under `outputs/logs`.
- All of these paths can be changed in the config file.
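The inference command and the optional warning suppression can be wrapped in a small launcher. This is a minimal sketch, not part of the repo: `inference_call` and `DEFAULT_OUTPUT_DIRS` are hypothetical names, the config path is a placeholder, and it uses `sys.executable` instead of a bare `python` so the interpreter of the active environment (for example `sf_llava`) is the one launched.

```python
from __future__ import annotations

import os
import shlex
import sys

# Default output locations listed above; all of them can be changed in the config file
DEFAULT_OUTPUT_DIRS = {
    "inference outputs": "outputs/artifacts",
    "GPT-3.5-turbo intermediate outputs": "outputs/eval_save_dir",
    "evaluation results": "outputs/logs",
}


def inference_call(config_path: str, quiet: bool = True) -> tuple[list[str], dict[str, str]]:
    """Return (argv, env) for run_inference.py, optionally silencing Python warnings."""
    argv = [sys.executable, "run_inference.py", "--exp_config", config_path]
    env = dict(os.environ)  # copy, so the caller's own environment is left untouched
    if quiet:
        env["PYTHONWARNINGS"] = "ignore"
    return argv, env


if __name__ == "__main__":
    argv, env = inference_call("path/to/config.yaml")  # hypothetical config path
    print("PYTHONWARNINGS=" + env.get("PYTHONWARNINGS", ""), shlex.join(argv))
    for what, folder in DEFAULT_OUTPUT_DIRS.items():
        print(f"{what}: {folder}")
```

It prints the command instead of running it; from inside `ml-slowfast-llava`, `subprocess.run(argv, env=env, check=True)` would start inference.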

We provide a script for running video question-answering on a single video.

`cd ml-slowfast-llava`

`python run_demo.py --video_path $PATH_TO_VIDEO --model_path $PATH_TO_LLAVA_MODEL --question "Describe this video in detail"`
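To run the demo over several clips, one option is to loop over a folder and call the documented command once per video. This sketch only wraps the flags shown above; `demo_commands`, the `my_videos` folder, and the set of accepted file extensions are assumptions. Each call reloads the model, so a single-process loop would be faster, but it would depend on the script's internals, which are not documented here.

```python
from __future__ import annotations

import shlex
from pathlib import Path

# Assumption: container formats worth trying; run_demo.py's supported formats are not documented here
VIDEO_SUFFIXES = {".mp4", ".mkv", ".avi", ".webm"}


def demo_commands(video_dir: str | Path, model_path: str, question: str) -> list[list[str]]:
    """Build one run_demo.py invocation per video file in video_dir, in sorted order."""
    videos = sorted(
        p for p in Path(video_dir).iterdir()
        if p.is_file() and p.suffix.lower() in VIDEO_SUFFIXES
    )
    return [
        ["python", "run_demo.py",
         "--video_path", str(video),
         "--model_path", model_path,
         "--question", question]
        for video in videos
    ]


if __name__ == "__main__":
    # Hypothetical folder of clips; the model path matches the 7B clone from earlier
    if Path("my_videos").is_dir():
        for argv in demo_commands("my_videos", "liuhaotian/llava-v1.6-vicuna-7b",
                                  "Describe this video in detail"):
            print(shlex.join(argv))
```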

This project is licensed under the Apple Sample Code License.

If you are using the data/code/model provided here in a publication, please cite our paper:

@article{xu2024slowfast,

title={SlowFast-LLaVA: A Strong Training-Free Baseline for Video Large Language Models},

author={Xu, Mingze and Gao, Mingfei and Gan, Zhe and Chen, Hong-You and Lai, Zhengfeng and Gang, Haiming and Kang, Kai and Dehghan, Afshin},

journal={arXiv:2407.15841},

year={2024}

}