melodybrush is a web application that transforms song lyrics into captivating artwork. The aim of this project was to:

- make music more accessible to people with hearing loss
- generate song covers
- display stories and create narratives
- create social media posts

Due to our love for music, we decided to create a more immersive experience for listeners through cloud computing and generative AI.

melodybrush is composed of three major components:

- Jurassic-2 Ultra (Amazon Bedrock Edition) by AI21 Labs

- Stable Diffusion XL 1.0 by Stability AI

- Web Application

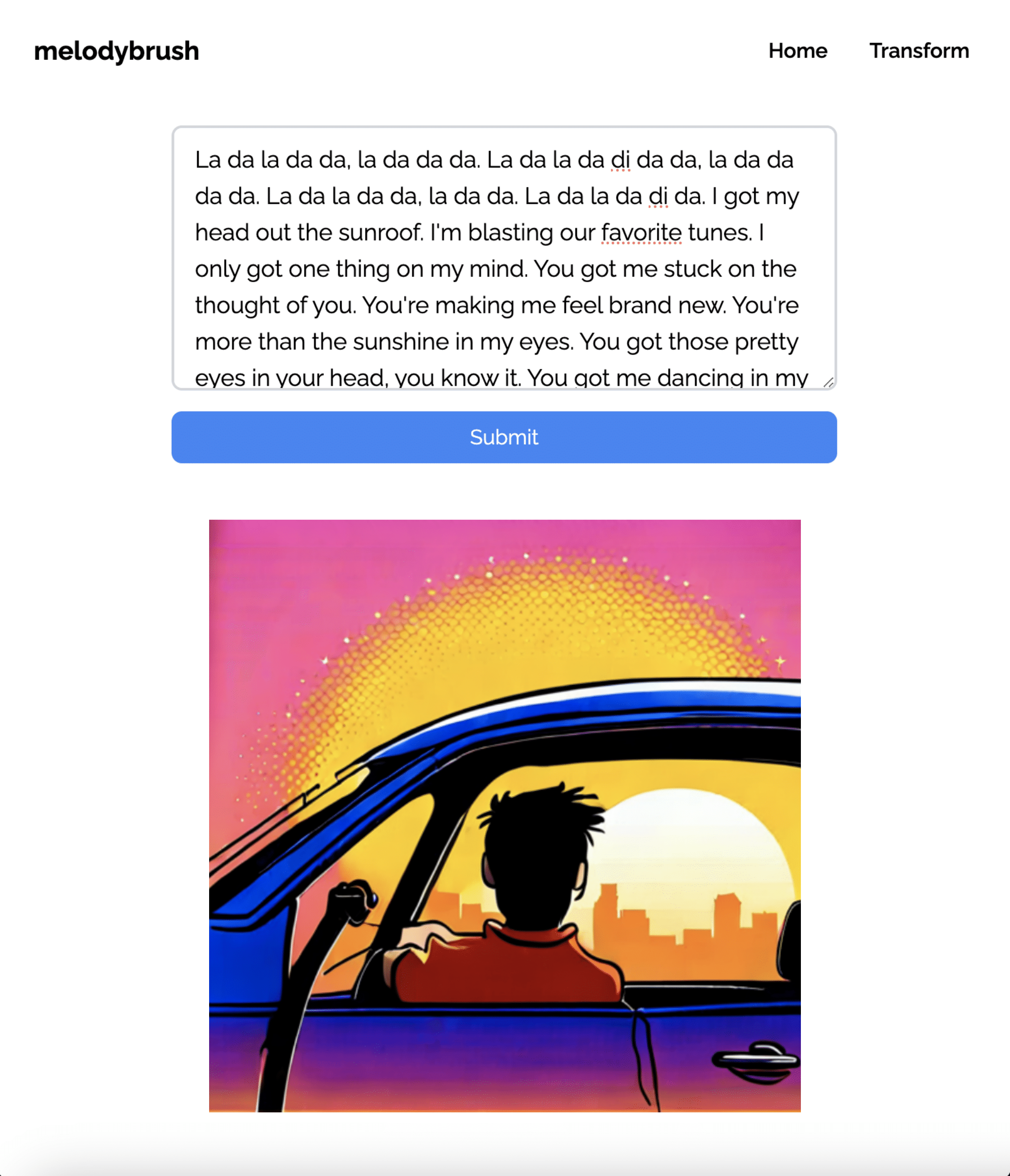

We used Jurassic-2 Ultra, AI21 Labs' most powerful large language model, to transform lyrics into a short, descriptive prompt that can be easily drawn. We tuned hyperparameters and engineered the prompt by experimenting in Amazon Bedrock's text playground and in a Jupyter Notebook.
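The lyrics-to-prompt step can be sketched in Python against Bedrock's runtime API. This is a minimal sketch, not the project's actual code: the model ID `ai21.j2-ultra-v1`, the prompt template, and the hyperparameter defaults are illustrative assumptions, and `build_j2_request`, `parse_j2_response`, and `lyrics_to_prompt` are hypothetical helper names. The Bedrock client is passed in so the request and response handling can be exercised without AWS credentials.

```python
import json

# Assumed Bedrock model ID for Jurassic-2 Ultra; confirm it in the Bedrock console for your region.
J2_ULTRA_MODEL_ID = "ai21.j2-ultra-v1"

# Hypothetical prompt template; the project's engineered prompt is not published here.
PROMPT_TEMPLATE = (
    "Summarize the following song lyrics as a short, vivid description of a "
    "single scene that an artist could paint. Mention key objects, colors, "
    "and the overall mood.\n\nLyrics:\n{lyrics}\n\nScene:"
)


def build_j2_request(lyrics: str, max_tokens: int = 100,
                     temperature: float = 0.7, top_p: float = 1.0) -> str:
    """Return the JSON request body for a Jurassic-2 completion on Bedrock."""
    body = {
        "prompt": PROMPT_TEMPLATE.format(lyrics=lyrics.strip()),
        "maxTokens": max_tokens,      # illustrative default, not the tuned value
        "temperature": temperature,   # illustrative default, not the tuned value
        "topP": top_p,
    }
    return json.dumps(body)


def parse_j2_response(raw: bytes) -> str:
    """Extract the generated text of the first completion from a Jurassic-2 response."""
    payload = json.loads(raw)
    return payload["completions"][0]["data"]["text"].strip()


def lyrics_to_prompt(client, lyrics: str) -> str:
    """Send lyrics to Jurassic-2 Ultra through a bedrock-runtime client and return the image prompt."""
    response = client.invoke_model(
        modelId=J2_ULTRA_MODEL_ID,
        body=build_j2_request(lyrics),
        contentType="application/json",
        accept="application/json",
    )
    # response["body"] is a streaming object; read() returns the raw JSON bytes.
    return parse_j2_response(response["body"].read())
```

In a notebook, the client would typically come from `boto3.client("bedrock-runtime")`, with model access for Jurassic-2 Ultra enabled on the AWS account.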

Stable Diffusion XL 1.0, Stability AI's largest image generation model, takes the response from Jurassic-2 and produces realistic and artistic artwork that reflects the song's keywords, themes, and emotions. We tuned its hyperparameters in Amazon Bedrock's image playground and in a Jupyter Notebook.
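The prompt-to-image step can be sketched the same way. This is a minimal sketch under stated assumptions: the model ID `stability.stable-diffusion-xl-v1`, the 1024×1024 size, and the `cfg_scale`, `steps`, and `seed` defaults are illustrative rather than the values the team tuned, and `build_sdxl_request`, `parse_sdxl_response`, and `prompt_to_image` are hypothetical helpers. Bedrock returns the generated image as a base64 string, which is decoded into raw PNG bytes here.

```python
import base64
import json
from typing import Optional

# Assumed Bedrock model ID for Stable Diffusion XL 1.0; confirm it for your region.
SDXL_MODEL_ID = "stability.stable-diffusion-xl-v1"


def build_sdxl_request(prompt: str, cfg_scale: float = 7.0, steps: int = 30,
                       seed: int = 0, style_preset: Optional[str] = None) -> str:
    """Return the JSON request body for an SDXL text-to-image call on Bedrock."""
    body = {
        "text_prompts": [{"text": prompt, "weight": 1.0}],
        "cfg_scale": cfg_scale,  # how strongly the image follows the prompt
        "steps": steps,          # number of diffusion steps
        "seed": seed,            # fixed seed for reproducible outputs
        "width": 1024,
        "height": 1024,
    }
    if style_preset is not None:
        body["style_preset"] = style_preset  # e.g. "digital-art"
    return json.dumps(body)


def parse_sdxl_response(raw: bytes) -> bytes:
    """Decode the first generated image, raising if generation did not succeed."""
    payload = json.loads(raw)
    artifact = payload["artifacts"][0]
    reason = artifact.get("finishReason", "SUCCESS")
    if reason != "SUCCESS":
        raise RuntimeError(f"Image generation failed: {reason}")
    return base64.b64decode(artifact["base64"])


def prompt_to_image(client, prompt: str) -> bytes:
    """Send a Jurassic-2 prompt to SDXL through a bedrock-runtime client and return PNG bytes."""
    response = client.invoke_model(
        modelId=SDXL_MODEL_ID,
        body=build_sdxl_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    return parse_sdxl_response(response["body"].read())
```

The returned bytes can be written straight to a `.png` file or sent to the web application for display.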

The web application was built with React and styled with Tailwind CSS.

| Song | Artwork |
|---|---|
| Happy by Pharrell Williams |  |
| Falling by Harry Styles |  |
| Sunroof by Nicky Youre |  |
| Talking To The Moon by Bruno Mars |  |
| Twinkle Twinkle Little Star |  |
| Wake Me Up by Avicii |  |