Utilities for inspecting CUDA memory usage in PyTorch and fastai.

pip install -qq git+https://github.com/arampacha/pt_memprofile.git
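As background for the usage examples, PyTorch itself exposes the raw counters that any CUDA memory inspection tool can read. The snippet below is a minimal sketch, not code from this package: `cuda_memory_snapshot` is a hypothetical helper name, and it assumes only the standard `torch.cuda` memory functions. It returns `None` when PyTorch or a CUDA device is unavailable.

```python
try:
    import torch
except ImportError:  # PyTorch not installed: the helper below returns None
    torch = None


def cuda_memory_snapshot(device=None):
    """Return current CUDA memory counters in MiB, or None without CUDA.

    Hypothetical helper for illustration; it is not part of pt_memprofile.
    """
    if torch is None or not torch.cuda.is_available():
        return None
    mib = 1024 ** 2
    return {
        # memory currently occupied by live tensors
        "allocated_mib": torch.cuda.memory_allocated(device) / mib,
        # memory held by PyTorch's caching allocator (always >= allocated)
        "reserved_mib": torch.cuda.memory_reserved(device) / mib,
        # highest allocated value since the last peak reset
        "peak_allocated_mib": torch.cuda.max_memory_allocated(device) / mib,
    }


snapshot = cuda_memory_snapshot()
print(snapshot if snapshot is not None else "CUDA is not available")
```

Calling such a helper before and after a forward or backward pass gives a rough picture of where memory grows; `torch.cuda.reset_peak_memory_stats()` can be used to restart the peak counter between measurements.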

bs, sl = 8, 128

dls = DataLoaders.from_dsets(DeterministicTwinSequence(sl, 10*bs),
                             DeterministicTwinSequence(sl, 2*bs),
                             bs=bs, shuffle=False, device='cuda')

model = TransformerLM(128, 256)

xb, yb = dls.one_batch()

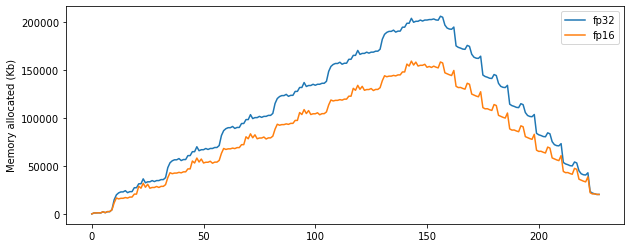

memlog1 = memprofile(model, xb, yb, plot=False, label='fp32')

memlog2 = memprofile(model, xb, yb, plot=False, label='fp16', fp16=True)

plot_logs(memlog1, memlog2)

learn = Learner(dls, model, loss_func=CrossEntropyLossFlat())

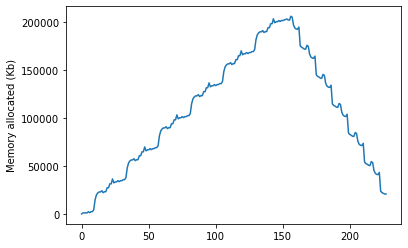

memlog1 = learn.profile_memory()

{% include note.html content='Memory profiling slows down training significantly, so you probably don’t want to run it for a complete epoch.' %}

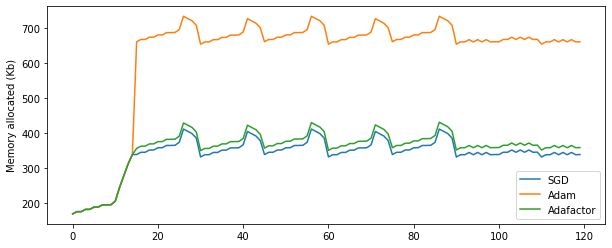

Let's examine the effect of the optimizer on memory usage:


learn = Learner(simple_dls(), simple_model(), loss_func=CrossEntropyLossFlat(), cbs=MemStatsCallback(label='SGD'), opt_func=SGD)

with learn.no_bar(): learn.fit(1, 1e-3, cbs=[ShortEpochCallback(pct=0.1)])

memlog1 = learn.mem_stats.stats

learn = Learner(simple_dls(), simple_model(), loss_func=CrossEntropyLossFlat(), cbs=MemStatsCallback(label='Adam'), opt_func=Adam)

with learn.no_bar(): learn.fit(1, 1e-3, cbs=[ShortEpochCallback(pct=0.1)])

memlog2 = learn.mem_stats.stats

learn = Learner(simple_dls(), simple_model(), loss_func=CrossEntropyLossFlat(), cbs=MemStatsCallback(label='Adafactor'), opt_func=adafactor)

with learn.no_bar(): learn.fit(1, 1e-3, cbs=[ShortEpochCallback(pct=0.1)])

memlog3 = learn.mem_stats.stats

plot_logs(memlog1, memlog2, memlog3)
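To put the comparison in context, the extra memory each optimizer needs can be roughly estimated from the parameter shapes alone. The following is a back-of-the-envelope sketch under stated assumptions, not a measurement and not this package's code: plain SGD without momentum keeps no per-parameter state, Adam keeps two buffers per parameter, and Adafactor (simplified here) stores only a row vector and a column vector for each 2-D parameter. The function name and the example shapes are hypothetical.

```python
from math import prod


def optimizer_state_elements(param_shapes, optimizer):
    """Estimate the number of optimizer-state elements for given parameter shapes.

    Simplifying assumptions (illustrative only):
      - "sgd": no momentum, hence no per-parameter state
      - "adam": first and second moment, i.e. 2 elements per parameter
      - "adafactor": no first moment; second moment factored into row and
        column statistics for 2-D parameters, stored in full otherwise
    """
    total = 0
    for shape in param_shapes:
        n = prod(shape)
        if optimizer == "sgd":
            continue
        elif optimizer == "adam":
            total += 2 * n
        elif optimizer == "adafactor":
            total += sum(shape) if len(shape) == 2 else n
        else:
            raise ValueError(f"unknown optimizer: {optimizer}")
    return total


# Hypothetical shapes: an embedding matrix, a linear weight and its bias
example_shapes = [(128, 256), (256, 256), (256,)]

for name in ("sgd", "adam", "adafactor"):
    elements = optimizer_state_elements(example_shapes, name)
    # 4 bytes per element, assuming the state is kept in fp32
    print(f"{name:>9}: {elements:>7} elements, {elements * 4 / 1024:.1f} KiB")
```

Measured curves also include weights, gradients, and activations, so the gap between optimizers in a real profile is usually smaller in relative terms than these state-only numbers suggest.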