Shaolei Zhang, Qingkai Fang, Shoutao Guo, Zhengrui Ma, Min Zhang, Yang Feng*

Code for the ACL 2024 paper "StreamSpeech: Simultaneous Speech-to-Speech Translation with Multi-task Learning" (paper coming soon).

🎧 Listen to StreamSpeech's translated speech 🎧

💡Highlight:

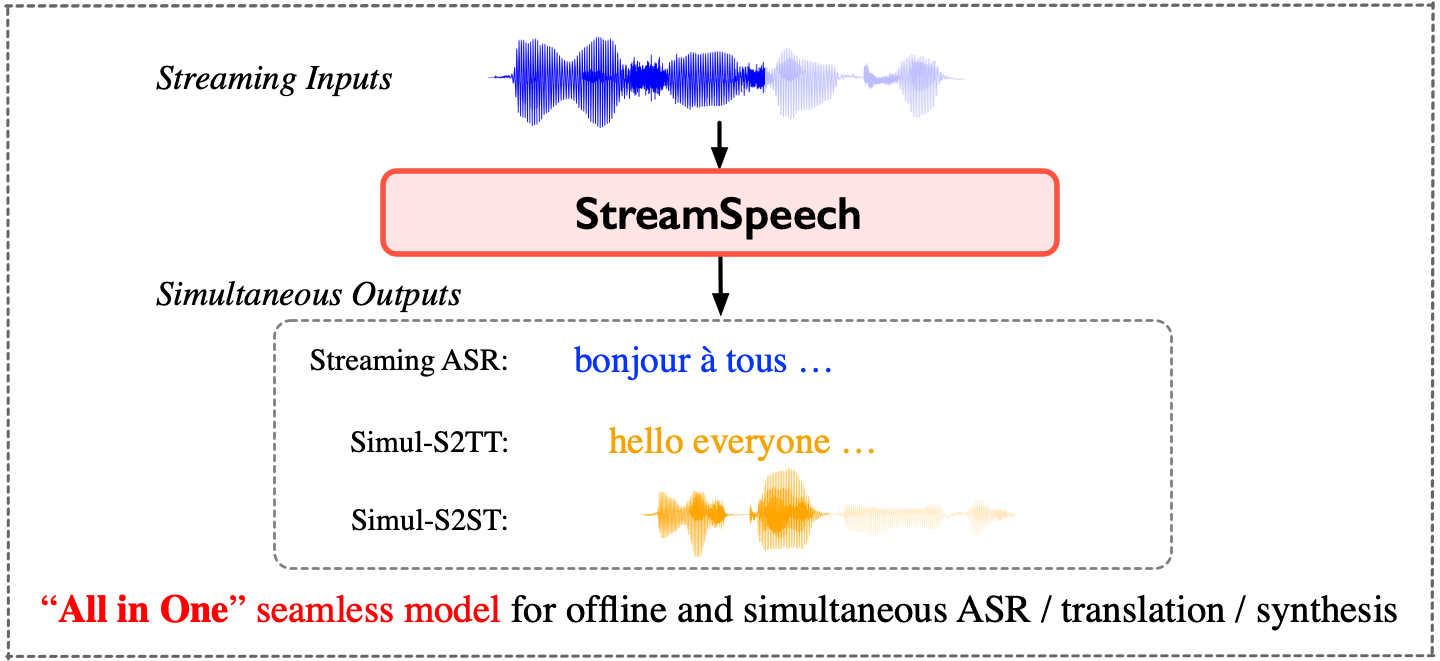

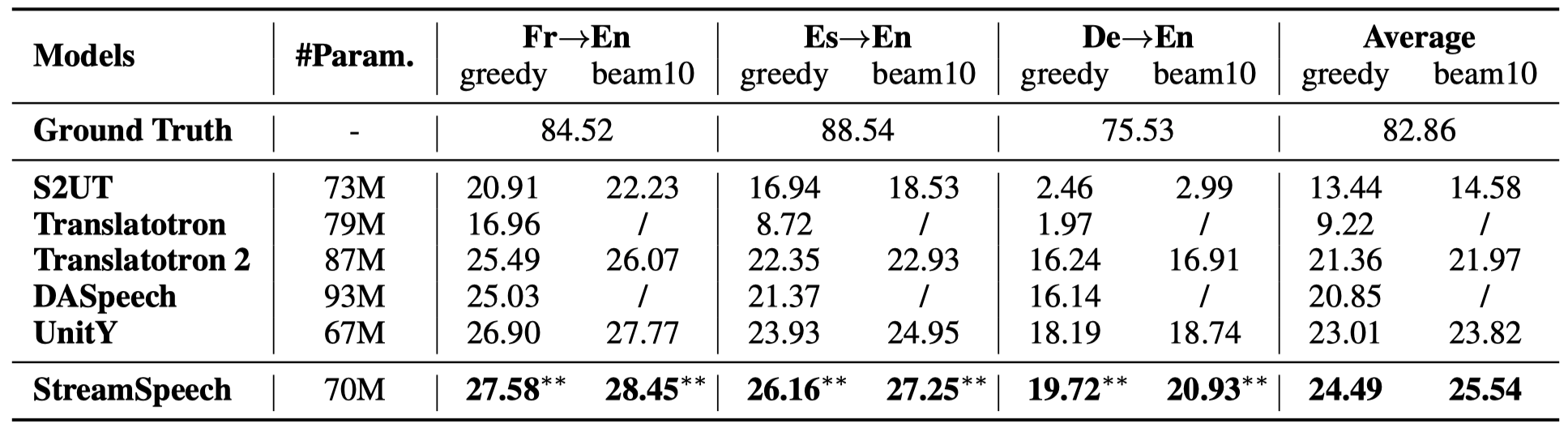

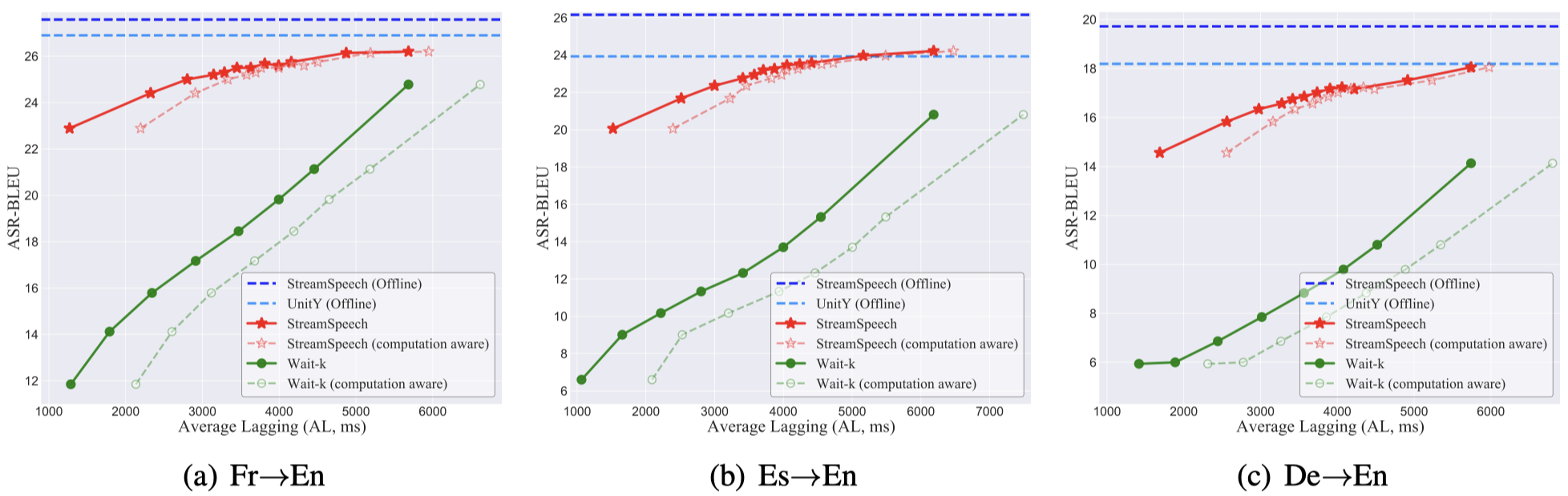

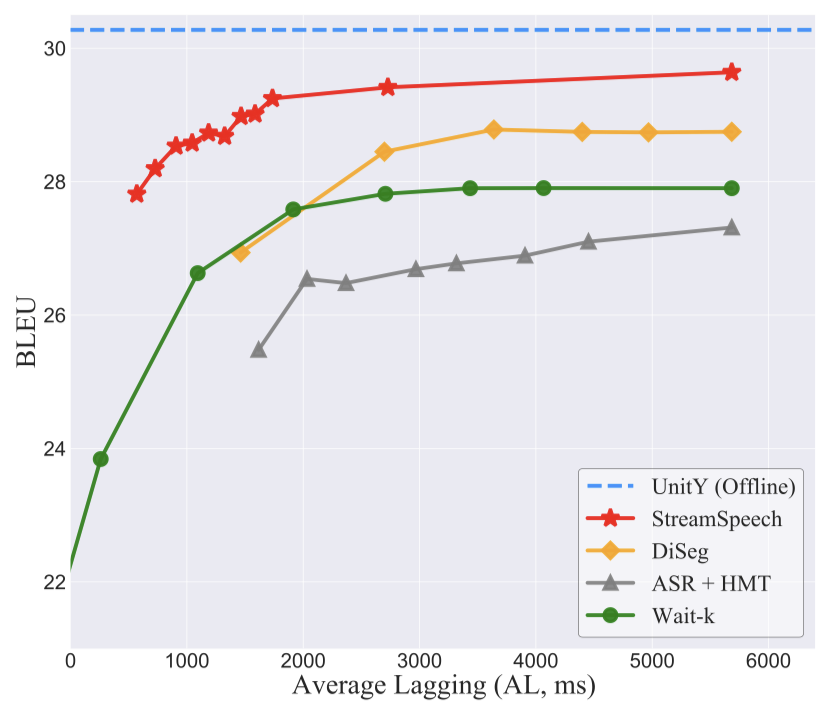

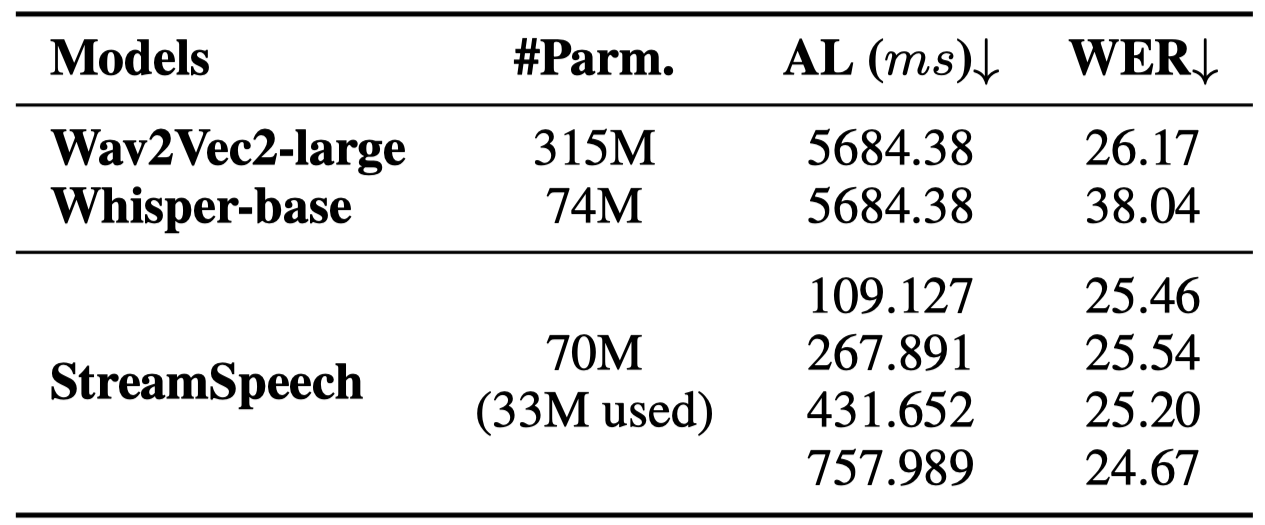

- StreamSpeech achieves state-of-the-art performance on both offline and simultaneous speech-to-speech translation.

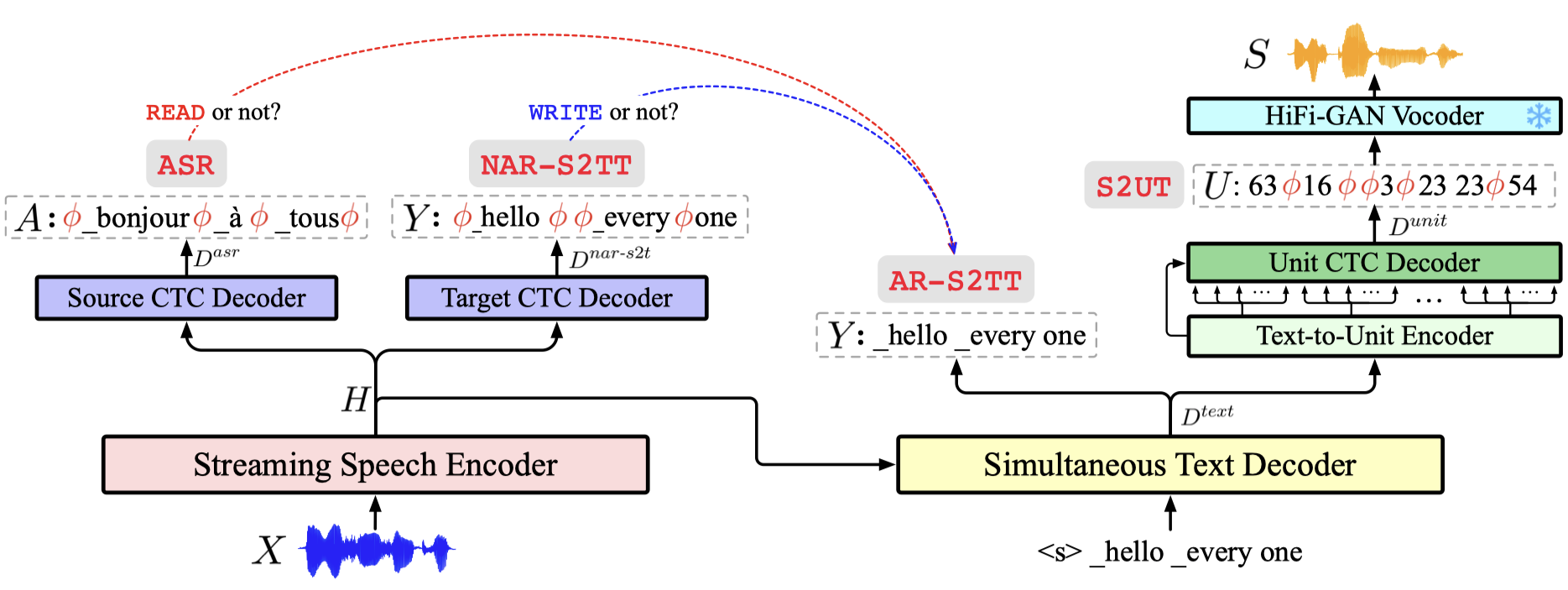

- StreamSpeech performs streaming ASR, simultaneous speech-to-text translation and simultaneous speech-to-speech translation via an "All in One" seamless model.

- StreamSpeech can present intermediate results (i.e., ASR or translation results) during simultaneous translation, offering a more comprehensive low-latency communication experience.

- Python == 3.10, PyTorch == 2.0.1

- Install fairseq & SimulEval:

```bash
cd fairseq
pip install --editable ./ --no-build-isolation
cd ../SimulEval  # assuming SimulEval sits next to fairseq in the repo root
pip install --editable ./
```

| Language | UnitY | StreamSpeech (offline) | StreamSpeech (simultaneous) |
|---|---|---|---|
| Fr-En | unity.fr-en.pt [Huggingface] [Baidu] | streamspeech.offline.fr-en.pt [Huggingface] [Baidu] | streamspeech.simultaneous.fr-en.pt [Huggingface] [Baidu] |
| Es-En | unity.es-en.pt [Huggingface] [Baidu] | streamspeech.offline.es-en.pt [Huggingface] [Baidu] | streamspeech.simultaneous.es-en.pt [Huggingface] [Baidu] |
| De-En | unity.de-en.pt [Huggingface] [Baidu] | streamspeech.offline.de-en.pt [Huggingface] [Baidu] | streamspeech.simultaneous.de-en.pt [Huggingface] [Baidu] |

| Unit config | Unit size | Vocoder language | Dataset | Model |
|---|---|---|---|---|
| mHuBERT, layer 11 | 1000 | En | LJSpeech | ckpt, config |

Replace `/Users/arararz/Documents/GitHub/StreamSpeech` in `configs/fr-en/config_gcmvn.yaml` and `configs/fr-en/config_mtl_asr_st_ctcst.yaml` with the local path of your StreamSpeech repo.
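The substitution can also be scripted. The following is a minimal sketch, assuming the placeholder path appears literally in both YAML files; the helper names `rewrite_repo_root` and `patch_configs` are hypothetical and not part of the StreamSpeech repo.

```python
from pathlib import Path

# Placeholder path hard-coded in the shipped configs (taken from the text above).
OLD_ROOT = "/Users/arararz/Documents/GitHub/StreamSpeech"

# Config files named in the instructions, relative to the repo root.
CONFIG_FILES = (
    "configs/fr-en/config_gcmvn.yaml",
    "configs/fr-en/config_mtl_asr_st_ctcst.yaml",
)


def rewrite_repo_root(text: str, new_root: str, old_root: str = OLD_ROOT) -> str:
    """Return `text` with every occurrence of `old_root` replaced by `new_root`."""
    return text.replace(old_root, new_root.rstrip("/"))


def patch_configs(repo_root: str) -> list[str]:
    """Rewrite the config files in place; return the paths that were changed."""
    changed = []
    new_root = str(Path(repo_root).resolve())
    for rel in CONFIG_FILES:
        path = Path(repo_root) / rel
        if not path.is_file():
            continue  # skip configs that are not present
        original = path.read_text(encoding="utf-8")
        patched = rewrite_repo_root(original, new_root)
        if patched != original:
            path.write_text(patched, encoding="utf-8")
            changed.append(str(path))
    return changed
```

Calling `patch_configs(".")` from the repo root rewrites both files in place and returns the ones it changed. Configs for other language pairs would need to be added to `CONFIG_FILES`.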

Prepare test data following SimulEval format. ./example provides an example:

- wav_list.txt: Each line records the path of a source speech.

- target.txt: Each line records the reference text, e.g., target translation or source transcription (used to calculate the metrics).
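Since the two files are matched line by line, a quick consistency check before running SimulEval can save a failed run. This is a sketch rather than part of the repo: the function name `pair_inputs` is hypothetical, and it relies only on the one-entry-per-line layout described above.

```python
def pair_inputs(wav_list_text: str, target_text: str) -> list[tuple[str, str]]:
    """Pair each source wav path with its reference line.

    Both arguments are the raw contents of wav_list.txt and target.txt.
    Raises ValueError if the files disagree in length or a wav path is blank.
    """
    wavs = wav_list_text.splitlines()
    refs = target_text.splitlines()
    if len(wavs) != len(refs):
        raise ValueError(f"{len(wavs)} source paths but {len(refs)} references")
    for i, wav in enumerate(wavs, start=1):
        if not wav.strip():
            raise ValueError(f"line {i} of the wav list is empty")
    return list(zip(wavs, refs))


# Example use with the files shipped in ./example:
#   from pathlib import Path
#   pairs = pair_inputs(Path("example/wav_list.txt").read_text(),
#                       Path("example/target.txt").read_text())
```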

Run these scripts to perform inference with StreamSpeech on streaming ASR, simultaneous S2TT and simultaneous S2ST.

Use `--source-segment-size` to set the chunk size (in milliseconds) and control the latency.
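To make the chunk-size setting concrete, the arithmetic below converts a chunk size in milliseconds into samples and counts how many chunks an utterance is split into. It is a sketch under one stated assumption, 16 kHz input audio (the sample rate is not given here), and the function names are illustrative.

```python
import math

SAMPLE_RATE_HZ = 16_000  # assumption: 16 kHz input audio


def samples_per_chunk(chunk_ms: int, sample_rate_hz: int = SAMPLE_RATE_HZ) -> int:
    """Number of audio samples contained in one chunk of `chunk_ms` milliseconds."""
    return chunk_ms * sample_rate_hz // 1000


def num_chunks(duration_ms: float, chunk_ms: int) -> int:
    """Number of chunks needed to cover an utterance; the last chunk may be partial."""
    return math.ceil(duration_ms / chunk_ms)


print(samples_per_chunk(320))  # 5120
print(num_chunks(5000, 320))   # 16
print(num_chunks(5000, 640))   # 8
```

A smaller chunk size lets the model act on less source speech at a time, at the cost of more processing steps per utterance.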

Simultaneous Speech-to-Speech Translation

--output-asr-translation: whether to output the intermediate ASR and translated text results during simultaneous speech-to-speech translation.

```bash
export CUDA_VISIBLE_DEVICES=0 # GPU to use (goes with --device gpu below)

ROOT=/Users/arararz/Documents/GitHub/StreamSpeech # path to StreamSpeech repo
PRETRAIN_ROOT=/data/zhangshaolei/pretrain_models
VOCODER_CKPT=$PRETRAIN_ROOT/unit-based_HiFi-GAN_vocoder/mHuBERT.layer11.km1000.en/g_00500000 # path to downloaded Unit-based HiFi-GAN Vocoder checkpoint
VOCODER_CFG=$PRETRAIN_ROOT/unit-based_HiFi-GAN_vocoder/mHuBERT.layer11.km1000.en/config.json # path to downloaded Unit-based HiFi-GAN Vocoder config

LANG=fr
file=streamspeech.simultaneous.${LANG}-en.pt # path to downloaded StreamSpeech model
output_dir=$ROOT/res/streamspeech.simultaneous.${LANG}-en/simul-s2st

chunk_size=320 #ms

PYTHONPATH=$ROOT/fairseq simuleval --data-bin ${ROOT}/configs/${LANG}-en \
    --user-dir ${ROOT}/researches/ctc_unity --agent-dir ${ROOT}/agent \
    --source example/wav_list.txt --target example/target.txt \
    --model-path $file \
    --config-yaml config_gcmvn.yaml --multitask-config-yaml config_mtl_asr_st_ctcst.yaml \
    --agent $ROOT/agent/speech_to_speech.streamspeech.agent.py \
    --vocoder $VOCODER_CKPT --vocoder-cfg $VOCODER_CFG --dur-prediction \
    --output $output_dir/chunk_size=$chunk_size \
    --source-segment-size $chunk_size \
    --quality-metrics ASR_BLEU --target-speech-lang en --latency-metrics AL AP DAL StartOffset EndOffset LAAL ATD NumChunks DiscontinuitySum DiscontinuityAve DiscontinuityNum RTF \
    --device gpu --computation-aware \
    --output-asr-translation True
```

Simultaneous Speech-to-Text Translation

```bash
export CUDA_VISIBLE_DEVICES=0 # GPU to use (goes with --device gpu below)

ROOT=/Users/arararz/Documents/GitHub/StreamSpeech # path to StreamSpeech repo

LANG=fr
file=streamspeech.simultaneous.${LANG}-en.pt # path to downloaded StreamSpeech model
output_dir=$ROOT/res/streamspeech.simultaneous.${LANG}-en/simul-s2tt

chunk_size=320 #ms

PYTHONPATH=$ROOT/fairseq simuleval --data-bin ${ROOT}/configs/${LANG}-en \
    --user-dir ${ROOT}/researches/ctc_unity --agent-dir ${ROOT}/agent \
    --source example/wav_list.txt --target example/target.txt \
    --model-path $file \
    --config-yaml config_gcmvn.yaml --multitask-config-yaml config_mtl_asr_st_ctcst.yaml \
    --agent $ROOT/agent/speech_to_text.s2tt.streamspeech.agent.py \
    --output $output_dir/chunk_size=$chunk_size \
    --source-segment-size $chunk_size \
    --quality-metrics BLEU --latency-metrics AL AP DAL StartOffset EndOffset LAAL ATD NumChunks RTF \
    --device gpu --computation-aware
```

Streaming ASR

```bash
export CUDA_VISIBLE_DEVICES=0 # GPU to use (goes with --device gpu below)

ROOT=/Users/arararz/Documents/GitHub/StreamSpeech # path to StreamSpeech repo

LANG=fr
file=streamspeech.simultaneous.${LANG}-en.pt # path to downloaded StreamSpeech model
output_dir=$ROOT/res/streamspeech.simultaneous.${LANG}-en/streaming-asr

chunk_size=320 #ms

PYTHONPATH=$ROOT/fairseq simuleval --data-bin ${ROOT}/configs/${LANG}-en \
    --user-dir ${ROOT}/researches/ctc_unity --agent-dir ${ROOT}/agent \
    --source example/wav_list.txt --target example/source.txt \
    --model-path $file \
    --config-yaml config_gcmvn.yaml --multitask-config-yaml config_mtl_asr_st_ctcst.yaml \
    --agent $ROOT/agent/speech_to_text.asr.streamspeech.agent.py \
    --output $output_dir/chunk_size=$chunk_size \
    --source-segment-size $chunk_size \
    --quality-metrics BLEU --latency-metrics AL AP DAL StartOffset EndOffset LAAL ATD NumChunks RTF \
    --device gpu --computation-aware
```

- Follow `./preprocess_scripts` to process CVSS-C data.

Note

You can directly use the downloaded StreamSpeech model for evaluation and skip training.

- Follow `researches/ctc_unity/train_scripts/train.simul-s2st.sh` to train StreamSpeech for simultaneous speech-to-speech translation.
- Follow `researches/ctc_unity/train_scripts/train.offline-s2st.sh` to train StreamSpeech for offline speech-to-speech translation.
- We also provide some other StreamSpeech variants and baseline implementations.

| Model | --user-dir | --arch | Description |
|---|---|---|---|
| Translatotron 2 | researches/translatotron | s2spect2_conformer_modified | Translatotron 2 |
| UnitY | researches/translatotron | unity_conformer_modified | UnitY |
| Uni-UnitY | researches/uni_unity | uni_unity_conformer | Change all encoders in UnitY into unidirectional |
| Chunk-UnitY | researches/chunk_unity | chunk_unity_conformer | Change the Conformer in UnitY into Chunk-based Conformer |
| StreamSpeech | researches/ctc_unity | streamspeech | StreamSpeech |
| HMT | researches/hmt | hmt_transformer_iwslt_de_en | HMT: strong simultaneous text-to-text translation method |
| DiSeg | researches/diseg | convtransformer_espnet_base_seg | DiSeg: strong simultaneous speech-to-text translation method |

Tip

The `train_scripts/` and `test_scripts/` directories under each `--user-dir` contain the training and testing scripts for that model.

Refer to the official repos of UnitY, Translatotron 2, HMT and DiSeg for more details.

Follow pred.offline-s2st.sh to evaluate the offline performance of StreamSpeech on ASR, S2TT and S2ST.

A trained StreamSpeech model can be used for streaming ASR, simultaneous speech-to-text translation and simultaneous speech-to-speech translation. We provide agent/ for these three tasks:

- `agent/speech_to_speech.streamspeech.agent.py`: simultaneous speech-to-speech translation
- `agent/speech_to_text.s2tt.streamspeech.agent.py`: simultaneous speech-to-text translation
- `agent/speech_to_text.asr.streamspeech.agent.py`: streaming ASR
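When scripting evaluation across the three tasks, the task-to-agent mapping above can be kept in a small lookup. This is an illustrative sketch: the agent file names come from the list above, the task keys reuse the output directory names from the inference scripts, and the helper `agent_path` is hypothetical.

```python
from pathlib import Path

# Task label -> agent file name under agent/ (file names as listed above).
AGENTS = {
    "simul-s2st": "speech_to_speech.streamspeech.agent.py",
    "simul-s2tt": "speech_to_text.s2tt.streamspeech.agent.py",
    "streaming-asr": "speech_to_text.asr.streamspeech.agent.py",
}


def agent_path(root: str, task: str) -> Path:
    """Return the agent script for `task` inside the StreamSpeech repo at `root`."""
    if task not in AGENTS:
        raise KeyError(f"unknown task {task!r}; expected one of {sorted(AGENTS)}")
    return Path(root) / "agent" / AGENTS[task]
```

The returned path is the value to pass to the `--agent` option of `simuleval`.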

Follow simuleval.simul-s2st.sh, simuleval.simul-s2tt.sh, simuleval.streaming-asr.sh to evaluate StreamSpeech.

Our project page (https://ictnlp.github.io/StreamSpeech-site/) provides samples of translated speech generated by StreamSpeech. Have a listen 🎧.

If you have any questions, please feel free to submit an issue or contact zhangshaolei20z@ict.ac.cn.

If our work is useful for you, please cite as:

```
@inproceedings{streamspeech,
      title = "StreamSpeech: Simultaneous Speech-to-Speech Translation with Multi-task Learning",
      author = "Zhang, Shaolei and
                Fang, Qingkai and
                Guo, Shoutao and
                Ma, Zhengrui and
                Zhang, Min and
                Feng, Yang",
      booktitle = "Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Long Papers)",
      year = "2024",
      publisher = "Association for Computational Linguistics"
}
```