- Initialize the virtual environment

conda create --name redhen python=3.9

conda activate redhen

conda install --file requirements.txt

- Run the annotator code

python3 annotator.py

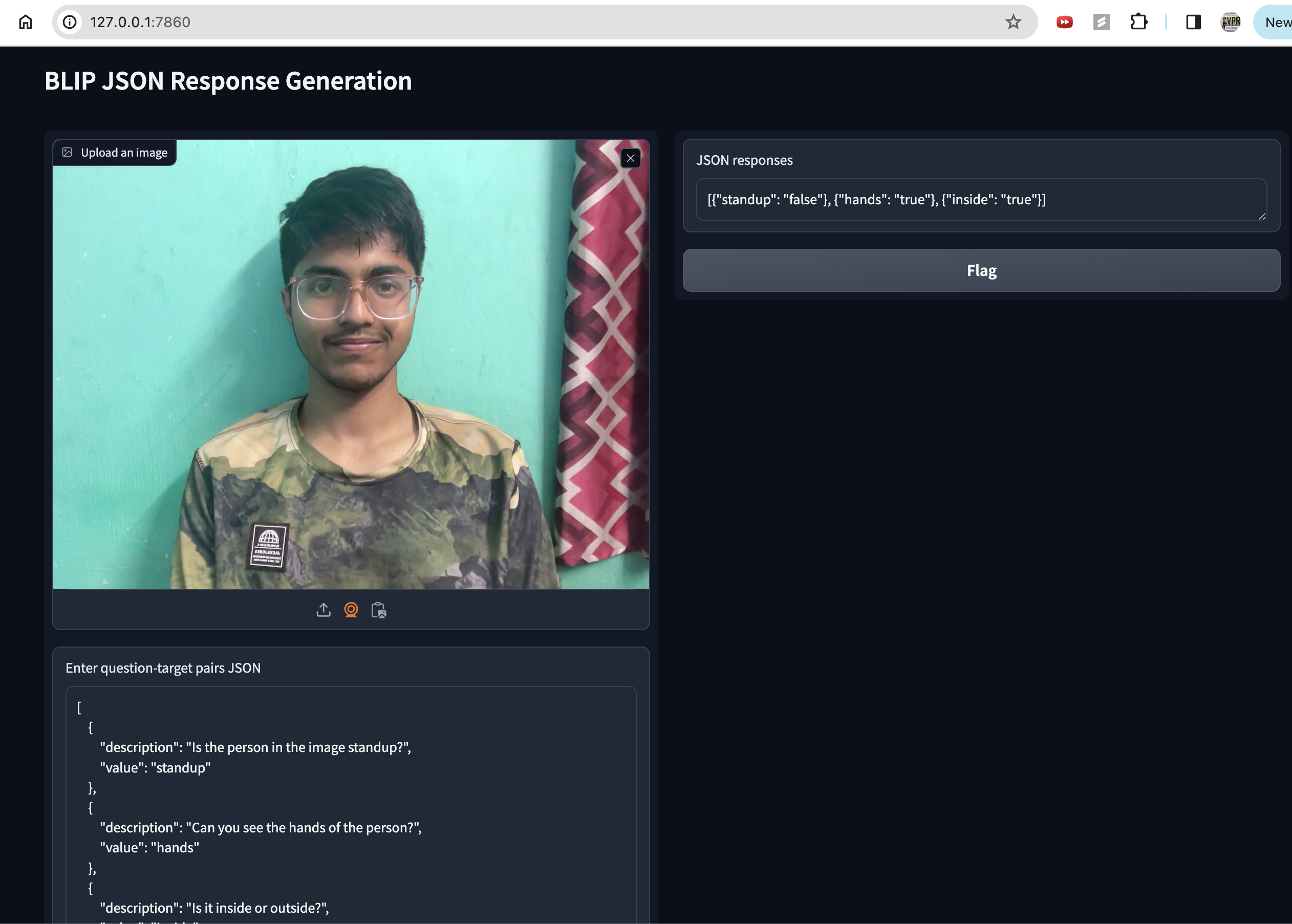

- Upload the image `person.png` or use the webcam

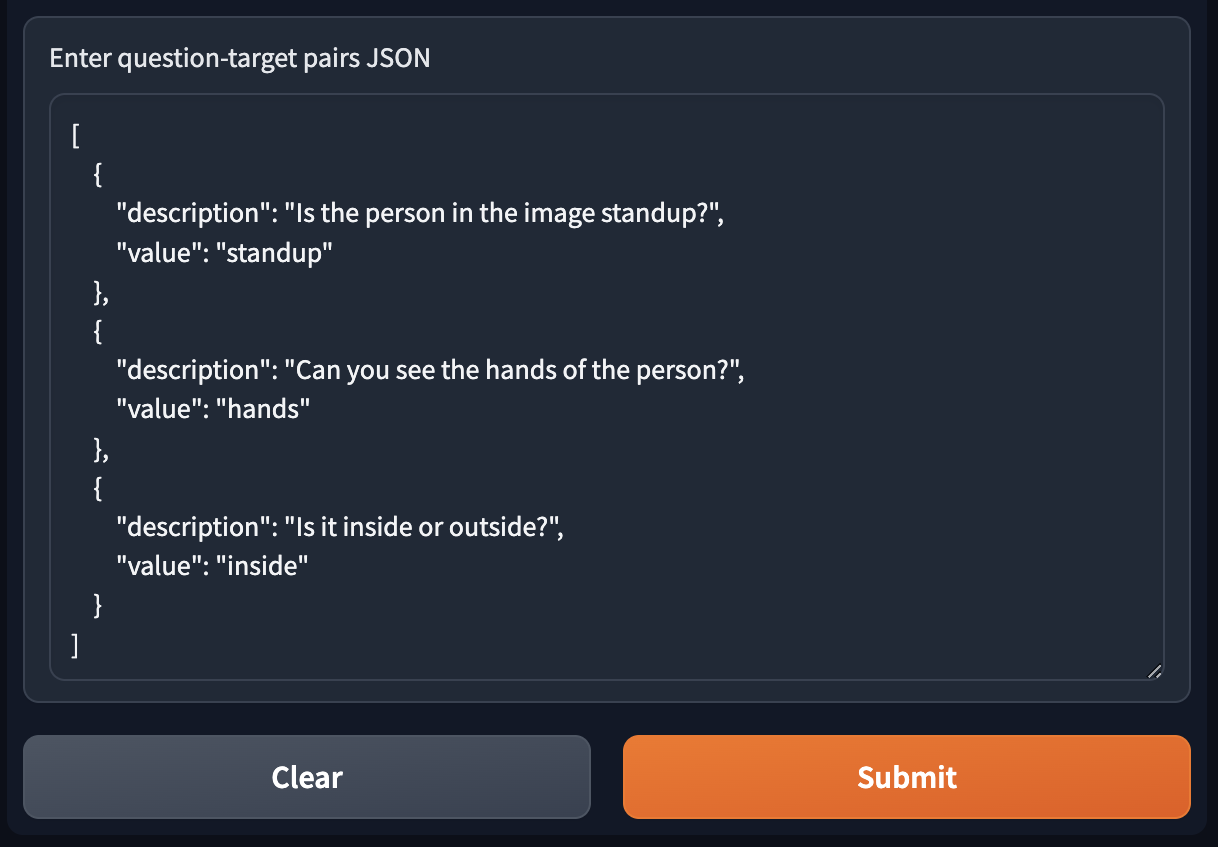

- Copy the JSON from `QA.txt` and submit it

Gradio provides the web-based interface, where users upload an image and enter question-target pairs. The interface components (image upload and text input) are declared through Gradio's API and connected to the underlying function.
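As a rough illustration of how such an interface can be wired up, the sketch below declares an image input and a text input and connects them to a callback. It is not the code from `annotator.py`: the function names `parse_pairs`, `annotate`, and `build_demo` are hypothetical, and the JSON layout (one object mapping each question to its target) is an assumption about `QA.txt`.

```python
import json


def parse_pairs(raw: str) -> dict:
    """Parse pasted JSON into a {question: target} mapping (assumed layout)."""
    pairs = json.loads(raw)
    if not isinstance(pairs, dict):
        raise ValueError("expected a JSON object mapping questions to targets")
    return {str(question): str(target) for question, target in pairs.items()}


def annotate(image, raw_pairs: str) -> dict:
    """Placeholder callback: echoes the parsed pairs instead of running a model."""
    pairs = parse_pairs(raw_pairs)
    return {"image_received": image is not None, "pairs": pairs}


def build_demo():
    """Declare the components and connect them to the callback."""
    import gradio as gr  # imported lazily so the helpers above work without Gradio

    return gr.Interface(
        fn=annotate,
        inputs=[
            gr.Image(type="pil", label="Image"),
            gr.Textbox(lines=6, label="Question-target pairs (JSON)"),
        ],
        outputs=gr.JSON(label="Result"),
    )


# To start the local web server:
# build_demo().launch()
```

Keeping the parsing in its own function means it can be checked without starting the web server.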

BLIP (Bootstrapping Language-Image Pre-training) models, such as the one used in the code (Salesforce/blip-vqa-base), are trained jointly on images and text. This makes them well suited to multimodal tasks such as answering questions about images.
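A minimal sketch of querying this checkpoint through the Hugging Face `transformers` library follows. It uses the `BlipProcessor` and `BlipForQuestionAnswering` classes; the helper names `load_blip`, `ask`, and `answer_all` are hypothetical and may not match how `annotator.py` is organized.

```python
MODEL_ID = "Salesforce/blip-vqa-base"


def load_blip(model_id: str = MODEL_ID):
    """Load the processor and model (downloads the weights on first use)."""
    from transformers import BlipForQuestionAnswering, BlipProcessor

    processor = BlipProcessor.from_pretrained(model_id)
    model = BlipForQuestionAnswering.from_pretrained(model_id)
    return processor, model


def ask(processor, model, image, question: str) -> str:
    """Answer one question about a PIL image."""
    inputs = processor(image, question, return_tensors="pt")
    output_ids = model.generate(**inputs)
    return processor.decode(output_ids[0], skip_special_tokens=True)


def answer_all(image, questions, ask_fn) -> dict:
    """Apply ask_fn(image, question) to every question and collect the answers."""
    return {question: ask_fn(image, question) for question in questions}


# Example usage (requires transformers, torch, and Pillow):
# from PIL import Image
# processor, model = load_blip()
# image = Image.open("person.png").convert("RGB")
# print(ask(processor, model, image, "What is the person wearing?"))
```

Passing the question-answering function into `answer_all` keeps the loop independent of the model, so it can be exercised with a stub.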

Semantic similarity scores, computed with models such as Sentence Transformers, quantify how close two pieces of text are in meaning. Comparing the answer generated by the BLIP model with the expected value shows whether the answer matches the target semantically, even when the wording differs.
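The comparison can be sketched as a cosine similarity between two sentence embeddings followed by a threshold check. Everything specific here is an assumption rather than something taken from the project: the embedding model name `all-MiniLM-L6-v2`, the `0.7` threshold, and the helper names `embed` and `matches_target`.

```python
import math


def cosine_similarity(u, v) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return float(dot / norm) if norm else 0.0


def embed(texts, model_name: str = "all-MiniLM-L6-v2"):
    """Encode texts with Sentence Transformers (model name is an assumption)."""
    from sentence_transformers import SentenceTransformer

    return SentenceTransformer(model_name).encode(texts)


def matches_target(answer: str, target: str, threshold: float = 0.7, embed_fn=embed):
    """Return (is_match, score) for a generated answer and its expected target."""
    answer_vec, target_vec = embed_fn([answer, target])
    score = cosine_similarity(answer_vec, target_vec)
    return score >= threshold, score


# Example usage (requires sentence-transformers):
# is_match, score = matches_target("a jacket", "coat")
```

The threshold trades strictness against tolerance for paraphrases, so it is worth tuning on a few known question-target pairs.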