A sophisticated implementation of Retrieval Augmented Generation (RAG) leveraging NVIDIA's AI endpoints and Streamlit. This application transforms document analysis and question-answering through state-of-the-art language models and efficient vector search capabilities.

- 📄 Multi-PDF Document Processing

- 🔍 Advanced Text Chunking System

- 💾 FAISS Vector Store Integration

- ⚡ NVIDIA NIM Endpoints

- 🤖 Llama 3.1 405B Model Support

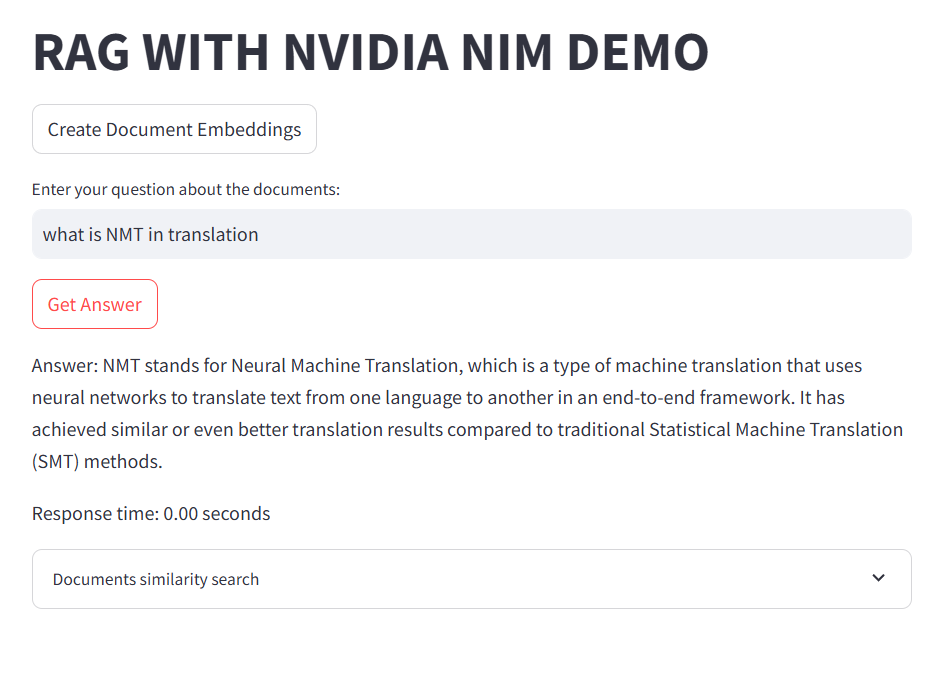

- ⏱️ Real-time Performance Metrics

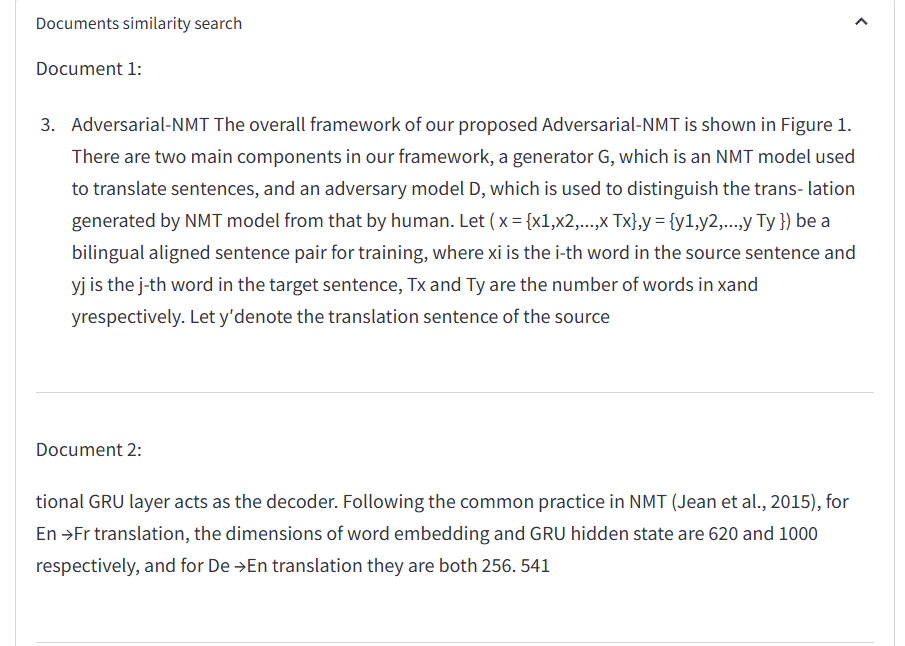

- 📊 Similarity Search Visualization

graph TD

A[PDF Documents] --> B[Document Processor]

B --> C[Text Chunker]

C --> D[NVIDIA Embeddings]

D --> E[FAISS Vector Store]

F[User Query] --> G[Query Processor]

G --> E

E --> H[NVIDIA LLM]

H --> I[Response Generator]

- Python 3.8+

- 8GB RAM minimum

- NVIDIA API access

- Internet connectivity

- PDF processing capabilities

git clone https://github.com/arsath-eng/RAG1-NVIDIA-GENAI.git

cd RAG1-NVIDIA-GENAI# Create virtual environment

python -m venv venv

# Activate environment

# For Windows

.\venv\Scripts\activate

# For Unix/Mac

source venv/bin/activate

# Install dependencies

pip install -r requirements.txt# Create environment file

touch .env

# Add required credentials

NVIDIA_API_KEY=your_api_key_herestreamlit run app.py-

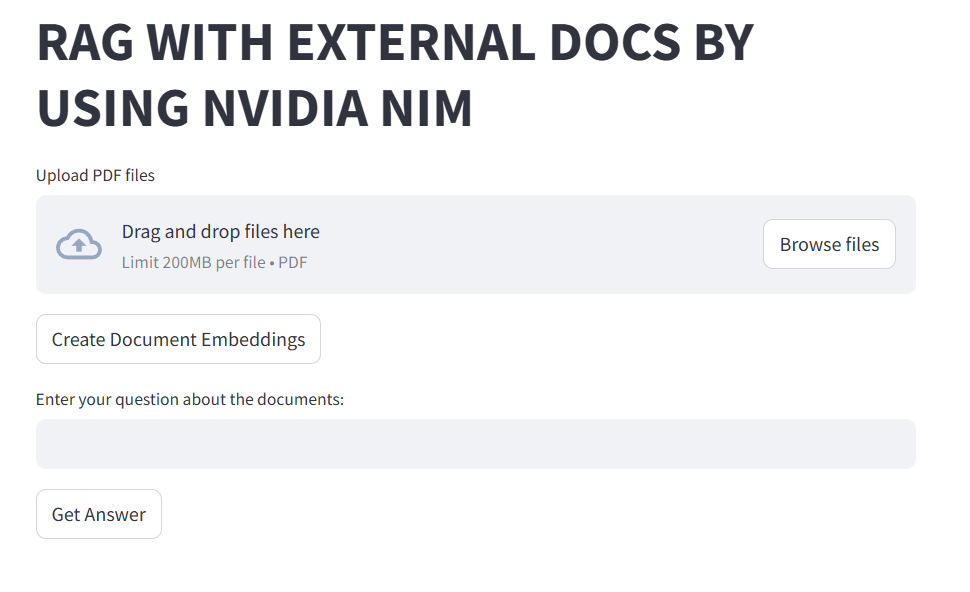

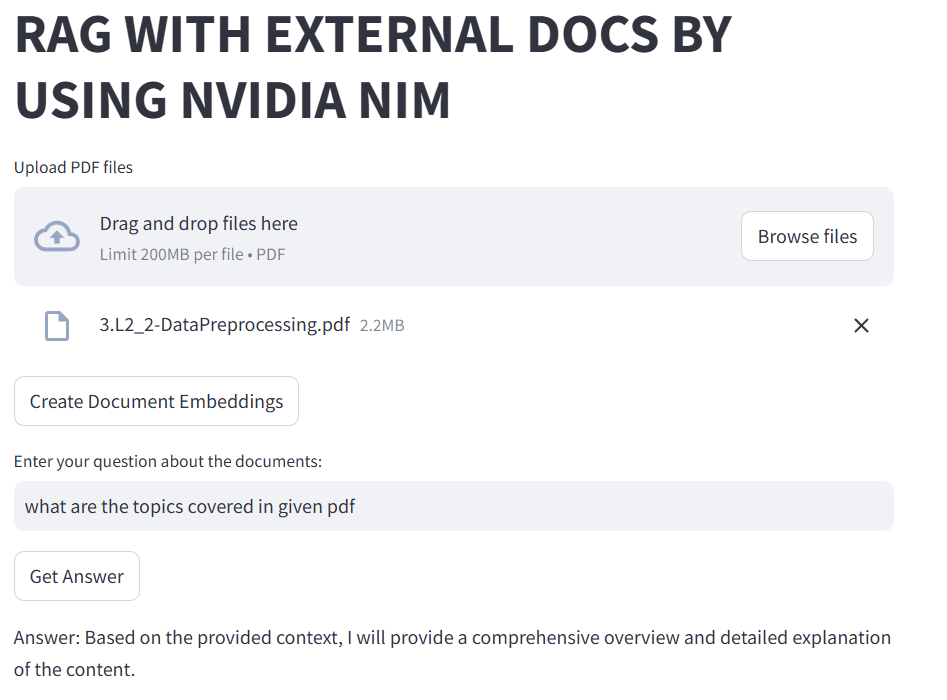

Document Upload

- Support for multiple PDF files

- Automatic text extraction

- Progress tracking

-

Embedding Creation

- Click "Create Document Embeddings"

- Automatic chunking and processing

- Vector store initialization

-

Query Processing

- Enter questions about documents

- Real-time response generation

- View similarity search results

text_splitter = RecursiveCharacterTextSplitter(

chunk_size=700, # Adjust for document length

chunk_overlap=50 # Modify for context preservation

)llm = ChatNVIDIA(

model="meta/llama-3.1-405b-instruct",

temperature=0.7, # Adjust for response creativity

max_tokens=512 # Modify for response length

)- Optimal chunk size selection

- Embedding dimension management

- Index optimization techniques

- Query preprocessing

- Cache implementation

- Batch processing capabilities

- Content-aware text splitting

- Semantic boundary preservation

- Overlap optimization

- Nearest neighbor search

- Similarity threshold tuning

- Result ranking optimization

- Context-aware answers

- Source attribution

- Confidence scoring

- Use clear, well-formatted PDFs

- Ensure text is extractable

- Optimize document size

- Be specific and clear

- Include relevant context

- Use natural language

- Monitor memory usage

- Regular cache clearing

- Performance tracking

-

PDF Processing Errors

- Solution: Check PDF format compatibility

- Verify text extraction capabilities

-

Memory Issues

- Solution: Adjust chunk size

- Implement batch processing

-

API Connection

- Solution: Verify credentials

- Check network connectivity

- Fork repository

- Create feature branch

git checkout -b feature/YourFeature- Commit changes

git commit -m 'Add YourFeature'- Push to branch

git push origin feature/YourFeature- Submit Pull Request

This project is licensed under the MIT License. See LICENSE for details.

- NVIDIA AI Team

- Streamlit Community

- LangChain Contributors

- Meta AI Research

- Create an Issue

- Join Discussions

- Review Documentation

Made with ❤️ by @arsath-eng