Create professional AI art and storyboards instantly from text prompts. Python web app powered by Stable Diffusion XL with advanced features like inpainting, ControlNet, and batch generation.

# Clone repository

git clone https://github.com/arun-gupta/diffusion-lab.git

cd diffusion-lab

# One-command setup and run

./run_webapp.sh

# Open http://localhost:5001 in your browser

# Complete clean setup (removes old environment)

./cleanup_and_reinstall.sh

# Then run the web app

python3 -m diffusionlab.api.webapp

# Open http://localhost:5001 in your browser

# Clone and setup manually

git clone https://github.com/arun-gupta/diffusion-lab.git

cd diffusion-lab

python -m venv venv

source venv/bin/activate # On Windows: venv\Scripts\activate

pip install -r requirements.txt

# Run the web app

python3 -m diffusionlab.api.webapp

# Open http://localhost:5001 in your browser

- 📖 Storyboard Generation: Create 5-panel storyboards with AI images and captions

- 🎨 Single-Image Art: Generate high-quality AI images from text prompts

- 🔄 Batch Generation: Create multiple variations of the same prompt for creative exploration

- 🔄 Image-to-Image: Transform sketches/photos into polished artwork

- 🎯 Inpainting: Remove objects or fill areas with AI-generated content

- 🔗 Prompt Chaining: Create evolving story sequences with multiple prompts

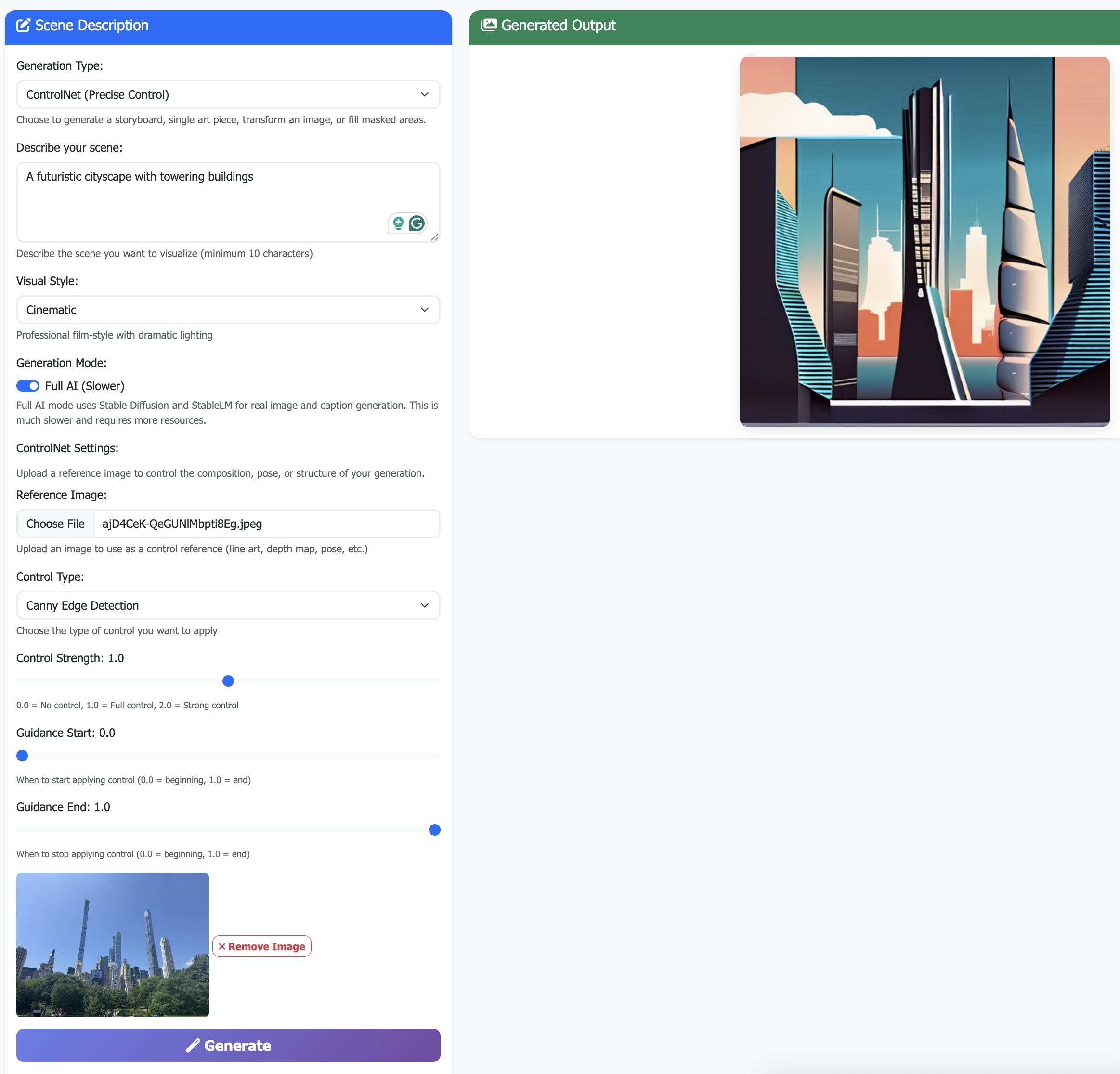

- 🎯 ControlNet: Precise control over composition, pose, and structure using reference images

- 📱 Web Interface: Modern, responsive UI with real-time generation

This application uses state-of-the-art AI models to power its generation capabilities:

Stable Diffusion XL (SDXL):

- Purpose: Main image generation model

- Used for: Text-to-image generation, image-to-image transformation

- Configuration: fp16 variant with safetensors format

- Performance: Optimized for high-quality 512×512 image generation

SDXL Inpainting:

- Purpose: Specialized inpainting pipeline for content-aware fill

- Used for: Object removal, background replacement, creative editing

- Features: Precise mask-based generation with seamless blending

StableLM:

- Purpose: Text generation and caption creation

- Used for:

  - Generating scene variations for storyboards

  - Creating descriptive captions for generated images

  - Text processing and prompt enhancement

- Size: 3 billion parameters optimized for text tasks

The app includes configuration for ControlNet models for precise generation control:

Canny edge detection:

- Use case: Line art, architectural drawings, precise outlines

- Best for: Converting sketches to finished artwork

Depth maps:

- Use case: 3D scenes, architectural visualization, spatial control

- Best for: Creating images with precise depth and perspective

Pose estimation:

- Use case: Character poses, figure drawing, animation

- Best for: Maintaining specific poses from reference images

Segmentation:

- Use case: Object placement, scene composition, layout control

- Best for: Controlling where objects appear in the scene

- CannyDetector: Edge detection preprocessing

- OpenposeDetector: Human pose estimation

- MLSDdetector: Line detection for architectural elements

- HEDdetector: Holistically-nested edge detection

- Primary models (SDXL, SDXL Inpainting, StableLM) are loaded at startup for AI mode

- ControlNet models are loaded on-demand to conserve memory

- Demo mode uses placeholder generation without loading heavy models

- Fallback mechanisms ensure the app works even if some models fail to load
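The loading strategy described above (load on demand, cache, and fall back when a model cannot be loaded) can be sketched in plain Python. This is only an illustration of the pattern, not the app's actual code: `LazyModelRegistry`, the loader functions, and the placeholder strings are all hypothetical names.

```python
from typing import Any, Callable, Dict


class LazyModelRegistry:
    """Load models on first use, cache them, and fall back if loading fails."""

    def __init__(self, fallback: Callable[[str], Any]) -> None:
        self._loaders: Dict[str, Callable[[], Any]] = {}
        self._cache: Dict[str, Any] = {}
        self._fallback = fallback

    def register(self, name: str, loader: Callable[[], Any]) -> None:
        # Registering is cheap: the loader is stored, not called.
        self._loaders[name] = loader

    def get(self, name: str) -> Any:
        if name not in self._cache:
            try:
                self._cache[name] = self._loaders[name]()
            except Exception:
                # Any failure (including an unknown name) degrades to the
                # placeholder instead of crashing the whole app.
                self._cache[name] = self._fallback(name)
        return self._cache[name]


def failing_loader() -> Any:
    # Hypothetical loader that simulates missing model weights.
    raise RuntimeError("weights unavailable")


registry = LazyModelRegistry(fallback=lambda name: f"placeholder:{name}")
registry.register("controlnet_canny", lambda: "canny-model")
registry.register("controlnet_depth", failing_loader)

print(registry.get("controlnet_canny"))
print(registry.get("controlnet_depth"))
```

In a real setup the loaders would return pipeline objects rather than strings; the strings just keep the sketch runnable without any model downloads.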

- Torch dtype: float32 (configurable)

- Model format: Safetensors for faster loading

- Variant: fp16 for SDXL (optimized for memory efficiency)

- Device optimization: CUDA, MPS (Apple Silicon), or CPU fallback

- Memory management: Attention slicing enabled to reduce peak memory usage
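The device preference order listed above (CUDA, then MPS, then CPU) can be expressed as a small helper. This is a minimal sketch; `pick_device` is a hypothetical name and the app may select its device differently.

```python
def pick_device(cuda_available: bool, mps_available: bool) -> str:
    """Return the preferred device name: CUDA first, then MPS, then CPU."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"
    return "cpu"


# With PyTorch installed, the two flags would typically come from
# torch.cuda.is_available() and torch.backends.mps.is_available().
print(pick_device(cuda_available=False, mps_available=True))
```

Taking the availability flags as arguments keeps the helper testable on machines without PyTorch.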

- Model size: ~10GB total for all models

- GPU recommended: CUDA-compatible GPU or Apple Silicon for optimal performance

- Memory requirements: 8GB+ RAM (16GB+ recommended for AI mode)

- Storage: ~10GB for all models and dependencies

- Loading time: ~30-60 seconds for initial model loading
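Before switching to AI mode for the first time, it can help to confirm there is room for the roughly 10GB of models mentioned above. The following sketch uses only the standard library; the function names and the 10GB default are illustrative assumptions taken from the figures in this section.

```python
import shutil


def free_space_gb(path: str = ".") -> float:
    """Free space, in GiB, on the filesystem that contains `path`."""
    return shutil.disk_usage(path).free / 1024**3


def has_room_for_models(path: str = ".", required_gb: float = 10.0) -> bool:
    """True if the filesystem has at least `required_gb` GiB free."""
    return free_space_gb(path) >= required_gb


print(f"Free space: {free_space_gb():.1f} GiB")
print("Enough room for models:", has_room_for_models())
```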

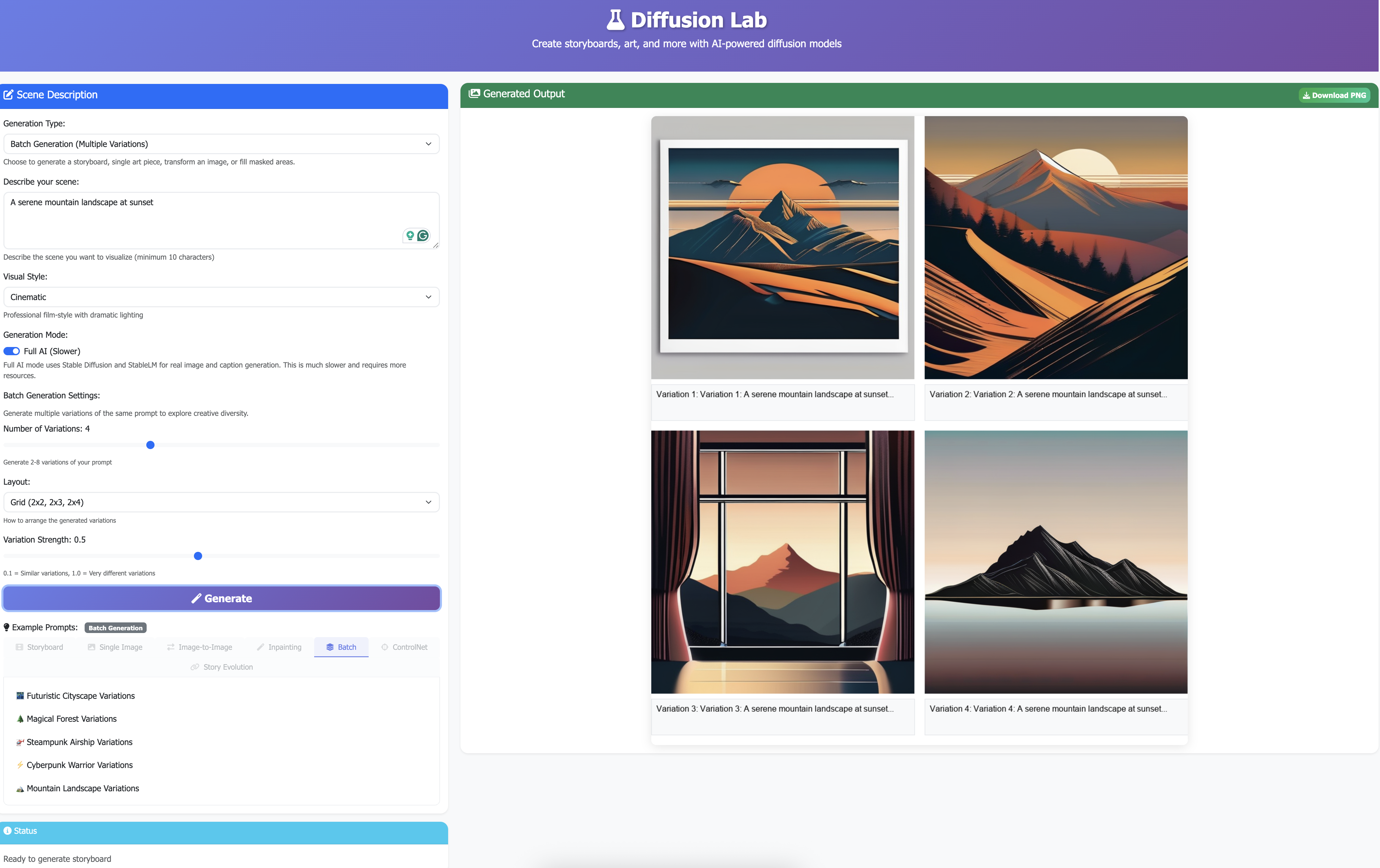

Modern web-based interface with intuitive controls and real-time generation

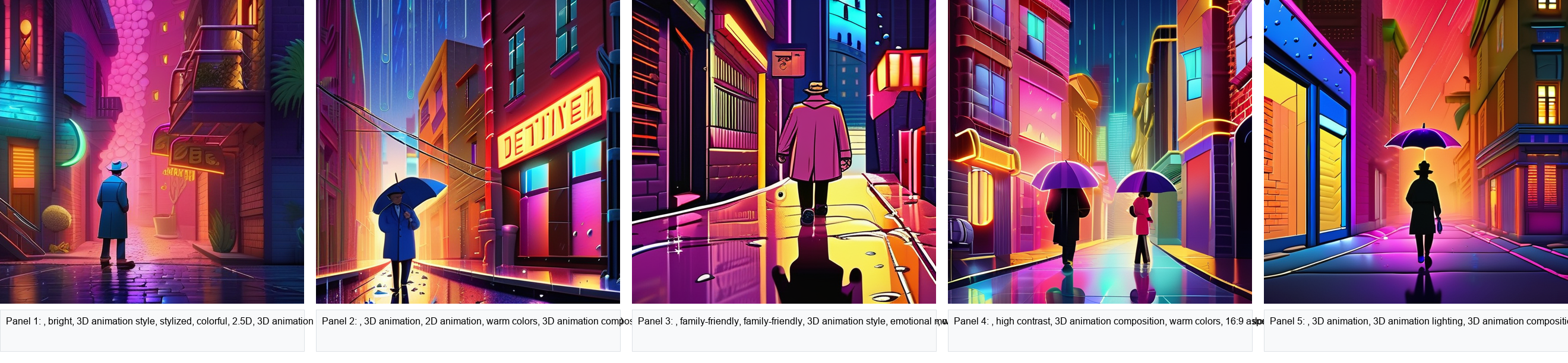

"A detective walks into a neon-lit alley at midnight, rain pouring down" (Cinematic style)

"A spaceship crew encounters an alien artifact on a distant planet" (Pixar style)

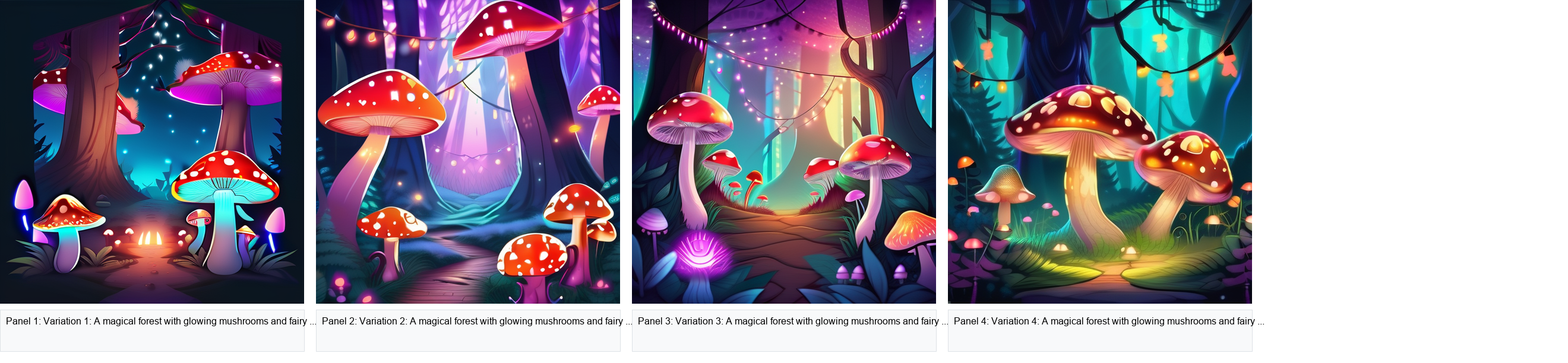

"A magical forest with glowing mushrooms and fairy lights" (4 variations, Anime style, strength: 0.8)

"A polished character design for a sci-fi video game protagonist" (Photorealistic style, strength: 0.5)

| Input Image (1870×2493 → 1024×1024) | Output Image (AI standard size) |
|---|---|

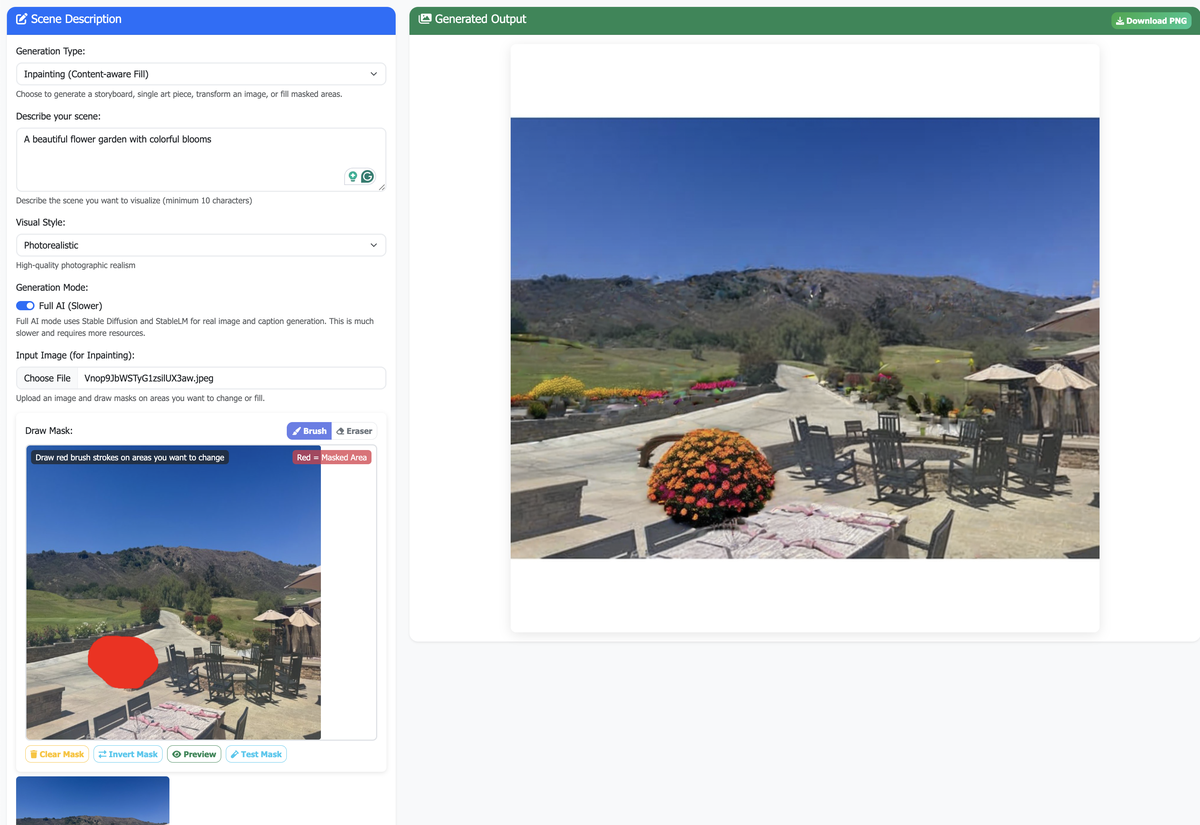

Object removal: "Natural landscape continuation with trees and sky" (Photorealistic style)

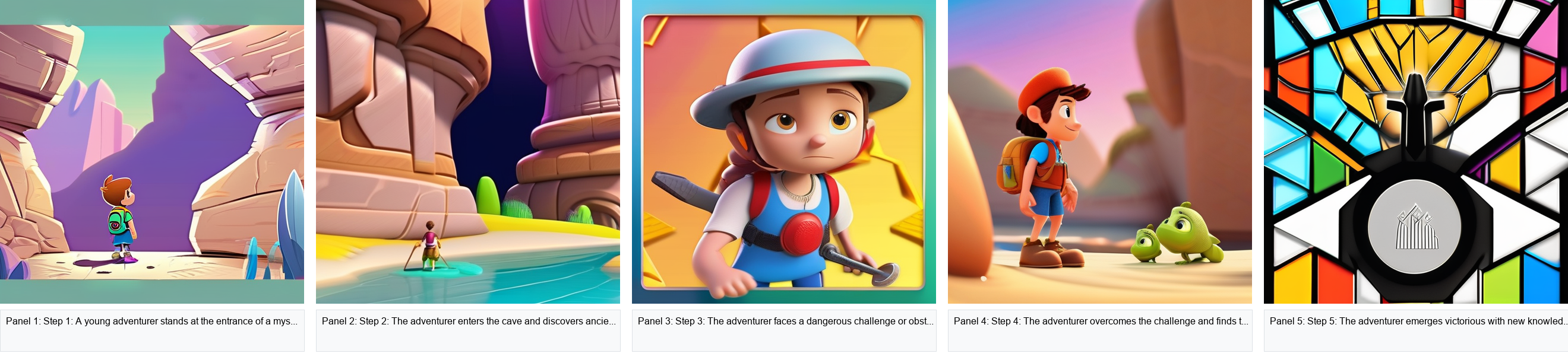

Character Journey: "A young adventurer's quest from village to mountain peak" (Pixar style, 5-step evolution)

Template Steps:

- Character introduction and setting

- Character faces a challenge or conflict

- Character overcomes the challenge

- Character learns and grows

- Character reaches their goal or destination
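One way to picture how a template like this turns into a chain of prompts is to combine the base prompt, each template step, and the chosen style. How the app actually composes its prompts is not documented here, so the prompt format and the `build_chain` helper below are assumptions for illustration only.

```python
from typing import List

# Template steps copied from the "Character Journey" list above.
CHARACTER_JOURNEY = [
    "Character introduction and setting",
    "Character faces a challenge or conflict",
    "Character overcomes the challenge",
    "Character learns and grows",
    "Character reaches their goal or destination",
]


def build_chain(base_prompt: str, steps: List[str], style: str) -> List[str]:
    """Return one prompt per template step, in order."""
    total = len(steps)
    return [
        f"{base_prompt}. Step {i} of {total}: {step}. {style} style"
        for i, step in enumerate(steps, start=1)
    ]


chain = build_chain(
    "A young adventurer's quest from village to mountain peak",
    CHARACTER_JOURNEY,
    "Pixar",
)
for prompt in chain:
    print(prompt)
```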

"A majestic dragon in a fantasy landscape" (Cinematic style, Canny edge control, strength: 1.0)

For detailed usage instructions, see the Usage Guide.

- Open http://localhost:5001 in your browser

- Select your mode: Storyboard, Single-Image Art, Batch Generation, Image-to-Image, Inpainting, Prompt Chaining, or ControlNet

- Toggle between Demo (fast) and AI (full quality) modes

- Enter your prompt and choose a style

- For Batch Generation: Configure variation count, layout, and strength

- For Image-to-Image: Upload an image and adjust transformation strength

- For Inpainting: Upload an image, draw masks, and describe what should fill them

- For Prompt Chaining: Add multiple prompts for story evolution

- For ControlNet: Upload a reference image, select control type, and adjust strength/guidance

- Click "Generate" and download your results

Storyboard:

- Generate 5-panel storyboards with AI images and captions

- Choose from multiple visual styles

- Perfect for storytelling and concept development

Single-Image Art:

- Create high-quality AI images from text prompts

- Multiple style presets available

- Ideal for concept art and illustrations

Batch Generation:

- Generate multiple variations of the same prompt

- Configure variation count (2-8) and strength

- Choose from grid, horizontal, or vertical layouts
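For Batch Generation, the variation count (2-8) and the layout choice together determine how the results are arranged. The sketch below shows one plausible way to compute rows and columns for each layout; the near-square rule used for `grid` and the `layout_shape` name are assumptions, not the app's documented behavior.

```python
import math
from typing import Tuple


def layout_shape(count: int, layout: str) -> Tuple[int, int]:
    """Return (rows, cols) for arranging `count` images in `layout`."""
    if not 2 <= count <= 8:
        raise ValueError("variation count must be between 2 and 8")
    if layout == "horizontal":
        return 1, count
    if layout == "vertical":
        return count, 1
    if layout == "grid":
        # Assumed rule: use the smallest near-square grid that fits.
        cols = math.ceil(math.sqrt(count))
        rows = math.ceil(count / cols)
        return rows, cols
    raise ValueError(f"unknown layout: {layout!r}")


for n in (2, 4, 5, 8):
    print(n, layout_shape(n, "grid"))
```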

Image-to-Image:

- Upload sketches, photos, or concepts

- Adjust transformation strength (0.1 = subtle, 1.0 = complete change)

- Transform into any style or concept
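For Image-to-Image, the strength setting controls how much of the denoising schedule is applied to the uploaded image. In Hugging Face diffusers image-to-image pipelines, roughly `int(num_inference_steps * strength)` steps are run, which is why low values stay close to the input. The sketch below reproduces that arithmetic; it assumes the app relies on a diffusers-style pipeline, which this README does not confirm, and `effective_steps` is a hypothetical helper.

```python
def effective_steps(num_inference_steps: int, strength: float) -> int:
    """Approximate number of denoising steps run for a given strength."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    return min(int(num_inference_steps * strength), num_inference_steps)


for s in (0.0, 0.5, 1.0):
    print(f"strength={s}: {effective_steps(50, s)} of 50 steps")
```

At strength 1.0 the full schedule runs and the input image has little influence on the result, which matches the "complete change" description above.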

Inpainting:

- Draw masks on areas to change

- Describe what should fill masked areas

- Remove objects or add new content seamlessly
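For Inpainting, a mask is a single-channel image the same size as the input. In diffusers inpainting pipelines, white pixels are regenerated and black pixels are preserved. The sketch below builds a rectangular mask as nested lists to illustrate the convention; whether the web app stores masks this way is an assumption, and `rectangle_mask` is a hypothetical helper.

```python
from typing import List, Tuple


def rectangle_mask(
    width: int, height: int, box: Tuple[int, int, int, int]
) -> List[List[int]]:
    """Return a height x width mask: 255 inside `box`, 0 elsewhere.

    `box` is (left, top, right, bottom) with right and bottom exclusive.
    """
    left, top, right, bottom = box
    return [
        [255 if left <= x < right and top <= y < bottom else 0
         for x in range(width)]
        for y in range(height)
    ]


mask = rectangle_mask(4, 3, (1, 1, 3, 2))
for row in mask:
    print(row)
```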

Prompt Chaining:

- Create story sequences with multiple prompts

- Use templates like "Character Journey" or "Environmental Progression"

- Generate evolving narratives

ControlNet:

- Upload reference images for precise control over composition

- Choose from Canny edge detection, depth maps, pose estimation, or segmentation

- Adjust control strength and guidance timing for fine-tuned results
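For ControlNet, control strength and guidance timing correspond naturally to the `controlnet_conditioning_scale`, `control_guidance_start`, and `control_guidance_end` arguments that diffusers ControlNet pipelines accept. The helper below validates the values and builds those keyword arguments; the mapping from the UI sliders to these arguments is an assumption, and `controlnet_kwargs` is a hypothetical name.

```python
from typing import Dict


def controlnet_kwargs(
    strength: float,
    guidance_start: float = 0.0,
    guidance_end: float = 1.0,
) -> Dict[str, float]:
    """Validate ControlNet settings and return pipeline keyword arguments."""
    if strength < 0.0:
        raise ValueError("strength must be non-negative")
    if not 0.0 <= guidance_start < guidance_end <= 1.0:
        raise ValueError("need 0.0 <= guidance_start < guidance_end <= 1.0")
    return {
        "controlnet_conditioning_scale": strength,
        "control_guidance_start": guidance_start,
        "control_guidance_end": guidance_end,
    }


# Apply the control image at full strength for the first 80% of steps.
print(controlnet_kwargs(strength=1.0, guidance_end=0.8))
```

Ending guidance before the final steps lets the model refine details freely once the overall composition has been fixed by the reference image.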

- Python 3.8+

- CUDA-compatible GPU (recommended) or Apple Silicon (M1/M2/M3)

- 8GB+ RAM (16GB+ recommended for AI mode)

Tested System Specifications:

- Hardware: Apple M1 Max

- OS: macOS Sequoia 15.5

- Memory: 64 GB RAM

- Storage: SSD with sufficient space for AI models (~10GB)

# Clone repository

git clone https://github.com/arun-gupta/diffusion-lab.git

cd diffusion-lab

# Use automated setup scripts

./run_webapp.sh # One-command setup and run

# OR

./cleanup_and_reinstall.sh # Fresh install (removes old environment)

# Clone repository

git clone https://github.com/arun-gupta/diffusion-lab.git

cd diffusion-lab

# Create virtual environment

python -m venv venv

source venv/bin/activate # On Windows: venv\Scripts\activate

# Install dependencies

pip install -r requirements.txt

# Run web application

python3 -m diffusionlab.api.webapp

- run_webapp.sh: One-command setup and web app launch

- cleanup_and_reinstall.sh: Complete fresh installation

- setup.py: Cross-platform Python setup script

- run.sh: Quick launcher with automatic setup detection

# Gradio Interface

python3 -m diffusionlab.tasks.storyboard

# Demo Version (no AI models)

python3 -m diffusionlab.tasks.demo

diffusion-lab/

├── diffusionlab/

│ ├── api/webapp.py # Flask web application

│ ├── tasks/ # Generation tasks

│ ├── static/ # CSS, JS, generated images

│ ├── templates/ # HTML templates

│ └── config.py # Configuration settings

├── docs/ # Example images and documentation

├── tests/ # Test scripts

└── requirements.txt # Python dependencies

For detailed troubleshooting information, see the Troubleshooting Guide.

Static files not loading (404 errors)

# Run from project root

python3 -m diffusionlab.api.webapp

AI mode not available

- Check all dependencies are installed

- Ensure running from project root

- Verify the `diffusionlab/tasks/` directory exists
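Both the static-file 404s and the missing AI mode come down to where the app is launched from. A quick check along these lines can confirm that the current directory looks like the project root before starting the server. It is a sketch only: `looks_like_project_root` is a hypothetical helper, and it relies on the `diffusionlab/tasks/` directory and `requirements.txt` shown in the project layout.

```python
from pathlib import Path


def looks_like_project_root(path: str = ".") -> bool:
    """True if `path` contains diffusionlab/tasks/ and requirements.txt."""
    root = Path(path)
    has_tasks = (root / "diffusionlab" / "tasks").is_dir()
    has_requirements = (root / "requirements.txt").is_file()
    return has_tasks and has_requirements


if not looks_like_project_root():
    print("Not in the project root: cd into diffusion-lab/ first.")
```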

Generate button not working

- Check browser console for JavaScript errors

- Ensure `app.js` loads without 404 errors

- Check browser console (F12) for JavaScript errors

- Watch Flask logs in terminal for server errors

- Visit Issues for known problems

- See Troubleshooting Guide for comprehensive solutions

- ✅ Storyboard Generation: Create 5-panel storyboards with AI images and captions (Implemented)

- ✅ Single-Image Art: Generate high-quality AI images from text prompts (Implemented)

- ✅ Batch Generation: Create multiple variations of the same prompt for creative exploration (Implemented)

- ✅ Image-to-Image: Transform sketches/photos into polished artwork (Implemented)

- ✅ Inpainting: Remove objects or fill areas with AI-generated content (Implemented)

- ✅ Prompt Chaining: Create evolving story sequences with multiple prompts (Implemented)

- ✅ ControlNet: Precise control over composition, pose, and structure using reference images (Implemented)

- 🔄 Outpainting: Extend image borders

- 🔄 Style Transfer: Apply artistic styles

- 🔄 Animated Diffusion: Frame interpolation

- 🔄 Custom Training: DreamBooth integration

This project is licensed under the Apache License 2.0 - see the LICENSE file for details.

💡 Tip: Start with Demo mode to test features quickly, then switch to AI mode for full-quality generation!