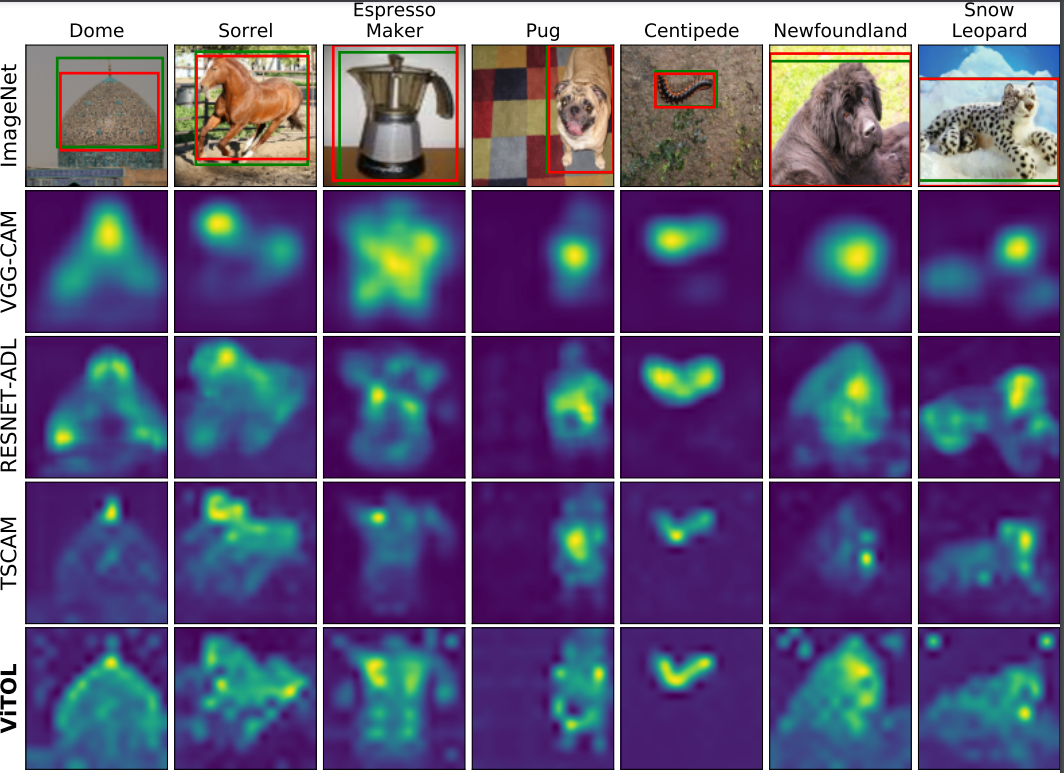

Official implementation of the paper ViTOL: Vision Transformer for Weakly Supervised Object Localization, accepted at CVPRW-2022 (L3DIVU-2022).

This repository contains inference code and pre-trained model weights for our model in the PyTorch framework. Models were trained and tested with Python 3.6.9 and PyTorch 1.7.1+cu101.
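Before running the evaluation, it can help to confirm that the local environment is close to the tested one. The following is a minimal sketch, not part of the repository: the helper names `version_mismatches` and `installed_torch_version` are hypothetical, and the only facts taken from this README are the two version numbers.

```python
import sys
from importlib import import_module, util

# Versions stated in this README.
TESTED_PYTHON = (3, 6)   # Python 3.6.9
TESTED_TORCH = "1.7.1"   # PyTorch 1.7.1+cu101


def version_mismatches(python_version, torch_version):
    """Return notes describing how the given versions differ from the tested ones.

    python_version: tuple such as sys.version_info or (3, 6, 9).
    torch_version: string such as "1.7.1+cu101", or None if PyTorch is missing.
    """
    notes = []
    if tuple(python_version[:2]) != TESTED_PYTHON:
        notes.append(
            "Python %d.%d differs from the tested 3.6" % tuple(python_version[:2])
        )
    if torch_version is None:
        notes.append("PyTorch is not installed")
    elif torch_version.split("+")[0] != TESTED_TORCH:
        # Compare only the release part, ignoring the local build tag (e.g. +cu101).
        notes.append("PyTorch %s differs from the tested 1.7.1" % torch_version)
    return notes


def installed_torch_version():
    """Return the installed PyTorch version string, or None if it is not installed."""
    if util.find_spec("torch") is None:
        return None
    return import_module("torch").__version__


if __name__ == "__main__":
    for note in version_mismatches(sys.version_info, installed_torch_version()):
        print("warning:", note)
```

A mismatch does not necessarily mean the code will fail; it only flags that the setup differs from the one the authors report.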

We provide pre-trained weights for ViTOL with DeiT-S and DeiT-B backbones on the ImageNet-1k and CUB datasets below.

ImageNet: ViTOL-base, ViTOL-small

| Method | MaxBoxAccV2 | Top1Acc | IOU50 | Top1Cls |
|---|---|---|---|---|
| ViTOL-GAR Small | 69.61 | 54.74 | 71.86 | 71.84 |
| ViTOL-LRP Small | 68.23 | 53.62 | 70.48 | 71.84 |
| ViTOL-GAR Base | 69.17 | 57.62 | 71.32 | 77.08 |
| ViTOL-LRP Base | 70.47 | 58.64 | 72.51 | 77.08 |
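To make the localization columns concrete, here is a simplified sketch of how box-based metrics of this kind are commonly computed. It is not the repository's evaluation code. It assumes that IOU50 means box accuracy at an IoU threshold of 0.5 and that MaxBoxAccV2 follows the definition from "Evaluating Weakly Supervised Object Localization Methods Right": box accuracy is maximized over score-map thresholds for each IoU threshold in {0.3, 0.5, 0.7}, then averaged. It also assumes one ground-truth box per image and continuous coordinates; all function names are hypothetical.

```python
def box_iou(box_a, box_b):
    """Intersection over union of two boxes given as (x0, y0, x1, y1)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def box_accuracy(pred_boxes, gt_boxes, iou_threshold):
    """Percentage of images whose predicted box reaches the IoU threshold."""
    hits = sum(
        box_iou(pred, gt) >= iou_threshold
        for pred, gt in zip(pred_boxes, gt_boxes)
    )
    return 100.0 * hits / len(gt_boxes)


def max_box_acc_v2(preds_per_map_threshold, gt_boxes, iou_thresholds=(0.3, 0.5, 0.7)):
    """Average over IoU thresholds of the best box accuracy across map thresholds.

    preds_per_map_threshold maps each score-map threshold to the list of
    predicted boxes (one per image) obtained at that threshold.
    """
    best_per_iou = [
        max(
            box_accuracy(preds, gt_boxes, iou_threshold)
            for preds in preds_per_map_threshold.values()
        )
        for iou_threshold in iou_thresholds
    ]
    return sum(best_per_iou) / len(best_per_iou)
```

Under these assumptions, `box_accuracy(preds, gts, 0.5)` would correspond to an IOU50-style number for a single score-map threshold, while `max_box_acc_v2` aggregates over both kinds of threshold.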

Clone the repository

git clone https://github.com/Saurav-31/ViTOL.git

Setup conda environment

conda env create -f environment.yml

conda activate vitol

Please refer here for dataset preparation.

--data_root=\PATH\TO\DATASET

--metadata_root=\PATH\TO\GROUND_TRUTH --CHECKPOINT_NAME=$VITOL_WEIGHTS_TAR_FILENAME

bash evaluate.sh configs/ilsvrc/ViTOL_GAR_base.yml

bash evaluate.sh configs/ilsvrc/ViTOL_GAR_small.yml

- Set up training code for the model

- Train the model with stronger backbones

- Jupyter notebook for visualization

- Evaluating Weakly Supervised Object Localization Methods Right (CVPR 2020)

- Transformer Interpretability Beyond Attention Visualization (CVPR 2021)

If you have any questions about our work or this repository, please don't hesitate to contact us by email.

- saurav.gupta@mercedes-benz.com

- sourav.lakhotia@mercedes-benz.com

- abhay.rawat@mercedes-benz.com

- rahul.tallamraju@mercedes-benz.com

If you find this work useful, please cite as follows:

@inproceedings{gupta2022vitol,
  title={ViTOL: Vision Transformer for Weakly Supervised Object Localization},
  author={Gupta, Saurav and Lakhotia, Sourav and Rawat, Abhay and Tallamraju, Rahul},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={4101--4110},
  year={2022}
}