The application consumes data from Kafka and builds models from it.

An API is used to make predictions.

Make sure Kafka and ZooKeeper are running before you start the application.

Open the Kafka directory and run the following to start ZooKeeper:

bin/zookeeper-server-start.sh config/zookeeper.properties

Open another terminal and, inside the Kafka directory, start the Kafka broker:

bin/kafka-server-start.sh config/server.properties
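
Before moving on, it can help to confirm that both services are actually listening. The following is a minimal sketch, not part of the repository: `port_open` is a hypothetical helper, and the ports are assumptions based on the stock configuration files (2181 is the default `clientPort` in `config/zookeeper.properties`, 9092 the default broker listener). Adjust them if your configuration differs.

```python
import socket


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timeout, or unresolved host: treat as not reachable.
        return False


if __name__ == "__main__":
    # Assumed default ports; change these if your properties files differ.
    for name, port in (("ZooKeeper", 2181), ("Kafka broker", 9092)):
        state = "reachable" if port_open("localhost", port) else "not reachable"
        print(f"{name} on localhost:{port}: {state}")
```

A successful TCP connection only shows that something is listening on the port; it does not prove that the broker is healthy.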

Next, create the topic with its partition and replication settings:

bin/kafka-topics.sh --create --topic web-logs --bootstrap-server localhost:9092 --partitions 1 --replication-factor 1

Clone the repository.

git clone https://github.com/asifrahaman13/online-learning.git

Create a virtual environment.

virtualenv .venv

Next, activate the virtual environment.

source .venv/bin/activate

Install the dependencies.

pip install -r requirements.txt

Run the script.

uvicorn main:app --reload

Make the start script executable, then run it.

chmod +x start.sh

bash start.sh

Now start the server that will consume data from Kafka.

uvicorn src.kafka_main:app --reload

Run the script to start the Kafka server, which will consume all the data.

python3 kafka_sdk.py

In another terminal, run the following command:

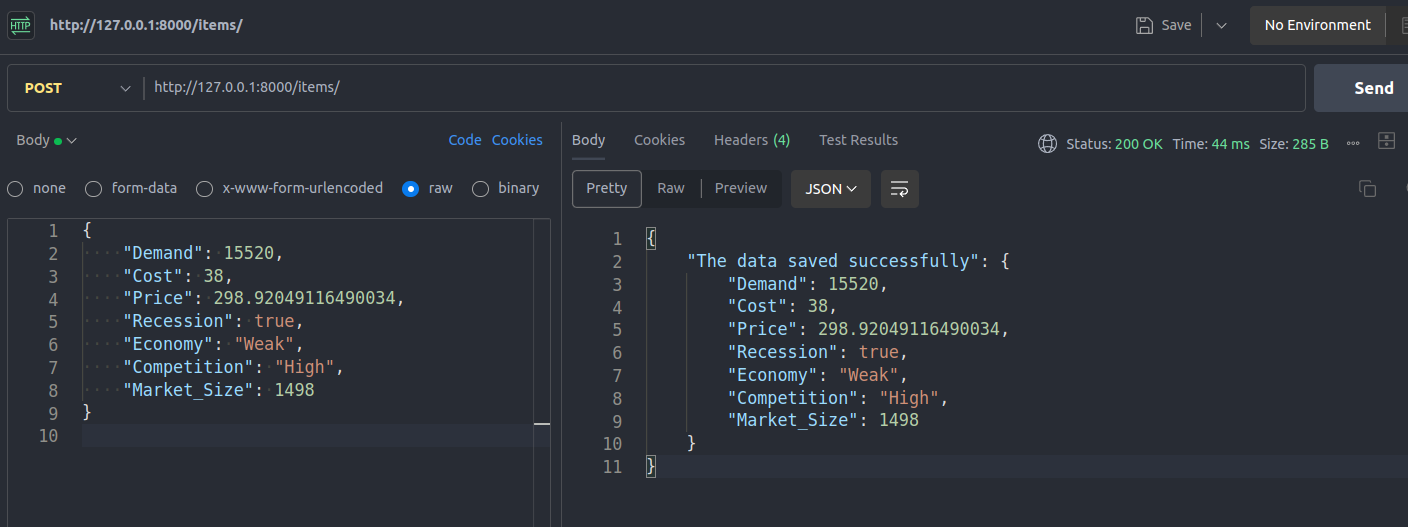

uvicorn src.main:app --reload --port=5000

URL:

http://127.0.0.1:8000/items/

Body:

{
  "Demand": 15520,
  "Cost": 38,
  "Price": 298.92049116490034,
  "Recession": true,
  "Economy": "Weak",
  "Competition": "High",
  "Market_Size": 1498
}

URL:

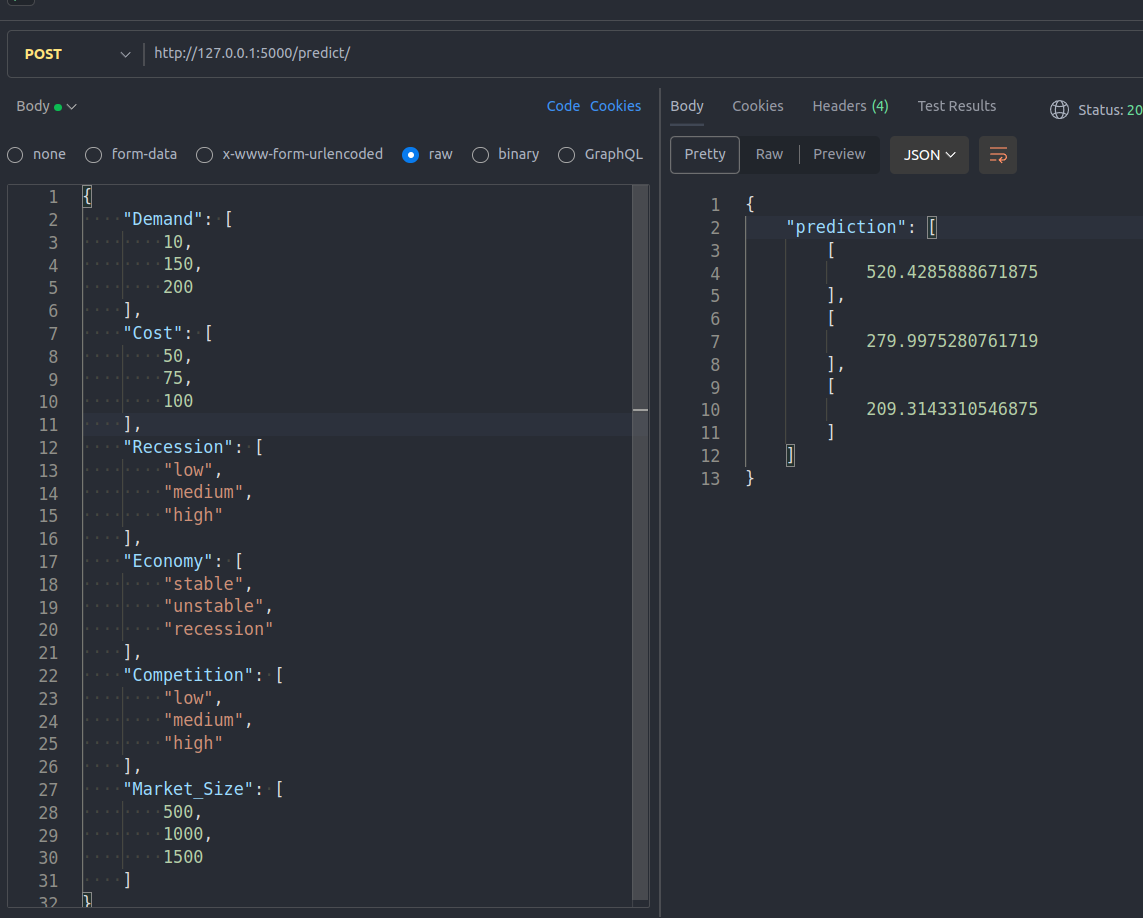

http://127.0.0.1:5000/predict/

Body:

{
  "Demand": [10, 150, 200],
  "Cost": [50, 75, 100],
  "Recession": ["low", "medium", "high"],
  "Economy": ["stable", "unstable", "recession"],
  "Competition": ["low", "medium", "high"],
  "Market_Size": [500, 1000, 1500]
}
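
The two requests above can be sent from a short script. This is a minimal sketch using only the standard library. It assumes both endpoints accept a JSON body via `POST`, which the README does not state explicitly. The helpers `build_request` and `post_json` are hypothetical names, not part of the repository.

```python
import json
import urllib.request

ITEMS_URL = "http://127.0.0.1:8000/items/"
PREDICT_URL = "http://127.0.0.1:5000/predict/"

# Payloads copied from the request bodies shown in this README.
ITEM = {
    "Demand": 15520,
    "Cost": 38,
    "Price": 298.92049116490034,
    "Recession": True,
    "Economy": "Weak",
    "Competition": "High",
    "Market_Size": 1498,
}
PREDICT_BATCH = {
    "Demand": [10, 150, 200],
    "Cost": [50, 75, 100],
    "Recession": ["low", "medium", "high"],
    "Economy": ["stable", "unstable", "recession"],
    "Competition": ["low", "medium", "high"],
    "Market_Size": [500, 1000, 1500],
}


def build_request(url: str, payload: dict) -> urllib.request.Request:
    """Build a JSON POST request (POST is an assumption, see the lead-in)."""
    data = json.dumps(payload).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    return urllib.request.Request(url, data=data, headers=headers, method="POST")


def post_json(url: str, payload: dict, timeout: float = 5.0) -> str:
    """Send the payload and return the raw response body as text."""
    with urllib.request.urlopen(build_request(url, payload), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    for url, payload in ((ITEMS_URL, ITEM), (PREDICT_URL, PREDICT_BATCH)):
        try:
            print(url, "->", post_json(url, payload))
        except OSError as exc:
            # URLError, HTTPError and timeouts are all OSError subclasses.
            print(f"{url} failed: {exc}")
```

Both uvicorn servers must be running for the requests to succeed. If one of them is down, the script prints the error instead of raising.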