MAP-Neo is a fully open-source large language model whose release includes the pretraining data, a data processing pipeline (Matrix), pretraining scripts, and alignment code. It is trained from scratch on 4.5T English and Chinese tokens and performs comparably to LLaMA2 7B. On challenging tasks such as reasoning, mathematics, and coding, MAP-Neo approaches the performance of proprietary models and outperforms peers of similar size. For research purposes, we aim for full transparency in the LLM training process. To this end, we have made a comprehensive release of MAP-Neo, including the final and intermediate checkpoints, a self-trained tokenizer, the pre-training corpus, and an efficient, stable pre-training codebase.

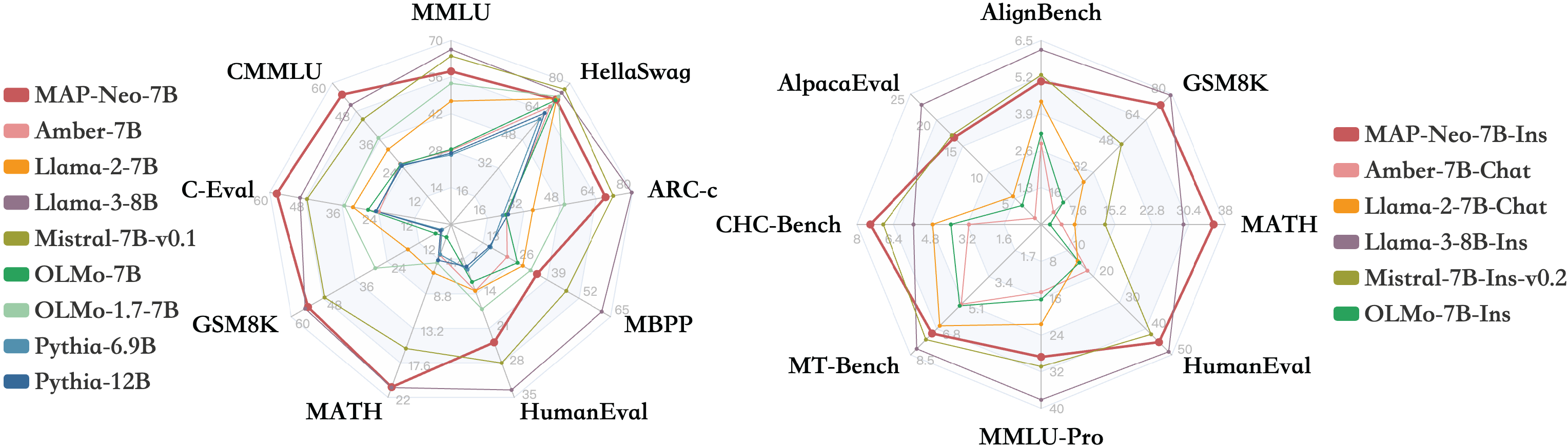

We evaluated the MAP-Neo 7B series and other similarly sized models on a range of benchmarks.

For more details on the performance across different benchmarks, please refer to https://map-neo.github.io/.

We are pleased to announce the public release of MAP-Neo 7B, including the base models and a series of intermediate checkpoints. This release aims to support a broader and more diverse range of research in both academic and commercial communities. Use of this model is subject to the terms outlined in the License section. Commercial usage is permitted under these terms.

| Model & Dataset | Download |
|---|---|
| MAP-NEO 7B Base | 🤗 HuggingFace |
| MAP-NEO 7B Instruct | 🤗 HuggingFace |
| MAP-NEO 7B SFT | 🤗 HuggingFace |
| MAP-NEO 7B intermediate | 🤗 HuggingFace |
| MAP-NEO 7B decay | 🤗 HuggingFace |
| MAP-NEO 2B Base | 🤗 HuggingFace |
| MAP-NEO scalinglaw 980M | 🤗 HuggingFace |
| MAP-NEO scalinglaw 460M | 🤗 HuggingFace |
| MAP-NEO scalinglaw 250M | 🤗 HuggingFace |
| MAP-NEO DATA Matrix | 🤗 HuggingFace |
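As a rough illustration of how one of the released checkpoints might be loaded, here is a minimal sketch using the Hugging Face `transformers` library. The function name `generate_text` is hypothetical, and `repo_id` is deliberately left as a parameter: substitute the repository identifier that the corresponding HuggingFace link in the table points to. Some checkpoints may need extra loading flags (for example `trust_remote_code=True`), so treat the model card as the authority.

```python
def generate_text(
    repo_id: str,
    prompt: str,
    max_new_tokens: int = 64,
    trust_remote_code: bool = False,
) -> str:
    """Load a causal LM checkpoint from the Hugging Face Hub and complete `prompt`.

    `repo_id` is an assumption supplied by the caller; it is not defined in this README.
    """
    if not repo_id:
        raise ValueError("repo_id must be a non-empty Hub repository identifier")

    # Imported lazily so that argument validation works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(
        repo_id, trust_remote_code=trust_remote_code
    )
    model = AutoModelForCausalLM.from_pretrained(
        repo_id, torch_dtype="auto", trust_remote_code=trust_remote_code
    )

    # Tokenize the prompt into PyTorch tensors and generate greedily.
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(
        **inputs, max_new_tokens=max_new_tokens, do_sample=False
    )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate_text(repo_id, "A poem about the sea:")` with a valid repository identifier would download the weights on first use, so a 7B checkpoint needs correspondingly large disk space and memory.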

This code repository is licensed under the MIT License.

@article{zhang2024mapneo,
  title = {MAP-Neo: Highly Capable and Transparent Bilingual Large Language Model Series},
  author = {Ge Zhang and Scott Qu and Jiaheng Liu and Chenchen Zhang and Chenghua Lin and Chou Leuang Yu and Danny Pan and Esther Cheng and Jie Liu and Qunshu Lin and Raven Yuan and Tuney Zheng and Wei Pang and Xinrun Du and Yiming Liang and Yinghao Ma and Yizhi Li and Ziyang Ma and Bill Lin and Emmanouil Benetos and Huan Yang and Junting Zhou and Kaijing Ma and Minghao Liu and Morry Niu and Noah Wang and Quehry Que and Ruibo Liu and Sine Liu and Shawn Guo and Soren Gao and Wangchunshu Zhou and Xinyue Zhang and Yizhi Zhou and Yubo Wang and Yuelin Bai and Yuhan Zhang and Yuxiang Zhang and Zenith Wang and Zhenzhu Yang and Zijian Zhao and Jiajun Zhang and Wanli Ouyang and Wenhao Huang and Wenhu Chen},
  year = {2024},
  journal = {arXiv preprint arXiv:2405.19327}
}

For further communication, please scan the following WeChat and Discord QR codes:

(Discord and WeChat QR code images)