Machine learning organizations automate tasks to reduce costs or scale products. Their output is the automation itself, achieved by collecting data, training models, and deploying them.

This project and its notebooks are currently a work in progress.

It is inspired by ML-From-Scratch, Peter Norvig's pytudes project, and projects by folks such as Made with ML, ML for Software Engineers, Chris Albon, and many others.

Roadmap: The project gives me an opportunity to document some of my own learning and acts as a roadmap for self-taught learners who want to learn data science for free.

Computational notebooks: Computational notebooks are essentially laboratory notebooks for scientific computing. We use notebooks for practice because they are well suited to scientific computing. This approach is closely related to the literate programming paradigm conceived by Donald Knuth.

This is a long list. See this great article on how to approach it depending on the career path you decide to take.

- Introduction

- ML Basic Theory

- Data Acquisition

- Data Wrangling Tools and Libraries

- Exploratory Data Analysis

- Data Cleaning

- Modeling

- MLOps

- Cool Applications

- Machine Learning is the modern probabilistic approach to artificial intelligence. It studies algorithms that learn to predict from (usually independent and identically distributed) data.

- It uses past observations to predict future ones, e.g. can we predict which products certain customer groups are more likely to purchase?

- It also enables new features such as Smart Reply in Gmail.

- In terms of impact, most AI technologies currently being deployed fall under the category of machine learning.

- Machine Learning Engineer: A machine learning engineer sits at the crossroads of data science and data engineering and is proficient in both.

- Artificial Intelligence and Machine Learning

- AI, Deep Learning, and Machine Learning: A Primer

- For a programmer's introduction, Welch Labs also has a great series on machine learning.

- Pedro Domingos TED talk
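To make "using past observations to predict future ones" concrete, here is a deliberately simple Python sketch for the customer-group question above: it counts which products each group bought in the past and predicts the most frequent ones. The data and the names `fit_purchase_model` and `predict_top_products` are hypothetical, and a frequency count is only a baseline, not a trained ML model.

```python
from collections import Counter, defaultdict


def fit_purchase_model(history):
    """'Train' by counting past purchases per customer group.

    `history` is a list of (customer_group, product) pairs (hypothetical data).
    """
    counts = defaultdict(Counter)
    for group, product in history:
        counts[group][product] += 1
    return counts


def predict_top_products(model, group, k=1):
    """Predict the k products this group bought most often in the past."""
    # .get avoids inserting unseen groups into the model; they get no prediction.
    return [product for product, _ in model.get(group, Counter()).most_common(k)]


# Hypothetical past observations: (customer group, product purchased)
history = [
    ("students", "laptop"), ("students", "headphones"), ("students", "laptop"),
    ("parents", "stroller"), ("parents", "car seat"), ("parents", "stroller"),
]
model = fit_purchase_model(history)
print(predict_top_products(model, "students"))  # ['laptop']
print(predict_top_products(model, "parents"))   # ['stroller']
```

A real model would generalize to unseen combinations using features of customers and products; this baseline only replays what it has already seen.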

People are not very good at looking at a column of numbers or a whole spreadsheet and then determining important characteristics of the data. They find looking at numbers to be tedious, boring, and/or overwhelming. Exploratory data analysis techniques have been devised as an aid in this situation. Most of these techniques work in part by hiding certain aspects of the data while making other aspects more clear.

Overall, the goal of exploratory analysis is to examine or explore the data and find relationships that weren’t previously known. Exploratory analyses explore how different measures might be related to each other but do not confirm that those relationships are causal.

EDA always precedes formal (confirmatory) data analysis.

EDA is useful for:

- Detection of mistakes

- Checking of assumptions

- Determining relationships among the explanatory variables

- Assessing the direction and rough size of relationships between explanatory and outcome variables

- Preliminary selection of appropriate models of the relationship between an outcome variable and one or more explanatory variables.

- Each EDA method is either non-graphical or graphical.

- Each method is either univariate or multivariate (usually just bivariate).

- Overall, the four types of EDA are univariate non-graphical, multivariate non-graphical, univariate graphical, and multivariate graphical.

- Non-graphical methods generally involve calculation of summary statistics, while graphical methods obviously summarize the data in a diagrammatic or pictorial way.

- Univariate methods look at one variable (data column) at a time, while multivariate methods look at two or more variables at a time to explore relationships. Usually our multivariate EDA will be bivariate (looking at exactly two variables), but occasionally it will involve three or more variables. It is almost always a good idea to perform univariate EDA on each of the components of a multivariate EDA before performing the multivariate EDA.
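As a small illustration of univariate graphical EDA, the sketch below bins one column into equal-width intervals and prints a text histogram using only the Python standard library. The temperature values and the helper names are hypothetical; in practice a plotting library would draw the same picture.

```python
def histogram_counts(values, bins=5):
    """Count how many values fall into each of `bins` equal-width intervals."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0  # fall back to width 1 if all values are equal
    counts = [0] * bins
    for v in values:
        # Clamp so the maximum value lands in the last bin instead of overflowing.
        idx = min(int((v - lo) / width), bins - 1)
        counts[idx] += 1
    return lo, width, counts


def text_histogram(values, bins=5):
    """Render the counts as one row of '#' characters per bin."""
    lo, width, counts = histogram_counts(values, bins)
    rows = []
    for i, count in enumerate(counts):
        left = lo + i * width
        rows.append(f"{left:6.1f} to {left + width:6.1f} | {'#' * count}")
    return "\n".join(rows)


# Hypothetical daily maximum temperatures for one column of a dataset
temps = [21.5, 23.0, 22.1, 25.4, 30.2, 28.7, 24.9, 22.8, 26.3, 19.8, 23.5, 27.1]
print(text_histogram(temps, bins=4))
```

The number of bins is a judgment call: too few hides the shape of the distribution, too many makes it noisy.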

We should always perform appropriate EDA before further analysis of our data. Perform whatever steps are necessary to become more familiar with your data, check for obvious mistakes, learn about variable distributions, and learn about relationships between variables. EDA is not an exact science; it is a very important art!

Before EDA:

- Check the size and type of data

- Check whether the data is in an appropriate format

- Convert the data to a format you can easily manipulate (without changing the data itself)

- Sample a test set, set it aside and never look at it
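A minimal way to sample a test set and set it aside is to shuffle a copy of the records with a fixed seed, so the split is reproducible, and hold out a fixed fraction. The 20% ratio, the seed, and the name `split_train_test` are arbitrary assumptions. If the dataset is later refreshed, a plain shuffle produces a different split, which is why some projects instead assign rows to the test set by hashing a stable identifier.

```python
import random


def split_train_test(rows, test_ratio=0.2, seed=42):
    """Return (train, test) after shuffling a copy of `rows` with a fixed seed."""
    shuffled = list(rows)                  # copy: the original data is left untouched
    random.Random(seed).shuffle(shuffled)  # private RNG so global state is not affected
    n_test = int(len(shuffled) * test_ratio)
    return shuffled[n_test:], shuffled[:n_test]


records = list(range(100))  # stand-in for 100 data records
train, test = split_train_test(records)
print(len(train), len(test))  # 80 20
```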

EDA:

- Grab a copy of the data

- Document the EDA in a Jupyter notebook

- Study each attribute and its characteristics (name, type, % of missing values, noisiness, usefulness, type of distribution)

- For Supervised Machine Learning, identify the target attribute

- Visualize the data

- Study the correlations between attributes

- Study how you would solve the problem manually

- Identify the promising transformations you may want to apply

- Make plans to collect more or different data (if needed and if possible)
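Studying each attribute can start with a simple per-column profile. The sketch below assumes the data is a list of dicts (one per row) with `None` marking a missing value; it reports the observed Python types, the percentage of missing values, and the number of distinct values. The records and the name `profile_attributes` are made up for illustration.

```python
def profile_attributes(records):
    """Per-attribute summary: observed types, % missing, number of distinct values.

    `records` is a list of dicts; None (or an absent key) counts as missing.
    """
    names = sorted({key for rec in records for key in rec})
    profile = {}
    for name in names:
        values = [rec.get(name) for rec in records]
        present = [v for v in values if v is not None]
        profile[name] = {
            "types": sorted({type(v).__name__ for v in present}),
            "pct_missing": 100.0 * (len(values) - len(present)) / len(values),
            "n_distinct": len(set(present)),
        }
    return profile


# Hypothetical rows
records = [
    {"age": 34, "city": "Chicago", "income": 52000.0},
    {"age": None, "city": "Boston", "income": 61000.0},
    {"age": 29, "city": "Chicago"},  # income absent
    {"age": 41, "city": None, "income": 58000.0},
]
for name, stats in profile_attributes(records).items():
    print(name, stats)
```

Mixed entries in `types` are often the first hint of corrupted or inconsistently encoded data.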

Key statistics: expectation and mean, variance and standard deviation, covariance and correlation, median and quartiles, interquartile range, percentiles/quantiles, mode.
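Most of these statistics are available in Python's standard `statistics` module. The sketch below computes them for one hypothetical column and implements sample covariance and Pearson correlation by hand so the formulas stay visible; `describe`, `covariance`, and `correlation` are illustrative names. Quartiles come from `statistics.quantiles`, whose default "exclusive" method is only one of several conventions, so other tools may report slightly different quartiles.

```python
import statistics


def describe(xs):
    """Summary statistics for one numeric column."""
    q1, _, q3 = statistics.quantiles(xs, n=4)  # quartile cut points
    return {
        "mean": statistics.mean(xs),
        "variance": statistics.variance(xs),        # sample variance (n - 1)
        "stdev": statistics.stdev(xs),
        "median": statistics.median(xs),
        "q1": q1,
        "q3": q3,
        "iqr": q3 - q1,                             # interquartile range
        "p90": statistics.quantiles(xs, n=10)[-1],  # 90th percentile
        "mode": statistics.mode(xs),
    }


def covariance(xs, ys):
    """Sample covariance: average co-deviation from the means (n - 1 denominator)."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


def correlation(xs, ys):
    """Pearson correlation: covariance rescaled to lie in [-1, 1]."""
    return covariance(xs, ys) / (statistics.stdev(xs) * statistics.stdev(ys))


xs = [2, 4, 4, 4, 5, 5, 7, 9]
ys = [2 * x + 1 for x in xs]  # perfectly linear in xs
d = describe(xs)
print(d["mean"], d["median"], d["mode"])  # 5 4.5 4
print(round(correlation(xs, ys), 6))      # 1.0
```

Note that correlation only captures linear association: a strong non-linear relationship can still have a correlation near zero.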

Knowing which methods are suitable for which type of data

| Title | Description | Code |
|---|---|---|
| Univariate Non-Graphical Data Exploration | Learn exploratory data analysis using air pollution and temperature data for the city of Chicago | Notebook |
| Univariate Non-Graphical Data Exploration | Data exploration using an ozone levels dataset | Notebook |
| Graphical Data Exploration | Data exploration using visualization techniques | Notebook |

Useful Guides:

- Chapter 4, Experimental Design and Analysis by Howard J. Seltman

- Section 1, Hands-On Exploratory Data Analysis with Python

- Chapter 1, Practical Statistics for Data Scientists, 2nd Edition

Practice projects: https://github.com/ammarshaikh123/Projects-on-Data-Cleaning-and-Manipulation

Real-world data is rarely clean and homogeneous. It is often said that 80% of data analysis is spent on the process of cleaning and preparing the data (Dasu and Johnson 2003). Data preparation is not just a first step; it must be repeated many times over the course of analysis as new problems come to light or new data is collected.

Data formats should be easy for computers to parse and for people to read, and they should be widely used by systems in production. The computations we perform must be reproducible and tweakable.

Key Data Cleaning Steps:

- Fix or remove outliers

- Fill in missing values (e.g. with zero, the mean, or the median) or drop their rows

- Deal with corrupted/erroneous data

- (For ML) Feature selection: drop the attributes that provide no useful information for the task
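The cleaning steps above can be sketched on single columns with the Python standard library. This is an illustration under simple assumptions: missing values are `None`, outliers are defined by Tukey's 1.5 × IQR fences, and "no useful information" is narrowed to columns that hold a single constant value. The helper names are hypothetical. In a real table you would drop whole rows rather than individual values, and whether an outlier should be fixed, removed, or kept depends on the domain.

```python
import statistics


def fill_missing(values, strategy="median"):
    """Replace None with the median (default) or mean of the observed values."""
    observed = [v for v in values if v is not None]
    if strategy == "median":
        fill = statistics.median(observed)
    elif strategy == "mean":
        fill = statistics.mean(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]


def remove_outliers_iqr(values, k=1.5):
    """Keep only values inside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]


def drop_constant_columns(records):
    """Drop attributes whose value is identical in every record."""
    keys = {key for rec in records for key in rec}
    informative = {key for key in keys if len({rec.get(key) for rec in records}) > 1}
    return [{k: v for k, v in rec.items() if k in informative} for rec in records]


# Hypothetical incomes (in thousands) with two gaps and one suspicious entry
incomes = [52, 48, None, 50, 51, None, 49, 500]
filled = fill_missing(incomes)         # None -> median of observed values (50.5)
cleaned = remove_outliers_iqr(filled)  # 500 falls outside the fences
print(cleaned)
```

The median is used as the default fill value because, unlike the mean, it is barely affected by the extreme 500.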

| Notebook | Description | Code |
|---|---|---|
| Data Cleaning | Learn data cleaning with synthetic data | Notebook |

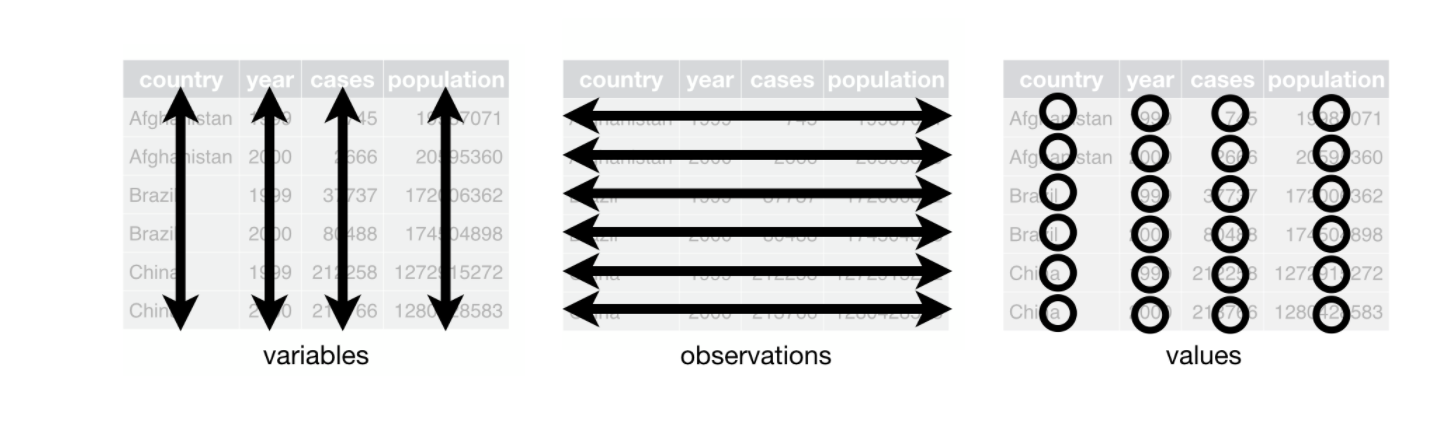

- Tidy data

- Reproducibility in Data Science

- Guide by Jeff Leek on how to share data with a statistician

- Best Practices in Data Cleaning: A Complete Guide to Everything You Need to Do Before and After Collecting Your Data

- Data Wrangling with Python by Jacqueline Kazil, Katharine Jarmul

- Clean Data by Megan Squire

- Python Data Cleaning Cookbook: Modern techniques and Python tools to detect and remove dirty data and extract key insights, by Michael Walker

Theory

| Topic | Description | Code |
|---|---|---|
| Introduction | | |
| Linear Regression | | |
| Techniques to avoid overfitting | | |
| Sampling techniques | | |
| Feature Engineering | | |
| Debugging ML Systems | | |
| Interpretability | | |
| Technical Debt in ML Systems | | |
| Process for Building an End-to-End System | | |
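For the linear regression and overfitting topics, a one-feature least-squares fit has a closed form: the slope is the co-deviation of x and y divided by the squared deviation of x. The Python sketch below adds an optional L2 (ridge) penalty on the slope, which is one common technique for reducing overfitting. The function names and the example data are illustrative assumptions, not the notebooks' code.

```python
def fit_line(xs, ys, l2=0.0):
    """Fit y ≈ a + b*x by least squares, optionally penalizing l2 * b**2 (ridge).

    The intercept a is not penalized, so the closed form is
    b = Sxy / (Sxx + l2) and a = mean(y) - b * mean(x).
    """
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sxy / (sxx + l2)  # larger l2 shrinks the slope toward zero
    a = my - b * mx
    return a, b


def mean_squared_error(xs, ys, a, b):
    """Average squared residual of the line a + b*x."""
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]  # exactly y = 1 + 2x
print(fit_line(xs, ys))         # (1.0, 2.0)
print(fit_line(xs, ys, l2=10))  # (3.0, 1.0)
```

On noise-free data the penalty only hurts the training fit; its benefit shows up on held-out data when the training sample is small or noisy, which is why `l2` is normally chosen on a validation set.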

https://github.com/asjad99/Machine-Learning-GYM/tree/main/ML_Notes

Read more: Brief History, Key Concepts, Generalization

Notebooks

| Notebook | Description | Code |
|---|---|---|
| Linear Regression | | |
| Logistic Regression | | |
| MNIST | | |
| SVM | | |
| Decision Trees | | |
| Gradient Boosting | Ensembles are often more powerful than single models | |
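Logistic regression, listed above, models the probability of the positive class as the sigmoid of a linear score and is usually trained by minimizing the log-loss. The Python sketch below does this with plain batch gradient descent on one feature; the learning rate, epoch count, toy data, and function names are arbitrary assumptions, and real work would use a tested library implementation.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # avoids overflow of exp(-z) for very negative z
    return e / (1.0 + e)


def fit_logistic(xs, ys, lr=0.1, epochs=2000):
    """Batch gradient descent on the mean log-loss of p(y=1|x) = sigmoid(w*x + b)."""
    w = b = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # derivative of the log-loss w.r.t. the score
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x, threshold=0.5):
    """Class label: 1 if the predicted probability reaches the threshold."""
    return int(sigmoid(w * x + b) >= threshold)


# Toy 1-D data: negative x -> class 0, positive x -> class 1
xs = [-3, -2, -1, 1, 2, 3]
ys = [0, 0, 0, 1, 1, 1]
w, b = fit_logistic(xs, ys)
print([predict(w, b, x) for x in xs])
```

Because this toy data is perfectly separable, the unregularized weight keeps growing slowly with more epochs; adding an L2 penalty is the usual fix.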

- GA_TSP: solving the traveling salesman problem (TSP) with genetic algorithms in C++

- Hybrid-CI: PCA dimensionality reduction using genetic algorithms

- MLP: a bare-bones implementation of an MLP and the backpropagation algorithm in C++

- [MIT's Introduction to Machine Learning](https://openlearninglibrary.mit.edu/courses/course-v1:MITx+6.036+1T2019/course/)

- Deep Learning book, Chapter 4

- Andrew Ng's Machine Learning course

- PAC by j2kun

- Understanding Generalizations in Machine Learning

- Why is ML hard

- Neural Networks

- Feature Engineering and dimensionality reduction

- Machine Learning Mind Map

- Debugging ML Systems by Andrew Ng

- Book: Building Machine Learning Powered Applications: Going from Idea to Product

- Machine Learning Design Patterns: Solutions to Common Challenges in Data Preparation, Model Building, and MLOps

- Challenges in Deploying Machine Learning: a Survey of Case Studies

- Challenges in Production
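The GA_TSP project listed above is written in C++. As an independent, simplified illustration (not a port of that code), the Python sketch below evolves TSP tours with a genetic algorithm: truncation selection keeps the shortest 20% of tours as parents and carries them over unchanged (elitism), a simplified order crossover combines two parents into a valid permutation, and a swap mutation adds variation. Population size, generation count, mutation rate, and the circle of cities are arbitrary assumptions.

```python
import math
import random


def tour_length(tour, cities):
    """Length of the closed tour that visits cities in `tour` order and returns home."""
    return sum(math.dist(cities[tour[i - 1]], cities[tour[i]]) for i in range(len(tour)))


def order_crossover(p1, p2, rng):
    """Copy a random slice of p1, then fill the gaps with p2's cities in p2's order."""
    n = len(p1)
    i, j = sorted(rng.sample(range(n), 2))
    child = [None] * n
    child[i:j + 1] = p1[i:j + 1]
    taken = set(child[i:j + 1])
    remaining = iter(c for c in p2 if c not in taken)
    return [c if c is not None else next(remaining) for c in child]


def genetic_tsp(cities, pop_size=50, generations=200, mutation_rate=0.2, seed=0):
    """Evolve tours with truncation selection, elitism, crossover and swap mutation."""
    rng = random.Random(seed)
    n = len(cities)
    population = [rng.sample(range(n), n) for _ in range(pop_size)]
    n_elite = max(2, pop_size // 5)  # the shortest 20% survive unchanged
    for _ in range(generations):
        population.sort(key=lambda tour: tour_length(tour, cities))
        elite = population[:n_elite]
        children = []
        while n_elite + len(children) < pop_size:
            p1, p2 = rng.sample(elite, 2)
            child = order_crossover(p1, p2, rng)
            if rng.random() < mutation_rate:
                a, b = rng.sample(range(n), 2)
                child[a], child[b] = child[b], child[a]  # swap mutation
            children.append(child)
        population = elite + children
    return min(population, key=lambda tour: tour_length(tour, cities))


# Hypothetical cities: 8 points on a unit circle, listed in shuffled order
angles = [0, 5, 2, 7, 1, 4, 6, 3]
cities = [(math.cos(a * math.pi / 4), math.sin(a * math.pi / 4)) for a in angles]
best = genetic_tsp(cities)
print(best, round(tour_length(best, cities), 3))
```

Elitism guarantees the best tour never gets worse from one generation to the next, but a GA offers no guarantee of finding the optimal tour.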