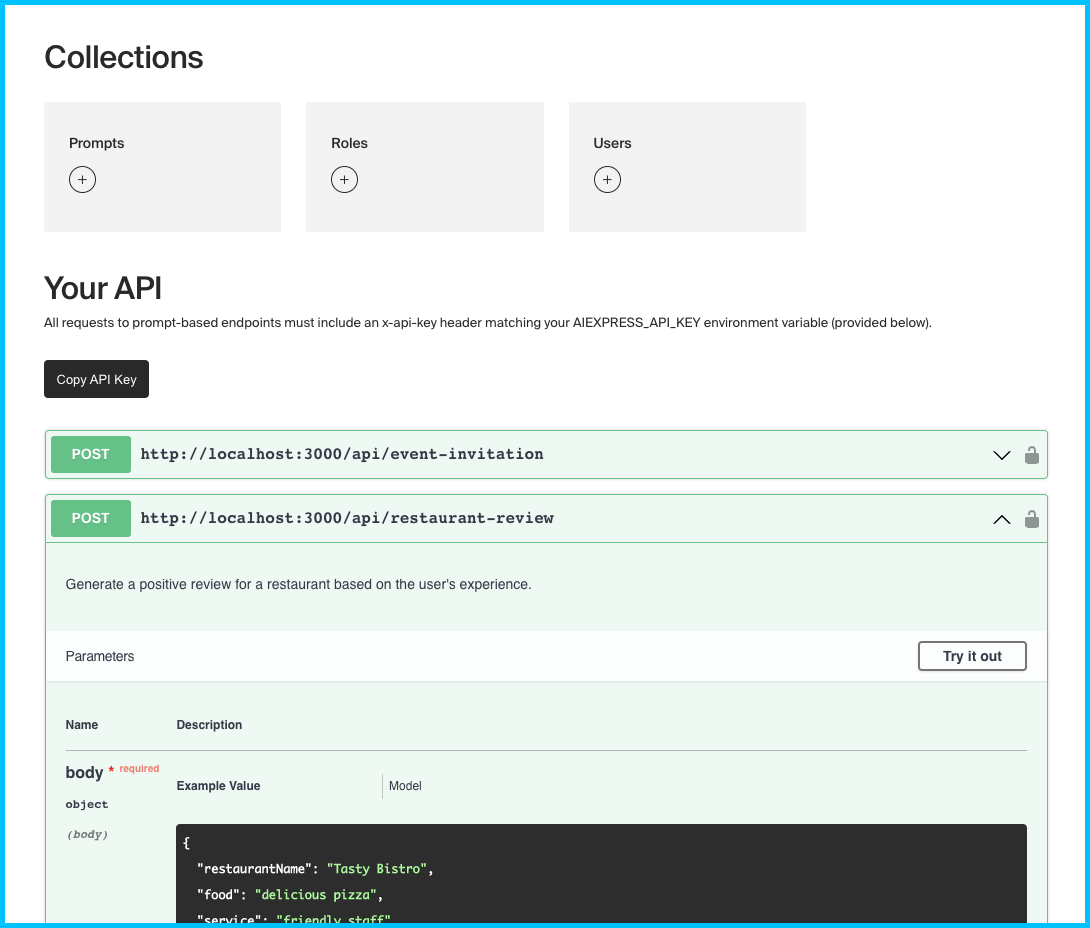

A fast way to turn AI prompts into ready-to-use API endpoints: deploy with a few clicks and manage prompts with automated variable inference and substitution in a neat visual interface. It comes out of the box with configurable input redaction, output validation, prompt retry, rate limiting, and output caching infrastructure, with no additional setup.

Run locally, or deploy in one click using the button below. If you are deploying AI Express for production use, make sure to customize the AIEXPRESS_API_KEY environment variable with a new random value that you keep secret.
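One way to produce a suitable random value for `AIEXPRESS_API_KEY` is sketched below. This is only an illustration using Python's standard `secrets` module. The helper name `generate_api_key` and the 32-byte length are assumptions, and any cryptographically secure generator would do.

```python
import secrets


def generate_api_key(num_bytes: int = 32) -> str:
    """Return a URL-safe random string suitable for use as a secret key.

    Hypothetical helper: the name and default length are illustrative only.
    """
    return secrets.token_urlsafe(num_bytes)


if __name__ == "__main__":
    # Paste the printed line into your environment configuration.
    print(f"AIEXPRESS_API_KEY={generate_api_key()}")
```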

If you're deploying elsewhere, you will likely need to set up MongoDB yourself.

1. Sign up for MongoDB. On the "Deploy your database" screen, select AWS, M0 – Free (or whichever hosting tier you'd like, though the free tier should be more than enough).

2. Create a user profile for the new database and make a note of your database username and password. Then from the "Network Access" page, click "Add IP Address", then "Allow access from anywhere". You can easily configure this later to include only the IP addresses of your Render deployment for extra security.

3. Go to "Database" in the sidebar, click the "Connect" button for the database you just created, select "Drivers", and copy the connection string URL. Note that you'll need to fill in the `<password>` part of the URL with the password of the profile you created in step 2.

Then set your connection URL as the MONGODB_URI environment variable wherever you host AI Express.
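If the database password contains characters such as `@` or `/`, it generally needs to be percent-encoded before it goes into the connection string. The sketch below shows one way to fill in the placeholder. The helper name `fill_password` and the example host and username are hypothetical, not values from AI Express or your MongoDB account.

```python
from urllib.parse import quote_plus


def fill_password(connection_string: str, password: str) -> str:
    """Replace the <password> placeholder with a percent-encoded password."""
    placeholder = "<password>"
    if placeholder not in connection_string:
        raise ValueError("connection string has no <password> placeholder")
    return connection_string.replace(placeholder, quote_plus(password))


if __name__ == "__main__":
    # Hypothetical connection string; use the one copied from the MongoDB UI.
    template = "mongodb+srv://myuser:<password>@cluster0.example.mongodb.net/"
    print("MONGODB_URI=" + fill_password(template, "p@ss/word"))
```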

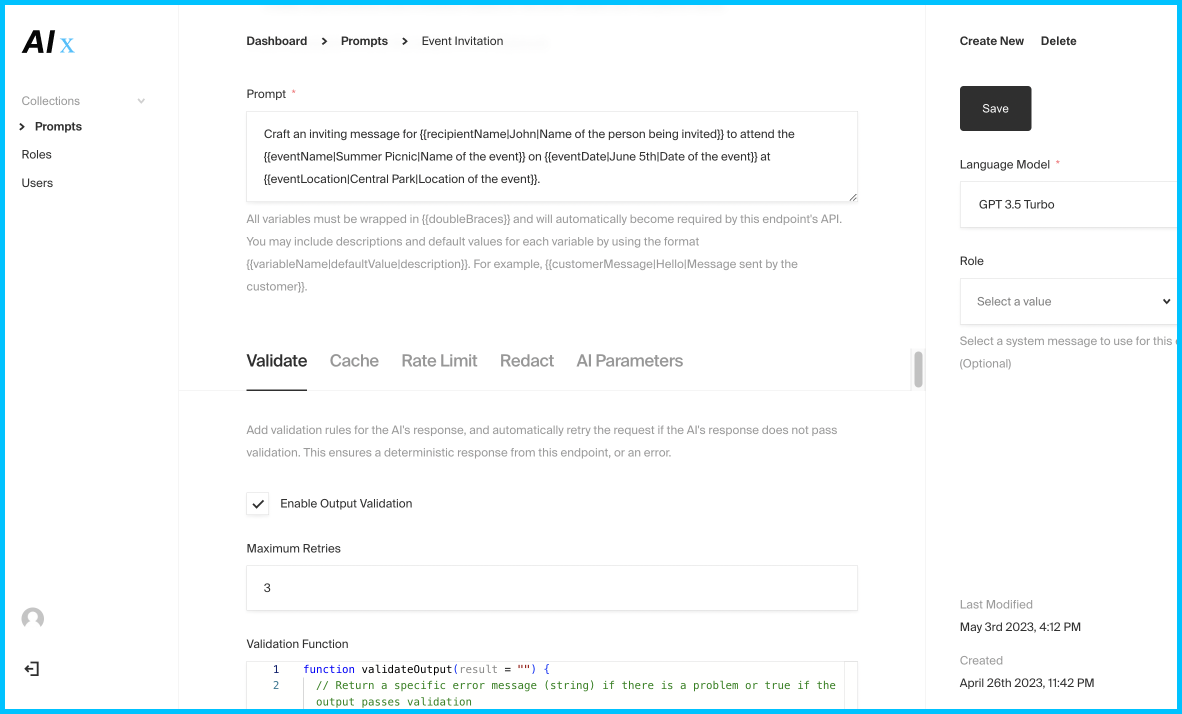

This template uses a simple Express server with a Payload CMS configuration to give you an interface for managing prompts, updating your API dynamically whenever you publish or modify prompts in it.

Every prompt document created becomes a live API endpoint, and any variable in double curly braces in the prompt text, like {{name}}, {{age}}, {{color}}, etc., automatically becomes a required field in the JSON body that the endpoint expects and checks for in an HTTP POST request.
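The idea of inferring required fields from a prompt can be illustrated with a small sketch. This is not AI Express's actual implementation. The function names are hypothetical, and the sketch assumes variable names consist of word characters only.

```python
import re

# Assumption: a variable is a run of word characters inside double curly braces.
VARIABLE_PATTERN = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def infer_variables(prompt: str) -> list[str]:
    """Return the distinct variable names in a prompt, in order of first use."""
    names: list[str] = []
    for match in VARIABLE_PATTERN.finditer(prompt):
        name = match.group(1)
        if name not in names:
            names.append(name)
    return names


def missing_fields(prompt: str, body: dict) -> list[str]:
    """Return the variables the prompt needs that the JSON body lacks."""
    return [name for name in infer_variables(prompt) if name not in body]


if __name__ == "__main__":
    prompt = "Write a poem for {{name}}, aged {{age}}, who likes {{color}}."
    print(infer_variables(prompt))
    print(missing_fields(prompt, {"name": "Ada"}))
```

A request body that leaves `missing_fields` non-empty would be rejected before any completion is attempted.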

Variable notation can be extended to include more information: {{variableName|defaultValue|description}}. Prompts are automatically validated for token length against the model selected in the text editor, and API documentation plus a test bench are maintained via Swagger, so you can test changes to your prompts without firing up Postman.
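As a rough sketch of how the extended notation could be parsed and substituted, consider the following. It assumes that a variable with a default value may be omitted from the request, which the text above does not state explicitly. The names `PromptVariable`, `parse_tag`, and `render` are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Matches anything between double curly braces that contains no braces itself.
TAG = re.compile(r"\{\{([^{}]+)\}\}")


@dataclass
class PromptVariable:
    name: str
    default: Optional[str] = None
    description: Optional[str] = None


def parse_tag(inner: str) -> PromptVariable:
    """Split 'name|default|description' into its parts; later parts are optional."""
    parts = [part.strip() for part in inner.split("|", 2)]
    default = parts[1] if len(parts) > 1 and parts[1] else None
    description = parts[2] if len(parts) > 2 and parts[2] else None
    return PromptVariable(parts[0], default, description)


def render(prompt: str, values: dict) -> str:
    """Substitute supplied values, falling back to defaults (an assumption)."""
    def replace(match: re.Match) -> str:
        variable = parse_tag(match.group(1))
        if variable.name in values:
            return str(values[variable.name])
        if variable.default is not None:
            return variable.default
        raise KeyError(f"missing required variable: {variable.name}")

    return TAG.sub(replace, prompt)
```

For example, `render("Hi {{name}}, try {{color|blue|A colour name}}.", {"name": "Ada"})` fills in `blue` because no `color` value was supplied.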

The API waits for the top chat completion (`completion.data.choices[0].message.trim()`) and returns it in a simple JSON object of the format `{ result: <your_completion> }`. If an error of any kind occurs, you will receive `{ result: null, error: <error_message> }`.
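A client might handle this response shape roughly as follows. The sketch uses only the Python standard library. The endpoint URL you pass in and the `Authorization: Bearer` header are assumptions, since the text above does not say how the API key is transmitted, so check the generated Swagger documentation for the real scheme.

```python
import json
import urllib.request


class PromptError(Exception):
    """Raised when the endpoint reports an error instead of a completion."""


def unwrap(payload: dict) -> str:
    """Return the completion text, or raise if the payload carries an error."""
    if payload.get("error") or payload.get("result") is None:
        raise PromptError(payload.get("error") or "no result returned")
    return payload["result"]


def call_endpoint(url: str, variables: dict, api_key: str) -> str:
    """POST the prompt variables as JSON and return the completion text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(variables).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the header name and scheme are illustrative only.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return unwrap(json.load(response))
```

Note that `urlopen` raises `urllib.error.HTTPError` for non-2xx responses, so error payloads delivered with such a status would need separate handling.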

1. Ensure MongoDB is installed locally (instructions: Mac, Windows). Don't forget to start it using `brew services start mongodb-community` or equivalent.

2. Rename the `.sample.env` file to `.env`, adding your OpenAI API key and updating `AIEXPRESS_API_KEY` with a new random value.

3. `yarn` and `yarn dev` will then start the application and reload on any changes. Requires Node 16+.

If you have Docker and Docker Compose installed, you can run `docker compose up`. You may need to run `sudo chmod -R go+w /data/db` on the data/db directory first.

- Generate test data and preview groups of completions

- Prebuilt and configurable processors that extract text when the endpoint is provided with URLs to files (text/Excel/JSON/PDF/websites)

- Global values/snippets that can be pre-fetched and referenced across all prompts

Requires vector DB:

- Automatic “prompt splitting” into a vector DB, with lookup, if the prompt is too long

- Optional index of and lookup through all prior output for a particular endpoint

- Separate tab where documents/URLs/websites can be uploaded, extracted, and automatically drawn upon globally

Contributions are welcome.