python==3.10

torch==1.13.0

torch-geometric==2.2.0
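To confirm that an environment matches these pins, a short standard-library check like the one below can help. It is a sketch, not part of the repository: `check_environment` is a hypothetical name, and it assumes the packages are installed under the distribution names `torch` and `torch-geometric`.

```python
import sys
from importlib.metadata import PackageNotFoundError, version

# Versions pinned in the requirements above (Python is checked separately).
PINNED = {"torch": "1.13.0", "torch-geometric": "2.2.0"}


def check_environment(pinned=PINNED, python=(3, 10)):
    """Return a list of human-readable mismatches; an empty list means all pins match."""
    problems = []
    if sys.version_info[:2] != python:
        found = f"{sys.version_info.major}.{sys.version_info.minor}"
        problems.append(f"python: expected {python[0]}.{python[1]}, found {found}")
    for name, wanted in pinned.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            problems.append(f"{name}: not installed (expected {wanted})")
            continue
        # CUDA builds of torch report e.g. "1.13.0+cu117"; compare only the part before "+".
        if installed.split("+")[0] != wanted:
            problems.append(f"{name}: expected {wanted}, found {installed}")
    return problems


if __name__ == "__main__":
    for line in check_environment() or ["environment matches the pinned versions"]:
        print(line)
```

Inside the container started below, an empty list (or the "matches" message) means the pins are satisfied.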

docker image build -t astaka-pe/semigcn .

docker run -itd --gpus all -p 8081:8081 --name semigcn -v "$(pwd)":/work astaka-pe/semigcn

docker exec -it semigcn /bin/bash

- Unzip datasets.zip
  - Sample meshes will be placed in datasets/
- Put your own mesh in a new folder (any name; shown as ** below) as:
  - Deficient mesh: datasets/**/{mesh-name}/{mesh-name}_original.obj
  - Ground truth: datasets/**/{mesh-name}/{mesh-name}_gt.obj
- The deficient and ground-truth meshes need not share the same connectivity, but they must share the same scale

- Create the initial mesh and the smoothed mesh by specifying the path of the deficient mesh:

python3 preprocess/prepare.py -i datasets/**/{mesh-name}/{mesh-name}_original.obj

- options
  - -r {float}: Target length of remeshing. Higher values give a coarser mesh, lower values a finer one (default: 0.6).
- Computation time: 30 sec
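To preprocess several meshes at once, the command above can be generated for every deficient mesh under datasets/. This is a hypothetical helper, not a script shipped with the repository. It only assumes the {mesh-name}/{mesh-name}_original.obj layout and the -i and -r options described above, and it invokes the current interpreter (`sys.executable`) in place of python3.

```python
import subprocess
import sys
from pathlib import Path


def prepare_commands(root="datasets", r=0.6):
    """Build one preprocess/prepare.py command per *_original.obj found under root."""
    commands = []
    for obj in sorted(Path(root).rglob("*_original.obj")):
        mesh_name = obj.name[: -len("_original.obj")]
        # Only follow the {mesh-name}/{mesh-name}_original.obj layout described above.
        if obj.parent.name != mesh_name:
            continue
        commands.append([sys.executable, "preprocess/prepare.py", "-i", str(obj), "-r", str(r)])
    return commands


def run_all(root="datasets", r=0.6):
    """Run the commands one after another, stopping at the first failure."""
    for cmd in prepare_commands(root, r):
        print("running:", " ".join(cmd))
        subprocess.run(cmd, check=True)
```

Calling `run_all()` from the repository root processes every sample mesh in turn; building the command list separately makes it easy to inspect before anything runs.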

python3 sgcn.py -i datasets/**/{mesh-name} # SGCN

python3 mgcn.py -i datasets/**/{mesh-name} # MGCN

- options
  - -CAD: For a CAD model
  - -real: For a real scan
  - -cache: Use cache files (for faster computation)
  - -mu: Weight for refinement
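When scripting training runs, the options above can be assembled programmatically. `training_command` is a hypothetical helper. It assumes -CAD, -real and -cache are plain on/off flags and that -mu takes a single numeric value, which the option list does not state explicitly.

```python
import sys


def training_command(model, mesh_dir, cad=False, real=False, cache=False, mu=None):
    """Assemble the argv for sgcn.py or mgcn.py with the options listed above."""
    if model not in ("sgcn", "mgcn"):
        raise ValueError("model must be 'sgcn' or 'mgcn'")
    cmd = [sys.executable, f"{model}.py", "-i", str(mesh_dir)]
    if cad:
        cmd.append("-CAD")
    if real:
        cmd.append("-real")
    if cache:
        cmd.append("-cache")
    if mu is not None:
        # Assumption: -mu takes a single numeric value.
        cmd += ["-mu", str(mu)]
    return cmd
```

The result can be passed straight to `subprocess.run(..., check=True)`; keeping it as a list avoids shell quoting issues with the mesh path.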

You can monitor the training progress in the web viewer (default: http://localhost:8081).
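If the viewer page does not load, a small probe can tell whether anything is listening on the port at all. This sketch uses only the standard library; `viewer_is_up` is a hypothetical name, and the default URL assumes the -p 8081:8081 port mapping from the docker run command.

```python
import urllib.error
import urllib.request


def viewer_is_up(url="http://localhost:8081", timeout=2.0):
    """Return True if an HTTP server answers at url (any status code counts as 'up')."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a 2xx status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, unknown host, or timeout: nothing is serving at url.
        return False
```

A False result usually points to the container not running or the port not being published, rather than a problem with training itself.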

- Create datasets/**/{mesh-name}/comparison and put the meshes to be evaluated there
  - A deficient mesh datasets/**/{mesh-name}/comparison/original.obj and a ground-truth mesh datasets/**/{mesh-name}/comparison/gt.obj are required for evaluation

python3 check/batch_dist_check.py -i datasets/**/{mesh-name}

- options
  - -real: For a real scan
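The comparison folder can also be populated with a short script. `prepare_comparison` is a hypothetical helper. It assumes the deficient mesh for evaluation is the same {mesh-name}_original.obj used for training, and it copies any additional meshes to evaluate (for example, network outputs) into the folder under their own file names.

```python
import shutil
from pathlib import Path


def prepare_comparison(mesh_dir, extra_meshes=()):
    """Create {mesh_dir}/comparison with original.obj, gt.obj and any meshes to evaluate."""
    mesh_dir = Path(mesh_dir)
    name = mesh_dir.name
    comp = mesh_dir / "comparison"
    comp.mkdir(exist_ok=True)
    # Assumption: the deficient mesh for evaluation is the {name}_original.obj used for training.
    shutil.copyfile(mesh_dir / f"{name}_original.obj", comp / "original.obj")
    shutil.copyfile(mesh_dir / f"{name}_gt.obj", comp / "gt.obj")
    for mesh in extra_meshes:
        mesh = Path(mesh)
        if mesh.name in ("original.obj", "gt.obj"):
            raise ValueError(f"{mesh} would overwrite {mesh.name}")
        # Meshes sharing a file name (e.g. 100_step.obj from two runs) overwrite each other,
        # so rename them before passing them in.
        shutil.copyfile(mesh, comp / mesh.name)
    return comp
```

After this, the evaluation command above can be run on the same mesh folder.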

- To run only the refinement step, use:

python3 refinement.py \
  -src datasets/**/{mesh-name}/{mesh-name}_initial.obj \
  -dst datasets/**/{mesh-name}/output/**/100_step.obj \
  -vm datasets/**/{mesh-name}/{mesh-name}_vmask.json \
  -ref {arbitrary-output-filename}.obj

- The -dst path above is for SGCN output; for MGCN, pass -dst datasets/**/{mesh-name}/output/**/100_step_0.obj instead

- options
  - -mu: Weight for refinement. Choose a weight that preserves both the remaining vertex positions of the initial mesh and the shape of the missing regions in the output mesh
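The -mu description implies a trade-off between two goals, but it does not say how refinement.py balances them. As an illustration only (not necessarily the repository's method), one common way to express such a trade-off is a least-squares problem over vertex positions x: keep the Laplacian (differential) coordinates of the network output y, and pull the vertices that remain from the initial mesh toward their original positions p, weighted by mu. Minimizing ||L x - L y||^2 + mu * sum over kept i of ||x_i - p_i||^2 gives the normal equations (L^T L + mu M) x = L^T L y + mu M p, where M is the diagonal 0/1 mask of kept vertices. The sketch below solves this with NumPy on a dense uniform Laplacian; `refine` and `uniform_laplacian` are hypothetical names, the boolean keep mask stands in for whatever the vmask.json file encodes, and both meshes are assumed to share vertex indexing.

```python
import numpy as np


def uniform_laplacian(n_verts, edges):
    """Dense uniform Laplacian L = I - D^-1 A. Assumes every vertex has at least one edge."""
    adj = np.zeros((n_verts, n_verts))
    for i, j in edges:
        adj[i, j] = adj[j, i] = 1.0
    deg = adj.sum(axis=1)
    return np.eye(n_verts) - adj / deg[:, None]


def refine(init_pos, out_pos, keep_mask, edges, mu):
    """Solve min_x ||L x - L y||^2 + mu * sum_{i kept} ||x_i - p_i||^2.

    init_pos (p) and out_pos (y) are (n, 3) arrays over the same vertex indices;
    keep_mask marks vertices that remain from the initial mesh. The system is
    nonsingular when the graph is connected, mu > 0 and at least one vertex is kept.
    """
    p = np.asarray(init_pos, dtype=float)
    y = np.asarray(out_pos, dtype=float)
    lap = uniform_laplacian(len(p), edges)
    m = np.diag(np.asarray(keep_mask, dtype=float))
    # Normal equations: (L^T L + mu M) x = L^T L y + mu M p
    lhs = lap.T @ lap + mu * m
    rhs = lap.T @ (lap @ y) + mu * (m @ p)
    return np.linalg.solve(lhs, rhs)
```

In this formulation a larger mu pins the kept vertices more tightly to the initial mesh, while a smaller mu lets the output's shape dominate. Real meshes would need a sparse Laplacian (e.g. scipy.sparse) rather than the dense matrices used here.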

Please refer to tinymesh.

@article{hattori2024semigcn,

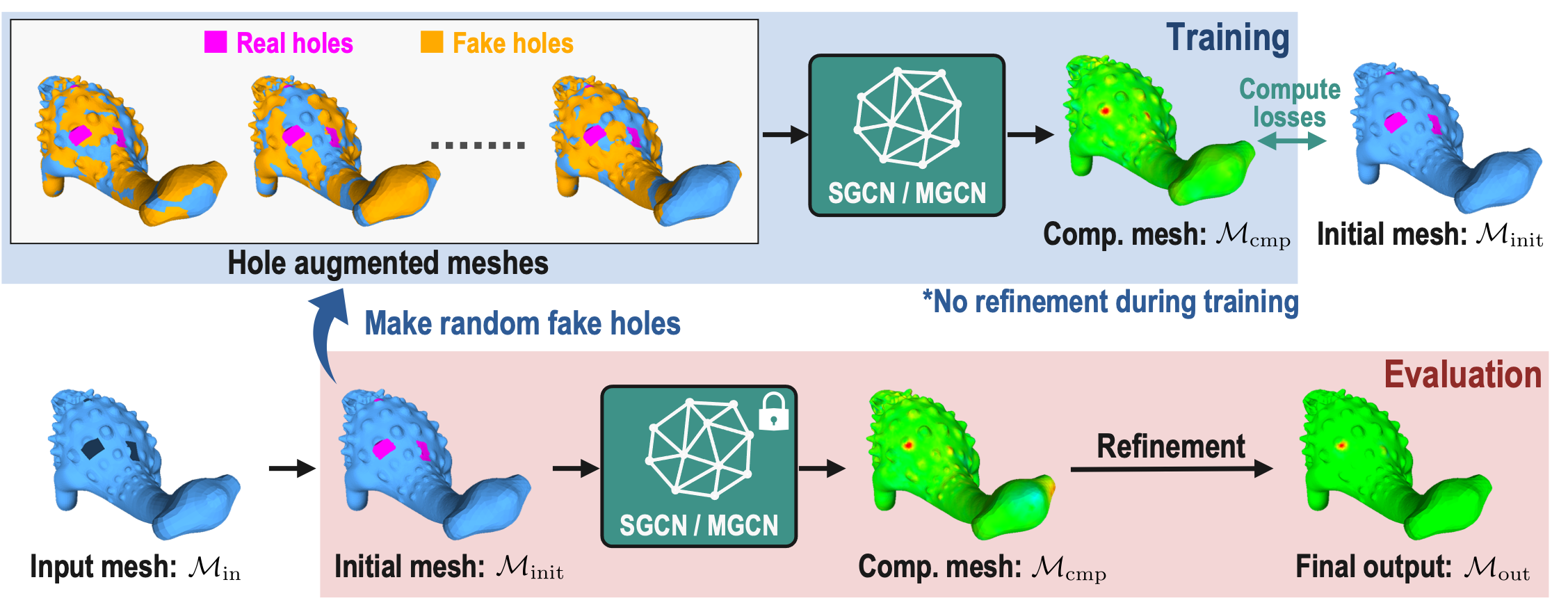

title={Learning Self-Prior for Mesh Inpainting Using Self-Supervised Graph Convolutional Networks},

author={Hattori, Shota and Yatagawa, Tatsuya and Ohtake, Yutaka and Suzuki, Hiromasa},

journal={IEEE Transactions on Visualization and Computer Graphics},

year={2024},

publisher={IEEE}

}