A fully-featured multi-source data pipeline for continuously extracting knowledge from COVID-19 data.

- Contamination figures

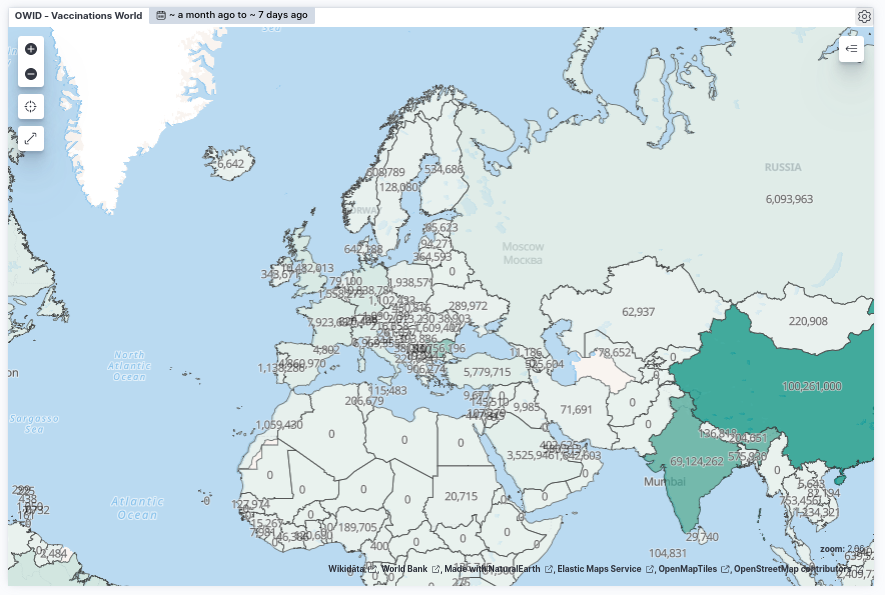

- Vaccination figures

- Death figures

- COVID-19-related news (Google News, Twitter)

| Live contaminations map + Latest news | Last 7 days news |
|---|---|

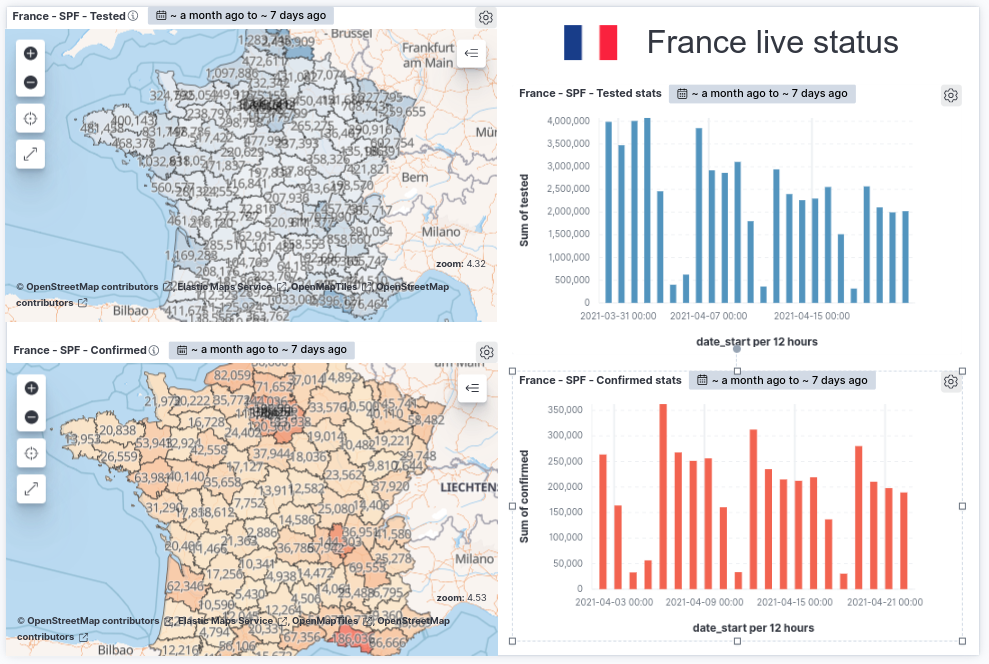

| France 3-weeks live map (Kibana Canvas) | Live vaccinations map |
|---|---|

This project was built over 4 days as part of an MSc hackathon at ETNA, a French computer science school.

The goals were both to prototype a big data pipeline and to contribute to an open source project.

Below, you'll find the procedure to process COVID-related files and news into the Pandemic Knowledge database (Elasticsearch).

The process is scheduled to run every 24 hours, so you can update the files and obtain the latest news.

Running this project on your local computer? Just copy the `.env.example` file:

    cp .env.example .env

Open this `.env` file and edit the password-related variables.
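Before starting the stack, it can help to confirm that no password still carries the value shipped in `.env.example`. The following is a minimal sketch, not part of the project: it assumes simple `KEY=value` lines and treats any variable whose name contains `PASSWORD` as password-related. Both helper names are hypothetical.

```python
def parse_env(text):
    """Parse simple KEY=value lines, ignoring blank lines and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def unchanged_passwords(example_text, env_text):
    """Return password-related variables that are empty or still equal to the example value."""
    example = parse_env(example_text)
    current = parse_env(env_text)
    return sorted(
        key
        for key, value in current.items()
        if "PASSWORD" in key.upper()
        and (value == "" or value == example.get(key))
    )
```

Calling `unchanged_passwords(open(".env.example").read(), open(".env").read())` returns the names that probably still need editing; an empty list suggests every password was changed.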

Raise your host's `vm.max_map_count` limit so that Elasticsearch can handle high I/O:

    sudo sysctl -w vm.max_map_count=500000

Then:

    docker-compose -f create-certs.yml run --rm create_certs
    docker-compose up -d es01 es02 es03 kibana

Create a `~/.prefect/config.toml` file with the following content:

    # debug mode
    debug = true

    # base configuration directory (typically you won't change this!)
    home_dir = "~/.prefect"

    backend = "server"

    [server]
    host = "http://172.17.0.1"
    port = "4200"
    host_port = "4200"
    endpoint = "${server.host}:${server.port}"

Run Prefect:

    docker-compose up -d prefect_postgres prefect_hasura prefect_graphql prefect_towel prefect_apollo prefect_ui

We need to create a tenant. Execute on your host:

    pip3 install prefect
    prefect backend server
    prefect server create-tenant --name default --slug default

Access the web UI at `localhost:8081`.
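The `endpoint` entry in the `config.toml` shown earlier is assembled from `server.host` and `server.port` through `${...}` placeholders. To make the resulting value explicit, here is a small illustrative resolver. It is a hand-written sketch of the substitution, not Prefect's own implementation, and the `lookup` and `resolve` helpers are hypothetical.

```python
import re

# Values copied from the [server] section of ~/.prefect/config.toml.
config = {
    "server": {
        "host": "http://172.17.0.1",
        "port": "4200",
        "endpoint": "${server.host}:${server.port}",
    }
}

PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def lookup(cfg, dotted_key):
    """Follow a dotted key such as 'server.host' through nested dicts."""
    node = cfg
    for part in dotted_key.split("."):
        node = node[part]
    return node


def resolve(cfg, value):
    """Replace every ${a.b} placeholder in value with the referenced setting."""
    return PLACEHOLDER.sub(lambda match: str(lookup(cfg, match.group(1))), value)


endpoint = resolve(config, config["server"]["endpoint"])
print(endpoint)  # http://172.17.0.1:4200
```

`172.17.0.1` is typically the gateway of Docker's default bridge network, so `host` may need adjusting if your Docker network is configured differently.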

Agents are services that run your scheduled flows.

- Open and optionally edit the `agent/config.toml` file.

- Let's instantiate 3 workers:

      docker-compose -f agent/docker-compose.yml up -d --build --scale agent=3 agent

ℹ️ You can run the agent on a different machine from the one hosting the Prefect server. Edit the `agent/config.toml` file for that.

Injection scripts are scheduled in Prefect so they automatically inject data with the latest news (delete + inject).

Pandemic Knowledge supports several data sources:

- Our World In Data; used by Google
  - docker-compose slug: `insert_owid`
  - MinIO bucket: `contamination-owid`
  - Format: CSV
- OpenCovid19-Fr
  - docker-compose slug: `insert_france`
  - Format: CSV (download from Internet)
- Public Health France - Virological test results (official source)
  - docker-compose slug: `insert_france_virtests`
  - Format: CSV (download from Internet)

- Start MinIO and import your files into the buckets listed above. For Our World In Data, create the `contamination-owid` bucket and import the CSV file into it.

      docker-compose up -d minio

  MinIO is available at `localhost:9000`.

- Download dependencies and start the injection service of your choice. For instance:

      pip3 install -r ./flow/requirements.txt
      docker-compose -f insert.docker-compose.yml up --build insert_owid

- In Kibana, create an index pattern `contamination_owid_*`.

- Once injected, we recommend adjusting the number of replicas in the Dev Tools console:

      PUT /contamination_owid_*/_settings
      { "index" : { "number_of_replicas" : "2" } }

- Start making your dashboards in Kibana!
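The replica update from the steps above can also be scripted instead of typed into Kibana. The sketch below only builds the HTTP request with the standard library and does not send it. The base URL is the `https://localhost:9200` address this README uses for Elasticsearch, the `build_replica_request` helper is hypothetical, and authentication and TLS handling are deliberately left out.

```python
import json
import urllib.request


def build_replica_request(base_url, index_pattern, replicas):
    """Build (but do not send) a PUT <index_pattern>/_settings request."""
    body = {"index": {"number_of_replicas": str(replicas)}}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{index_pattern}/_settings",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


request = build_replica_request("https://localhost:9200", "contamination_owid_*", 2)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Sending it with `urllib.request.urlopen(request, context=...)` would additionally require credentials and an SSL context that trusts the self-signed certificate; both depend on your own setup and are not shown.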

There are two sources for news:

- Google News (Elasticsearch index: `news_googlenews`)
- Twitter (Elasticsearch index: `news_tweets`)

To crawl and display them:

- Run the Google News crawler:

      docker-compose -f crawl.docker-compose.yml up --build crawl_google_news # and/or crawl_tweets

- In Kibana, create a `news_*` index pattern.

- Edit the index pattern fields:

| Name | Type | Format |
|---|---|---|
| img | string | Url with Type: Image with empty URL template |
| link | string | Url |

- Create your visualisation

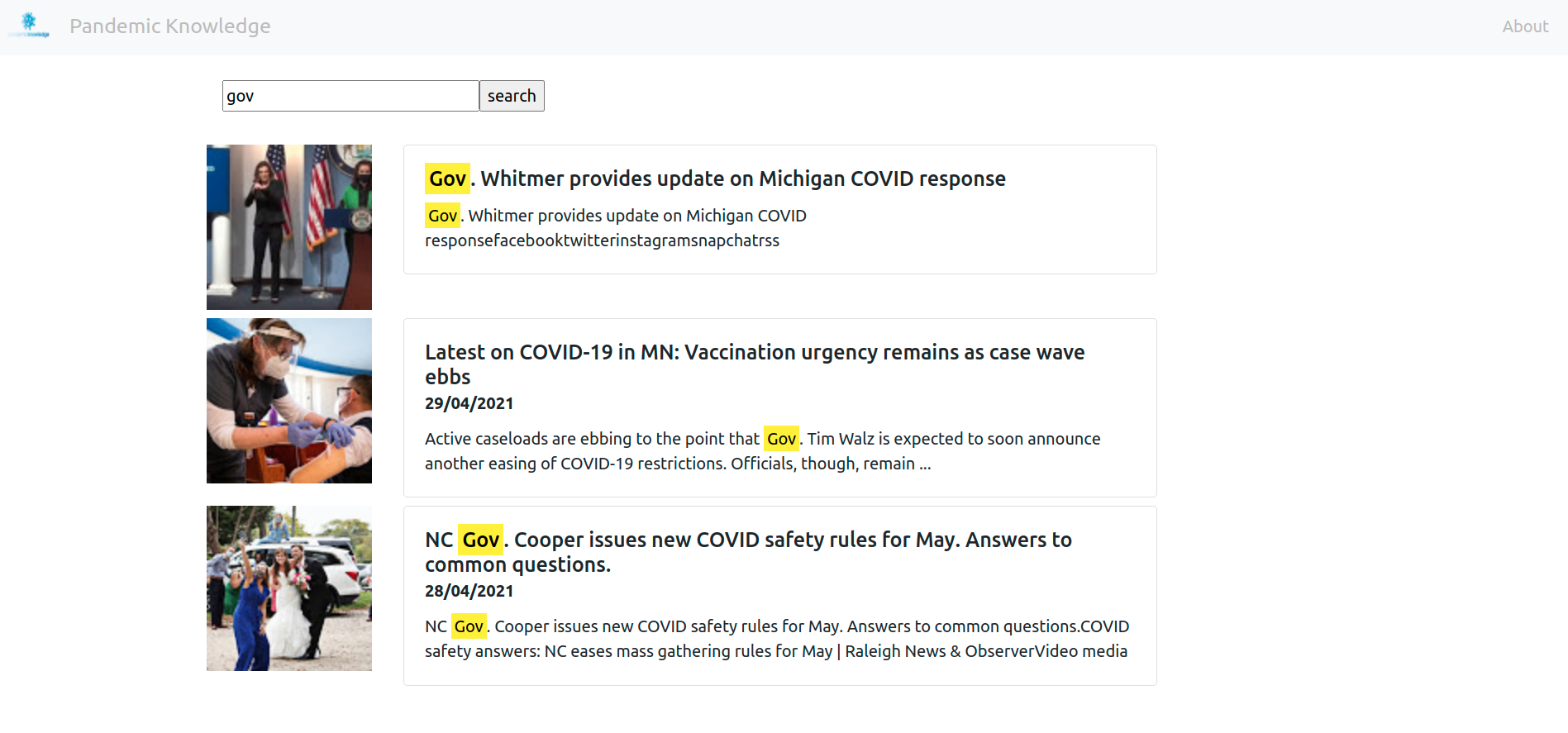

Browse through the news with our web application.

- Make sure you've accepted the self-signed certificate of Elasticsearch at `https://localhost:9200`.

- Start up the app:

      docker-compose -f news_app/docker-compose.yml up --build -d

- Discover the app at `localhost:8080`.

TODOs

Possible improvements:

- Using Dask to parallelize the processing of CSV lines in batches of 1000

- Removing indices only once source processing has succeeded (add the new index, then remove the old one)

- Removing indices only once crawling has succeeded (add the new index, then remove the old one)
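Two of these ideas can be sketched without any external service: reading CSV rows in batches of 1000, and creating the new index before removing the old one so that a failed run leaves the previous data in place. In this sketch a plain dictionary stands in for Elasticsearch and Dask is not used (batches are processed sequentially). The helpers `batched` and `refresh_index`, the index names and the sample columns are all hypothetical.

```python
import csv
import io
from itertools import islice


def batched(rows, size=1000):
    """Yield lists of at most `size` rows from any iterable."""
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def refresh_index(store, old_name, new_name, csv_text, batch_size=1000):
    """Fill `new_name` batch by batch, then drop `old_name` only on success."""
    store[new_name] = []
    try:
        reader = csv.DictReader(io.StringIO(csv_text))
        for batch in batched(reader, batch_size):
            store[new_name].extend(batch)  # stand-in for a bulk insert
    except Exception:
        # Injection failed: discard the partial index and keep the old one.
        del store[new_name]
        raise
    store.pop(old_name, None)
    return len(store[new_name])


# Illustrative data only; a dict plays the role of the cluster.
store = {"contamination_owid_old": [{"location": "France", "new_cases": "1"}]}
csv_text = "location,new_cases\nFrance,10\nSpain,20\nItaly,30\n"
count = refresh_index(
    store, "contamination_owid_old", "contamination_owid_new", csv_text, batch_size=2
)
print(count, sorted(store))
```

Because both index names would match the `contamination_owid_*` pattern, dashboards built on that pattern should keep working across the swap, although they would briefly see both indices while the new one is being filled.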

Useful commands

To stop everything:

    docker-compose down
    docker-compose -f agent/docker-compose.yml down
    docker-compose -f insert.docker-compose.yml down
    docker-compose -f crawl.docker-compose.yml down

To start each service, step by step:

    docker-compose up -d es01 es02 es03 kibana
    docker-compose up -d minio
    docker-compose up -d prefect_postgres prefect_hasura prefect_graphql prefect_towel prefect_apollo prefect_ui
    docker-compose -f agent/docker-compose.yml up -d --build --scale agent=3 agent