Experiments with N-BEATS (Neural basis expansion analysis for interpretable time series forecasting)

Project with a TensorFlow implementation of N-BEATS and experiments with ensembles.

The TensorFlow implementation is adapted from a PyTorch variant of N-BEATS.

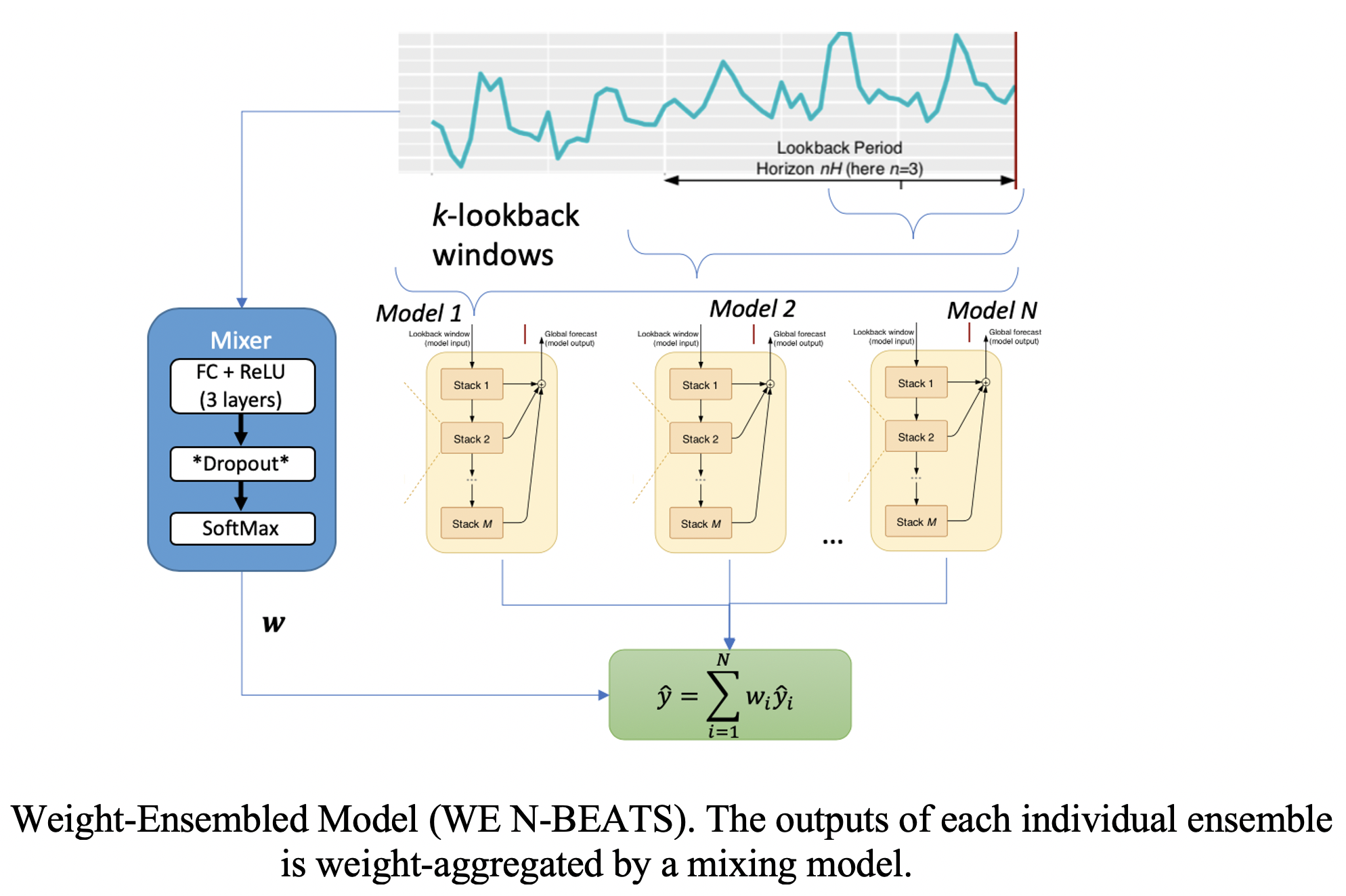

If we view each ensemble member as an expert forecaster, a gating network [1] can either select a single expert or blend the experts' outputs. In the blended approach, the error of the combined output drives the weight updates of all the experts, so the experts co-adapt. I explore one way to reduce this co-adaptation by using a dropout layer, implemented as Weighted-Ensemble of N-BEATS (WE N-BEATS).

During training, randomly zeroing out some of the gate’s outputs cancels the contribution of the corresponding members to the final error. The backpropagated gradient of the loss then updates only the members that contributed to the loss, along with the gate network itself, so the gate learns proper blending weights while co-adaptation is reduced.
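The blending step described above can be illustrated with a minimal, framework-free sketch. It is not the project's code: the function names `softmax` and `blend`, the use of a softmax over the gate logits, and the inverted-dropout rescaling by `1 / (1 - drop_rate)` (the behaviour of a typical dropout layer) are all assumptions made for illustration.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of gate logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def blend(member_forecasts, gate_logits, drop_rate=0.0, rng=None):
    """Blend member forecasts with gate weights (hypothetical helper).

    member_forecasts: one forecast (list of floats) per ensemble member.
    gate_logits: one unnormalised gate score per member.
    drop_rate: probability of zeroing a gate output (training only).
    """
    weights = softmax(gate_logits)
    if drop_rate > 0.0:
        rng = rng or random.Random()
        keep = 1.0 - drop_rate
        # Assumed inverted dropout: zero a weight with probability drop_rate
        # and rescale the surviving weights by 1 / keep.
        weights = [w / keep if rng.random() < keep else 0.0 for w in weights]
    horizon = len(member_forecasts[0])
    blended = [
        sum(w * f[t] for w, f in zip(weights, member_forecasts))
        for t in range(horizon)
    ]
    return blended, weights


# Two members with equal gate scores: the blend is their plain average.
forecasts = [[1.0, 1.0], [3.0, 3.0]]
blended, weights = blend(forecasts, gate_logits=[0.0, 0.0])

# With dropout, a member whose weight was zeroed does not influence the
# blended forecast, so it would receive no gradient from the loss.
dropped, dropped_weights = blend(
    forecasts, gate_logits=[0.0, 0.0], drop_rate=0.5, rng=random.Random(0)
)
```

Because the blended forecast is a weighted sum, its derivative with respect to a member's forecast is that member's weight. A weight zeroed by dropout therefore cuts the gradient path to that member for the current training step, which is the mechanism the paragraph above relies on.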

This project has been developed with the following packages:

- tensorflow (2.3)
- pyyaml (5.3.1)
- pandas (1.1.1)
- scikit-learn (0.23.2)
- tqdm (4.48.2)
- matplotlib (3.3.1)

[1]: R. A. Jacobs, M. I. Jordan, S. J. Nowlan, G. E. Hinton, “Adaptive Mixtures of Local Experts”, Neural Computation, 3: 79-87, 1991

[2]: L. Breiman, “Bagging predictors”, Machine Learning, 24(2):123–140, Aug 1996