Pix2pix model trained to generate 512 x 512 px santas! All credit goes to yining1023 and the original pix2pix_tensorflowjs_lite repository; the only modifications are training a new model and accommodating 512 x 512 images in the code.

Try it yourself: Download/clone the repository and run it locally:

$ git clone https://github.com/auduno/pix2pix_tensorflowjs_lite.git

$ cd pix2pix_tensorflowjs_lite

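$ python -m SimpleHTTPServer

On Python 3, where SimpleHTTPServer no longer exists, use this instead:

$ python3 -m http.server

Then open http://localhost:8000 in your browser.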

The rest of the text in this readme is from the original readme in pix2pix_tensorflowjs_lite repository.

Credits: This project is based on affinelayer's pix2pix-tensorflow. I want to thank christopherhesse, nsthorat, and dsmilkov for their help and suggestions in this GitHub issue.

-

- Prepare the data

-

- Train the model

-

- Test the model

-

- Export the model

-

- Port the model to tensorflow.js

-

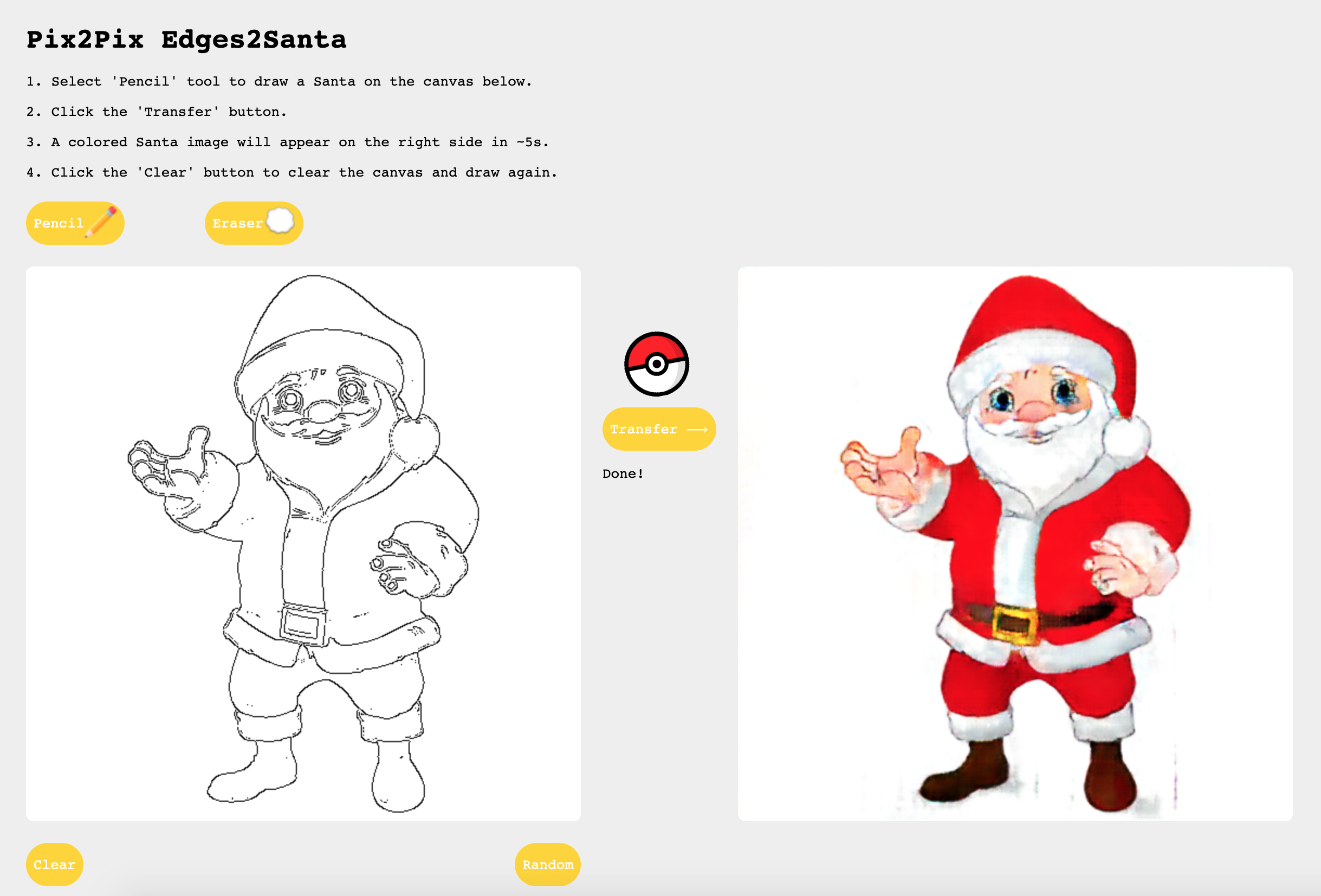

- Create an interactive interface in the browser

# prepare the data

- 1.1 Scrape images from Google search

- 1.2 Remove the background of the images

- 1.3 Resize all images into 256x256 px

- 1.4 Detect edges of all images

- 1.5 Combine input images and target images

- 1.6 Split all combined images into two folders: train and val

Before we start, check out affinelayer's Create your own dataset. I followed his instructions for steps 1.3, 1.5 and 1.6.

1.1 Scrape images from Google search

We could create our own target images, but for this edge2pikachu project I downloaded a lot of images from Google.

I used google_image_downloader to download images from Google, but it requires a lot of setup. If you manage to set it up, you can run:

$ python image_download.py <query> <number of images>

It downloads the images and saves them to the current directory.

I recommend the two methods below for getting images:

- You can try this library to get images from Flickr: https://github.com/antiboredom/flickr-scrape. You can get an API key for Flickr in a few minutes: https://www.flickr.com/services/api/misc.api_keys.html

- Here is a Chrome extension that can batch-download the images on a web page: Fatkun Batch Download Image
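If you go the Flickr route, the request behind a scraper like flickr-scrape can be sketched with the Python standard library. This is a minimal sketch, not flickr-scrape's code: it assumes Flickr's REST endpoint with the flickr.photos.search method, plain JSON output (format=json, nojsoncallback=1), and the static image URL pattern https://live.staticflickr.com/{server}/{id}_{secret}_{size}.jpg. The helper names search_url and photo_urls are hypothetical.

```python
from urllib.parse import urlencode

FLICKR_REST = "https://api.flickr.com/services/rest/"


def search_url(api_key, query, per_page=100, page=1):
    """Build a flickr.photos.search request URL (hypothetical helper)."""
    params = {
        "method": "flickr.photos.search",
        "api_key": api_key,
        "text": query,
        "per_page": per_page,
        "page": page,
        "format": "json",
        "nojsoncallback": 1,  # plain JSON instead of a JSONP callback
    }
    return FLICKR_REST + "?" + urlencode(params)


def photo_urls(response, size="z"):
    """Turn a decoded search response into direct image URLs.

    Assumes the static URL pattern
    https://live.staticflickr.com/{server}/{id}_{secret}_{size}.jpg,
    where size "z" is the 640 px version.
    """
    return [
        f"https://live.staticflickr.com/{p['server']}/{p['id']}_{p['secret']}_{size}.jpg"
        for p in response["photos"]["photo"]
    ]
```

To use it, you would fetch search_url("YOUR_API_KEY", "pikachu") with urllib.request.urlopen, decode the body with json.load, and download every URL returned by photo_urls. YOUR_API_KEY is a placeholder for the key from the Flickr link above.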

1.2 Remove the background of the images

Some images have a background. I use GrabCut in OpenCV to remove it. Check out the script here: https://github.com/yining1023/pix2pix-tensorflow/blob/master/tools/grabcut.py. To run the script:

$ python grabcut.py <filename>

It opens an interactive interface; instructions for using it are here: https://github.com/symao/InteractiveImageSegmentation. Here's an example of removing the background using GrabCut:

1.3 Resize all images into 256x256 px

Download the pix2pix-tensorflow repo.

Put all the images we got into the photos/original folder.

Run:

$ python tools/process.py --input_dir photos/original --operation resize --output_dir photos/resized

We should then see a new folder called resized containing all the resized images.
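The resize operation boils down to making each image square and scaling it to 256x256 px. The numpy sketch below assumes a center crop and nearest-neighbour sampling to stay dependency-light; process.py may handle non-square images and interpolation differently, so treat this as an illustration of the step rather than a replacement for the script. center_crop_resize is a hypothetical name.

```python
import numpy as np


def center_crop_resize(img, size=256):
    """Center-crop an H x W (x C) image to a square, then resize to size x size.

    Nearest-neighbour sampling keeps the sketch numpy-only.
    """
    h, w = img.shape[:2]
    side = min(h, w)
    top = (h - side) // 2
    left = (w - side) // 2
    square = img[top:top + side, left:left + side]
    # Map each output row/column back to its nearest source row/column.
    idx = (np.arange(size) * side) // size
    return square[idx][:, idx]
```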

1.4 Detect edges of all images

The script I use to detect the edges of all images in a folder at once is here: https://github.com/yining1023/pix2pix-tensorflow/blob/master/tools/edge-detection.py. Before running it, change the input image directory path on line 31 of the script, and create a new empty folder called edges in the same directory.

Run:

$ python edge-detection.py

We should then see the edge-detected images in the edges folder.

Here's an example of edge detection (left: original, right: edge-detected):
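The linked edge-detection.py is not reproduced here. As a rough illustration of what this step produces, the sketch below computes a Sobel gradient magnitude with numpy and thresholds it into black edges on a white background, the look of typical pix2pix edge inputs. Sobel plus a fixed relative threshold is a swapped-in technique chosen for simplicity; the actual script may use a different method (such as OpenCV's Canny detector), and sobel_edges and its threshold default are hypothetical.

```python
import numpy as np


def sobel_edges(gray, threshold=0.25):
    """Return a uint8 image: 0 (black) on edges, 255 (white) elsewhere.

    `gray` is a 2-D array of intensities, e.g. in [0, 1].
    """
    g = np.pad(gray.astype(float), 1, mode="edge")
    # 3x3 Sobel kernels applied via shifted slices (no SciPy or OpenCV needed).
    gx = (g[:-2, 2:] + 2 * g[1:-1, 2:] + g[2:, 2:]
          - g[:-2, :-2] - 2 * g[1:-1, :-2] - g[2:, :-2])
    gy = (g[2:, :-2] + 2 * g[2:, 1:-1] + g[2:, 2:]
          - g[:-2, :-2] - 2 * g[:-2, 1:-1] - g[:-2, 2:])
    magnitude = np.hypot(gx, gy)
    # Normalise so the threshold is relative to the strongest edge in the image.
    if magnitude.max() > 0:
        magnitude = magnitude / magnitude.max()
    return np.where(magnitude > threshold, 0, 255).astype(np.uint8)
```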

1.5 Combine input images and target images

Run:

$ python tools/process.py --input_dir photos/resized --b_dir photos/blank --operation combine --output_dir photos/combined

Here is an example of the combined image. Notice that the combined image is 512x256 px, two 256x256 images side by side. This size is important for training the model successfully.

Read more here: affinelayer's Create your own dataset
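The combine step pastes each pair side by side, which is why the result is 512x256 px. A minimal numpy sketch, assuming both halves are already 256x256 RGB arrays; combine_pair is a hypothetical helper. pix2pix reads the left half as image A and the right half as image B, which is what --which_direction BtoA refers to during training.

```python
import numpy as np


def combine_pair(a, b):
    """Concatenate image A (left) and image B (right) horizontally.

    Both inputs must be H x W x C arrays of identical shape, e.g. 256 x 256 x 3.
    """
    if a.shape != b.shape:
        raise ValueError(f"shape mismatch: {a.shape} vs {b.shape}")
    return np.concatenate([a, b], axis=1)
```

numpy reports shapes as (height, width, channels), so a (256, 512, 3) result is the 512x256 px image described above.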

1.6 Split all combined images into two folders: train and val

Run:

$ python tools/split.py --dir photos/combined

Read more here: affinelayer's Create your own dataset

I collected 305 images for training and 78 images for testing.
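split.py takes care of this step. Conceptually, a train/val split is a reproducible shuffle followed by a cut at a training fraction. The stdlib sketch below assumes an 80/20 split, close to the 305/78 ratio above, and a fixed random seed; split_files and its defaults are hypothetical and are not split.py's actual options.

```python
import random


def split_files(names, train_frac=0.8, seed=0):
    """Shuffle filenames reproducibly and split them into (train, val) lists."""
    shuffled = sorted(names)  # sort first so the result ignores input order
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_frac)
    return shuffled[:cut], shuffled[cut:]
```

Sorting before shuffling makes the split depend only on the set of filenames and the seed, not on the order in which the filesystem happens to list them.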

# train the model

$ python pix2pix.py --mode train --output_dir pikachu_train --max_epochs 200 --input_dir pikachu/train --which_direction BtoA --ngf 32 --ndf 32

I used --ngf 32 --ndf 32 here; this makes the model much smaller and faster than with the default value of 64.

Read more here: https://github.com/affinelayer/pix2pix-tensorflow#getting-started
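Why does halving --ngf shrink the model so much? A convolution's weight count is kernel_height x kernel_width x in_channels x out_channels, so halving both channel counts cuts it by roughly 4. The back-of-the-envelope sketch below counts only the 4x4 convolution weights of the generator's encoder, assuming the layer widths of affinelayer's U-Net for 256x256 inputs (ngf, 2ngf, 4ngf, then 8ngf for the remaining five layers) and ignoring biases, batch normalization, the decoder and the discriminator (which --ndf scales the same way). encoder_conv_weights is a hypothetical helper.

```python
def encoder_conv_weights(ngf, in_channels=3, kernel=4):
    """Count 4x4 conv weights in a pix2pix-style U-Net encoder (weights only).

    Assumed layer widths: ngf, 2*ngf, 4*ngf, then 8*ngf for five more layers.
    """
    widths = [ngf, ngf * 2, ngf * 4] + [ngf * 8] * 5  # 8 encoder layers
    total = 0
    prev = in_channels
    for out in widths:
        total += kernel * kernel * prev * out
        prev = out
    return total


default = encoder_conv_weights(64)
small = encoder_conv_weights(32)
print(f"{default} {small} {default / small:.4f}")  # prints: 19532800 4883968 3.9994
```

The ratio falls just short of 4 only because the first layer's input width (3 RGB channels) does not scale with ngf.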

I used the High Performance Computing (HPC) cluster at NYU to train the model. You can find more instructions here: https://github.com/cvalenzuela/hpc. You can request a GPU, submit a job to the HPC, and use tunnels to transfer large files between the HPC and your computer.

Training took me 4 hours and 16 minutes. Afterwards, there should be a pikachu_train folder containing the checkpoint.

# test the model

$ python pix2pix.py --mode test --output_dir pikachu_test --input_dir pikachu/val --checkpoint pikachu_train

After testing, there should be a new folder called pikachu_test. If you open the index.html file in that folder, you should see something like this in your browser:

Read more here: https://github.com/affinelayer/pix2pix-tensorflow#getting-started

# export the model

$ python pix2pix.py --mode export --output_dir export --checkpoint pikachu_train --which_direction BtoA

This creates a new export folder.

I followed affinelayer's instructions here: https://github.com/affinelayer/pix2pix-tensorflow/tree/master/server#exporting

# port the model to tensorflow.js

From the pix2pix-tensorflow repo, run:

$ cd server

$ python tools/export-checkpoint.py --checkpoint ../export --output_file static/models/pikachu_BtoA.pict

We should be able to get a file named pikachu_BtoA.pict, which is 13.6 MB.

# create an interactive interface in the browser

Copy the model we got from step 5 into the models folder.